Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images)
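To illustrate the second point (an equal number of images per label), here is a minimal sketch in plain Python. It is not how the Image Recognition tool works internally; the function name `balance_by_label` and the `(path, label)` pair layout are assumptions made for the example. It simply downsamples every label to the size of the smallest one.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of items.

    `samples` is a list of (path, label) pairs (hypothetical layout).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # The smallest class sets the per-label quota.
    quota = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], quota):
            balanced.append((path, label))
    return balanced
```

With three "cat" images and one "dog" image, the result keeps one of each.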

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


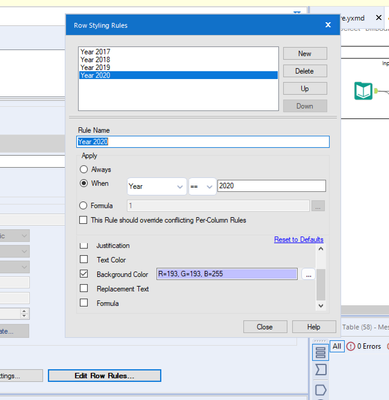

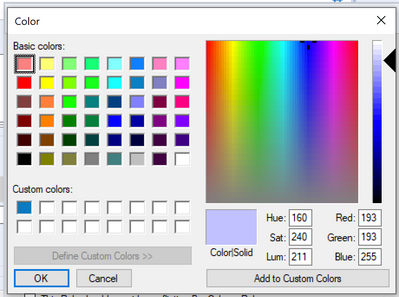

I just participated in Weekly Challenge 293, which requires outputting a table with certain conditional row colours. However, the configuration is based on RGB colour codes, whereas the desired output specifies the colours as hex codes. 95% of the development time on this challenge went into matching the colour formatting, so being able to enter hex codes would improve the experience.
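Until hex input is supported, the conversion itself is mechanical. A minimal Python sketch of both directions follows; the function names are illustrative and not part of any Alteryx API.

```python
def rgb_to_hex(r, g, b):
    """Convert 0-255 RGB components to a '#RRGGBB' hex code."""
    for component in (r, g, b):
        if not 0 <= component <= 255:
            raise ValueError("RGB components must be in the range 0-255")
    return "#{:02X}{:02X}{:02X}".format(r, g, b)


def hex_to_rgb(code):
    """Convert a '#RRGGBB' (or 'RRGGBB') hex code to an (r, g, b) tuple."""
    code = code.lstrip("#")
    if len(code) != 6:
        raise ValueError("expected a six-digit hex colour code")
    # Each pair of hex digits is one colour channel.
    return tuple(int(code[i:i + 2], 16) for i in (0, 2, 4))
```

For example, `hex_to_rgb("#FFA500")` gives the three values to type into an RGB-only configuration.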

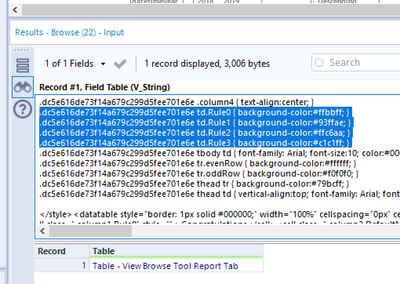

Hopefully this is the right place to post this and it hasn't been suggested already but I think it would be useful to add a numeric indicator to the formula tool to show how many formulas are being done with one tool. It would be useful when going back into or sharing workflows that a user would know more than one function is being carried out at that point. Currently I change the annotation to show how many but I think it would be useful if the icon changed dynamically. Below is a mockup of what I think it should look like.

Thanks,

Pete

@RithiS ,

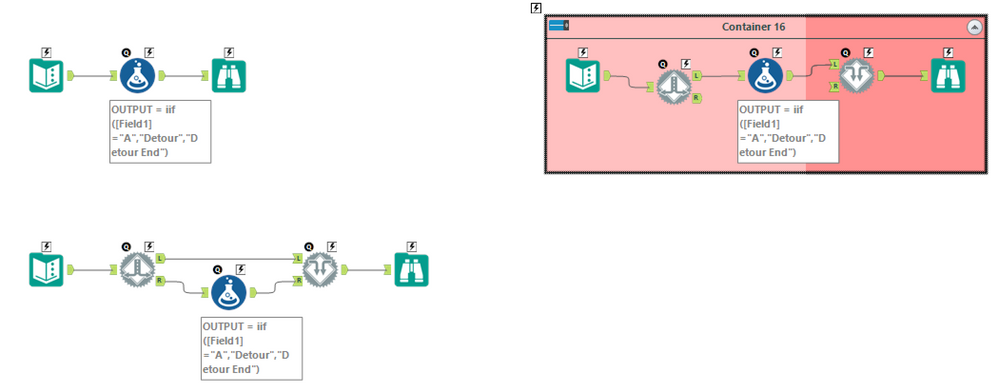

I'm a fan of using DETOUR tools in Alteryx. I often place "test" code into a standard workflow and opt to use it or not based upon a detour. The challenge is that adding a detour and detour end invariably leads to having to re-route connectors (default of adding tools is to connect to the left). Here's a picture:

What I'd like to do is SELECT the tool or tools that I want to connect around (in this case, just the formula tool), then right-click and choose DETOUR. The detour and detour end would be added, putting the selected tools in the path of the RIGHT option. This would greatly speed up the tool configuration process.

If you want to go for extra credit, you could modify the GUI to express which direction a detour is travelling in a standard workflow (e.g. make connections wireless or dashed when not selected).

Cheers,

Mark

Hi

I'm really missing a search option in the metadata pane.

If I am on the data pane:

If I'm browsing through the metadata:

Hello!

I like to annotate my workflows when finished, and it can be a bit of a pain to add more and more comment tools by searching for them, or going through the current right-click menu:

What would be nice is the option to right click anywhere on the canvas, and have the option of 'add comment', similar to how we have the option for 'add container' when selecting tools on the canvas.

Cheers!

When we industrialize our workflows, we often use a parameter file with a command like :

AlteryxEngineCmd.exe MyAnalyticApp.yxwz AppValues.xml

I would like to have the parameter file path with its extension as an engine constant, like we have the workflow name.

It can be daunting to find the tool that is currently being processed by the engine in workflows that contain hundreds of tools with many ins, outs, and branches. During runtime, I want to be shown the tool that is running on the canvas. This functionality should be in the form of a button or something to direct focus to that area. It should not be the default.

As a best practice, I'd like to automagically change any drive mapping to UNC when saving my workflows. This applies to both local and gallery saves.
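As a rough illustration of the rewrite being asked for, here is a Python sketch that swaps a mapped drive letter for its UNC share. The `drive_map` dictionary is an assumption for the example: in practice the mapping would have to come from the machine's own drive mappings, which this sketch does not look up.

```python
import re


def to_unc(path, drive_map):
    """Replace a mapped drive letter with its UNC share, if one is known.

    `drive_map` maps an upper-case drive letter to a UNC root,
    e.g. {"S": r"\\fileserver\shared"} (hypothetical values).
    """
    match = re.match(r"^([A-Za-z]):[\\/](.*)$", path)
    if not match:
        return path  # already UNC, or not a drive-letter path
    letter, rest = match.group(1).upper(), match.group(2)
    share = drive_map.get(letter)
    if share is None:
        return path  # local or unmapped drive: leave untouched
    return share.rstrip("\\") + "\\" + rest.replace("/", "\\")
```

Paths on unmapped drives (a local `C:`, for instance) pass through unchanged, so the conversion is safe to apply to every path in a workflow.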

Cheers,

Mark

Currently, if you download an Alteryx package created in a different version, it cannot be imported into a newer version.

Workflows allow this with a warning; it would be good to allow it for packages too.

In the dynamic input tool,

Where you “Read a List of Data Sources”, there should be a radio button below the “Action” field, to

“INCLUDE FIELD OF DATA SOURCES”,

Then you’d have an output field with the isolated name from which the data was sourced. You wouldn't be required to "include full file path" then parse out the sheet the data came from.
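For context, the parsing step that this idea would remove looks roughly like the Python sketch below. It assumes the full path for an Excel source has the form `file.xlsx|||` followed by the sheet name wrapped in backticks with a trailing `$`; that layout is an assumption here and should be checked against the actual field values in your workflow.

```python
def split_source(full_path):
    """Split 'file|||`Sheet$`' into (file, sheet); sheet is None if absent.

    The '|||' separator and the backtick/'$' decoration are assumed formats.
    """
    file_part, separator, sheet_part = full_path.partition("|||")
    if not separator:
        return file_part, None
    # Drop the quoting characters, then the trailing '$' of a sheet reference.
    sheet = sheet_part.strip("`'\"").rstrip("$")
    return file_part, sheet
```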

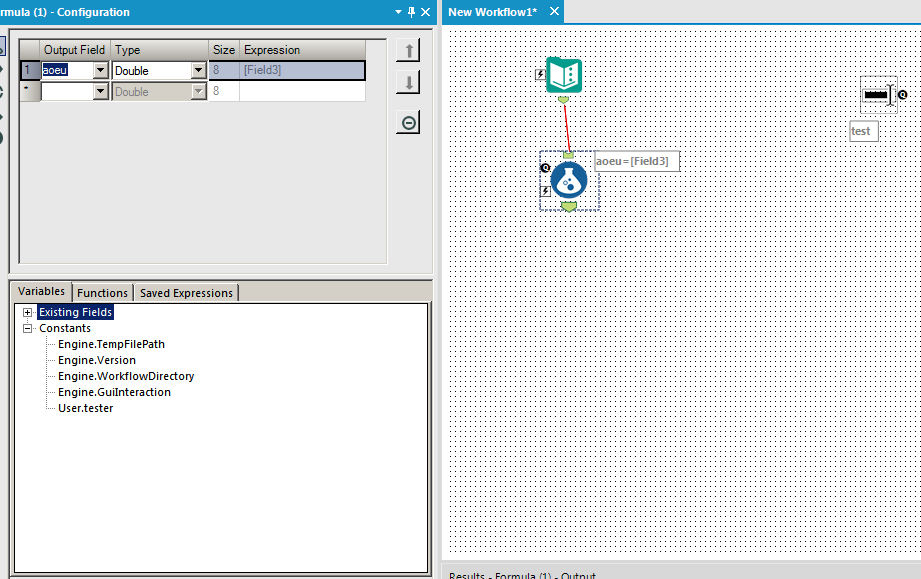

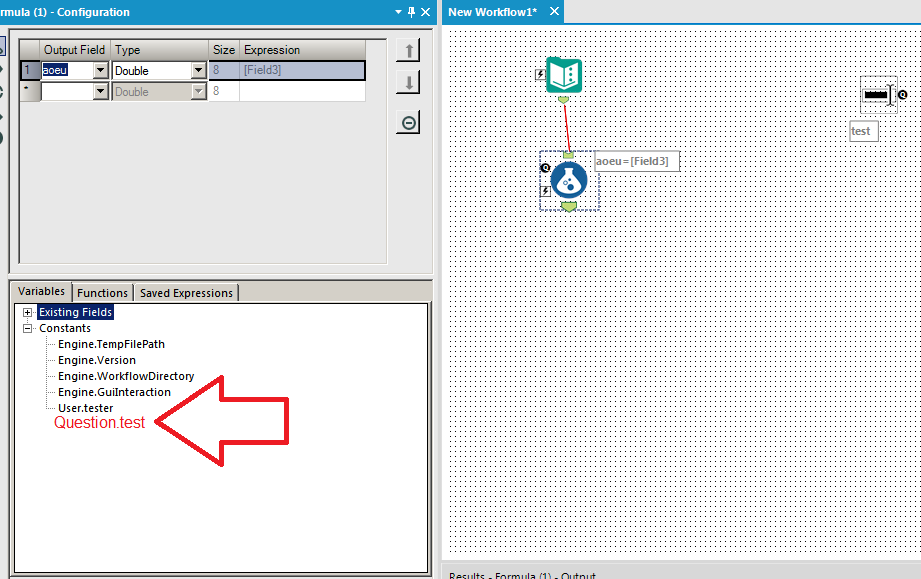

Whenever I add an interface tool, it adds a constant just like the 4 engine constants and any user constants. It would be useful if tools like the formula and filter automatically added question constants to the list for you to use. This would be identical to how user constants behave currently. Here is the before and after for visual effect:

BEFORE:

AFTER:

Create new connector to pull Salesforce Reports

We are a large company with tens of thousands employees using Salesforce on a daily basis. Over the years, we have worked with Salesforce to make many customizations and create many reports to provide data for various reporting needs. However, we have increasingly found it inefficient and prone to error to download the reports manually. We have many teams using the Salesforce reports as a base to create additional business insights.

Alteryx is a great tool to manage data ETL and workflows, but it does not support pulling data from Salesforce reports directly. Instead, it only offers connectors to pull data from base Salesforce objects. The data from Salesforce objects such as tables can be useful, but do not necessarily offer the logical view of Salesforce reports, and may require a lot of efforts to reconcile the data consistency against the reports our users are used to. Sometimes, it may be impossible to repeat producing the same data from Salesforce tables as those from Salesforce reports. That in turn would cause a lot of efforts spent by the reporting teams, their audience, and users of the Salesforce reports to match things up.

Salesforce does not have any out-of-box solution to schedule downloading the reports. At our request, their support team did some research and have not found a good 3rd-party solution in the Salesforce App Exchange ecosystem that supports this need.

I strongly believe this is a great opportunity for Alteryx. Salesforce already has an API that allows for building custom applications to pull Salesforce reports. However, most Salesforce users are business oriented and do not necessarily have the appetite to engage their IT staff or external resources to develop such apps and bear the burden of maintaining them.

I have attached the Salesforce Reports and Dashboards API Developer Guide for your reference.

Sincerely,

Vincent Wang

Please upgrade the "curl.exe" that is packaged with Designer from 7.15 to 7.55 or greater to allow for the -k flag. Also, please add the -k functionality to the Alteryx Download tool.

-k, --insecure

(TLS) By default, every SSL connection curl makes is verified to be secure. This option allows curl to proceed and operate even for server connections otherwise considered insecure.

The server connection is verified by making sure the server's certificate contains the right name and verifies successfully using the cert store.
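To make the behaviour of `-k` concrete outside of curl, here is a small Python sketch using the standard `ssl` module. It is only an analogy for what the flag does, not a description of how the Download tool is implemented; the helper name `make_context` is made up for the example.

```python
import ssl


def make_context(insecure=False):
    """Build a TLS context; insecure=True mirrors the effect of curl -k."""
    context = ssl.create_default_context()
    if insecure:
        # Hostname checking must be turned off before verification is disabled.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context
```

By default the context verifies both the certificate chain and the hostname; the insecure variant skips both, which is why it should be limited to cases such as internal servers with self-signed certificates.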

Regards,

John Colgan

I would love to see a "Product" option added to the summarize tool. I can currently count, sum, mean etc., but I can't multiply my data while grouping. There are numerous "work arounds", but a native product function built into the summarize tool would be great.
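As a sketch of the requested behaviour (group rows, then multiply the values in each group), here is the equivalent in plain Python. The field names in the usage line are illustrative only.

```python
import math
from collections import defaultdict


def product_by_group(rows, key, value):
    """Group rows by `key` and multiply the `value` field within each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {group: math.prod(values) for group, values in groups.items()}
```

For rows `[{"g": "A", "x": 2}, {"g": "A", "x": 3}, {"g": "B", "x": 5}]`, calling `product_by_group(rows, "g", "x")` multiplies 2 and 3 for group A and leaves group B at 5.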

Thanks for listening!

I'm sure there's a reason behind it, but can we please be allowed to run calculations on null values in a Formula tool? Right now, if we sum three values (1 + 3 + [null]), the result is [null]. Could the Formula tool just ignore the null values? The only way around this is to fill the [null] cells with a value, which adds a step to what should be a fairly straightforward process. That fill value would have to differ between a multiplication formula and an addition formula so as not to change the answer materially, whereas ignoring nulls is a more consistent solution.
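The difference between the two approaches can be sketched in Python, with `None` standing in for [null]. Filling nulls needs a different placeholder per operation (0 for addition, 1 for multiplication), whereas skipping them works the same way for both. The function names are illustrative.

```python
import math


def sum_ignoring_nulls(values):
    """Sum the non-null values; return None only if every value is null."""
    present = [v for v in values if v is not None]
    return sum(present) if present else None


def product_ignoring_nulls(values):
    """Multiply the non-null values; return None only if every value is null."""
    present = [v for v in values if v is not None]
    return math.prod(present) if present else None
```

Here `sum_ignoring_nulls([1, 3, None])` returns 4 instead of null, matching the example in the post.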

Hi there Alteryx team,

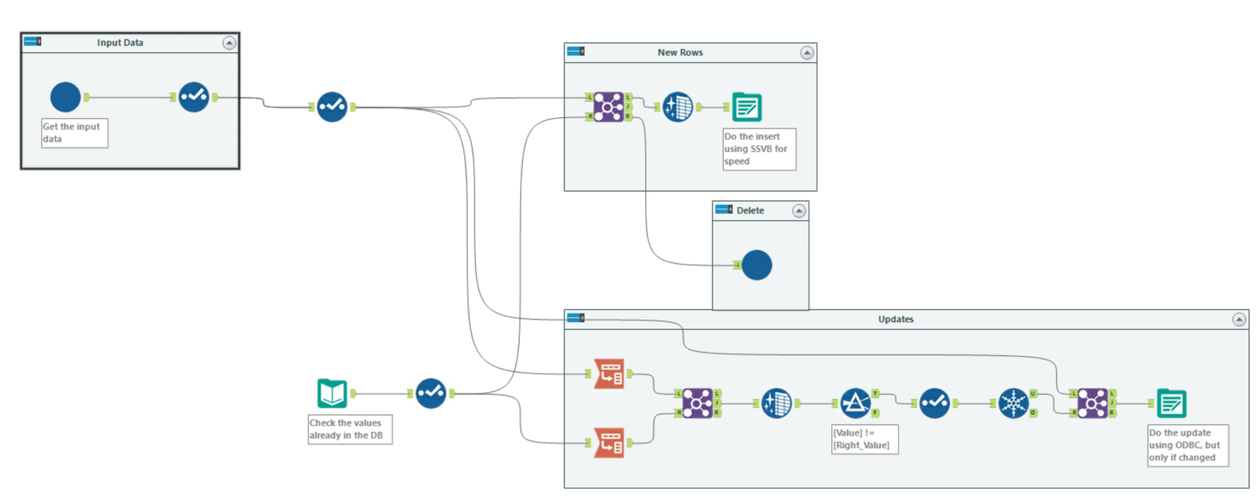

When we load data from raw files into a SQL table - we use this pattern in almost every single loader because the "Update, insert if new" functionality is so slow; it cannot take advantage of SSVB; it does not do deletes; and it doesn't check for changes in the data so your history tables get polluted with updates that are not real updates.

This pattern below addresses these concerns as follows:

- You explicitly separate out the inserts by comparing to the current table; and use SSVB on the connection - thereby maximizing the speed

- Rows that no longer exist in the source are deleted from the target, and the history table keeps the history.

- Finally - the rows that exist in both source and target are checked for data changes and only updated if one or more fields have changed.
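The three steps above can be sketched in Python as a comparison of two keyed row sets. This is a simplified model of the pattern, not the workflow from the post: it assumes each table fits in memory as a dictionary from primary key to row, and the function name is made up for the example.

```python
def plan_incremental_update(source, target):
    """Compare keyed rows and return (inserts, deletes, updates).

    `source` and `target` map a primary key to a row dictionary.
    """
    # New keys: candidates for a fast bulk insert.
    inserts = {k: row for k, row in source.items() if k not in target}
    # Keys that disappeared from the source: delete from the target.
    deletes = [k for k in target if k not in source]
    # Keys on both sides: update only when at least one field differs.
    updates = {
        k: row
        for k, row in source.items()
        if k in target and row != target[k]
    }
    return inserts, deletes, updates
```

Rows that are identical on both sides appear in none of the three results, which is what keeps spurious updates out of the history table.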

Given how commonly we have to do this (on almost EVERY data pipe from files into our database) - could we look at making an Incremental Update tool in Alteryx to make this easier? This is a common functionality in other ETL platforms, and this would be a great addition to Alteryx.

Hello all,

First of all, I really appreciate the effort made by Alteryx to provide an efficient way to try the software, especially the nonAdmin install. It helped me a lot to show Alteryx to Tableau or Qlik Users, solving in a few minutes their use cases. But there is still a little thing that can break the "whaoo effect" : for the trial, you just have an installer and this installer can be blocked by security. It happened to me today and it was SOOOOOO frustrating.

Best regards,

Simon

Many software & hardware companies take a very quantitative approach to driving their product innovation so that they can show an improvement over time on a standard baseline of how the product is used today; and then compare this to the way it can solve the problem in the new version and measure the improvement.

For example:

- Database vendors have been doing this for years using TPC benchmarks (http://www.tpc.org/), where a FIXED set of tasks is agreed as a benchmark and the vendors then iterate year over year to improve performance against it

- Graphics card companies or GPU companies have used benchmarks for years (e.g. TimeSpy; Cinebench etc).

How could this translate for Alteryx?

- Every year at Inspire - we hear the stats that say that 90-95% of the time taken is data preparation

- We also know that the reason for buying Alteryx is to reduce the time & skill level required to achieve these outcomes - again, as reinforced by the message that we're driving towards self-service analytics & Citizen-data-analytics.

The dream:

Wouldn't it be great if Alteryx could say: "In the 2019.3 release - we have taken 10% off the benchmark of common tasks as measured by time taken to complete" - and show a 25% reduction year over year in the time to complete this battery of data preparation tasks?

One proposed method:

- Take an agreed benchmark set of tasks / data / problems / outcomes, based on a standard data set - these should include all of the common data preparation problems that people face like date normalization; joining; filtering; table sync (incremental sync as well as dump-and-load); etc.

- Measure the time it takes users to complete these data-prep/ data movement/ data cleanup tasks on the benchmark data & problem set using the latest innovations and tools

- This time then becomes the measure - if it takes an average user 20 mins to complete these data prep tasks today; and in the 2019.3 release it takes 18 mins, then we've taken 10% off the cost of the largest piece of the data analytics pipeline.
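The measure described in the last step is a simple percentage reduction against the baseline time. A minimal Python sketch, with an illustrative function name:

```python
def percent_reduction(baseline_minutes, new_minutes):
    """Percentage of the baseline time saved by the new release."""
    if baseline_minutes <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (baseline_minutes - new_minutes) / baseline_minutes
```

With the figures from the post, `percent_reduction(20, 18)` is 10.0, i.e. the 10% improvement quoted for the 2019.3 example.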

What would this give Alteryx?

This could be very simple to administer; and if done well it could give Alteryx:

- A clear and unambiguous marketing message that they are super-focussed on solving for the 90-95% of your time that is NOT being spent on analytics, but rather on data prep

- It would also provide focus to drive the platform in the direction of the biggest pain points - all the teams across the platform can then rally around a really deep focus on the user and accelerating their "time from raw data to analytics".

- A competitive differentiation - invite your competitors to take part too just like TPC.org or any of the other benchmarks

What this is / is NOT:

- This is not a run-time measure - i.e. this is not measuring transactions or rows per second

- This should be focussed on "Given this problem; and raw data - what is the time it takes you, and the number of clicks and mouse moves etc - to get to the point where you can take raw data, and get it prepped and clean enough to do the analysis".

- This should NOT be a test of "Once you've got clean data - how quickly can you do machine learning; or decision trees; or predictive analytics" - as we have said above, that is not the big problem - the big problem is the 90-95% of the time which is spent on data prep / transport / and cleanup.

Loads of ways that this could be administered - starting point is to agree to drive this quantitatively on a fixed benchmark of tasks and data

@LDuane ; @SteveA ; @jpoz ; @AshleyK ; @AJacobson ; @DerekK ; @Cimmel ; @TuvyL ; @KatieH ; @TomSt ; @AdamR_AYX ; @apolly

We now have the ability to output to an ESRI File Geodatabase, which is great, but it only allows you to output it to the WGS84 coordinate system. I would like to have the same functionality to export it to other projections or coordinate systems similar to the ESRI Shapefile or ESRI Personal Geodatabase output tools (we specifically need NAD83 but I'm sure others would like other options as well).
