Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


As Tableau has continued to open more APIs with their product releases, it would be great if these could be exposed via Alteryx tools.

One specifically I think would make a great tool would be the Tableau Document API (link) which allows for things like:

- Getting connection information from data sources and workbooks (Server Name, Username, Database Name, Authentication Type, Connection Type)

- Updating connection information in workbooks and data sources (Server Name, Username, Database Name)

- Getting Field information from data sources and workbooks (Get all fields in a data source, Get all fields in use by certain sheets in a workbook)

For those of us who use Alteryx to automate much of our Tableau work, having an easy tool to read and write this information (instead of writing a Python script) would be beneficial.
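For illustration only: the Tableau Document API is a Python library, but the read/update/field-listing operations listed above can be sketched with the standard library alone, because a .twb workbook is XML. This minimal sketch assumes connections appear as `connection` elements (with `class`, `server`, `dbname`, `username` and `authentication` attributes) inside top-level `datasources/datasource` elements, and fields as `column` elements. The function names are hypothetical, and packaged .twbx files (zip archives) are not handled.

```python
import xml.etree.ElementTree as ET

# Assumed attribute names on <connection> elements in a .twb file.
CONN_ATTRS = ("class", "server", "dbname", "username", "authentication")


def _datasources(root):
    # Only the workbook-level data sources; worksheets also contain <datasource>
    # references with the same names, which must not be counted twice.
    return root.findall("./datasources/datasource")


def read_connections(twb_xml: str) -> list[dict]:
    """List the connection attributes of every data source in a .twb document."""
    root = ET.fromstring(twb_xml)
    result = []
    for ds in _datasources(root):
        for conn in ds.iter("connection"):
            info = {key: conn.get(key) for key in CONN_ATTRS}
            info["datasource"] = ds.get("name")
            result.append(info)
    return result


def update_server(twb_xml: str, old: str, new: str) -> str:
    """Point every connection whose server is `old` at `new` and return the new XML."""
    root = ET.fromstring(twb_xml)
    for ds in _datasources(root):
        for conn in ds.iter("connection"):
            if conn.get("server") == old:
                conn.set("server", new)
    return ET.tostring(root, encoding="unicode")


def read_fields(twb_xml: str) -> dict[str, list[str]]:
    """Map each data source name to the names of the columns it declares."""
    root = ET.fromstring(twb_xml)
    return {ds.get("name"): [c.get("name") for c in ds.findall("column")]
            for ds in _datasources(root)}
```

A dedicated Alteryx tool would presumably wrap the same three operations (read connections, rewrite connections, list fields) behind a configuration panel instead of a script.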

It would be useful if the SharePoint Input tool could be enhanced to support SSO. In my organisation we host a lot of collaborative work on SharePoint sites protected by ADFS authentication, and pulling data directly from them is not supported by the SharePoint Input tool: the request is blocked. Adding the ability to recognise existing logins would be very useful.

A problem I'm currently trying to solve, and I feel like I'm spending way too much time on it.

I have a data set that contains data in multiple languages, and I only want English values. I was able to get rid of the words with non-English letters with a little regular expression and filtering. However, there are some words that contain only English letters but aren't English. What I'm trying to do is bring in an English dictionary to compare words and see which rows have non-English words according to the dictionary. This is proving to be a bit harder than I thought. I think I can do it, but it feels like this should be much simpler than it is.

It would be great to have a tool that runs a "spell check" on fields (dictionaries for almost all languages are available free online). This could also be useful just for cleaning up open-text data, where people type things in quickly and don't re-read them! 🙂
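A minimal Python sketch of the two-step check described above: first the regular-expression filter for non-English letters, then a lookup of every word in a word list. It assumes the dictionary has already been loaded as a set of lowercase words (for example, one word per line from a free word list). The function names are hypothetical, and digits and most punctuation are treated as non-English for simplicity.

```python
import re

# Letters A-Z plus apostrophes, spaces and hyphens; anything else (é, ß, digits) fails.
ENGLISH_CHARS = re.compile(r"[A-Za-z' \-]+")
WORD = re.compile(r"[A-Za-z']+")


def unknown_words(text: str, dictionary: set[str]) -> list[str]:
    """Return the words in `text` that are missing from `dictionary` (case-insensitive)."""
    return [w for w in WORD.findall(text) if w.lower() not in dictionary]


def looks_english(text: str, dictionary: set[str]) -> bool:
    # Step 1: the character filter (rejects letters outside A-Z).
    if not ENGLISH_CHARS.fullmatch(text):
        return False
    # Step 2: every word must appear in the word list.
    return not unknown_words(text, dictionary)


if __name__ == "__main__":
    words = {"the", "cat", "sat"}
    for row in ["The cat sat", "Le chat", "café"]:
        print(row, looks_english(row, words))
```

`unknown_words` doubles as a crude spell check: it returns the offending words rather than just a yes/no flag.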

Currently, the OLEDB/ODBC connection string for a server that requires a password can be injected into by a password that contains a ; or a |. Other characters may cause this as well; these are the ones our company has found so far.

This lowers the security of passwords for our other systems by limiting which characters we can use.
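One conventional way to keep ; and similar characters from breaking a connection string is to quote the value rather than restrict the password. ODBC connection strings allow an attribute value to be enclosed in braces, and Microsoft's SQL Server ODBC drivers treat a doubled } inside braces as a literal }. The sketch below applies that convention; whether a particular driver honours it should be checked, and the function names are hypothetical. The | problem appears to come from how Alteryx itself parses its connection strings, so it would presumably need a fix on the Alteryx side; this sketch does not address that.

```python
# Characters that could be misread as connection-string syntax.
SPECIAL = set(";{}=|")


def quote_odbc_value(value: str) -> str:
    """Brace-quote a value if it needs it, doubling any '}' so it stays literal."""
    if value and value == value.strip() and not SPECIAL & set(value):
        return value
    return "{" + value.replace("}", "}}") + "}"


def build_connection_string(attrs: dict[str, str]) -> str:
    """Join KEY=value pairs with ';', quoting values where necessary."""
    return "".join(f"{key}={quote_odbc_value(val)};" for key, val in attrs.items())


if __name__ == "__main__":
    print(build_connection_string({"DSN": "prod", "UID": "svc", "PWD": "a;b|c}d"}))
    # DSN=prod;UID=svc;PWD={a;b|c}}d};
```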

// This is my new formula

MAX([Price] * [Quantity],0)

// This was my old formula

// [Price] * [Quantity]

Imagine being able to select a block of text (which could be many lines) and right-click to see an option to Comment or Uncomment those statements. I thought you'd like it too.
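The Comment/Uncomment option could behave the way it does in many code editors: if every selected non-blank line already starts with //, strip it; otherwise prefix each line. A minimal Python sketch of that toggle, using the // comment style from the formula example; the function name is hypothetical, and this is not how the Designer expression editor is implemented.

```python
def toggle_comment(lines: list[str], prefix: str = "//") -> list[str]:
    """Comment out the selected lines, or uncomment them if all are already commented."""
    non_blank = [ln for ln in lines if ln.strip()]
    all_commented = bool(non_blank) and all(ln.lstrip().startswith(prefix) for ln in non_blank)
    result = []
    for ln in lines:
        if not ln.strip():
            result.append(ln)  # blank lines are left alone
        elif all_commented:
            indent = ln[: len(ln) - len(ln.lstrip())]
            body = ln.lstrip()[len(prefix):]
            # Remove the single space that commenting added, if present.
            result.append(indent + (body[1:] if body.startswith(" ") else body))
        else:
            result.append(f"{prefix} {ln}")
    return result


if __name__ == "__main__":
    selection = ["MAX([Price] * [Quantity],0)", "[Price] * [Quantity]"]
    commented = toggle_comment(selection)
    print(commented)                  # both lines now start with "// "
    print(toggle_comment(commented))  # back to the original selection
```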

Cheers,

Mark

90% of the time, when I drag in an Input tool, I also need to drag in a Select tool to pick only the fields I want. Best practice suggests this should be 100% of the time, for efficiency. Embedding this functionality within the Input tool itself would save a step.
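In code terms, the request is to choose fields at read time instead of in a separate step. A small Python sketch of that idea for a CSV source, using the standard csv module; the function name is hypothetical, and it illustrates the concept only, not how the Input tool reads files.

```python
import csv
import io


def read_selected(source, fields: list[str]) -> list[dict]:
    """Read CSV rows from a file-like `source`, keeping only `fields`, in that order."""
    reader = csv.DictReader(source)
    available = reader.fieldnames or []
    missing = [f for f in fields if f not in available]
    if missing:
        raise KeyError(f"fields not found in input: {missing}")
    return [{f: row[f] for f in fields} for row in reader]


if __name__ == "__main__":
    data = io.StringIO("id,name,price\n1,Pen,2.5\n2,Ink,4\n")
    print(read_selected(data, ["name", "price"]))
```

Failing loudly on a missing field mirrors what a Select tool would otherwise surface downstream as a missing-field warning.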

I'd like to be able to disable a tool container but not minimize it so I can still see what's in there. Maybe disabled containers could be grayed out the way the output tools are when you disable them. We would still need to retain current features in case people like it that way, but it would be nice to choose.

To keep from being too specific, the "Idea" is that Alteryx Designer should do better at recognizing and handling Date/Times on input. Thoughts include:

1) Offer more choices in the Parse: DateTime tool, including am/pm.

2) Allow users to add new formats to the Parse: DateTime lists.

3) Include user-added formats in the Preparation: Auto Field tool's library.

4) Don't require zero-padding of days and hours in the DateTimeParse() function. (1/1/2014 1:23:45 AM looks enough like a date that DateTimeParse() should be able to figure it out, but it stumbles on day and hour.)

My particular difficulty is that I have incoming date/times with AM/PM components. I've created a macro to handle that for now, but it certainly seems like that sort of thing should be handled automatically.
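As a point of comparison outside Alteryx, Python's `datetime.strptime` already accepts non-zero-padded months, days and 12-hour values, and a user-extensible list of formats (points 2 and 3 above) can be as simple as trying each format in turn. A minimal sketch; the format list and function name are hypothetical, it assumes US month/day order, and `%p` matches the locale's AM/PM strings (AM/PM in an English or C locale).

```python
from datetime import datetime

# Formats tried in order; users could append their own (hypothetical list).
DATETIME_FORMATS = [
    "%Y-%m-%d %H:%M:%S",
    "%m/%d/%Y %I:%M:%S %p",  # e.g. 1/1/2014 1:23:45 AM (no zero-padding needed)
    "%m/%d/%Y %H:%M:%S",
    "%m/%d/%Y",
]


def parse_datetime(text: str, formats: list[str] = DATETIME_FORMATS) -> datetime:
    """Return the first successful parse of `text`, trying each format in turn."""
    for fmt in formats:
        try:
            return datetime.strptime(text.strip(), fmt)
        except ValueError:
            continue
    raise ValueError(f"no known format matches {text!r}")


if __name__ == "__main__":
    DATETIME_FORMATS.append("%d.%m.%Y %H:%M")     # a user-added format
    print(parse_datetime("1/1/2014 1:23:45 AM"))  # 2014-01-01 01:23:45
    print(parse_datetime("13.02.2014 16:05"))
```

Order matters: ambiguous strings such as 02/03/2014 are resolved by whichever matching format comes first.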

Thanks!

A recent post solution (https://community.alteryx.com/t5/Data-Preparation-Blending/Can-somebody-tell-me-where-is-quot-Choose...) by @patrick_digan alerted me to a loss of functionality of the Input Tool. In order to define a range of data via SQL to Excel (e.g. Sheet3$A1:C10) you need to know a work-around instead of just modifying the SQL. The work-around is to modify the XML. I would like to see that functionality returned to the Input tool.

Cheers,

Mark

Allow the user to set stream priorities within a tool and to release streams in a specified order.

Hello all,

When using In-Database tools, all you have in Select or Formula are the Alteryx field types (V_String, etc.).

However, since you are ultimately writing to a database, Alteryx field types must eventually be converted to real SQL field types (like varchar). But how is this done? As of today, it's a total black box. Some documentation would be appreciated.

Best regards,

Simon

Hello all,

So, right now, we have two completely separate products: Alteryx Designer and Alteryx Designer Cloud. But what if you want to move from Alteryx Designer on your desktop to the cloud?

Well, you will have to rewrite every single workflow, because you can't publish or import your current workflows into Alteryx Designer Cloud. You cannot export Designer Cloud workflows to Alteryx Designer on Desktop either.

This is a huge limitation for cloud implementation and sales, and it's the ONLY product I know of that isn't compatible between on-premise and cloud.

Please, Alteryx, this is a no-brainer if you want to convince your customers!

Best regards,

Simon

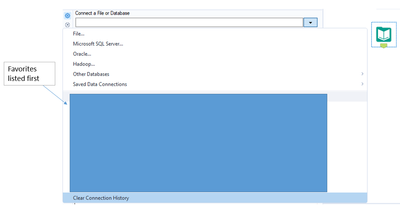

In the Input tool, I rely heavily on the recent connection history list. As soon as a file falls off this list, it takes me a while to recall where it's saved and navigate to the file I want to use. It would be great to have a feature that lets users set favorite connections/files so that they remain at the top of the connection history list for easy access.
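The favorites idea amounts to a most-recently-used list with a pinned section that never expires. A minimal Python sketch of that data structure; the class and method names are hypothetical, and the default limit of 10 is arbitrary, not the Input tool's actual history length.

```python
class RecentConnections:
    """Most-recently-used history in which pinned favorites always stay on top."""

    def __init__(self, max_recent: int = 10) -> None:
        self.max_recent = max_recent
        self.pinned: list[str] = []
        self.recent: list[str] = []

    def use(self, path: str) -> None:
        """Record that `path` was just opened, moving it to the front of the history."""
        if path in self.recent:
            self.recent.remove(path)
        self.recent.insert(0, path)
        del self.recent[self.max_recent:]  # oldest entries fall off the history

    def pin(self, path: str) -> None:
        if path not in self.pinned:
            self.pinned.append(path)

    def unpin(self, path: str) -> None:
        if path in self.pinned:
            self.pinned.remove(path)

    def listing(self) -> list[str]:
        """Pinned entries first, then recent entries that are not pinned."""
        return self.pinned + [p for p in self.recent if p not in self.pinned]
```

Keeping pinned entries in their own list means they survive even after they have fallen out of the recent history.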

Currently: the "Label" element in the Interface Designer Layout View is a single-line text input.

Why it could be improved: the "Label" element is often used to add a block of text to an analytical application interface, and entering a block of text in a single-line text input is, **to put it politely**, quite the struggle.

Solution: make this single-line text input a multi-line text box, just like the formula editor.

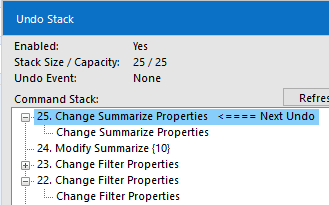

The Edit menu lets you see what your next undo/redo actions are. This is super helpful; however, sometimes I decide to scrap an idea I was starting on and need to perform multiple undos in a row. It would be great if we could see a list of actions, like in the debug undo/redo stack menu, and then select how many steps we'd like to undo/redo.

For example, using the actions below, if I want to undo both Change Summarize Properties and Modify Summarize, I currently have to do that in two steps. I'd like to be able to click Modify Summarize and have the workflow undo all commands up to and including that one.
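Undoing "up to and including" a chosen entry amounts to popping the undo stack that many times and pushing each action onto the redo stack. A minimal Python sketch using the action names from the example (the earlier "Add Summarize" step is hypothetical); the class is illustrative, not Designer's actual undo implementation.

```python
class UndoHistory:
    """Undo/redo stacks where any listed entry can be picked as the target."""

    def __init__(self) -> None:
        self.done: list[str] = []    # oldest first; the last item is undone next
        self.undone: list[str] = []  # the last item is redone next

    def do(self, action: str) -> None:
        self.done.append(action)
        self.undone.clear()  # a new action invalidates the redo history

    def undo_list(self) -> list[str]:
        """Most recent first, as an Edit menu would show it."""
        return self.done[::-1]

    def redo_list(self) -> list[str]:
        return self.undone[::-1]

    def undo_through(self, index: int) -> list[str]:
        """Undo entries 0..index of undo_list() in a single step."""
        steps = min(index + 1, len(self.done))
        moved = [self.done.pop() for _ in range(steps)]
        self.undone.extend(moved)
        return moved

    def redo_through(self, index: int) -> list[str]:
        """Redo entries 0..index of redo_list() in a single step."""
        steps = min(index + 1, len(self.undone))
        moved = [self.undone.pop() for _ in range(steps)]
        self.done.extend(moved)
        return moved
```

Clicking Modify Summarize in the list would correspond to `undo_through(1)`, which undoes Change Summarize Properties and Modify Summarize together.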

Please add XBRL - eXtensible Business Reporting Language (https://www.xbrl.org/ , Wikipedia , http://www.xbrleurope.org/ ) as an output file format.

XBRL is based on XML and is used in the financial world; for example, all public companies in the USA send their financial reports to the Securities and Exchange Commission in XBRL format. (http://xbrl.sec.gov/)

In Japan, the central bank and the Financial Services Agency (FSA) collect financial data from banks and financial companies in XBRL format.
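To give a sense of what such an output would have to produce: an XBRL 2.1 instance document is XML containing a schema reference, contexts (who and when), units, and facts tagged with taxonomy concepts. The minimal sketch below builds one with ElementTree. The taxonomy namespace, concept name, schema file and entity identifier are hypothetical placeholders; real filings (for example to the SEC) must use the regulator's taxonomy and pass validation, which this sketch does not attempt.

```python
import xml.etree.ElementTree as ET

XBRLI = "http://www.xbrl.org/2003/instance"
LINK = "http://www.xbrl.org/2003/linkbase"
XLINK = "http://www.w3.org/1999/xlink"
ISO4217 = "http://www.xbrl.org/2003/iso4217"
EX = "http://example.com/taxonomy"  # hypothetical taxonomy namespace

for prefix, uri in [("xbrli", XBRLI), ("link", LINK), ("xlink", XLINK), ("ex", EX)]:
    ET.register_namespace(prefix, uri)


def q(ns: str, name: str) -> str:
    """Clark notation {namespace}name, as ElementTree expects."""
    return f"{{{ns}}}{name}"


def xbrl_instance(entity_id, scheme, instant, currency, facts, schema_href):
    """Build a minimal XBRL instance: one context, one monetary unit, one fact per item."""
    root = ET.Element(q(XBRLI, "xbrl"))
    # The unit measure is a QName using the iso4217 prefix, so declare it explicitly
    # (ElementTree only emits declarations for namespaces used in tag/attribute names).
    root.set("xmlns:iso4217", ISO4217)
    ET.SubElement(root, q(LINK, "schemaRef"),
                  {q(XLINK, "type"): "simple", q(XLINK, "href"): schema_href})

    context = ET.SubElement(root, q(XBRLI, "context"), id="c1")
    entity = ET.SubElement(context, q(XBRLI, "entity"))
    ET.SubElement(entity, q(XBRLI, "identifier"), scheme=scheme).text = entity_id
    period = ET.SubElement(context, q(XBRLI, "period"))
    ET.SubElement(period, q(XBRLI, "instant")).text = instant

    unit = ET.SubElement(root, q(XBRLI, "unit"), id="u1")
    ET.SubElement(unit, q(XBRLI, "measure")).text = f"iso4217:{currency}"

    for concept, value in facts.items():
        fact = ET.SubElement(root, q(EX, concept), contextRef="c1", unitRef="u1", decimals="0")
        fact.text = str(value)
    return ET.tostring(root, encoding="unicode")
```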

Thank you.

Regards,

Cristian

When using the Alteryx Email tool to send a text to a mobile device via a carrier address (e.g. xxxxxxxxx@vtxt.com), bad characters appear. This is because the Alteryx Email tool sends in XML format, and the carriers only accept HTML.

This was determined after working with the Alteryx support team to find the root cause of the bad characters appearing on the mobile phone.

We are using an Alteryx workflow as an early warning system for technical issues to upper management. These advisories or alerts are being sent to mobile devices via email tool.
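Until the Email tool changes, one possible workaround for SMS gateways is to send the shortest, plainest message possible. A minimal Python sketch with the standard email package that builds a text/plain message, folds accented letters to ASCII and drops other non-ASCII characters, and trims to 160 characters (the length of a single standard SMS). The gateway address and function names are hypothetical, and whether a given carrier renders this cleanly is an assumption to test.

```python
import unicodedata
from email.message import EmailMessage


def sms_safe(text: str, limit: int = 160) -> str:
    """Fold accented letters to ASCII, drop anything else non-ASCII, and trim."""
    folded = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return folded[:limit]


def build_sms_alert(sender: str, gateway_address: str, body: str) -> EmailMessage:
    """Compose (but do not send) a single-part text/plain alert."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = gateway_address
    msg.set_content(sms_safe(body))  # a single text/plain part, no markup
    return msg
```

Sending would then go through `smtplib.SMTP(...).send_message(msg)` against the organisation's own mail server.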

Regards,

Andrew Hooper

This idea arose recently when working specifically with the Association Analysis tool, but I have a feeling that other predictive tools could benefit as well. I was trying to run an association analysis for a large number of variables, but when I was investigating the output using the new interactive tools, I was presented with something similar to this:

While the correlation plot draws your eye to the strongest associations, the user is unable to read the field names, and the tooltip only provides the correlation value rather than the fields that value belongs to. As such, I shifted my attention to the report output, which looked like this:

While I could now read everything, it made pulling out the insights much more difficult. Wanting the best of both worlds, I decided to extract the correlation table from the R output and drop it into Tableau for a filterable, interactive version of the correlation matrix. This turned out to be much easier said than done. Because the R output comes in report form, I tried to use the report extract macros mentioned in this thread to pull out the actual values. This was an issue due to the report formatting, so instead I cracked open the macro to extract the data directly from the R output. To make a long story shorter, this ended up being problematic due to report formats, batch macro pathing, and an unidentifiable bug.

In the end, it would be great if there were a "Data" output for reports from certain predictive tools that would benefit from further analysis. While the reports and interactive outputs are great for ingesting small model outputs, at times there is a need to extract the data itself for further analysis/visualization. This is one example, as are the model coefficients from regression analyses that I have used in the past. I know Dr. Dan created a model coefficients macro for the case of regression, but I have to imagine that there are other cases where the data is desired along with the report/interactive output.
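A "Data" output for the correlation case could be as simple as the matrix in long format (one row per pair of fields), which is the shape Tableau filters most easily. A minimal Python sketch, assuming the field names and the numeric matrix are already available, which is exactly what the report output does not currently provide; the function names and column headers are hypothetical.

```python
import csv
import io


def matrix_to_long(fields: list[str], matrix: list[list[float]]) -> list[tuple[str, str, float]]:
    """Flatten a symmetric correlation matrix into (field_a, field_b, value) rows.

    Only the upper triangle is kept, so each pair appears once and the diagonal
    (always 1.0) is skipped.
    """
    rows = []
    for i, field_a in enumerate(fields):
        for j in range(i + 1, len(fields)):
            rows.append((field_a, fields[j], matrix[i][j]))
    return rows


def to_csv(rows: list[tuple[str, str, float]]) -> str:
    """Render the long-format rows as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["field_a", "field_b", "correlation"])
    writer.writerows(rows)
    return buffer.getvalue()
```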

So while working through a workflow that takes up a bunch of canvas space, I find myself jumping between two points, one at the beginning and one at the end. Every time I need to jump to the other point, I have to zoom out and scroll over and down and then zoom back in.

What if there were a tool you could drop on the canvas as a "point of interest" that, when selected (perhaps from some other part of the interface), takes you right to that spot in the workflow? I know you can currently look up tools and be taken to their location, but when you are jumping between 4 or 5 different spots, it can be difficult to remember which tool number is which.
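A "point of interest" is essentially a named, saved viewport. A minimal Python sketch of that bookmark structure; the class names are hypothetical, and actually panning the canvas is left out since it depends on Designer's UI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Viewport:
    x: float     # canvas coordinates of the view center
    y: float
    zoom: float  # 1.0 = 100%


class CanvasBookmarks:
    """Named points of interest that remember where, and how far zoomed in, the view was."""

    def __init__(self) -> None:
        self._marks: dict[str, Viewport] = {}

    def add(self, name: str, view: Viewport) -> None:
        self._marks[name] = view  # re-adding a name moves the bookmark

    def jump(self, name: str) -> Viewport:
        # A real canvas would pan and zoom to this viewport; here we just return it.
        return self._marks[name]

    def names(self) -> list[str]:
        return sorted(self._marks)
```

Storing the zoom level alongside the position is what removes the zoom-out, scroll, zoom-in cycle described above.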

I would use this all the time!

Just a thought

In order to make the connections between Alteryx and Snowflake even more secure, we would like to be able to connect to Snowflake with OAuth more easily.

Connections to Snowflake via OAuth are very similar to the connections Alteryx already makes with O365 applications. They require:

- Tenant URL

- Client ID

- Client Secret

- Get the Authorization Code provided by the Snowflake authorization endpoint.

- Give access consent (a browser popup will appear)

- With the Authorization Code, the client ID and the Client Secret make a call to retrieve the Refresh Token and TTL information for the tokens

- Get the Access Token every time it expires

With this, an automated workflow using OAuth between Alteryx and Snowflake would be possible.
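The flow listed above is the standard OAuth 2.0 authorization-code grant with refresh tokens. A minimal Python sketch of the pieces Alteryx would need to manage, without making any network calls: the consent URL, the two token-request bodies, and an expiry check. The endpoint paths /oauth/authorize and /oauth/token-request are assumed to be Snowflake's endpoints for custom OAuth clients (with the client ID and secret sent as HTTP Basic credentials on the token request) and should be checked against Snowflake's documentation; the account URL, function names and 60-second refresh margin are assumptions.

```python
import time
from urllib.parse import urlencode

# Assumed Snowflake OAuth endpoint paths (relative to the account URL).
AUTHORIZE_PATH = "/oauth/authorize"
TOKEN_PATH = "/oauth/token-request"


def consent_url(account_url: str, client_id: str, redirect_uri: str) -> str:
    """URL to open in the browser so the user can grant access."""
    query = urlencode({"response_type": "code", "client_id": client_id,
                       "redirect_uri": redirect_uri})
    return f"{account_url}{AUTHORIZE_PATH}?{query}"


def token_url(account_url: str) -> str:
    return f"{account_url}{TOKEN_PATH}"


def code_exchange_body(code: str, redirect_uri: str) -> dict[str, str]:
    """Form body that trades the authorization code for access and refresh tokens."""
    return {"grant_type": "authorization_code", "code": code, "redirect_uri": redirect_uri}


def refresh_body(refresh_token: str) -> dict[str, str]:
    """Form body that obtains a new access token from the refresh token."""
    return {"grant_type": "refresh_token", "refresh_token": refresh_token}


class AccessToken:
    """Tracks when the current access token expires (expires_in is the TTL in seconds)."""

    def __init__(self, value: str, expires_in: float, clock=time.time):
        self.value = value
        self._clock = clock
        self.expires_at = clock() + expires_in

    def needs_refresh(self, margin: float = 60) -> bool:
        return self._clock() >= self.expires_at - margin
```

Only the browser consent step needs a user; once a refresh token is stored, a scheduled workflow can keep renewing the access token unattended.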

You can find a more detailed explanation in the attached document.
