Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi,

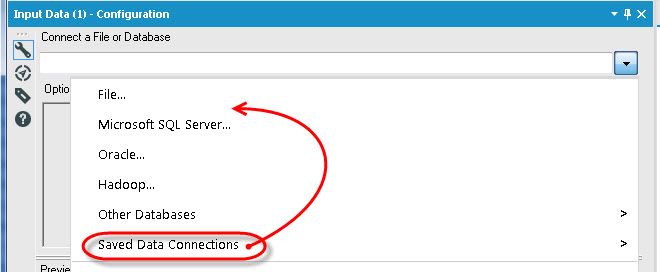

In the Input tool, it would be useful to have the Saved Database Connections option higher in the menu, not last. Most users I know use this drop-down frequently, and I find myself always grabbing the Other Databases option instead, because it expands before my mouse gets down to the next one. I would vote to have it directly after File..., so that the top two options cover either desktop data or "your" server data. To me, all the other options are one-offs on a case-by-case basis and don't need to be above things that are used much more frequently. Just two cents from a long-time user...love the product either way!

Thanks!!

Eli Brooks

Recently, I posted a problem about merging cells in a column that share the same value using the Table tool. I had a hard time finding a solution until @HenrietteH showed me how to do it. What she showed helped me a lot to do what I want in my module; however, it would be easier if this feature were added to the Table tool itself.

Thank you

A user may need to perform regression tests on their workflows when there is a version upgrade of Alteryx. To save users time and effort, users can be encouraged to submit a few workflows to a secure area of the Alteryx Gallery. Prior to a version release, the Alteryx product testing team can run an automated regression test of these workflows. Thus, when users receive an upgraded version of Alteryx, it is more robust and comes with the added assurance that the workflows they submitted will continue to work without errors.

Lately, Alteryx cannot read many TAB files (I am finding this when they are created in MapInfo 16). I have posted about this in other forums but wanted to bring it up in the product ideas section as well.

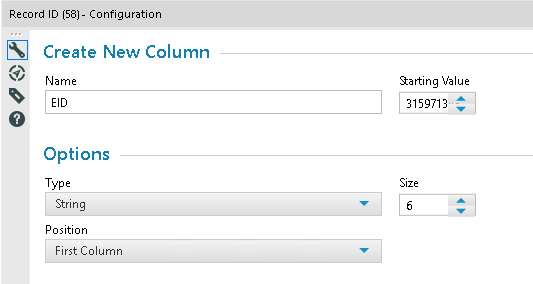

The user is not able to see the complete value; only 7 digits are showing up.

When using an SQLite database file as an input, please provide the option to Cache Data, as is available when opening an ODBC database. Currently, every time the Input tool is selected, it takes an inordinate amount of time for Alteryx to become responsive, especially if the query is complex. SQLite is not a multi-user database and is locked when accessed, so there is no need to continuously poll it for up-to-date values. Pulling data when the workflow is run or when F5/Refresh is pressed would be sufficient.

The issue may be more primitive than what is solved by having a Cache Data option. I am more concerned that the embedded query seems to be "checked" or "run" every time the tool is selected. I think this issue was resolved for other file formats but SQLite was overlooked.
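To illustrate the "only re-query when something changed" behaviour requested above, here is a minimal Python sketch. It is not how Alteryx works internally; `cached_query` and the module-level `_cache` dictionary are hypothetical names, and the sketch assumes that the file's modification time is a good enough signal that the data changed.

```python
import os
import sqlite3

# Hypothetical in-memory cache: (path, sql) -> (file mtime, rows)
_cache = {}

def cached_query(db_path, sql):
    """Return (rows, served_from_cache) for sql against the SQLite file.

    The query is re-run only when the file's modification time differs
    from the one recorded at the previous read (an assumption of this sketch).
    """
    mtime = os.path.getmtime(db_path)
    key = (db_path, sql)
    hit = _cache.get(key)
    if hit is not None and hit[0] == mtime:
        return hit[1], True  # unchanged file: reuse the stored rows
    conn = sqlite3.connect(db_path)
    try:
        rows = conn.execute(sql).fetchall()
    finally:
        conn.close()
    _cache[key] = (mtime, rows)
    return rows, False
```

Selecting the tool repeatedly would then correspond to repeated `cached_query` calls that return immediately, while an explicit refresh could simply drop the cache entry first.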

Hi,

Would it be possible to simplify some of the workarounds that are needed for generating Chart Titles when using grouping? Allowing the grouping variable to be used in the Title string would let accurate descriptions be generated. At the moment this requires a Report Text tool, which is not as neat given the output that grouping necessarily generates.

Only a thought,

Peter

Hello,

When I build my workflows, I often have to save, close, and reopen them just to see data flow through the tools and check for errors.

It would be awesome if there was a validate button next to the run button so we don't have to go through all of this just to check for errors...

It would also be nice if we didn't need a button at all, and the data automatically flowed through the tools to check compatibility.

Nick

I would like a component that analyses an incoming dataset and suggests a key for the data, i.e. detects which field or combination of fields would uniquely identify a record. The key could then be picked up by the Output Data component, which would add primary keys to tables when they are created. This would be great when using the drop and recreate option, i.e. it would retain an index on the key.
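As a rough illustration of what such a key detector might do, the sketch below tries field combinations of increasing size and returns the first one whose values are unique across all records. The function name `suggest_key` and the `max_fields` limit are hypothetical, field values are assumed to be hashable, and uniqueness in one sample of data does not prove that the fields are a true key.

```python
from itertools import combinations

def suggest_key(records, max_fields=3):
    """Return the smallest tuple of field names that is unique per record, or None."""
    if not records:
        return None
    fields = list(records[0].keys())
    for size in range(1, min(max_fields, len(fields)) + 1):
        for combo in combinations(fields, size):
            seen = set()
            unique = True
            for rec in records:
                value = tuple(rec[f] for f in combo)
                if value in seen:
                    unique = False  # duplicate found, this combo is not a key
                    break
                seen.add(value)
            if unique:
                return combo
    return None

rows = [
    {"region": "EU", "store": 1, "sales": 100},
    {"region": "EU", "store": 2, "sales": 100},
    {"region": "US", "store": 1, "sales": 250},
]
print(suggest_key(rows))  # ('region', 'store')
```

The brute-force search grows quickly with the number of fields, which is why the sketch caps the combination size.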

These windows when pulled out as stand-alone windows are free floating, able to be placed anywhere on any screen you have connected.

If one of these screens gets disconnected those windows don't change position. So if your monitors die or go away for some reason there is no way to get them back unless you get a second monitor.

I propose changing the code so that when a window is re-enabled, it always shows up at position [0,0] on monitor 1.

Very frustrating.
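A slightly gentler variant of this proposal would be to reset a window only when its saved position is no longer on any connected monitor. The sketch below shows that check in plain Python; `restore_position` is a hypothetical helper, monitors are assumed to be given as `(left, top, width, height)` rectangles, and nothing here reflects how Designer actually stores window positions.

```python
def restore_position(window_xy, monitors):
    """Keep the saved position if it lies on a connected monitor, else use (0, 0)."""
    x, y = window_xy
    for left, top, width, height in monitors:
        if left <= x < left + width and top <= y < top + height:
            return (x, y)  # still visible, leave it where the user put it
    return (0, 0)  # saved monitor is gone, fall back to the primary origin
```

With a single 1920x1080 monitor at the origin, a window saved at `(2500, 300)` on a now-missing second screen would come back at `(0, 0)`.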

In the Explorer Box configuration section, it would be great to add a navigation button to pick (pinpoint) the URL address of a directory/folder, so that users don't have to learn by heart (or copy and paste) the exact URL address to make use of the tool.

I have three groups: a control group, a group that got product A, and a group that got product B. There is a way to test the differences across all groups at once rather than running separate t-tests (each of which adds another chance of a type I error). If my outcome is the percent of people who were contacted, I want to see if the percent is different across groups.

Control Group % who were contacted: 10%

Product A group % who were contacted: 25%

Product B group % who were contacted: 33%

I shouldn't have to run a t-test comparing control to A, then another comparing control to B, and then a third comparing A to B. I know the method is pairwise comparisons, but I can't find how to do this in Alteryx. I've looked on the Community and, surprisingly, the answer seems to be "you can't", but this is not a rare statistical test!

A product analyst at Alteryx helped build a macro in R to run the tests, but the variables need to be categorical rather than continuous. The ideal solution would be an additional predictive analytics tool that can run these ANOVA tests, with an option to specify whether the variable is categorical or continuous.
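For a contacted/not-contacted outcome like the one above, one common single test across all three groups is a chi-square test of equal proportions. The sketch below is plain Python, not an Alteryx tool or the R macro mentioned. The group sizes of 200 each are an assumption made purely for illustration (the post gives only percentages), and `chi_square_proportions` and `p_value_df2` are hypothetical helper names.

```python
import math

def chi_square_proportions(successes, totals):
    """Pearson chi-square statistic and degrees of freedom for k groups."""
    pooled_rate = sum(successes) / sum(totals)
    chi2 = 0.0
    for s, n in zip(successes, totals):
        cells = ((s, n * pooled_rate), (n - s, n * (1 - pooled_rate)))
        for observed, expected in cells:
            chi2 += (observed - expected) ** 2 / expected
    return chi2, len(totals) - 1

def p_value_df2(chi2):
    """Upper-tail probability of a chi-square variable with exactly 2 degrees of freedom."""
    return math.exp(-chi2 / 2)

totals = [200, 200, 200]   # ASSUMED group sizes, not from the post
contacted = [20, 50, 66]   # 10%, 25%, 33% of 200

chi2, df = chi_square_proportions(contacted, totals)
print(chi2, df, p_value_df2(chi2))
```

A small p-value here says only that the three rates are not all equal. Finding out which pairs differ still needs follow-up pairwise comparisons with a multiple-comparison correction such as Bonferroni.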

-Justin

Hi,

As it is so important to be able to calculate and present time-related concepts in modern businesses, is it not possible to have a better output choice? I have seen the reporting Chart tool, I have looked at the TS Plot tool, and I even noticed that the Laboratory Charting tool has disappeared. So can you please provide an output tool with some focused functionality for this missing piece?

Kind regards,

Peter

Loading large grd files takes a long time, and I see they load as polygons. I find the data crunches much faster as centroids. Please add "load as centroid" as an input option (same as for MapInfo "tab" files).

Also, would be great to load as integer (or select data type), since there are often too many decimals.

Last, but not least, the ability to export as grd or grc would be great since there are other platforms that use these files.

Thanks!

Gary

1. The Union tool

When switching to the Manual method and then adding fields upstream, the result is a warning: "Field was not found". I don't look for warnings. This should create a red error. Having fields fall off the workflow is a pain.

2. Unique tool

Changing fields upstream causes the tool to error out when the workflow runs. No issues are shown before the run.

3. Having containers all open up when I reopen a workflow is a nightmare when you have 20+ containers all overlapping.

One of my favorite features of Alteryx is the in-line browse in the results window, as well as the descriptive log, highlighting record counts into and out of tools. As I develop bigger and longer-running workflows, I would love to be able to save off these results to provide my QA analyst with a "cached" version of a run without them having to run it themselves. Providing them not only with a well-documented workflow, but with a complete data flow would be tremendously helpful in getting work checked. Our current process is to pass workflows off, and encourage the QA analyst to run them with the "Disable all Tools that write Output" option checked. While this is not an issue for smaller workflows, it is inefficient for larger ones, and also can cause some difficulties with access logistics like missing or inconsistent connection aliases.

I have seen some requests asking for saving of browse results (https://community.alteryx.com/t5/Alteryx-Product-Ideas/Save-Browse-Results-Until-Next-Run/idi-p/1827), but these primarily seem to be geared at data caching for further workflow development. My aim is to save the browse and results statuses of a completed workflow for the purpose of QA.

Note: I posed this as a question in the Data Preparation and Blending forum, but didn't receive an answer so thought I would propose it as an idea.

It would be great if deselecting fields in a Select tool updated the output window (before the next run) as a "review", so you can make sure you are removing what you expect and/or see other items left behind that should be removed. This would also be useful for seeing field names update as you organize and rename.

Often I join tables without pre-selecting the exact fields I want to pass, and so I clean up at the end of the join. I know this is not the best way, but a lot of the time I need something downstream and basically have to walk through the whole process to move the data along.

HenrietteH at Alteryx recommended that I submit this as a suggested future enhancement.

I have Oracle 11 and Oracle 12 clients on my machine. With Toad, I can toggle between either client's current home, but it appears that is not a feature in Alteryx. So now I am unable to execute ETL jobs from my machine or server because Alteryx gets confused. Currently, the only remedy I can think of is uninstalling the 11 client, which can cause dependency issues if I need it in the future.

In the DateTime tool, you should be able to specify AM/PM. Some other programs I use do this with an 'a' at the end. Here is an example of what I think it should be:

MM/dd/yyyy hh:mm a

| Input Date | Output Date |
|---|---|
| 09/10/2017 11:36 AM | 2017-09-10 11:36:00 |
| 09/10/2017 11:36 PM | 2017-09-10 23:36:00 |

Maybe I am missing something and this is already doable, but so far I haven't found a clean way to do it.
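For comparison, this is how the same conversion looks in Python, where `%I` is the 12-hour clock and `%p` is the AM/PM marker. It is only meant to show the expected behaviour, not what the DateTime tool does; `to_iso` is a hypothetical helper name, and `%p` parsing is assumed to run under an English/C locale.

```python
from datetime import datetime

def to_iso(text):
    """Parse 'MM/dd/yyyy hh:mm AM|PM' and return 'yyyy-MM-dd HH:mm:ss'."""
    parsed = datetime.strptime(text, "%m/%d/%Y %I:%M %p")
    return parsed.strftime("%Y-%m-%d %H:%M:%S")

print(to_iso("09/10/2017 11:36 AM"))  # 2017-09-10 11:36:00
print(to_iso("09/10/2017 11:36 PM"))  # 2017-09-10 23:36:00
```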

This may be too much of an edge case...

I would like to be able to feed a Dynamic Input component with an input file and a format template file, to make the input component completely dynamic. This is because I have Excel spreadsheets that I want to download, read and process hourly throughout the day. Every 4 hours another 4 columns are added to the spreadsheet, thereby changing the format of the spreadsheet. This then causes the Dynamic Input component to error because the input file does not match the static format template. I would be happy to store the 6 static templates, but feed these into the Dynamic Input along with the matching input file, thus making the component entirely dynamic. Does this make sense???

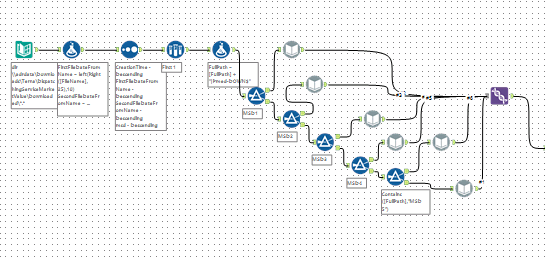

BTW, my workaround was to define six dynamic inputs, filter on the file type, then union the results:
