Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the image dimension constraints next to each of the pre-trained models;

> by adding a dedicated tool to split the training data correctly (so that each label has an equivalent number of images);

> and, at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
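The balanced split asked for in the second suggestion could work roughly like this: group the images by label, then draw the same number of images per label for training and for testing. This is a minimal plain-Python sketch, not how the Image Recognition tool works internally; the function name `balanced_split` and the `(path, label)` input shape are hypothetical.

```python
import random
from collections import defaultdict

def balanced_split(samples, train_per_label, test_per_label, seed=0):
    """Split (image_path, label) pairs so that every label contributes the
    same number of images to the training set and to the test set.

    A label with too few images raises an error instead of silently
    producing an unbalanced split.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    needed = train_per_label + test_per_label
    train, test = [], []
    for label, paths in sorted(by_label.items()):
        if len(paths) < needed:
            raise ValueError(f"label {label!r} has {len(paths)} images, needs {needed}")
        picked = rng.sample(paths, needed)  # draw without replacement
        train += [(p, label) for p in picked[:train_per_label]]
        test += [(p, label) for p in picked[train_per_label:]]
    return train, test

# Hypothetical file names: 10 cats and 4 dogs, 3 train + 1 test per label.
data = [(f"cat_{i}.png", "cat") for i in range(10)] + \
       [(f"dog_{i}.png", "dog") for i in range(4)]
train, test = balanced_split(data, train_per_label=3, test_per_label=1)
print(len(train), len(test))  # 6 2
```

The surplus cat images are simply left out; capping every label at the size of the smallest one is what keeps the classes equal.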

Question: do you plan to let the user choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Would it be possible to change the default setting for writing to a TDE output to "Overwrite File" rather than "Create New File"? Writing to a YXDB automatically overwrites the old file, but for some reason we have to make that change manually when writing to a TDE output. I can't tell you how many times I've run a module and had it error out at the end because it can't create a new file when the workflow has already been run once before!

Thanks!

Just ran into this today. I was editing a local file that is referenced in a workflow for input.

When I tried to open the workflow, Alteryx hung.

When I closed the input file, Alteryx finished loading the workflow.

If the workflow were running, I could understand this behavior, but it seems odd when merely opening the workflow.

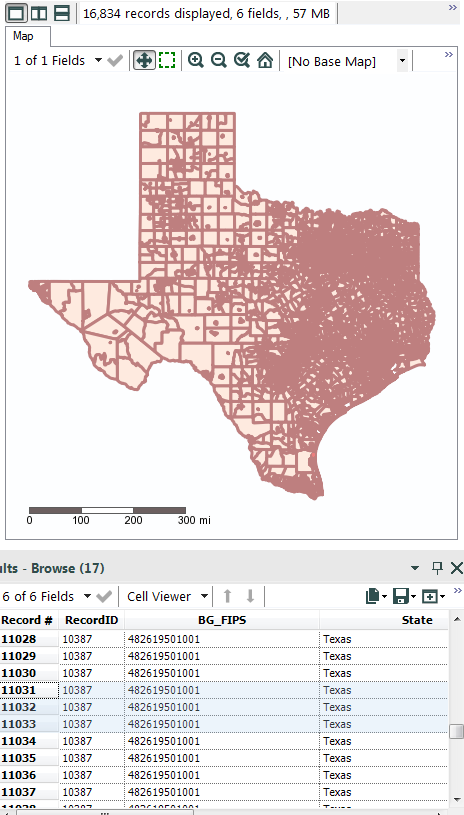

When viewing spatial data in the Browse tool, the colors that distinguish a selected feature from a non-selected one are too similar. If you are zoomed out and have lots of small features, it's nearly impossible to tell which spatial feature you have selected.

It would be great to give the user the ability to specify the border and/or fill color for selected features. This would really help them stand out. A custom color option would also be nice, so we can choose a color that is consistent with other GIS software we may use.

As an example, I attached a picture where I have 3 records selected, but it takes some scanning to find where they are in the "map".

Thanks

There is MD5 hashing capability, using MD5_ASCII(String) and MD5_UNICODE(String), found under the String functions.

So it seems possible to encrypt/mask sensitive data...

BUT! Using the following site, it's child's play to decrypt MD5 --> https://hashkiller.co.uk/md5-decrypter.aspx

I entered "password" and hashed it with MD5, giving me 5f4dcc3b5aa765d61d8327deb882cf99.

The site gave me the decrypted result in 131 ms...

It may be wise to also have:

SHA512_ASCII(String) and

SHA512_UNICODE(String)
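For comparison outside Alteryx, the post's MD5 value can be reproduced with Python's standard `hashlib`, alongside the SHA-512 alternative being requested. This is only a sketch; the function names are hypothetical and not the proposed Alteryx functions. One caveat worth knowing: an unsalted SHA-512 of a common word like "password" can also be looked up, so the sketch adds a keyed variant (HMAC-SHA-512 from the standard `hmac` module), where lookup tables do not help without the secret key.

```python
import hashlib
import hmac

def md5_hex(text: str) -> str:
    # Unsalted MD5, as in the post: fast to compute and easy to look up.
    return hashlib.md5(text.encode("utf-8")).hexdigest()

def sha512_hex(text: str) -> str:
    # Unsalted SHA-512: a longer digest, but the same input always gives the same output.
    return hashlib.sha512(text.encode("utf-8")).hexdigest()

def keyed_sha512_hex(text: str, key: bytes) -> str:
    # HMAC-SHA-512: without the secret key, precomputed lookup tables do not apply.
    return hmac.new(key, text.encode("utf-8"), hashlib.sha512).hexdigest()

print(md5_hex("password"))          # 5f4dcc3b5aa765d61d8327deb882cf99
print(len(sha512_hex("password")))  # 128
```

For plain ASCII text such as "password", the UTF-8 bytes are identical to the ASCII bytes; the exact encoding Alteryx uses for its `_UNICODE` variants is not assumed here.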

Best

Altan Atabarut @Atabarezz

Google BigQuery tables can contain nested, repeated fields, which means that a table "cell" can hold an array. These arrays are currently not supported by the Simba ODBC driver for Google BigQuery (GBQ), i.e. you can't download the full table including its arrays.

All Google Analytics Premium exports to GBQ contain these arrays. As an example, please have a look at the dataset described at this link:

https://support.google.com/analytics/answer/3416091?hl=en

The way to query these tables is with GBQ-specific SQL, for instance:

`Select * From flatten(flatten(flatten(testD113.ga_sessions,customDimensions),hits.customVariables),hits.customDimensions)`

This is not currently supported from within the Input tool with the Simba ODBC driver for GBQ, and I would like to suggest it as a product idea.
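To illustrate what flattening a repeated field does to the rows, here is a minimal Python sketch over in-memory records: one output row per array element, with the other columns copied onto each. It is not the BigQuery implementation; the sample values are invented, and the empty-array behavior chosen here (keep the row once with `None`) mirrors `LEFT JOIN UNNEST(...)` in BigQuery standard SQL.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]

def flatten(rows: List[Row], field: str) -> List[Row]:
    """Emit one output row per element of the repeated `field`.

    Other columns are copied onto every output row. A row whose array is
    empty or missing is kept once with `field` set to None.
    """
    out = []
    for row in rows:
        for value in row.get(field) or [None]:
            new_row = dict(row)  # shallow copy, so the input rows are untouched
            new_row[field] = value
            out.append(new_row)
    return out

# Invented sample shaped loosely like a GA sessions export.
sessions = [
    {"visitId": 1, "customDimensions": [{"index": 1, "value": "A"},
                                        {"index": 2, "value": "B"}]},
    {"visitId": 2, "customDimensions": []},
]
for r in flatten(sessions, "customDimensions"):
    print(r)
```

Nested paths such as `hits.customVariables` in the query above would need the same operation applied level by level, which is what the chained `flatten(...)` calls express.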

Thanks,

Hans

I regularly type true or false into a formula, expecting them to work as Boolean values.

As I understand it, SFTP support is planned for the next release (10.5). Are there plans to support PKI-based authentication as well?

This would be handy, as many companies move files around with third parties (and sometimes internally), and automating these processes would be very helpful. Also, some company policies prohibit relying on username/password authentication alone.

Anybody else have this requirement? Comments?

I know there's the Download tool, but have a look at that topic: the easiest solution so far is to use an external API with import.io.

I'm coming from the Excel world, where you enter a URL in Power Query, it scans the page, identifies the tables in it, asks you which one you want to retrieve, and gets it for you. This takes one copy-and-paste and two clicks.

Wouldn't it be great to have something similar in Alteryx?

Now if it also supported authentication you'd be my heroes 😉
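The "scan the page and list its tables" step described above can be sketched with Python's standard `html.parser`. This is a minimal illustration, not how Power Query or Alteryx do it; the class name `TableCollector` and the sample page are hypothetical, and fetching the page (e.g. with `urllib.request`) is left out so the sketch runs offline.

```python
from html.parser import HTMLParser

class TableCollector(HTMLParser):
    """Collect each top-level <table> on a page as a list of rows of cell text.

    Assumes cells and rows have explicit closing tags; text from a table
    nested inside a cell is folded into that cell.
    """

    def __init__(self):
        super().__init__()
        self.tables = []   # one entry per top-level table
        self._depth = 0    # current <table> nesting level
        self._row = None   # cells of the row being read
        self._cell = None  # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._depth += 1
            if self._depth == 1:
                self.tables.append([])
        elif self._depth != 1:
            return  # outside any table, or inside a nested one
        elif tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "table":
            self._depth = max(0, self._depth - 1)
        elif self._depth != 1:
            return
        elif tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

page = """<p>Intro text</p>
<table><tr><th>City</th><th>Count</th></tr><tr><td>Lyon</td><td>12</td></tr></table>
<table><tr><td>other</td></tr></table>"""
collector = TableCollector()
collector.feed(page)
for index, table in enumerate(collector.tables):
    print(index, table)  # the user would then pick a table by index
```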

Thanks

Tibo

I've found that double-clicking in the expression builder produces inconsistent behavior (possibly due to field names with spaces?).

It would be great if double-clicking a field selected everything from bracket to bracket inclusive, making it simpler to replace one field with another.

For instance:

Current:

IF [AVG AGE] >=5 THEN "Y" ELSE "N" ENDIF

Desired:

IF [AVG AGE] >=5 THEN "Y" ELSE "N" ENDIF (double-clicking anywhere in the field selects all of [AVG AGE], brackets included)
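The requested double-click behavior amounts to finding the bracketed field reference that surrounds the clicked character. A minimal Python sketch of that lookup follows; the function name `field_span_at` is hypothetical, and brackets inside string literals are not special-cased.

```python
from typing import Optional, Tuple

def field_span_at(expr: str, pos: int) -> Optional[Tuple[int, int]]:
    """Return (start, end) of the [Field Name] containing index `pos`,
    brackets included, or None if `pos` is not inside a field reference.

    `end` is exclusive, so expr[start:end] is the text to select.
    """
    if not 0 <= pos < len(expr):
        return None
    start = expr.rfind("[", 0, pos + 1)   # nearest "[" at or before pos
    if start == -1 or expr.rfind("]", start, pos) != -1:
        return None                       # no "[" yet, or that field closed before pos
    end = expr.find("]", pos)             # nearest "]" at or after pos
    if end == -1:
        return None
    return start, end + 1

expr = 'IF [AVG AGE] >=5 THEN "Y" ELSE "N" ENDIF'
start, end = field_span_at(expr, expr.index("AGE]"))
print(expr[start:end])  # [AVG AGE]
```

Searching outward for the brackets rather than for word boundaries is what makes a field name with spaces select as one unit.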

I periodically consume data from state governments that is available via an Esri ArcGIS Server REST endpoint, specifically a FeatureServer class.

For example: http://staging.geodata.md.gov/appdata/rest/services/ChildCarePrograms/MD_ChildCareHomesAndCenters/Fe...

Currently, I have to import the data via ArcMap or ArcCatalog and then export it to a datatype that Alteryx supports.

It would be nice to access this data directly from within Alteryx.
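Direct access would presumably go through the ArcGIS REST API's `query` operation on a FeatureServer layer. The sketch below only builds such a request URL with Python's standard library. The base URL and layer id `0` are placeholders (the URL in the post is truncated), and the paging parameters assume a server that supports pagination.

```python
from urllib.parse import urlencode

def feature_query_url(feature_server_url: str, layer_id: int,
                      offset: int = 0, page_size: int = 1000) -> str:
    """Build an ArcGIS REST API 'query' URL that requests one page of
    features, with all attributes, from a FeatureServer layer."""
    params = {
        "where": "1=1",                  # no attribute filter: every feature
        "outFields": "*",                # every attribute column
        "returnGeometry": "true",
        "f": "json",                     # Esri JSON response format
        "resultOffset": offset,          # paging: index of the first feature
        "resultRecordCount": page_size,  # paging: features per request
    }
    base = feature_server_url.rstrip("/")
    return f"{base}/{layer_id}/query?{urlencode(params)}"

# Placeholder service URL; the one in the post is truncated.
print(feature_query_url("https://example.com/arcgis/rest/services/Demo/FeatureServer", 0))
```

Requesting pages with increasing `resultOffset` until the server returns fewer features than `page_size` is one common way to pull a whole layer, since servers cap the number of features per response.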

Thanks!

This request is largely based on the implementation found in AzureML (take their free trial and check out the Deep Convolutional and Pooling NN example in their gallery). It allows you to specify custom convolutional and pooling layers in a deep neural network. This is an extremely powerful machine learning technique that could be tricky to implement, but it could perhaps start as (for example) a macro wrapped around something in Python, where these networks are currently easier to implement than in R.
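To make the two layer types concrete, here is what a single convolution layer followed by max pooling computes, written in plain Python with no training, bias, or channels. This is an illustration of the operations only, not the AzureML implementation. A real macro would more likely wrap an established deep-learning library, and the helper names here are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Stride-1, no-padding 2-D cross-correlation: what deep-learning
    libraries usually call a convolution layer (bias and channels omitted)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(m: Matrix) -> Matrix:
    """Element-wise max(0, x) activation."""
    return [[max(0, v) for v in row] for row in m]

def max_pool(m: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; edge rows/columns that do not fill a
    whole window are dropped."""
    return [[max(m[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(m[0]) - size + 1, size)]
            for i in range(0, len(m) - size + 1, size)]

image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 0],
          [0, 1]]            # adds each pixel to its lower-right neighbor
features = relu(conv2d_valid(image, kernel))
print(features)              # [[7, 9, 11], [15, 17, 19], [23, 25, 27]]
print(max_pool(features))    # [[17]]
```

In a trained network the kernel values are learned rather than fixed, and many kernels run in parallel to produce several feature maps.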

Date handling is very confusing.

DateTimeFormat

- Format string: %y is a 2-digit year and %Y is a 4-digit year. How about yy or yyyy? Much easier to remember, and consistent with other tools like Excel.

DateTimeDiff

- Format string: 'year', but in the function above the year is referenced as %y?? Too easy to mix this up.

Also, the documentation is limited. Please give each function its own page, plus an overview page that discusses date handling.
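Python's `datetime.strftime` uses the same `%y` / `%Y` convention described above, which makes it a convenient way to show the difference. The Excel-style wrapper below is a hypothetical illustration of the requested yy/yyyy syntax, not an Alteryx feature; it covers only year, month, and day tokens and ignores Excel's use of "mm" for minutes.

```python
import re
from datetime import datetime

# Hypothetical Excel-style tokens mapped to strftime codes.
TOKENS = {"yyyy": "%Y", "yy": "%y", "mm": "%m", "dd": "%d"}
# Longest token first, so "yyyy" is never read as two "yy".
TOKEN_RE = re.compile("|".join(sorted(TOKENS, key=len, reverse=True)))

def excel_style_format(value: datetime, pattern: str) -> str:
    escaped = pattern.replace("%", "%%")  # keep literal % signs literal
    return value.strftime(TOKEN_RE.sub(lambda m: TOKENS[m.group(0)], escaped))

d = datetime(2017, 3, 9)
print(d.strftime("%y"), d.strftime("%Y"))   # 17 2017
print(excel_style_format(d, "dd/mm/yyyy"))  # 09/03/2017
```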

Would it be possible to add the capability to import or build a CSS style sheet for reporting in a future release? I'm sure I'm not the first to think about having style sheets in reports, so you don't have to define fonts, colors, and all that HTML stuff for each output line.

The Alteryx S3 connector currently supports only SSE-S3 encryption. The current version of the connector does not use AWS Signature Version 4, so it fails to upload/download S3 objects that are encrypted with AWS KMS keys. This is a much-needed feature for the S3 connector.

The Field Summary tool is a very useful addition for quickly creating data dictionaries and analysing data sets. However, it ignores Boolean data types and seems to raise a strange conversion error, 'DATETIMEDIFF1: "" is not a valid DateTime', with no indication that it doesn't like Boolean fields. (Note: I'm guessing this error relates to the Boolean fields, as there's no other indication of an issue and actual DateTime fields make it through the tool without problems.)

Using the Field Summary tool can actually give a misleading picture of files with many fields, because it simply ignores fields of a data type it doesn't handle.

The only way to see all fields in the table is the Field Info tool, which is also very useful; however, it should be unnecessary to 'left join' (in the SQL sense) Field Info and Field Summary to get a reliable overview of the file being analysed.

Could the Field Summary tool therefore be changed to at least acknowledge all data types in the file?
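The 'left join' workaround described above boils down to the following, sketched in Python on made-up rows: pair every Field Info row with its Field Summary row by field name and list the fields that come back unmatched. The column names "Name" and "Type" are assumptions about the two tools' outputs, and the function name is hypothetical.

```python
def left_join_on_name(field_info, summary):
    """Pair every Field Info row with its Field Summary row (or None),
    matching on the field name, like a SQL LEFT JOIN."""
    summary_by_name = {row["Name"]: row for row in summary}
    return [(info, summary_by_name.get(info["Name"])) for info in field_info]

# Made-up rows; "Name" and "Type" are assumed column names.
field_info = [{"Name": "CustomerID", "Type": "Int32"},
              {"Name": "IsActive", "Type": "Bool"},
              {"Name": "SignupDate", "Type": "Date"}]
field_summary = [{"Name": "CustomerID"}, {"Name": "SignupDate"}]

joined = left_join_on_name(field_info, field_summary)
skipped = [info["Name"] for info, summ in joined if summ is None]
print(skipped)  # ['IsActive']
```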

I have run into an issue where the progress display does not show the correct number of records after certain tools in my workflow. It was explained to me that this is because only a certain amount of data is "cached", so the count is based on that. If I add a Browse tool, I can see the data properly.

For my team and me, this is a real inconvenience. We have come to rely on the record counts that appear after each tool. The point of "Show Progress" is that I don't have to insert a Browse tool after every step, which saves space on my computer. I would like to see the actual number appear again; I don't see why this changed in the first place.

Currently, when creating scatter plots, you are unable to order the plotted points by a sub-group of the data (i.e. the legend). It would be nice to be able to choose which legend group is drawn first, above the other data series. Could we also have the option to remove the 3D effect from scatter plots?

Thanks,

Oliver

It would be great to be able to take a random x number of records or x% of records for each group in the Sample tool.

Right now, the Sample tool lacks the random % feature, and the Random % Sample tool lacks the group-by feature.
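Combining the two features would look roughly like this in Python: group the rows, then draw either a fixed count or a percentage at random from each group. It is a sketch of the requested behavior, not of either Alteryx tool; the function name and the round-up rule for percentages are assumptions.

```python
import math
import random
from collections import defaultdict

def sample_per_group(rows, key, n=None, pct=None, seed=None):
    """Randomly keep n rows, or pct percent of rows, from every group.

    rows: list of dicts; key: the column to group by.
    Percent sizes are rounded up, so a non-empty group never yields zero rows
    when pct > 0; requests larger than a group return the whole group.
    """
    if (n is None) == (pct is None):
        raise ValueError("pass exactly one of n or pct")
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    rng = random.Random(seed)  # seeded generator for repeatable samples
    sampled = []
    for members in groups.values():
        k = n if n is not None else math.ceil(len(members) * pct / 100)
        sampled.extend(rng.sample(members, min(k, len(members))))
    return sampled

rows = [{"region": "North", "id": i} for i in range(10)] + \
       [{"region": "South", "id": i} for i in range(3)]
print(len(sample_per_group(rows, "region", pct=50, seed=42)))  # 7 (5 North + 2 South)
```

Rounding up is one design choice among several; rounding to nearest would instead let very small groups drop out of a low-percentage sample.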

Just like the File Geodatabase, Esri has an enterprise geodatabase for servers; in our case, on Oracle. We would like the option to output to an Oracle geodatabase just as we can to a normal Oracle database. This would greatly help our process flows.

Please extend the Workflow Dependencies functionality to include the dependencies of macros used in the workflow. Currently, macros are simply listed as dependencies themselves, but their underlying dependencies (e.g. data sources) are not included.

We have a large ETL process developed in Alteryx that uses several layers of custom, complex macros and several data sources referenced through aliases. The process is currently deployed locally (not on Server) and executed ad hoc, but it will be moved to the Server platform at some point.

Recently I had to prepare an employee to run the process. This requires creating aliases and associated connections and making sure access to the needed network locations (macro storage, temp files, etc.) is in place. Hence I needed to identify all aliases and components/macros used. As everything is wrapped neatly in a single workflow, I hoped the Workflow Dependencies functionality would cover the dependencies of the macro nodes within it, but unfortunately it didn't, and I had to look through the dependencies of 10-15 macros individually.
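What is being asked for is essentially a transitive walk over the dependency graph: list the workflow's direct dependencies, then descend into every macro and collect its dependencies too. A minimal Python sketch, assuming the direct dependencies of each workflow and macro are already known; the map, the file names, and the `alias:` / `path:` labels are hypothetical.

```python
from typing import Dict, List, Set

def all_dependencies(root: str, direct: Dict[str, List[str]]) -> Set[str]:
    """Collect every dependency reachable from `root`, descending into macros.

    `direct` maps a workflow or macro to its direct dependencies; anything
    not in the map (a data source, alias, folder, ...) is treated as a leaf.
    The `seen` set stops endless loops if macros reference each other.
    """
    seen = {root}
    stack = list(direct.get(root, []))
    while stack:
        item = stack.pop()
        if item in seen:
            continue
        seen.add(item)
        stack.extend(direct.get(item, []))  # descend into nested macros
    return seen - {root}

# Hypothetical dependency map for a workflow that uses two layers of macros.
deps = {
    "etl.yxmd": ["clean.yxmc", "alias:SalesDB"],
    "clean.yxmc": ["lookup.yxmc", "path:temp"],
    "lookup.yxmc": ["alias:RefDB"],
}
print(sorted(all_dependencies("etl.yxmd", deps)))
# ['alias:RefDB', 'alias:SalesDB', 'clean.yxmc', 'lookup.yxmc', 'path:temp']
```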
