Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and lastly, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
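Until the tool handles these cases itself, both points can be approximated in a pre-processing step. The sketch below is a minimal illustration, assuming the images are listed as (path, label) pairs and that the tool would accept an RGB image whose three channels are identical; `balanced_split` and `gray_to_rgb` are hypothetical helpers, not part of the Image Recognition Tool.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs so that every label contributes the same
    number of items, downsampling larger classes to the smallest one."""
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return [], []

    rng = random.Random(seed)  # fixed seed for a reproducible split
    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)

    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label][:]
        rng.shuffle(items)
        kept = items[:per_label]  # drop the surplus of larger classes
        train += [(item, label) for item in kept[:n_train]]
        test += [(item, label) for item in kept[n_train:]]
    return train, test


def gray_to_rgb(pixels):
    """Replicate each grayscale value into an (r, g, b) triple."""
    return [[(v, v, v) for v in row] for row in pixels]
```

Downsampling to the smallest class is only one way to balance labels; oversampling the smaller classes would keep more data at the cost of duplicated images.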

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi

1.

I'm from Denmark, and like several other European countries we use a comma instead of a dot as the decimal separator, and a dot as the thousands separator.

So if I'm working in a flow with lots of price fields, say cost price, amount per unit, amount, and amount including VAT, I need to do a multi-field replace. Otherwise I don't get output I can work with in Excel or other programs.

So it would be great to be able to set separators at the flow level, like you can in Excel when importing.
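What a flow-level separator setting would do to each selected field can be sketched as follows. This is a minimal illustration, assuming Danish formatting (`.` for thousands, `,` for decimals); `parse_localized` and `convert_fields` are hypothetical names, and `Decimal` is used so that prices are not rounded by binary floating point.

```python
from decimal import Decimal


def parse_localized(text, decimal_sep=",", thousands_sep="."):
    """Turn a locally formatted number such as '1.234,56' into a Decimal."""
    cleaned = text.strip().replace(thousands_sep, "").replace(decimal_sep, ".")
    return Decimal(cleaned)


def convert_fields(record, fields, **separators):
    """Apply the same separator setting to every listed field in one pass."""
    return {
        name: parse_localized(value, **separators) if name in fields else value
        for name, value in record.items()
    }


row = {"cost_price": "1.000,00", "vat": "250,00", "sku": "A-1"}
print(convert_fields(row, {"cost_price", "vat"}))
```

The point of the design is that the separators are declared once and applied to a list of fields, instead of configuring one replace per field.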

2.

Being able to set a date format at the flow level.

Let's say my input data is 12.12.2019 and I need 2019-12-12 in my output. If I work with several different date fields, I need to use several DateTime tools.

An alternative could be a multi-field DateTime tool?
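A multi-field date conversion of this kind can be sketched as below. It is a minimal illustration, assuming the input uses day.month.year (so 12.12.2019 is read as 12 December 2019); `convert_dates` and its parameters are hypothetical.

```python
from datetime import datetime


def convert_dates(record, fields, in_fmt="%d.%m.%Y", out_fmt="%Y-%m-%d"):
    """Reformat every listed date field in one pass; other fields pass through."""
    out = dict(record)
    for name in fields:
        value = out.get(name)
        if value:  # leave missing or empty values alone
            out[name] = datetime.strptime(value, in_fmt).strftime(out_fmt)
    return out


order = {"order_date": "12.12.2019", "ship_date": "01.02.2020", "id": "7"}
print(convert_dates(order, ["order_date", "ship_date"]))
```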

3.

Having a search function in the Select tool? And maybe a numbered ordering.

So if I scroll down, I could enter 3, which would make this my third displayed field?

Labels: Category Input Output, Category Transform, Data Connectors, Desktop Experience

Slightly off track, but definitely needed...

I'd like to propose a new way of browsing.

With this new feature you might no longer need the traditional Browse Tool, to the extent that it could eventually be decommissioned. Here's how the new Browse would work.

So far, the Browse Tool is helpful for mid-stream data sanity checks...

But a complex workflow needs so many Browse Tools that they waste a lot of canvas space and unnecessarily complicate and slow down the workflow.

Expected Browse:

Clicking on any tool should automatically populate its results in the Results window, without the need for a Browse Tool.

1) Tools with a single output: clicking on the tool or its output plug should reveal its data (e.g., Summarize Tool)

2) Tools with more than one output: clicking on each output plug should reveal its data (e.g., Join)

BONUS: Clicking on a tool's input plug should reveal its input data

Labels: Category Input Output, Data Connectors

Imagine we have the following output fields: Name, RegNo, Mark1, Mark2, Total.

Here Total can be computed and brought in as a derived field using the Formula tool.

However, Name and RegNo will be the same in the output too.

- Instead of manually mapping Output_Name to Input_Name, a smart mapping feature could be introduced to map fields automatically based on column names.

- Once smart mapping is done, the developer can review it and make changes if needed.

- This would reduce the manual work of selecting from existing fields.

- It would be helpful when processing hundreds of inputs into an output report.
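Name-based auto-mapping of the kind described above can be sketched in a few lines. This is a minimal illustration, not how Alteryx would implement it: it assumes names match after lower-casing and removing spaces and underscores, and `smart_map` and `normalize` are hypothetical helpers. Unmatched output fields are returned separately so the developer can review and map them by hand.

```python
def normalize(name):
    """Lower-case and drop spaces/underscores so 'Reg_No' matches 'RegNo'."""
    return "".join(ch for ch in name.lower() if ch not in " _")


def smart_map(input_fields, output_fields):
    """Propose output -> input mappings by normalized name."""
    lookup = {}
    for field in input_fields:
        lookup.setdefault(normalize(field), field)  # first match wins on collisions
    mapping, unmatched = {}, []
    for out in output_fields:
        source = lookup.get(normalize(out))
        if source is None:
            unmatched.append(out)  # left for manual review
        else:
            mapping[out] = source
    return mapping, unmatched


print(smart_map(["Name", "Reg_No", "Mark1", "Mark2"],
                ["name", "RegNo", "Mark1", "Mark2", "Total"]))
```

In this example Total ends up unmatched, which fits the post: it is a derived field produced by the Formula tool rather than mapped from an input.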

Thanks,

Krishna

Labels: Category Input Output, Data Connectors

Currently, if the same Excel file is written to more than once in a workflow, even to different sheets within the file, the workflow will error out if the save operations overlap. In some cases the Block Until Done tool will not help, because there are two data streams (for example, when a Filter tool's two outputs are both being saved to the same file).

It would be great if we could output to the same Excel file more than once on the same workflow.

Labels: Category Input Output, Data Connectors

Hi,

It would be very useful if I could consolidate two different inputs into the same output: 1) the whole output flow, and 2) a summary of that output. That would save time on pivot-table analysis, for instance.

Thanks

Labels: Category Input Output, Category Reporting, Data Connectors, Desktop Experience

Currently, if I read multiple files through a Directory tool plus Dynamic Input, I cannot tell which file each final record came from, and that information would be extremely useful.

I also know the files need to have the same schema (a second limitation), but the filename itself would be handy.
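Outside Alteryx, tagging each record with its source file while unioning same-schema files can be sketched as below. This is a minimal illustration in plain Python, assuming CSV inputs with a header row; `read_with_filename` and its `field` and `position` parameters are hypothetical, with `position` choosing whether the filename column comes first or last.

```python
import csv
from pathlib import Path


def read_with_filename(paths, field="FileName", position="first"):
    """Union several same-schema CSV files, tagging every row with its source file."""
    rows = []
    for path in paths:
        with open(path, newline="", encoding="utf-8") as fh:
            for row in csv.DictReader(fh):
                tag = {field: Path(path).name}
                # dicts keep insertion order, so the merge order sets the column position
                rows.append({**tag, **row} if position == "first" else {**row, **tag})
    return rows
```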

Labels: Category Input Output, Data Connectors

One feature that would be great to add to the Input tool's existing "Output File Name as Field" option is the ability to place that field at the beginning or the end of the data set (for example, by setting a default, ticking a check box, or choosing between two options). With large data sets you sometimes need to manipulate that field, and it is easier to work with right away if it sits at the beginning. Right now you have to drag in a Select tool to move it.

Labels: Category Input Output, Data Connectors

Dear Alteryx Community,

I've tried my best to make sure this suggestion hasn't been posted before, so I hope I haven't missed a feature already present in Alteryx or re-posted an idea that was already submitted.

There is one operation I do so often that I wonder whether it could be made easier. I would consider myself a very basic Alteryx user, so many of my workflows end in Excel, CSV, or Alteryx Database files. Here is what I would love (if possible):

In my workflow, I wish I could right-click on an Output tool that writes a file-based output such as Excel, .csv, or .yxdb and choose a context-menu option called "Start Workflow". This would open a new workflow with one Input tool already present, pointing to the same path as the Output tool I right-clicked on.

So many times I create an output and then need to use it. This usually means copying the path, creating an Input tool, and pasting the path in. It would be so much easier if a few of these steps could be done automatically.

Otherwise, if the community knows a simpler or better way, I would love to know.

Thanks in advance

Amar

Labels: Category Input Output, Data Connectors

I would be interested in being able to turn off Output tools individually, rather than only being able to disable all tools that write output at once. It would be good to choose which Output tools write output, instead of having to place them all in a Tool Container.

Labels: Category Input Output, Data Connectors

I notice that my Output files, at least, remain locked as "being used by another program" after the workflow is closed, and I have to close Alteryx entirely to release them. A file should be released as soon as the workflow using it finishes running or, failing that, as soon as the workflow is closed, rather than only when Alteryx itself is closed.

...or is this just my issue?

Labels: Category Input Output, Data Connectors

In the Output Data tool, when the file type is YXDB, one of the options is to Save Source & Description.

Splitting these into "Save Source" and "Save Description" -- independent options -- would be useful.

Sometimes I don't want the file recipient to know how a field was derived but I want to include a description.

Right now there is no easy way of doing this.

Labels: Category Input Output, Data Connectors

Occasionally, the Calgary Loader tool does not write out all the fields passed to it. This seems to happen after writing out a certain number of fields and then, when rerunning, adding a new output field. It is very annoying, because you don't know it has happened until processing is complete and you examine the result. I usually delete the Calgary files manually before rerunning, to avoid the versioning, but it still happens.

Also, please make the versioning optional with a check box, default off.

Labels: Category Calgary, Category Input Output, Data Connectors, Desktop Experience

Hello,

I work with Alteryx databases a lot to store historical data so I don't have to pull it again in the future. It would be great if, when creating a database, I could lock it for editing so that it can only be edited by selected users with a username and password.

Thanks,

Chris

Labels: Category Input Output, Data Connectors

Hello All,

I received from an AWS adviser the following message:

_____________________________________________

Skip Compression Analysis During COPY

Checks for COPY operations delayed by automatic compression analysis.

Rebuilding uncompressed tables with column encoding would improve the performance of 2,781 recent COPY operations.

This analysis checks for COPY operations delayed by automatic compression analysis. COPY performs a compression analysis phase when loading to empty tables without column compression encodings. You can optimize your table definitions to permanently skip this phase without any negative impacts.

Observation

Between 2018-10-29 00:00:00 UTC and 2018-11-01 23:33:23 UTC, COPY automatically triggered compression analysis an average of 698 times per day. This impacted 44.7% of all COPY operations during that period, causing an average daily overhead of 2.1 hours. In the worst case, this delayed one COPY by as much as 27.5 minutes.

Recommendation

Implement either of the following two options to improve COPY responsiveness by skipping the compression analysis phase:

Use the column ENCODE parameter when creating any tables that will be loaded using COPY.

Disable compression altogether by supplying the COMPUPDATE OFF parameter in the COPY command.

The optimal solution is to use column encoding during table creation since it also maintains the benefit of storing compressed data on disk. Execute the following SQL command as a superuser in order to identify the recent COPY operations that triggered automatic compression analysis:

```sql
WITH xids AS (
    SELECT xid FROM stl_query WHERE userid > 1 AND aborted = 0
    AND querytxt = 'analyze compression phase 1' GROUP BY xid)
SELECT query, starttime, complyze_sec, copy_sec, copy_sql
FROM (SELECT query, xid, DATE_TRUNC('s', starttime) starttime,
             SUBSTRING(querytxt, 1, 60) copy_sql,
             ROUND(DATEDIFF(ms, starttime, endtime)::numeric / 1000.0, 2) copy_sec
      FROM stl_query q JOIN xids USING (xid)
      WHERE querytxt NOT LIKE 'COPY ANALYZE %'
        AND (querytxt ILIKE 'copy %from%' OR querytxt ILIKE '% copy %from%')) a
LEFT JOIN (SELECT xid,
                  ROUND(SUM(DATEDIFF(ms, starttime, endtime))::NUMERIC / 1000.0, 2) complyze_sec
           FROM stl_query q JOIN xids USING (xid)
           WHERE (querytxt LIKE 'COPY ANALYZE %'
              OR querytxt LIKE 'analyze compression phase %') GROUP BY xid) b USING (xid)
WHERE complyze_sec IS NOT NULL ORDER BY copy_sql, starttime;
```

Estimate the expected lifetime size of the table being loaded for each of the COPY commands identified by the SQL command. If you are confident that the table will remain under 10,000 rows, disable compression altogether with the COMPUPDATE OFF parameter. Otherwise, create the table with explicit compression prior to loading with COPY.

_____________________________________________
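The advisor's two options can be made concrete by generating the SQL they describe. This is a minimal sketch, assuming a CSV file in S3 loaded with an IAM role; the table, bucket, role ARN, `FORMAT AS CSV`, and the choice of `ZSTD` encoding are placeholder assumptions, and `plan_redshift_load` is a hypothetical helper. It does not show whether the Alteryx Redshift bulk output can be told to emit these options, which is the open question in this post.

```python
def plan_redshift_load(table, columns, s3_uri, iam_role, expected_rows):
    """Return the SQL statements for a load that skips COPY's compression analysis.

    Follows the advisor's rule: tables expected to stay under 10,000 rows get
    COMPUPDATE OFF; larger tables are created with explicit column encodings.
    Values are interpolated without quoting, so use trusted inputs only.
    """
    copy_sql = f"COPY {table} FROM '{s3_uri}' IAM_ROLE '{iam_role}' FORMAT AS CSV"
    if expected_rows < 10_000:
        # Small table: disable automatic compression entirely.
        return [copy_sql + " COMPUPDATE OFF;"]
    # Larger table: declare encodings up front so COPY has nothing to analyze.
    column_defs = ",\n    ".join(
        f"{name} {sql_type} ENCODE ZSTD" for name, sql_type in columns
    )
    create_sql = f"CREATE TABLE {table} (\n    {column_defs}\n);"
    return [create_sql, copy_sql + ";"]


for stmt in plan_redshift_load(
    "sales",
    [("id", "INTEGER"), ("amount", "DECIMAL(12,2)")],
    "s3://example-bucket/sales.csv",
    "arn:aws:iam::123456789012:role/ExampleRole",
    expected_rows=50_000,
):
    print(stmt)
```

The advisor prefers the encoded-table option because it keeps the data compressed on disk; `COMPUPDATE OFF` trades that benefit for a faster load of small tables.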

When I ran the suggested query to check the executed COPY commands, I found that all of them came from the Alteryx Redshift bulk output.

Is there any way to implement this “Skip Compression Analysis During COPY” recommendation in Alteryx to maximize performance, as AWS suggests?

Thank you in advance,

Gabriel

Labels: Category Input Output, Data Connectors

Good afternoon,

I work with a large group of individuals, close to 30,000, and many of our files are .dif/.kat files used to import data into certain applications and software that pertain to our work. We were wondering whether support for these formats has been brought up before and how likely it is to be added.

Labels: Category Input Output, Data Connectors

Yeah, so when you have 15 workflows for some folks, and you've actually decided to publish to a test database first, and now you have to publish to a production database, it is a *total hassle*, especially if you are using custom field mappings. Basically you have to remap N times, where N is your number of new outputs.

Maybe there is a safety/sanity-check reason for this, but man, it would be so nice to be able to copy an output, change the alias to a new destination, and just have things sing along. BRB, gotta go change 15 workflow destination mappings.

Labels: API SDK, Category Developer, Category Input Output, Data Connectors

Hi all,

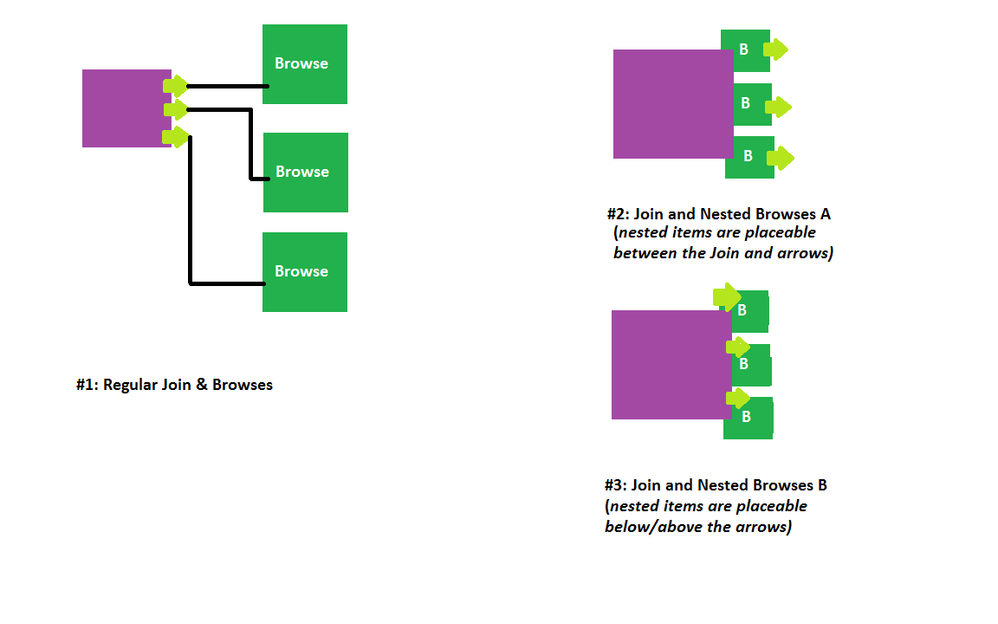

I often feel like workflows can easily become overly cluttered with Browses, especially around Join and other multi-output tools. I need to see the output of most of the data streams, so I can't just delete the Browses and be done with them (as I'll likely need to see them over and over again).

To solve this problem, Alteryx could potentially add a "nested browse" view or tool. I've attached a picture to show what I mean. This would unclutter the area right after multi-output tools significantly.

What are your thoughts? Have I missed any neat trick or functionality that can already do something like this?

Labels: Category Input Output, Data Connectors

There should be an option to not overwrite database values with Null values when using the Output Data tool with these options:

- File Format = ODBC Database (odbc:)

- Output Options = Update;Insert if new

For my part this applies to MS SQL Server databases, but it might affect other destinations as well.
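The requested behavior can be sketched as an in-memory "update; insert if new" in which Nulls never overwrite stored values. This is a minimal illustration only, assuming rows are dicts keyed by a hypothetical `id` column with Python `None` standing in for Null; `upsert_skip_nulls` is a hypothetical name. On SQL Server the same effect is commonly written as `SET col = COALESCE(source.col, target.col)` in an UPDATE or MERGE.

```python
def upsert_skip_nulls(table, incoming, key="id"):
    """'Update; Insert if new', except that None (Null) never overwrites stored data."""
    for row in incoming:
        k = row[key]
        if k in table:
            # only non-null incoming values replace existing ones
            table[k].update({col: val for col, val in row.items() if val is not None})
        else:
            table[k] = dict(row)  # new rows are inserted as-is, nulls included
    return table


db = {1: {"id": 1, "name": "A", "city": "Oslo"}}
upsert_skip_nulls(db, [{"id": 1, "name": None, "city": "Bergen"},
                       {"id": 2, "name": "B", "city": None}])
print(db)
```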

Labels: Category Input Output, Data Connectors

I would love to have the ability to include a hyperlink (or even more formatting options) in my app's output message.

Currently, when I have an app that runs and I want my users to move on to a different web location, they have to copy and paste the URL I provide rather than just clicking a link to go to the external report.

Labels: Category Apps, Category Input Output, Data Connectors, Desktop Experience

When outputting files, it is usually beneficial for characters that would cause trouble with formatting/syntax to be properly escaped. However, there are situations where suppressing this behavior is desirable.

Such a feature is particularly important when outputting JSON files. Currently, if a file is output as JSON, quotation marks occurring within a field are always escaped, regardless of whether this conforms to the JSON standard. There are a variety of workarounds for this, including pre-formatting all fields to look like JSON and then outputting as a \0-delimited CSV, but in many cases there is no need to escape any characters when outputting JSON.

A simple toggle, like the one created for suppressing the BOM in CSVs, to disable character escaping would make creating JSON objects simpler and reduce the number of workarounds required to output proper JSON.
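The difference between escaping a field and embedding it can be illustrated with Python's standard `json` module. Escaping quotes inside a string value is what JSON requires for plain text; the problem appears when a field already holds JSON text and should be nested rather than stringified. This is a minimal sketch; `to_json` and its `raw_json_fields` parameter are hypothetical and only approximate what such a toggle might do.

```python
import json


def to_json(record, raw_json_fields=()):
    """Serialize a record; fields listed in raw_json_fields already hold JSON
    text, so they are parsed and nested instead of being escaped as strings."""
    out = {
        name: json.loads(value) if name in raw_json_fields else value
        for name, value in record.items()
    }
    return json.dumps(out)


record = {"id": 1, "payload": '{"name": "Ann", "tags": ["a", "b"]}'}
print(to_json(record))               # payload is a string with escaped quotes
print(to_json(record, {"payload"}))  # payload is a nested JSON object
```

Parsing the field first also validates it, so a malformed fragment fails loudly instead of producing broken output.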

Labels: Category Input Output, Data Connectors


