Alteryx Connect Ideas

Share your Connect product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Hi Alteryx team,

In Connect, users can open workflows, open reports (such as Tableau reports), or use a data source in a workflow via the blue button on an asset page. With the new functionality for cataloguing APIs, would it be possible to add this button for API endpoints as well, so that users could trigger the API directly from Connect?

Thank you very much.

Michal

- Connect

- Designer Integration

- User Interface

We had an idea: a .yxdb file carries all of its metadata, including the field descriptions column populated by earlier Formula or Select tools. It would be very useful to have that in Connect, so that you can easily write a data dictionary from Alteryx Designer.

Our Data Catalogue in Connect has about 2 million items (tables, views, columns).

I see the following issues:

- We collect metadata from 10+ DBMSs. After each Metadata Loader run, Alteryx Connect starts the load_alteryx_db script and processes the whole staging area (the DB_* tables), not only the metadata set just extracted from a single DBMS. This leads to huge redundancy.

- Following from the first issue: the one-by-one comparison of loaded metadata takes a lot of time in a real environment with 1-2 million items (an ordinary situation in a large bank). This comparison is also executed several times, so the redundancy grows with the number of DBMS servers.

All queries in this script that take a column or table name as a parameter (e.g. src.TABLE_NAME='${query_table_name}' AND src.COLUMN_NAME='${query_column_name}') are executed once per column in the Data Catalogue, i.e. millions of times. The script runs very slowly because it executes so many queries.

Can you optimize this process somehow?
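To illustrate the kind of optimization being asked for, here is a minimal sketch of a set-based comparison that is restricted to one source system, instead of one parameterized query per column. It is not the actual load_alteryx_db logic: the table names `staging_columns` and `catalog_columns`, the `source_system` column, and the function name are all hypothetical, and SQLite is used only to keep the example self-contained.

```python
import sqlite3


def changed_columns(conn, source_system):
    """Return staged columns of ONE source system that are new or changed.

    A single LEFT JOIN replaces the per-column lookups, and the WHERE
    clause limits the work to the DBMS that was just extracted.
    Table and column names here are hypothetical, not Connect's schema.
    """
    sql = """
        SELECT s.table_name, s.column_name
        FROM staging_columns AS s
        LEFT JOIN catalog_columns AS c
          ON  c.source_system = s.source_system
          AND c.table_name    = s.table_name
          AND c.column_name   = s.column_name
        WHERE s.source_system = ?
          AND (c.column_name IS NULL OR c.data_type <> s.data_type)
        ORDER BY s.table_name, s.column_name
    """
    return conn.execute(sql, (source_system,)).fetchall()


def demo():
    """Build a tiny in-memory example and compare one source system."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE staging_columns (
            source_system TEXT, table_name TEXT, column_name TEXT, data_type TEXT);
        CREATE TABLE catalog_columns (
            source_system TEXT, table_name TEXT, column_name TEXT, data_type TEXT);
        INSERT INTO catalog_columns VALUES
            ('oracle_a', 'ACCOUNTS', 'ID',   'NUMBER'),
            ('oracle_a', 'ACCOUNTS', 'NAME', 'VARCHAR2'),
            ('mssql_b',  'ORDERS',   'ID',   'INT');
        INSERT INTO staging_columns VALUES
            ('oracle_a', 'ACCOUNTS', 'ID',     'NUMBER'),
            ('oracle_a', 'ACCOUNTS', 'NAME',   'CLOB'),
            ('oracle_a', 'ACCOUNTS', 'OPENED', 'DATE'),
            ('mssql_b',  'ORDERS',   'ID',     'INT'),
            ('mssql_b',  'ORDERS',   'TOTAL',  'DECIMAL');
    """)
    return changed_columns(conn, "oracle_a")
```

With this shape, the number of queries depends on the number of loader runs rather than on the number of columns in the catalogue, and rows staged for other DBMSs are not re-compared.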

- Administration

- Connect

- Designer Integration

- Loaders

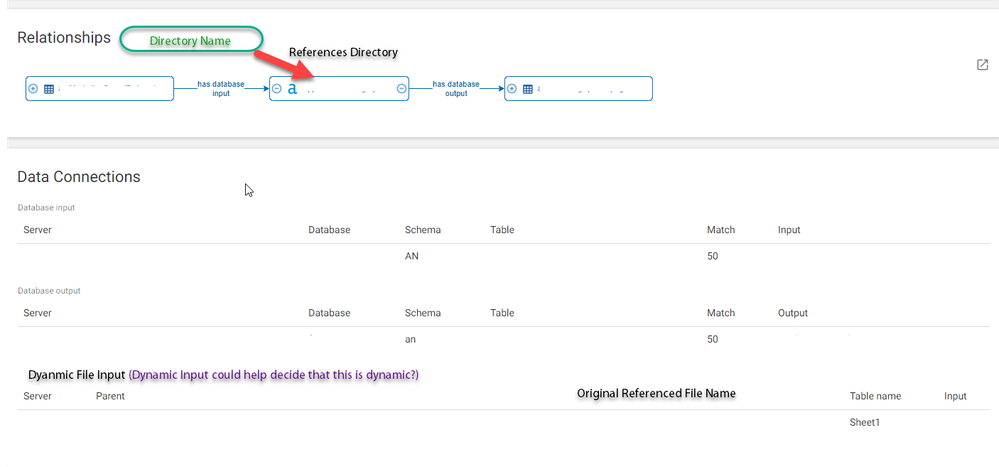

We have some scheduled workflows that use the Download tool for API calls. When we scrape them with Connect, there are no references to these calls in the "Relationships" or "Data Connections" areas.

Even if this is difficult for Alteryx to scrape from a workflow, I would love the ability to create entities like this and manually connect them as a data source. We have some partners where 10 to 15 API calls are required to pull the entire data set. It would be great to know which workflows reference those APIs so that, if changes are made on the partner's side, we can easily identify which workflows are impacted.
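As a rough illustration of the impact lookup described here, the sketch below builds a reverse index from API host to the workflows that mention it. It assumes the endpoint URLs appear literally in the workflow file text, which will not hold when the Download tool takes its URL from an upstream field; the function name and the sample workflow names are hypothetical.

```python
import re
from collections import defaultdict
from urllib.parse import urlparse

# Naive URL matcher: stops at whitespace, quotes, and angle brackets.
URL = re.compile(r"https?://[^\s\"'<>]+")


def workflows_by_api_host(workflow_texts):
    """Map each API host to the sorted list of workflows that mention it.

    workflow_texts: dict of workflow name -> raw workflow file text.
    Assumption: URLs are stored as plain text inside the workflow file.
    """
    index = defaultdict(set)
    for name, text in workflow_texts.items():
        for url in URL.findall(text):
            host = urlparse(url).netloc.lower()
            if host:
                index[host].add(name)
    return {host: sorted(names) for host, names in index.items()}
```

For example, calling it with `{"daily_orders.yxmd": "<URL>https://api.partner.example/v1/orders</URL>"}` yields an entry for `api.partner.example` that lists `daily_orders.yxmd`, which is the question to ask when that partner changes an endpoint.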

- Connect

- Designer Integration

- User Interface

I published a workflow today that scans a directory for files and then pushes them to a Dynamic Input. I noticed that in Connect there is no relationship anywhere indicating that we are scraping a directory.

Connect shows the DB inputs and outputs and the "File Input" that references the file the Dynamic Input was originally configured to read, but nothing references the directory other than the notes I have added to the description.

The reason I think this may be important: we connect to a folder where FTP files are dumped by a PowerShell script, and we want to go through that folder with Alteryx and pull and upload files as needed. However, the file that existed in the original input (when we created this workflow) no longer exists, so the visual relationship in Connect is broken as soon as that file is dropped. If there is no tool that references this sort of connection to a directory, the ability to designate a dynamic connection in place of the original file might be a good alternative. We just want people in the future to be able to reference a location, rather than a file that has not existed in a while.

- Connect

- Designer Integration

- User Interface

@OndrejC summarizes Connect as "a state-of-the-art Data Catalog with a social twist".

I define it more broadly as a data analytics social network, a collective intelligence or #datahive...

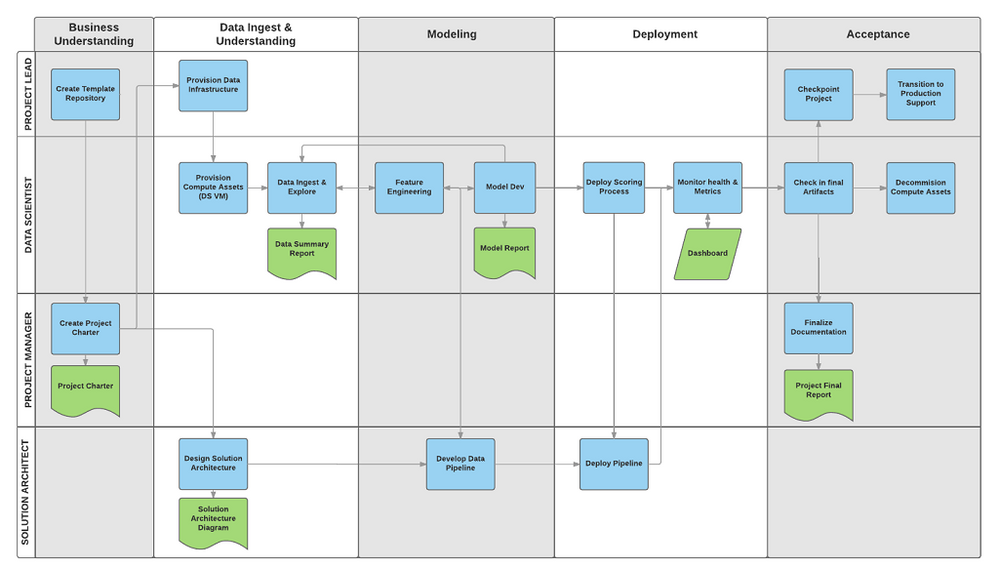

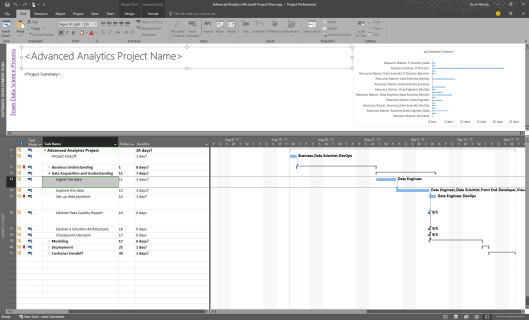

I would propose adding Analytics projects and related documents and the relevant relationship data;

- Project charter,

- Solution approach document,

- Data dictionary,

- Project timeline (a gantt chart etc.)

- Roles & responsibilities

into the picture so that any team can track their Data Science project progress there...

Here is a nice process-flow view of a data science process:

docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/overview

A Microsoft Project view of the Analytics projects at hand...

- Administration

- Connect

- Designer Integration

- User Interface

As per the title: when selecting "Use in workflow", a user should have the option to connect with the In-DB tools when applicable, rather than being stuck with a green Input tool and an ODBC connection. Ditto when searching from the omnibox in Designer.

- Connect

- Designer Integration

A lot of information is not captured when colleagues run numerous SQL queries on servers (Oracle, SQL Server, Azure and others).

Would there be a clever way of capturing and archiving all the queries that are run?

It may be wise to collect these for several reasons:

- SQL code profiling is an important matter, especially for DB admins. You can see the most frequently requested tables and fields, etc.

- You also get a grasp of the most frequent and most time-consuming joins, which helps enhance DB performance.

- You can identify the queries that can be replicated in Alteryx and deployed to a server, so that no business user needs to run SQL code; instead they would be directed to the Gallery.

I found out that a similar feature is now available in SQL Server 2016 --> https://docs.microsoft.com/en-us/sql/relational-databases/performance/monitoring-performance-by-usin...
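The first reason above (seeing which tables are requested most) can be sketched with a simple tally over an archive of captured query texts. This is a deliberately naive illustration, not a SQL parser: the regular expression only picks up the identifier after FROM or JOIN, ignores quoted identifiers, subqueries and CTE names, and the function name is hypothetical.

```python
import re
from collections import Counter

# Identifier (optionally schema-qualified) that follows FROM or JOIN.
TABLE_REF = re.compile(r"\b(?:FROM|JOIN)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)


def table_usage(queries):
    """Count how often each table name is referenced across query texts.

    Names are upper-cased so that differently cased references to the
    same table are counted together.
    """
    counts = Counter()
    for query in queries:
        counts.update(name.upper() for name in TABLE_REF.findall(query))
    return counts
```

Calling `table_usage(archived_queries).most_common(10)` on such an archive would give a first list of the most requested tables; join frequency and timing would need the database's own statistics.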

Clicking the ‘Use in Workflow’ button in Connect downloads a workflow file containing the table, which I can open in Alteryx Designer.

When I open it in Designer, it asks for a user ID and password, but there is no option for SSO (single sign-on).

If I leave the username and password blank then the connection fails and I get an error.

In the case of SSO the connection string should be:

SSO: odbc:Driver={HDBODBC};SERVERNODE=saphXXX.europe.company.com:30415;Trusted_connection=yes;

instead of this:

UseridPW: odbc:Driver={HDBODBC};SERVERNODE=saphXXX.europe.company.com:30415;UID=USERNAME;PWD=__EncPwd1__
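The requested behaviour amounts to choosing between the two connection string shapes above. A minimal sketch, assuming the strings exactly as quoted in this post: the `Trusted_connection=yes` attribute is copied from the post and has not been checked against the SAP HANA ODBC driver documentation, and the function name and parameters are hypothetical.

```python
def hana_odbc_connection_string(server_node, use_sso, username=None, password=None):
    """Build the ODBC connection string in either SSO or user/password form.

    server_node: host and port, e.g. "saphXXX.europe.company.com:30415".
    use_sso:     when True, no credentials are embedded in the string.
    """
    base = f"odbc:Driver={{HDBODBC}};SERVERNODE={server_node};"
    if use_sso:
        # Attribute taken verbatim from the post (an assumption, not verified).
        return base + "Trusted_connection=yes;"
    if not username or not password:
        raise ValueError("username and password are required when SSO is off")
    return base + f"UID={username};PWD={password}"
```

Leaving the credentials blank then becomes an explicit choice of the SSO form rather than a failed connection.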

Please note that ALL of our users use SSO, so the current functionality is useless to us.

I have raised this as a bug with support, but as usual they asked me to post here.

This option should also ask whether the connection should be an In-DB connection, as per this post:

- Connect

- Designer Integration
