Alteryx Designer Desktop Knowledge Base

Planting Seeds: An Introduction to Decision Trees

02-21-2018 01:25 PM - edited 08-03-2021 03:45 PM

Decision trees are algorithms that sort data based on predictor variables. As a predictive model, a decision tree uses observations of predictor variables to draw conclusions about a target variable. Decision trees have a structure similar to that of a flowchart, where each internal node is a test on an attribute and each branch is an outcome of that test. A major benefit of decision trees is that they are relatively straightforward and easy to interpret.

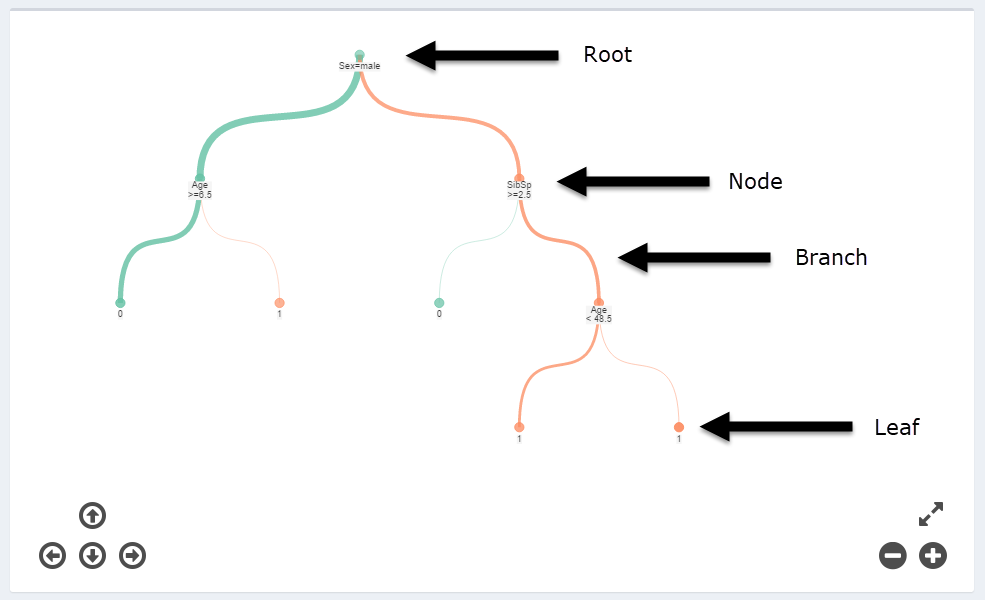

Creating a decision tree involves selecting input variables (one target, and one or more predictors), and creating split points on the predictor variable(s) until an effective tree is constructed. Decision trees are made up of nodes, roots, leaves (sometimes called terminal nodes), and branches.

All points in the decision tree are nodes. The topmost node is the “root” and the terminal nodes are the “leaves”. Each leaf is a classification label for the output variable (y) which is used to make the prediction. The internal (non-terminal) nodes represent decision points that the data are split at. The branches are the outcomes of each test (node).

Decision trees can be applied to categorical or numeric data. When the target variable (what you are trying to predict) is categorical, a classification tree is constructed. When the target variable is continuous, a regression tree is constructed. Classification and regression trees are very similar, but do differ on a few points: most notably how splits (variable thresholds on which the data are divided) are determined.

For both classification and regression trees, variables and split points are chosen using a greedy algorithm: it evaluates one split at a time and picks the locally optimal choice, rather than considering how each split fits into the whole model. The intent of the greedy algorithm is to approximate a globally optimal model by making the best possible choice at each individual split.

Splits in a classification tree are determined either by minimizing a measure of misclassification (known as Gini Impurity) at each split or by creating splits that result in the "purest" daughter nodes (this method is often referred to as Information Gain).

Gini Impurity measures how often a randomly chosen record from the data set used to train the model would be incorrectly labeled if it were randomly labeled according to the distribution of labels in the subset. Gini Impurity reaches zero when all records in a node fall into a single category.
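As a minimal sketch of the definition above, the function below computes Gini Impurity as one minus the sum of squared label proportions. The function name `gini_impurity` and the example label lists are made up for illustration; this is not the code the Decision Tree Tool runs internally.

```python
from collections import Counter

def gini_impurity(labels):
    """Chance that a random record gets a wrong random label: 1 - sum(p_i^2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

# Hypothetical nodes: one pure, one evenly split, one 8-to-4 mix.
pure = ["A"] * 6
even = ["A"] * 3 + ["B"] * 3
mixed = ["A"] * 8 + ["B"] * 4

print(gini_impurity(pure))              # 0.0
print(gini_impurity(even))              # 0.5
print(round(gini_impurity(mixed), 3))   # 0.444
```

A pure node scores zero, and for two labels the score peaks at 0.5 when the node is evenly split.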

Information Gain is an entropy-based information index. In this context, entropy can be thought of as a measure of unpredictability, so a group where each record has the same value would have zero entropy. Information Gain attempts to determine which predictor variables and split thresholds are most useful for determining the target variable by minimizing the entropy of the resulting groups. Information Gain can be thought of as the decrease in entropy after a dataset is split, and is calculated by subtracting the weighted average entropy of the resulting daughter nodes after a split from the entropy of the parent node.

Gini Impurity and Information Gain are very similar in the context of constructing a classification tree. If you are interested in learning more, a paper on the theoretical comparison of Gini Impurity and Information Gain criteria can be found here. Typically, it does not make a significant difference whether you use Gini Impurity or Information Gain to determine splits.

Regression tree splits are most often constructed by minimizing the sum of the squared errors at each split, also known as the Least Squares Criterion. The sum of squared errors is calculated by taking the difference between the value predicted by the split and the actual known value of the training data for each record (this is known as the error or residual), squaring that value, and then summing the squared errors for all training records that pass through the node.
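The least squares idea can be sketched for a single numeric predictor as follows. This is an illustrative toy under stated assumptions, not the algorithm used by any particular tool: the helper names `sse` and `best_split`, the choice of midpoints as candidate thresholds, and the hours/energy data are all made up for the example.

```python
def sse(values):
    """Sum of squared errors around the mean (the value a leaf would predict)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(xs, ys):
    """Try each midpoint between distinct sorted x values.

    Returns (threshold, combined SSE of the two sides); threshold is None
    when no split beats leaving the data unsplit.
    """
    pairs = sorted(zip(xs, ys))
    best = (None, sse(ys))
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # no threshold can separate identical x values
        threshold = (pairs[i][0] + pairs[i - 1][0]) / 2
        left = [y for x, y in pairs if x <= threshold]
        right = [y for x, y in pairs if x > threshold]
        total = sse(left) + sse(right)
        if total < best[1]:
            best = (threshold, total)
    return best

# Hypothetical training data: hours awake vs. energy level.
hours = [1, 2, 3, 10, 11, 12]
energy = [9.0, 8.0, 10.0, 2.0, 3.0, 1.0]
print(best_split(hours, energy))  # (6.5, 4.0)
```

The search is greedy in the sense described earlier: it scores each candidate threshold on its own and keeps the one with the lowest combined error.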

For both classification and regression trees, the tree stops “growing” (i.e. adding split points/nodes to sort the data) based on a stopping criterion, for example, a minimum number of training instances assigned to each leaf and node of the tree.
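To make the stopping criterion concrete, here is a small sketch of a recursive classification tree on one numeric predictor that refuses any split leaving fewer than `min_leaf` records on either side. Everything here (the `grow` function, the nested-dict tree format, the `min_leaf` parameter name, and the sample rows) is an assumption for illustration only.

```python
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def grow(rows, min_leaf=2):
    """rows is a list of (x, label) pairs; returns a nested dict tree."""
    labels = [label for _, label in rows]
    majority = Counter(labels).most_common(1)[0][0]
    best = None
    xs = sorted({x for x, _ in rows})
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [r for r in rows if r[0] <= t]
        right = [r for r in rows if r[0] > t]
        if len(left) < min_leaf or len(right) < min_leaf:
            continue  # stopping criterion: both sides need min_leaf records
        score = (len(left) * gini([l for _, l in left])
                 + len(right) * gini([l for _, l in right])) / len(rows)
        if best is None or score < best[0]:
            best = (score, t, left, right)
    # Stop when no allowed split exists or none reduces impurity.
    if best is None or best[0] >= gini(labels):
        return {"leaf": majority}
    _, t, left, right = best
    return {"threshold": t,
            "left": grow(left, min_leaf),
            "right": grow(right, min_leaf)}

rows = [(1, "a"), (2, "a"), (3, "a"), (4, "b"), (5, "b"), (6, "b")]
print(grow(rows))
# {'threshold': 3.5, 'left': {'leaf': 'a'}, 'right': {'leaf': 'b'}}
```

Raising `min_leaf` to 4 on the same six rows leaves no allowed split, so the tree stays a single leaf.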

To improve the predictive power of the model, decision trees are often "pruned" after construction. In the context of decision trees, pruning refers to the technique used to reduce the size of decision trees by removing sections of the tree that do not contribute significant predictive power to the overall model. The goal of pruning is to remove branches from the decision tree without reducing predictive accuracy, measured against a cross-validation data set. Pruning reduces the overall complexity of the final decision tree model and combats model overfitting, thereby improving overall model accuracy.

What are a few limitations of Decision Tree Models?

Decision trees tend to be less accurate than many other predictive modeling approaches. Decision trees also tend to not be very robust, meaning a small change in training data can equate to a large change in the tree. Due to the nature of greedy algorithms, a globally optimal (best overall possible model) decision tree cannot be guaranteed. Decision trees can also be prone to overfitting, meaning the model performs well on the data it was trained with, but poorly on other data sets.

Many limitations of decision trees have been addressed by ensemble learning, with methods like random forest or boosting. However, these models tend to be less approachable and are often more opaque.

What about strengths?

Decision trees are easy to illustrate, understand, and interpret. They can be applied to both categorical and continuous data, and they are resistant to outliers in a data set as well as to irrelevant predictor variables. Additionally, decision trees allow you to specifically identify the variable thresholds used to sort your data by the target variable.

In Summary

The Decision Tree Tool is one of the most popular predictive tools in Alteryx and the Data Science community as a whole. This is probably because it is a highly approachable and easily understood modeling method. However, there are limitations to this method, and, as with all methods, it is important to understand how the underlying algorithm works, and how modeling decisions might impact your outcome.

Thank you for taking the time to put this together @SydneyF - appreciate you making the effort to bring data science to folk who don't speak statistics or computational math.

Can I clarify understanding on a few bits?

- Classification trees vs. Regression trees: Are you able to give a simple worked example of a regression tree? Would the nodes still give decision points with the leaves being coefficients in some way?

For example: between ages 1 and 10, the energy level decreases linearly with number of hours awake (energy = 1* hours awake) - however after 10 years old we have to factor in exercise and nutrition rather than just hours awake? Am I on the right track? Possibly there is an easy teaching example that you know of to demonstrate this kind of tree?

- Gini vs. Entropy.

For the entropy algo - it sounds like you're saying that the algorithm is very simple - how do I minimize the degree of variety in the child nodes. So if I have one option which splits my M&Ms perfectly into red in one pile and blue in another; or another option which has a slightly messy split, then the entropy algorithm will favour the clean split.

Are you able to give a worked example of a low-entropy and a high-entropy split so that folks can see it, possibly follow along with the calculation and get an intuitive understanding?

Gini is a little more confusing. Could you go through a simple example to demonstrate this? Reason for asking is that all the explanations and links for Gini tend to rely on the formulation of Gini (i.e. execution of the math) - not the meaning that people can take away as understanding (to build the right kind of intuitions over time).

Is Gini impurity a synonym for Entropy (since Gini Impurity is zero if the set is only one category - as is the entropy of that set)?

Thanks @SydneyF

Hi @SeanAdams,

Regression Trees -

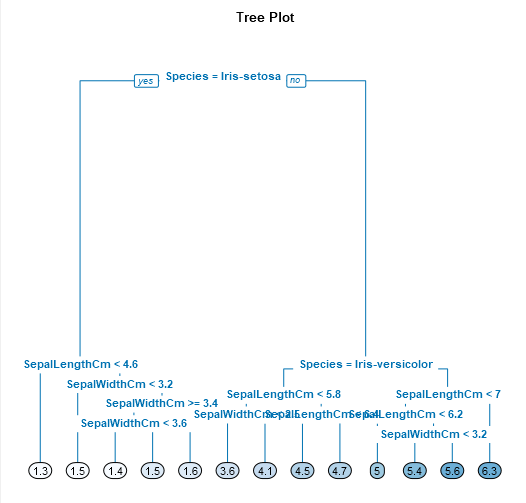

In traditional regression trees, the terminal nodes of the tree are the estimated (predicted) values of the target variable. These values are calculated as the average response (target variable value) of all the records from your training data that were sorted into each leaf (terminal node). Nodes within the tree will function the same way as they do in a classification tree, where each node will act as a decision point to sort data into different branches.

Because the predictions are static for each leaf, the predictions of a regression tree are "jagged" and not truly continuous. Regression trees are more prone to overfitting than classification trees. Decision trees do not make any assumptions about the relationship between variables being linear.
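A rough sketch of why the predictions come out "jagged": with a single split, each side predicts the mean of its own training targets, so the prediction jumps at the threshold instead of changing smoothly. The helper names, the threshold of 6.5, and the hours/energy numbers are hypothetical values chosen for illustration.

```python
def fit_stump(xs, ys, threshold):
    """Return the mean target on each side of the threshold (the leaf values)."""
    left = [y for x, y in zip(xs, ys) if x <= threshold]
    right = [y for x, y in zip(xs, ys) if x > threshold]
    return sum(left) / len(left), sum(right) / len(right)

def predict(x, threshold, left_mean, right_mean):
    """Every record that lands in a leaf gets that leaf's mean."""
    return left_mean if x <= threshold else right_mean

# Hypothetical training data: hours awake vs. energy level.
hours = [1, 2, 3, 10, 11, 12]
energy = [9.0, 8.0, 10.0, 2.0, 3.0, 1.0]
left_mean, right_mean = fit_stump(hours, energy, threshold=6.5)

for x in (2.5, 6.4, 6.6, 11.0):
    print(x, predict(x, 6.5, left_mean, right_mean))
# 2.5 9.0
# 6.4 9.0
# 6.6 2.0
# 11.0 2.0
```

Inputs of 6.4 and 6.6 are nearly identical yet receive very different predictions, which is the step-function behavior described above.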

You might find these lecture notes on Regression trees helpful for understanding this further.

Information Gain -

Your M&M example works well in understanding the intuition of information gain.

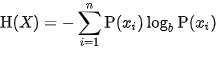

Information gain leverages entropy to determine what splits (nodes) to create in a decision tree, where entropy is the negative sum, over every label (group) in the dataset, of the probability of that label times the base-2 log of that same probability. Effectively, entropy is measuring how unpredictable the label of any individual in a group is. If a group of observations all has the same label, entropy is 0 because the labels are all the same. If a group of observations is perfectly split between two labels, the entropy of that group is 1.

Information gain is the entropy of the distribution of the parent node, minus the entropy of the distributions in the child nodes (calculated as a weighted average over the resulting child nodes, each weighted by its share of the records). The split that results in the largest information gain (equivalently, the smallest weighted child entropy) is selected.

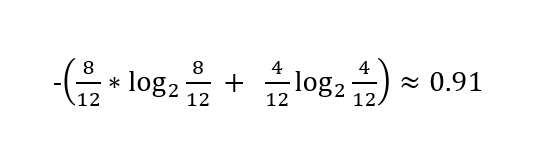

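If we have a dataset of 12 M&Ms, where 8 are green and 4 are blue, the entropy of that dataset can be calculated as:

$$H_{parent} = -\left(\tfrac{8}{12}\log_2\tfrac{8}{12} + \tfrac{4}{12}\log_2\tfrac{4}{12}\right) \approx 0.918$$

If we have a split that results in one daughter node made up of eight green and the other made up of four blue, the entropy of the two splits can be calculated as:

$$H_1 = -\tfrac{8}{8}\log_2\tfrac{8}{8} = 0$$

$$H_2 = -\tfrac{4}{4}\log_2\tfrac{4}{4} = 0$$

$$\tfrac{8}{12}H_1 + \tfrac{4}{12}H_2 = 0$$

The first two equations are calculations of entropy for each daughter node, and the third is the weighted average (which was kind of silly to do, because it's zero, but hopefully it makes the process more clear).

If we have a split that results in one daughter node made up of two green and two blue, and the other node is six green and two blue, the resulting entropy would be:

$$H_1 = -\left(\tfrac{2}{4}\log_2\tfrac{2}{4} + \tfrac{2}{4}\log_2\tfrac{2}{4}\right) = 1$$

$$H_2 = -\left(\tfrac{6}{8}\log_2\tfrac{6}{8} + \tfrac{2}{8}\log_2\tfrac{2}{8}\right) \approx 0.811$$

$$\tfrac{4}{12}H_1 + \tfrac{8}{12}H_2 \approx 0.874$$

So to calculate the information gain:

Split 1: 0.918 - 0 = 0.918

Split 2: 0.918 - 0.874 = 0.044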

Here, we see that the first split was (obviously) better, resulting in a higher information gain. So in this case, the tree would select that split.

There are also helpful worked examples for calculating information gain here and here.
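For anyone who wants to follow along with the M&M calculation, here is a short sketch that computes entropy and information gain from label counts. The function names are made up for this example, and the values are computed without intermediate rounding, so they may differ slightly from hand-rounded figures.

```python
from math import log2

def entropy(counts):
    """Entropy in bits for a list of label counts; empty labels are skipped."""
    total = sum(counts)
    return -sum((c / total) * log2(c / total) for c in counts if c > 0)

def information_gain(parent, children):
    """Parent entropy minus the size-weighted average entropy of the children."""
    total = sum(parent)
    weighted = sum(sum(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted

# Counts are [green, blue].
parent = [8, 4]
clean_split = [[8, 0], [0, 4]]   # all green in one node, all blue in the other
messy_split = [[2, 2], [6, 2]]   # both nodes still mixed

print(round(entropy(parent), 3))                        # 0.918
print(round(information_gain(parent, clean_split), 3))  # 0.918
print(round(information_gain(parent, messy_split), 3))  # 0.044
```

The clean split recovers all of the parent's entropy as gain, while the messy split recovers very little, so the clean split would be chosen.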

Gini Impurity -

Gini Impurity measures how often a randomly chosen record from the data set used to train the model will be incorrectly labeled if it was randomly labeled according to the distribution of labels in the subset (e.g., if half of the records in a group are "A" and the other half of the records are "B", a record randomly labeled based on the composition of that group has a 50% chance of being labeled incorrectly). Gini Impurity reaches zero when all records in a group fall into a single category (i.e., if there is only one possible label in a group, a record will be given that label 100% of the time). This measure is essentially the probability of a new record being incorrectly classified at a given node in a Decision Tree, based on the training data.

I would just think about Gini impurity as the probability of guessing the label of an individual observation wrong, using the overall distribution of the labels in the dataset to do the guessing. The decision tree will select the split where the probability of guessing a label wrong is reduced the most. If a daughter node is mostly the same label, the probability of guessing the label of a randomly selected record incorrectly is reduced.

For a working example of Gini Impurity - I would check out this post. There are nice pictures and they even use green and blue dots as an example.

The difference between information gain and Gini impurity is definitely subtle, which is why it doesn't typically make a difference to use one over the other. However, the formulas used to calculate Entropy and Gini impurity are different:

Entropy:

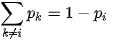

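Gini Impurity:

$$G = 1 - \sum_i p_i^2$$

where $p_i$ is the proportion of records in the group that carry label $i$.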

Entropy is a measure of uncertainty, and Gini impurity is a measure of misclassification.
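To see Gini impurity at work on the same 12 M&Ms (8 green, 4 blue), the sketch below scores the clean and the messy split by the size-weighted impurity of their daughter nodes. The function names are assumptions for illustration, and this is only one simple way to set up the comparison.

```python
def gini(counts):
    """Gini impurity from label counts: 1 - sum of squared proportions."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)

def weighted_gini(children):
    """Average impurity of the daughter nodes, weighted by node size."""
    total = sum(sum(child) for child in children)
    return sum(sum(child) / total * gini(child) for child in children)

# Counts are [green, blue].
parent = [8, 4]
clean_split = [[8, 0], [0, 4]]
messy_split = [[2, 2], [6, 2]]

print(round(gini(parent), 3))                # 0.444
print(round(weighted_gini(clean_split), 3))  # 0.0
print(round(weighted_gini(messy_split), 3))  # 0.417
```

As with information gain, the clean split wins: it drives the impurity to zero, while the messy split barely improves on the parent's 0.444.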

I hope this helps!

@SydneyF - thank you!

This really is superb. Explaining quantitative math and probability to people who don't have this background is really very tough - and so most experts don't make the effort.

This article and your followup do a good job of bringing these tough concepts down to much more practical and concrete examples so that people can come to grips with them using real-world things (without getting lost in jargon or intimidating terms) and develop the intuition about why and how things work without having to memorize formulae. (Given that the internet is great for searching - in my mind the intuition is more important than memorizing the formula if you only had time for one or the other)

Thank you again @SydneyF

PS: there's a good video here that gives a grasp of why Log base 2 is used in the Entropy formula:
