Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello,

This is a popular feature in other tools, such as Talend (now Talaxie): the ability to export the workflow as a vectorized screenshot in SVG.

Why? It helps to build documentation: because SVG is a vector format, the picture can be zoomed in without losing quality.

Of course, this would first require Alteryx to use SVG for icons, as requested here: https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/svg-support-for-icon-comment-image-e...

Best regards

Simon

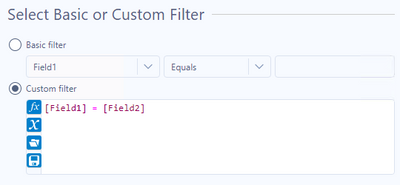

Sometimes I want to set up a filter to compare the values in two fields in my data set. The basic filter option would be much more powerful and configuration would be quicker if this option allowed this.

For example, currently I must use a custom filter to check if Field1 and Field2 are equal:

I would love to have the option to either use a static value in the basic filter (as you can now) or select a field name from a dropdown:

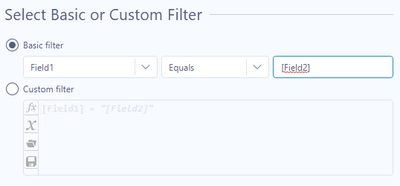

I would like a feature that enables joins on custom conditions. Currently, the Join tool only allows joining by equality of specific fields or by record position; in SQL, however, we can join data on much more flexible conditions, like:

SELECT TableA.id FROM TableA INNER JOIN TableB ON TableA.id=TableB.id and TableA.value > TableB.value

Of course, my idea can easily be achieved by combining the Append Fields and Filter tools, but my point is that Append Fields is quite an expensive operation in terms of computation cost, and it generates many unnecessary records, which is a nuisance when handling a huge dataset.
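To illustrate the cost difference, here is a minimal Python sketch (not Alteryx code) of how such a join could avoid the full Cartesian product: it matches records on the equality key first and only then evaluates the extra condition on the matching pairs. The function name `conditional_join` and the sample tables are hypothetical and only mirror the SQL above.

```python
from collections import defaultdict


def conditional_join(left, right, key, condition):
    """Join on equality of `key`, then keep only pairs satisfying `condition`.

    Only records sharing the same key value are ever paired, so the full
    Cartesian product (Append Fields + Filter) is never materialized.
    """
    index = defaultdict(list)
    for right_row in right:
        index[right_row[key]].append(right_row)

    joined = []
    for left_row in left:
        for right_row in index.get(left_row[key], []):
            if condition(left_row, right_row):
                joined.append((left_row, right_row))
    return joined


# Hypothetical sample data standing in for TableA and TableB.
table_a = [{"id": 1, "value": 10}, {"id": 2, "value": 5}]
table_b = [{"id": 1, "value": 3}, {"id": 2, "value": 9}, {"id": 1, "value": 20}]

# Equivalent of: ON TableA.id = TableB.id AND TableA.value > TableB.value
pairs = conditional_join(table_a, table_b, "id", lambda a, b: a["value"] > b["value"])
matching_ids = [a["id"] for a, _ in pairs]
```

With this sample data, only the pair with id 1 (10 > 3) survives; the other candidate pairs are rejected by the extra condition without ever pairing records that have different ids.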

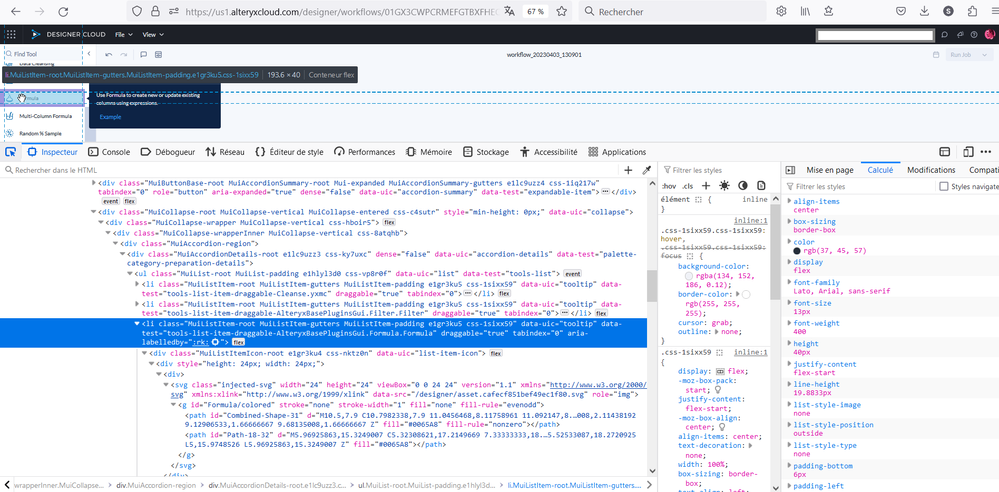

I suppose this kind of flexible condition could be specified using an expression editor, so the configuration window for this feature would look like the image below: one more radio button option, plus an expression editor similar to the one used in the Filter tool.

Any positive/negative feedback on my idea would be appreciated. Thank you for your attention!

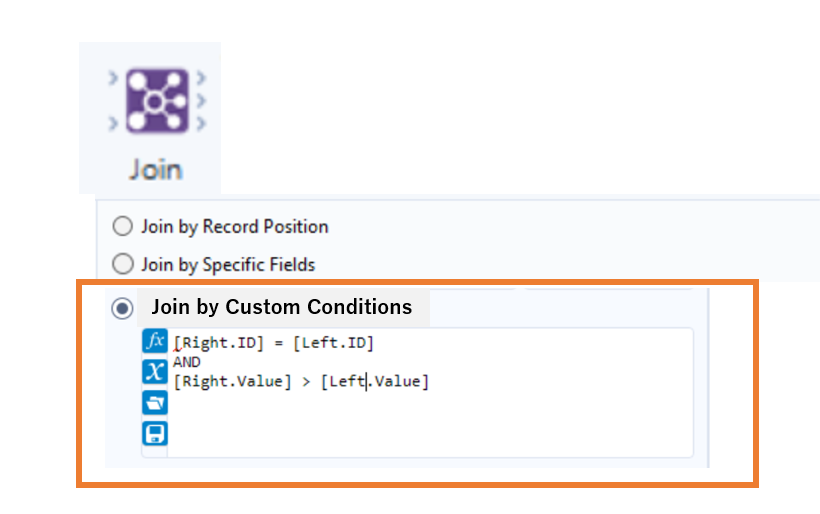

Hello,

I work with Dataiku DSS, and it has a cool feature: you can tag tools or parts of a workflow, and then highlight the tagged tools.

Best regards,

Simon

The Email tool does not send out e-mails after an error has occurred in the workflow. While this is usually a good thing, it would sometimes be helpful to be able to send out e-mails in case of errors as well.

In particular, I want to send out an e-mail with a detailed and formatted custom error message.

Thus, please add a check box "Also send mail in case of errors" which is off by default.

Side note: The Event "Send mail After Run With Errors" does not work for me because it is too inflexible. Just sending out the OutputLog is not helpful because the error message might be hidden after hundreds of rows.

Idea: “Create THEN Append” Output Mode for Files and Databases

When outputting data in Alteryx—whether to an Excel file or a database table—the standard practice is:

First run: Set the output tool to “Create New Sheet” or “Create New Table.”

Subsequent runs: Manually change the setting to “Append to Existing.”

This works fine, but it’s very easy to forget to switch from "Create" to "Append" after the first run—especially in iterative development or when building workflows for others.

Suggested Enhancement:

Add a new option to the Output Data tool called:

“Create THEN Append”

Behavior:

On the first run, it creates the file/sheet or table.

On future runs, it automatically switches to append mode without needing manual intervention.
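The behavior above could be sketched in Python as follows, using a CSV file as the target. This is only an illustration of the idea, not how the Output Data tool works internally; the function name `write_create_then_append` is made up for this example.

```python
import csv
import os


def write_create_then_append(path, fieldnames, rows):
    """Create the file with a header on the first run, append on later runs."""
    # Treat a missing or empty file as "first run".
    is_first_run = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        if is_first_run:
            writer.writeheader()
        writer.writerows(rows)
```

Calling the function twice with the same path produces one header row followed by the rows from both runs, with no manual switch between "create" and "append".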

Why This Matters:

Prevents data loss from accidentally overwriting files/tables.

Improves automation and reusability.

Makes workflows more reliable when shared with others.

Mirrors functionality found in many ETL tools that allow dynamic "upsert-like" behavior.

Applies To:

Excel outputs (new sheet creation vs. append)

Database outputs (new table vs. append to existing)

CSV or flat file outputs where structure remains consistent

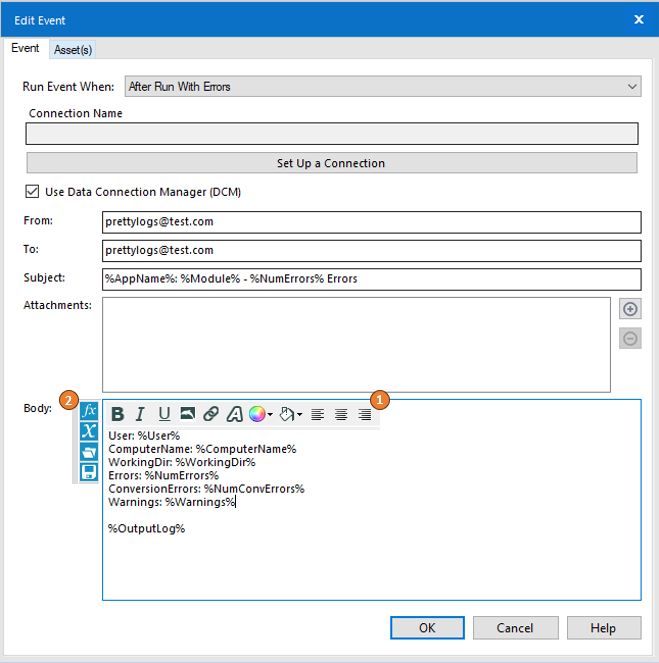

Everyone knows the importance of adding the appropriate controls and governance to your workflows - and often, this means including events that will generate notifications if a workflow is running with errors.

But who is the audience of that email? If it's not a developer, will that person know what they are reading and where to focus?

How about a developer that would like to customize the message that the end user will receive?

Porting some existing functionality from other tools in the Alteryx toolkit to the Events page could easily provide added flexibility to event generation:

1) Add a formatting bar to the tool, as shown in the image below

-- Style changes

-- Alignment

-- Highlighting

-- Coloring

-- Images

2) Add a function bar to the tool, as shown in the image below

-- Ability to view all available variables

-- Ability to apply formulas using variables

-- Ability to save formulas

What do you think? Give this post a thumbs up if you find the post helpful!

Hello,

This is a feature I haven't seen in any data preparation/ETL tool. The core feature is to detect the unique key in a dataframe. More often than not, you have to deal with a dataset without knowing what makes a row unique. This can lead to misinterpreting the data, Cartesian products at join time, and other funny stuff.

How do I imagine that ?

a specific tool in the Data Investigation category

Input: one dataframe, with the ability to select fields or check all, and the ability to specify a maximum number of fields per combination (empty or 0 = no maximum).

Algorithm: it compares the distinct count of every combination of fields with the row count.

Result: one row per field combination that works. If there is no result: "no field combination is unique. check for duplicate or need for aggregation upstream".

ex :

| order_id | line_id | amount | customer | site |
|---|---|---|---|---|
| 1 | 1 | 100 | A | U_250 |
| 1 | 2 | 12 | A | U_250 |
| 1 | 3 | 45 | A | U_250 |
| 2 | 1 | 75 | A | U_250 |
| 2 | 2 | 12 | A | U_250 |
| 3 | 1 | 15 | B | U_250 |
| 4 | 1 | 45 | B | U_251 |

The user selects every field except Amount (they know that Amount would make no sense in a key).

The algorithm will test the following keys:

-each separate field

-each combination of two fields

-each combination of three fields

-each combination of four fields

to match the number of rows (7).

And it returns something like this:

| choice | number of fields | field combination |
|---|---|---|
| very good | 2 | order_id, line_id |
| average | 3 | order_id, line_id, customer |
| average | 3 | order_id, line_id, site |
| bad | 4 | order_id, line_id, site, customer |
| … | … | … |
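As a rough illustration of the algorithm described above, here is a small Python sketch that tests every field combination against the row count. The function name `find_unique_keys` and the `max_fields` parameter are hypothetical names chosen for this example; the sample rows are the ones from the table above, without the amount field.

```python
from itertools import combinations


def find_unique_keys(rows, fields, max_fields=0):
    """Return the field combinations whose distinct count equals the row count.

    max_fields=0 means no limit on the number of fields per combination.
    """
    limit = min(max_fields, len(fields)) if max_fields else len(fields)
    total_rows = len(rows)
    unique_keys = []
    for size in range(1, limit + 1):
        for combo in combinations(fields, size):
            distinct = {tuple(row[field] for field in combo) for row in rows}
            if len(distinct) == total_rows:
                unique_keys.append(combo)
    return unique_keys


# Sample data from the example above (amount excluded by the user).
orders = [
    {"order_id": 1, "line_id": 1, "customer": "A", "site": "U_250"},
    {"order_id": 1, "line_id": 2, "customer": "A", "site": "U_250"},
    {"order_id": 1, "line_id": 3, "customer": "A", "site": "U_250"},
    {"order_id": 2, "line_id": 1, "customer": "A", "site": "U_250"},
    {"order_id": 2, "line_id": 2, "customer": "A", "site": "U_250"},
    {"order_id": 3, "line_id": 1, "customer": "B", "site": "U_250"},
    {"order_id": 4, "line_id": 1, "customer": "B", "site": "U_251"},
]

keys = find_unique_keys(orders, ["order_id", "line_id", "customer", "site"])
```

On this data the sketch finds four combinations, all containing order_id and line_id, with the two-field key listed first. Note that the number of combinations grows exponentially with the number of fields, which is why a maximum number of fields per combination is useful.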

Best regards,

Simon

For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows in order to schedule a workflow on Alteryx Server and avoid building batch MACROs.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC path and cannot be leveraged for OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools do not have a dynamic input or output component to them.

- Users have to build workarounds and custom macros for a common problem/challenge.

- Users have to map the OneDrive folders to their machine (and server if published to the Gallery)

- This option generates a lot of maintenance, especially on Server, to free up space consumed by the local version when outputting the data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read a folder within OneDrive, with the option to include or exclude subfolders

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read Author

- Ability to read last modified by

- Ability to generate the specific web path for the files

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the output from the workflow to a specific directory, as individual files in the same location

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

Hello all,

This is a very interesting feature of the List Box and Drop Down interface tool : the ability to select fields

However such a feature is not available for in-database, highly limiting the use of macros.

Please change.

Best regards,

Simon

Hello all,

As of now, you have two very distinct kinds of connection :

-in memory alias

-in database alias

It turns out that every single time I use an in-database alias, I have to create the same one for in-memory, since some operations cannot be performed in-database (such as pre-SQL or interface tools).

What does that mean for us :

-more complex settings operations/training/tests

-inefficient workflows that have to deal with two kinds of alias.

What I propose :

-a single "connection alias" that can be used for both in-db and in-memory,

-one place to configure

-whether it runs in-db or in-memory depends on the tools you use

Best regards,

Simon

Additional Dynamic Select Mode for All Native (Non-Macro) Tools with Select Functionality (with or without Data Type Selection)

This is the updated version of an idea I posted a while ago (which only included Multi-Field Formula), and after the release of Alteryx Designer 2025.1, which I found to be very successful from a new tool and functionality perspective, I decided to post about it.

My proposal is to add the Dynamic Select functionality* (at least the Select via a Formula mode) to all native (non-macro) tools, in all tool categories, that include a Select functionality. It would be offered as an alternative mode: in tools such as Join and Append Fields, the user would accept not being able to also change the field types of the selected fields; the opposite would apply to Multi-Field Formula, where the user could dynamically select which fields the formula is applied to, in addition to changing the data type. The tools include, but are not limited to, the following (to account for any new tool with a Select functionality that might be added in the future):

Preparation Category

- Auto Field

- Data Cleanse Pro (added in 2025.1)

- Multi-Field Formula

- Multi-Row Formula (for Group By option)

- Rank (for Group By option)

- Record ID (for Group By option)

- Sample (for Group By option)

- Tile (for Group By option)

- Unique

Join Category

- Append Fields

- Find Replace

- Join

- Join Multiple

Transform Category

- Arrange

- Cross Tab

- Make Columns (for Grouping Fields (Optional) option)

- Running Total (for both Group By (Optional) and Create Running Total options)

- Transpose (for both Key Columns and Data Columns options, the tool would generate an error if the Dynamic Select formula written for both options are selecting the same field(s), as the Transpose tool is not supposed to allow it)

- Weighted Average (for Grouping Fields (Optional) option)

In-Database Category

- Select In-DB

Reporting Category

- Layout (for Group By and Per Column Configuration options)

- Table (for Group By and Per Column Configuration options)

Machine Learning Category

- Transformation (for Select Features mode only, as the other two modes with Select functionality (Clean Up Missing Values and One Hot Encoding) require Method and Missing Category Action specification)

Developer Category

- Download (for And values from these fields option present in Headers and Payload tabs)

- Dynamic Rename (for the Select functionality present in Formula mode)

Spatial Category

- Find Nearest

- Spatial Info

- Spatial Match

Data Investigation Category

- Pearson Correlation

Skipping Address and Demographic Analysis categories as they have tools that seem to be using a static input, therefore not requiring a Dynamic Select functionality.

Laboratory Category

- JSON Build (for Grouping Fields (Optional) option)

- Transpose In-DB (with a similar logic to the regular Transpose tool found in Transform category)

*The Dynamic Select functionality added to tools that have more than one input anchor (such as Join and Join Multiple) could expose additional fields that users can utilize, such as:

- [Origin] (can have the values "L" or "R" for Join and Append tools)

- [Connection_ID] (can have the values 1, 2, 3 etc. for Join Multiple tool)

- [Unknown] (can have the values "True" or "False" for the Data Columns option of the Transpose tool, or any other tools such as Join that would have the Dynamic or Unknown Columns option as a part of their Select functionality)
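To make the proposed extra fields more concrete, here is a toy Python sketch of selecting fields through a formula-like predicate over field metadata. Everything in it is an assumption made for illustration: the `dynamic_select` function, the metadata layout, and the `Origin` attribute (which stands in for the proposed [Origin] field) do not correspond to an existing Alteryx API.

```python
def dynamic_select(fields, predicate):
    """Return the names of the fields for which the predicate is true."""
    return [field["Name"] for field in fields if predicate(field)]


# Hypothetical metadata for the fields arriving at a Join tool.
join_fields = [
    {"Name": "order_id", "Type": "Int32", "Origin": "L"},
    {"Name": "amount", "Type": "Double", "Origin": "L"},
    {"Name": "Right_order_id", "Type": "Int32", "Origin": "R"},
    {"Name": "customer", "Type": "V_String", "Origin": "R"},
]

# Keep every field from the left input, plus string fields from the right input.
selected = dynamic_select(
    join_fields,
    lambda field: field["Origin"] == "L" or field["Type"] == "V_String",
)
```

Because the selection is a rule rather than a fixed list of checkboxes, new fields arriving from the left input would be picked up automatically.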

Hello all,

A few years ago, I asked for SVG support in Alteryx (https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/svg-support-for-icon-comment-image-e... ). Now there is Alteryx Designer Cloud, with other icons... already in SVG!

So I think it would be great to harmonize the icons between Designer and Cloud.

Best regards,

Simon

To increase performance on some old business logic, I am trying to switch an existing system to In-DB tools. This has given me a lot of headaches because there is no Multi-Field Formula tool in the In-DB section. It is very tedious to go through every workflow and manually set the same regex for a table with more than 20 fields.

I have thought about implementing such a tool myself, but I think it could be helpful for other Alteryx Designer Desktop developers too, so I am bringing it up here.

The idea is for the new In-DB Multi-Field Formula tool to take an approach similar to the existing tool in the Preparation category.

Idea

I would like a feature that shows which version of Alteryx Designer Desktop each user in an organization is running.

Some usage data for each user, such as 'Last Used', is already available in the License Portal. If the 'Version of Alteryx Designer Desktop' for each user were also available there, it would be easier to manage and would strengthen governance in the organization.

Background

When an organization uses both Alteryx Server and Designer Desktop, it is challenging to keep the versions of these products aligned.

We frequently see our users install or upgrade to a newer version of Alteryx Designer than that of Alteryx Server, which causes incompatibility issues when interacting with Alteryx Server.

Although we instruct our users to install a particular version, they sometimes upgrade to a newer version later on by themselves, and this is not detectable.

In other words, even if they are using the wrong version of Alteryx Designer Desktop, we won't realize it until a problem occurs.

In order to identify such users and correct their version, administrators should be able to see which version each user is running whenever needed.

In my opinion, the License Portal would be one of the best places to make that information available.

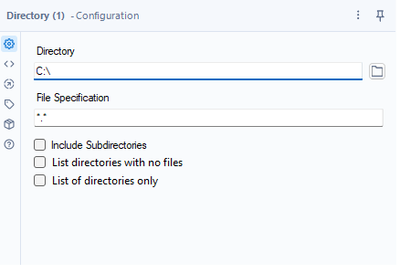

Hi everyone,

Add two additional features to a directory tool. Something like this:

Use cases:

1. Since it is not possible to use a folder browse on the Gallery, this could help a basic user create a list of possible folders to select from with the help of a drop-down

2. Directory analysis for cleaning purposes - currently, if you want to get a list of the folders with Alteryx, it takes forever for big file servers since Alteryx is mapping all the files

Both are achievable today through regex or a bat script.

Thank you,

Fernando Vizcaino

Description:

Currently, when running a workflow in Alteryx that writes output to a file (e.g., Excel), if the target file is already open (such as being open in Excel), the workflow will proceed through all steps and only fail at the very end when it attempts to write to that file.

Problem:

This behavior is inefficient and frustrating, especially with large workflows. You only find out there's a problem after all the upstream processes have already run. It wastes time and compute resources, and it can also be confusing for less experienced users.

Suggested Improvement:

Alteryx Designer should prevalidate output file accessibility at the beginning of workflow execution. Specifically:

Check if the output files are locked or otherwise unavailable before starting the run.

If any output file cannot be written to, immediately halt the workflow with a clear, early warning.

Optionally, display a prompt listing which outputs are unavailable and why.

Benefit:

This enhancement would save users significant time, reduce confusion, and improve user experience, especially in development or iterative testing workflows. It aligns with good design principles by failing fast and providing immediate feedback.
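The fail-fast check could look something like the following Python sketch. It is only an assumption about how such a pre-check might be done, not a description of Designer internals, and the function name `find_unavailable_outputs` is made up. The sketch relies on the operating system refusing to open a locked file for writing (on Windows, a workbook open in Excel typically triggers a permission error).

```python
import os


def find_unavailable_outputs(paths):
    """Return {path: reason} for every output that cannot currently be written."""
    problems = {}
    for path in paths:
        try:
            if os.path.exists(path):
                # Opening in append mode leaves the existing content untouched,
                # but fails if the file is locked or read-only.
                with open(path, "a"):
                    pass
            else:
                # The file will be created later, so the folder must exist.
                parent = os.path.dirname(os.path.abspath(path))
                if not os.path.isdir(parent):
                    raise FileNotFoundError(f"output folder does not exist: {parent}")
        except OSError as error:
            problems[path] = str(error)
    return problems
```

A run would call this with the list of configured output paths before executing any tool, and stop immediately with the returned reasons if the dictionary is not empty.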

Hello all,

As of today, when you want to retrieve or create a file on Apache Spark for Databricks, you have only two choices : CSV and Avro

However, the Parquet file type is clearly missing:

-it's faster

-it's better for storage

-it's standard and already supported as input/output of Alteryx or for HDFS so doesn't seem hard to add here.

Best regards,

Simon

In the regex tool, there is a checkbox called "copy unmatched text to output".

Unfortunately, if you are using regex from within the formula tool, this is not an option. It would be helpful if this could be added as an optional parameter in the regex formula i.e:

REGEX_Replace(String, pattern, replace, icase=1, unmatched=1)

Without this, regex outputs can sometimes be confusing, as string characters not specified by the pattern (unmatched) appear in the output. This confusion would be alleviated with the optional parameter.
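To show the difference the proposed parameter would make, here is a small Python sketch using the standard `re` module. It is an analogy under assumptions, not Alteryx's implementation: the function `regex_replace` and its `copy_unmatched` argument are hypothetical stand-ins for the suggested `unmatched` parameter.

```python
import re


def regex_replace(text, pattern, replace, icase=True, copy_unmatched=True):
    """Replace matches of `pattern`; optionally drop the text that did not match."""
    flags = re.IGNORECASE if icase else 0
    if copy_unmatched:
        # Text outside the matches is copied to the output unchanged.
        return re.sub(pattern, replace, text, flags=flags)
    # Only the replacement of each match is kept; everything else is dropped.
    return "".join(
        match.expand(replace) for match in re.finditer(pattern, text, flags=flags)
    )


kept = regex_replace("id=42; name=bob", r"(\d+)", r"<\1>")
dropped = regex_replace("id=42; name=bob", r"(\d+)", r"<\1>", copy_unmatched=False)
```

Here `kept` is `id=<42>; name=bob`, while `dropped` is just `<42>`, which is the kind of output the optional parameter would make available from within a formula.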

Hello,

As of today, DCM is great for storing credentials. But once we want to go deeper technically, such as using macros or applications, it's really bad. One of the things I hate is that we can't retrieve any information from the DCM connection, just the id. That is not good for logs, and really bad for understanding the workflow or building conditional logic based on the connection type or name.

Here is an example:

Nice, I managed to retrieve an id, but I have no idea what it means: what kind of connection is it? What is its name?

Best regards,

Simon
