Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
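As a rough illustration of the balanced split requested above, here is a minimal Python sketch. It is not how the Image Recognition tool works internally; the function name `balanced_sample` and the `(path, label)` input shape are assumptions made for this example. It downsamples every label to the size of the smallest one.

```python
import random
from collections import defaultdict

def balanced_sample(items, seed=0):
    """Return a subset of (path, label) pairs with the same count per label.

    Hypothetical helper: every label is downsampled to the size of the
    smallest class, so no label dominates the training data.
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    if not by_label:
        return []
    # The smallest class sets the per-label quota.
    quota = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    sample = []
    for label, paths in sorted(by_label.items()):
        for path in rng.sample(paths, quota):
            sample.append((path, label))
    return sample
```

Downsampling throws images away; with very uneven classes, oversampling the small classes may be the better trade-off.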

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Would like to see a connector (similar to existing AWS S3 connectors) for Google Cloud storage. I need to download data from the Developer Play console, and they provide a mechanism for accessing the csv data files directly:

https://support.google.com/googleplay/android-developer/answer/6135870

If we had an Input Data connector to browse and select CSV files from Google Cloud (either specifically or dynamically), that would make it much easier than running it via a Python script.

Currently, a password that contains a ; or a | can inject into the OLEDB/ODBC connection string for a server that requires a password. There may be other characters that cause this as well; these are the ones our company has found so far.

This lowers the security of passwords for our other systems, by limiting what characters we can use.
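For context, ODBC connection strings have a general quoting convention: a value containing special characters can be wrapped in braces, with any closing brace doubled. The Python sketch below shows that convention only. Whether Designer honors it is an assumption that would need testing, the helper names are hypothetical, and the `|` character is not covered because it is not part of the ODBC convention.

```python
def quote_odbc_value(value):
    """Wrap a value in braces when it contains ODBC-special characters.

    Sketch of the generic ODBC convention; a closing brace inside the
    value is escaped by doubling it.
    """
    if any(ch in value for ch in ";{}=") or value != value.strip():
        return "{" + value.replace("}", "}}") + "}"
    return value

def build_connection_string(parts):
    """Join key/value pairs into a single connection string."""
    return ";".join(f"{key}={quote_odbc_value(val)}" for key, val in parts.items())

# Hypothetical credentials: the semicolon stays inside the password value.
print(build_connection_string({"UID": "user", "PWD": "pa;ss"}))
```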

I am bringing this over from this post.

It would be really cool to have a workflow that you could configure to your server that we could schedule to pull down the new Cass updates and install them. Since they have to be reinstalled every two months, it would help to manage that.

I think that the data updates are set up with an FTP site, and Cass could be done essentially the same way: download it there and then use the command module to run the install. I may be oversimplifying the process, but it seems like this is something that Alteryx could tackle.

The ‘Existing File Action’ configuration setting needs to address the situation where columns change.

Currently the following options exist, with instruction as follows at https://help.alteryx.com/20213/designer/microsoft-power-bi-output-tool:

There are two expectations when using this tool:

1. Reloads are built to completely replace the contents of a dataset, i.e. an Append is not being performed

2. Columns will change over time with continued development

There is therefore a need for an ‘Overwrite (update columns)’ option. However, when this Existing File Action is used, it updates the column names but does not delete the existing contents before upload. The new data is therefore appended to the existing data, just under the new column names.

I acknowledge that the instructions do not say that existing rows are deleted.

This leaves the need to perform a workaround:

1. Publish with ‘Overwrite (update columns)’

2. Publish (immediately after) with ‘Overwrite (keep existing columns)’

If step 2 is not done, data will be appended, which would lead to duplication issues.

Either these Existing File Actions need to be renamed to be clearer as to their operation, or preferably an option that updates the columns and sets up new (non-appended) data is required.

This is a problem I'm currently trying to solve, and I feel like I'm spending way too much time on it.

I have a data set which contains data in multiple languages, and I only want English values. I was able to get rid of the words with non-English letters with a little regular expression and filtering. However, there are some words that contain only English letters but aren't English. What I'm trying to do is bring in an English dictionary to compare words and see which rows have non-English words according to the dictionary. However, this is proving to be a bit harder than I thought. I think I can do it, but it feels like this should be much simpler than it is.

It would be great to have a tool that would run a "spell check" on fields (almost all dictionaries for all languages are available free online). This could also be useful just for cleaning up open-text data where people type stuff in quickly and don't re-read it! 🙂
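To make the dictionary comparison concrete, here is a minimal Python sketch of the idea. It assumes the dictionary has already been loaded into a set of lowercase words, and the function names are made up for this example. It is not an existing Alteryx tool.

```python
import re

# Words are runs of ASCII letters and apostrophes; everything else separates them.
WORD = re.compile(r"[A-Za-z']+")

def non_dictionary_words(text, dictionary):
    """Return the words in text that are missing from the dictionary set."""
    return [word for word in WORD.findall(text) if word.lower() not in dictionary]

def english_rows(rows, dictionary):
    """Keep only the rows where every word is found in the dictionary."""
    return [row for row in rows if not non_dictionary_words(row, dictionary)]

# Tiny stand-in dictionary; a real one would be loaded from a word list file.
dictionary = {"the", "red", "house"}
print(english_rows(["the red house", "la casa roja"], dictionary))
```

A strict all-words test like this also rejects names and typos, so a real "spell check" tool would probably need a tolerance threshold per row.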

Hi there,

The Snowflake documentation only refers to connection strings which use a DSN. For example, the Snowflake | Alteryx Help page gives the connection string as odbc:DSN=Simba_Snowflake_JWT;UID=user;PRIV_KEY_FILE=G:\AlteryxDataConnectorsTeam\OAuth project\PEMkey\rsa_key.p8;PRIV_KEY_FILE_PWD=__EncPwd1__;JWT_TIMEOUT=120

However, for canvasses which need to be productionized on Alteryx Server, it is critical to use DSN-less connection strings so that the canvasses can be deployed and run on any worker node without having to set up DSNs on every worker node.

A DSN-less connection string looks like this:

ODBC:DRIVER={SnowflakeDSIIDriver};UID=UserName;pwd=Password;WAREHOUSE=compute_wh;SERVER=server.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE

Please could you consider updating the help texts to provide and describe a DSN-less connection string as well as the DSN-driven connections?

Many thanks

Sean

Often I need to add filters or other tools early in a workflow after it has already been mostly built. If a tool connects to only one other tool, I can drag the filter over the connecting line and add the filter seamlessly. However, in large workflows there is often this situation:

The Filter will only connect to one of the lines I'm hovering over. It would be awesome if I could connect to all lines simultaneously and drop in the tool to achieve this:

Environment variables act as a shortcut so that different computers can be configured in different ways, but a particular path will still point to the right place.

For example if you open up explorer and go to %TEMP%\ - you will open up whichever folder is set up as Temp on this machine. This is super useful so that you can use a particular logical folder without knowing the actual placement on every machine (for example the Windows Directory)

This works partially in the Directory / input - when you put in the environment variable, it is able to search possible subdirectories (screenshot 1) but it does not work once you run the workflow (screenshot 2).

It seems as if the designer hits the Windows API directly, but it does not work within the engine.

Please could you alter the engine to be able to make full use of the environment variables on the machine in question in the directory path or input tool path?
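The expansion being requested can be sketched in a few lines of Python. This illustrates the expected behavior only and is not the engine's implementation; the function name `expand_percent_vars` is hypothetical. It replaces `%NAME%` tokens from a mapping of environment variables and leaves unknown names untouched.

```python
import os
import re

# Matches Windows-style tokens such as %TEMP% or %ProgramFiles(x86)%.
_PERCENT_VAR = re.compile(r"%([A-Za-z_][A-Za-z0-9_()]*)%")

def expand_percent_vars(path, env=None):
    """Expand %NAME% tokens in a path using the given environment mapping.

    Lookups are case-insensitive, as on Windows; unknown names are kept
    as-is so that a missing variable is easy to spot.
    """
    env = os.environ if env is None else env
    lookup = {key.upper(): value for key, value in env.items()}

    def replace(match):
        return lookup.get(match.group(1).upper(), match.group(0))

    return _PERCENT_VAR.sub(replace, path)

# Example with an explicit mapping instead of the real environment.
print(expand_percent_vars(r"%TEMP%\input.csv", {"TEMP": r"C:\Users\me\AppData\Local\Temp"}))
```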

Hello all,

Despite a few limitations, Alteryx is great when you work with full tables (i.e. when you rewrite the table entirely). But in real life, very few workflows work like that:

Here are some real life use cases that should be easy to deal with on Alteryx :

- delta on a key

- delta on a key + last record based on a date

- update records

- start_date and end_date for a value

etc
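Two of the use cases above, "delta on a key" and "last record based on a date", can be sketched in plain Python as follows. The field names `id` and `updated` and both function names are assumptions for this example; dates are assumed to be ISO strings, which sort correctly as text.

```python
def latest_per_key(records, key="id", date="updated"):
    """Keep only the most recent record for each key value."""
    latest = {}
    for rec in records:
        current = latest.get(rec[key])
        if current is None or rec[date] > current[date]:
            latest[rec[key]] = rec
    return latest

def split_delta(existing, incoming, key="id", date="updated"):
    """Classify incoming records as inserts or updates against the existing table."""
    old = latest_per_key(existing, key, date)
    new = latest_per_key(incoming, key, date)
    inserts = [rec for k, rec in new.items() if k not in old]
    updates = [rec for k, rec in new.items() if k in old and rec != old[k]]
    return inserts, updates
```

With the two lists in hand, the target table only needs the inserts appended and the updates applied, rather than a full rewrite.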

Best regards,

Simon

Hi,

Carlson Companies is moving to a Vertica environment and it would be great if that was supported with the In-database tools. That would definitely help and expand the use of Alteryx at our company!

Thanks,

Tyler Mittelstadt

In order to speed up our workflows (which are very heavily tied to databases and DB queries) it would be valuable to be able to inspect the actual queries which were run against the SQL server so that we can index to optimize these queries directly in SQL Enterprise Manager (or the same on any DB platform - we have the same problem on DB2)

The idea would be to have a simple screen where I can run the workflow with a SQL profiling turned on, and then capture the output of either the entire workflow (grouped by connection so that I can tune one database with only the queries that apply) or a specific component on the canvas.
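The "capture queries grouped by connection" idea can be illustrated with Python's built-in `sqlite3` module, used here purely as a stand-in for SQL Server or DB2. Nothing below is an Alteryx API; the connection name `sales` and the helper `open_profiled` are made up for the sketch.

```python
import sqlite3
from collections import defaultdict

def open_profiled(name, log):
    """Open an in-memory database whose executed SQL is recorded under name."""
    conn = sqlite3.connect(":memory:")
    # sqlite3 calls the callback with the text of each statement it runs.
    conn.set_trace_callback(lambda sql: log[name].append(sql))
    return conn

# One log shared by all connections, grouped by connection name.
log = defaultdict(list)
sales = open_profiled("sales", log)
sales.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
sales.execute("SELECT id FROM orders WHERE amount > 100").fetchall()

for name, statements in log.items():
    print(name, statements)
```

A grouped log like this is what would let a DBA tune one database using only the queries that actually hit it.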

I appreciate that this is not something that would be required by a fair population of your users - but I'm sure that this will be helpful for any enterprise / corporate customers.

Thank you

Sean

I often use user constants in my workflows and ever since the Workflow tab has been buried under the Canvas tab in the Workflow Configuration, I often forget to adjust my constants. Out of sight, out of mind.

My suggestion would be to create a tool that could be placed on the canvas with the constant name in the title area and a text box which shows the current value and allows the user to change the value before processing.

I would like to see documentation around the Wildcard options for the Event Email triggers. Some of the options I would like to see include:

1. FileName (not the directory location, for the Subject Line)

2. Specific Message Type Log (Errors Only, Warning Only, etc)

3. Total Runtime

4. Workflow Version # (From Gallery)

5. Alteryx Created Version

6. Files Created (Full Directory location so it is clickable)

Currently there are the following fields available:

"Alteryx": %AppName%

Full Directory Location: %Module%

User: %User%

Computer Name: %ComputerName%

Working Directory : %WorkingDir%

Error Count: %NumErrors%

Conversion Errors Count : %NumConvErrors%

Warning Count : %Warnings%

Full Log: %OutputLog%

(originally raised as discussion : https://community.alteryx.com/t5/Data-Sources/Input-Data-Tool-Record-Limit-control/td-p/58718)

Hi all,

When using a record limit on a database query - the actual query being executed on the server depends very much on the connection type (Native SQL; OleDB; and ODBC). However, for all 3 of these, it seems that the record limit is being enacted on the client side, not on the server. What I mean by this is that when I take the exact queries that are being run by Alteryx on the server (by looking at a SQL Profile trace on the server), and run these in a query window, you can see that the row-limit is not occurring in SQL, but in Alteryx.

(to test this, I ran several queries with and without the record limit; profiled them using SQL profiler; and the profile trace was identical either way)

Aside from putting "Select top(100) from..." in all the queries that we create, or using in-DB queries for every simple query, could we instead have an option to force the row limitation down to the server on a regular InputData tool, so that we can take advantage of the server's ability to optimize?
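As a sketch of what pushing the limit to the server could look like for SQL Server, the Python helper below wraps an arbitrary query in a `SELECT TOP (n)`. The function name is hypothetical, the approach is an assumption rather than anything Designer does today, and other databases would need their own syntax (for example `LIMIT` or `FETCH FIRST`).

```python
def push_limit_sql_server(query, limit):
    """Wrap a query so that SQL Server applies the row limit itself."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    # Drop a trailing semicolon so the query can be nested as a derived table.
    inner = query.strip().rstrip(";")
    return f"SELECT TOP ({int(limit)}) * FROM ({inner}) AS limited"

print(push_limit_sql_server("SELECT * FROM dbo.Orders;", 100))
```

One caveat: SQL Server rejects an `ORDER BY` inside a derived table unless it is paired with `TOP` or `OFFSET`, so queries that sort would need different handling.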

Thank you

Sean

There are circumstances where the Python install section of the Alteryx installer fails without reporting the failure. In fact, it reports success, and the only way to find out whether the Jupyter install failed is to check the Jupyter.log for error messages.

Can we add two features to Alteryx install / platform to manage this:

- If the installer for Python fails - please can you make this explicit in feedback to the user

- Can we also add a way for the user to repair the Python install - either using standard MSI Repair functionality, or a utility or a menu system in Designer.
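Until something like that exists, the manual check of the log can be scripted. The Python sketch below scans log lines for a few failure markers. The marker list is an assumption (the post does not say what the error messages look like) and the function name is made up.

```python
def find_install_errors(lines, markers=("error", "failed", "traceback")):
    """Return (line_number, text) pairs for log lines containing a failure marker.

    The markers are guesses at what a failed install might log; adjust them
    to match the real messages.
    """
    hits = []
    for number, line in enumerate(lines, start=1):
        lowered = line.lower()
        if any(marker in lowered for marker in markers):
            hits.append((number, line.strip()))
    return hits
```

Since a file handle iterates over its lines, the function can be called as `find_install_errors(open(path_to_log))`, where `path_to_log` points at the Jupyter.log on the machine in question.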

Thank you

Sean

Functions such as Year([Date Field]), Month([Date Field]), and Day([Date Field]) would really help with date-based formulas and filter tests.
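To show the behavior being asked for, here is a small Python sketch of such functions. It assumes dates arrive as ISO-style strings (`YYYY-MM-DD`, optionally followed by a time), and the lowercase function names are illustrative, not Alteryx formula functions.

```python
from datetime import date

def _parse(value):
    """Parse the date part of an ISO-style date or datetime string."""
    return date.fromisoformat(value[:10])

def year(value):
    return _parse(value).year

def month(value):
    return _parse(value).month

def day(value):
    return _parse(value).day

# A filter test such as "orders from March" then becomes a simple comparison.
print(month("2021-03-15") == 3)
```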

I am working with complex workflows which use multiple files as input, located on network drives. Input tools are Input Data, Directory, Wildcard Input, Wildcard XLSX Input (from CReW macros).

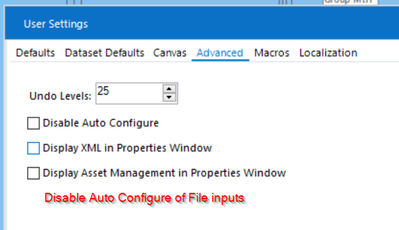

I regularly find Designer very slow when working on these workflows (the window freezes for quite some time), especially when working from home. Switching off Auto Configure did not really help, because the column list sometimes does not converge even after pressing F5 multiple times, and when actively working on workflows, I have to press F5 all the time...

To improve this, I would like to propose a "Disable Auto Configure of File Inputs" option which would not watch the loaded files but would still update the configurations downstream. This could also skip the automatic check for whether macro files on network drives have changed. F5 could be used to update all configurations manually.

But with Alteryx you are only allowed to join a perfect match. It would be really great if you could add that functionality into 9.0

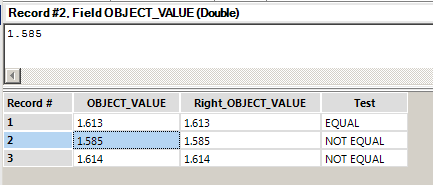

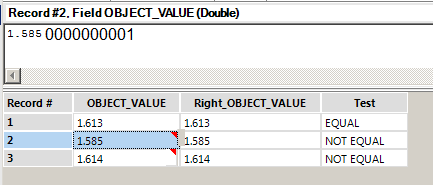

I would love to see Alteryx add an indicator whenever a number being displayed is being truncated. For example, this picture is currently confusing:

The displayed numbers should all be equal according to their displayed value, but in reality a different number is being stored in the background. I would propose something like this:

1) Any number that is being truncated when displayed would have that red triangle in the upper right corner. This already happens when a formula tool result is truncated, but I would like for it to be displayed on all data.

2) Clicking on the cell would show the actual value, not the truncated value. This would be great when debugging.

I understand that numbers are more complex than meets the eye, and I think that changes like this would help alleviate some of the mystery (like why 2 of my numbers above aren't equal).
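The effect described here is easy to reproduce outside Alteryx. The Python sketch below shows two values that display identically at two decimal places yet are not equal, plus a hypothetical `is_truncated` check of the kind that could drive the proposed indicator.

```python
def display(value, places=2):
    """Format a number the way a grid with fixed decimal places would show it."""
    return f"{value:.{places}f}"

def is_truncated(value, places=2):
    """True when the displayed text no longer represents the stored value."""
    return float(display(value, places)) != value

a = 0.1 + 0.2   # stored with a tiny binary floating-point error
b = 0.3

print(display(a), display(b))             # both display as 0.30
print(a == b)                             # False: the stored values differ
print(is_truncated(a), is_truncated(b))   # only a would get the indicator
```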
