Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images)
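As a rough illustration of the last two suggestions (this is not how the Image Recognition tool works internally), here is a minimal Python sketch. It assumes a grayscale image is a list of rows of 0-255 integers and that the training data is a non-empty list of `(item, label)` pairs; the function names `gray_to_rgb` and `balance_by_label` are hypothetical.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Turn rows of grayscale pixels into rows of (r, g, b) tuples
    by repeating the single channel three times."""
    return [[(p, p, p) for p in row] for row in image]


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of items.

    samples: non-empty list of (item, label) pairs.
    Returns a new list with min-count items per label.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    # The smallest class decides how many items each label keeps.
    keep = min(len(items) for items in by_label.values())

    rng = random.Random(seed)  # fixed seed so the split is repeatable
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, keep):
            balanced.append((item, label))
    return balanced


rgb = gray_to_rgb([[0, 255]])
balanced = balance_by_label([("img", "cat")] * 5 + [("img", "dog")] * 2)
```

Downsampling to the smallest class is only one way to balance labels; oversampling the smaller classes would be an equally valid choice.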

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Let's "Elevate" Alteryx to enter the Euclidean space and add the Z-Coordinates to our spatial tools!

Cleanse Macro

Given a choice between the delivered macro and the CReW macro, I’ll choose the CReW macro for both speed and functionality. Wikipedia says, “Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data.” If Alteryx were to convert the macro to a true tool, here is my feature request list:

Performance:

- AMP compatible – Fast!

- Faster than the CReW macro for deleting empty fields/rows

- Reduce the time it takes to load the tool (current macro versions are slow); HTML is faster.

Feature Enhancement:

- Allow selection of fields based on data type

- Include incoming/outgoing SELECT functionality

- Allow for PREFIX functionality (like multi-field formula), but NOT default

- Read incoming metadata to provide color coding of fields to indicate where potential problems exist (e.g. NULL, Whitespace) – part of browse everywhere currently

- Allow for Nulls to convert to 0/blank or 0/blank to convert to Null

- When removing punctuation, provide for exceptions (e.g. Numeric set of negative, comma and period).

- Include HTML tag removal

- Support internationalization (character sets)

Going the extra mile:

- Display, or optionally output, cleanup metrics. How dirty was my data? Potentially, allow for ERROR to stop the workflow if garbage is detected.

- Optional: Detect outliers in numeric data. I’ve got an outlier detection macro that we can review, but while you are passing all of the data for numeric values, explaining or tagging outliers would be useful. Could be a box-whisker on numeric values maybe?

- Make outlier actionable

- Identify in data (new field indicator)

- Remove

- Modify/Impute

- Test/Preview against metadata: (pre-run), see what the incoming/outgoing results would be on all of the metadata before I run the workflow.

- camelCase: https://en.wikipedia.org/wiki/Camel_case

- Identify/Replace unknown values (e.g. N/A, Not Applicable, #) with Null() or other?

- Identify/Remove duplicate values within a cell

- See also: https://en.wikipedia.org/wiki/Data_cleansing

- Option to point to a “personal” dictionary for spelling or validation

- Provide “smart” annotation on tool

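To make the box-whisker idea from the list above concrete, here is a minimal Python sketch of tagging outliers with a new indicator field. It assumes the common 1.5 × IQR fence rule, which the post does not specify; the function name `flag_outliers` and the sample values are made up for illustration.

```python
import statistics


def flag_outliers(values, k=1.5):
    """Return one boolean per value: True if it falls outside the
    box-whisker fences (q1 - k*IQR, q3 + k*IQR)."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


amounts = [10, 12, 11, 13, 12, 11, 100]
flags = flag_outliers(amounts)  # the indicator field, one flag per record
```

From the flags, the three actions in the list (identify, remove, modify/impute) are each a simple filter or replacement on the flagged records.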

Since Alteryx version 2020.3, the Browse tool no longer shows a profile of the complete dataset (profiling is capped once the record data size reaches 300 MB).

My proposed solution is an optional override of the record size limit on the browse tool (which will make the profiling take longer, but actually profile the entire dataset). I would also like a general user setting to set the default behavior of the browse tool to either be limited or unlimited.

Below is the newly included documentation of the Data Profiling Limit, which I'm proposing can be overridden.

Data Profiling Limit

Data Profiling in the Browse tool is capped at 300 MB. This allows you to process very large datasets faster. For each record in the incoming dataset, we process the record and add the record size to a counter. Once the counter reaches 300 MB, we stop processing records.

It is important to note that there is no specific number of records that we can process. This depends on the dataset since a record size can range from 1 byte to a few thousand bytes. This record size is different from the file size, displayed in the Results grid and Data Profiling Holistic View. The file size is generally different since it has been compressed to optimize spacing.

In other words, 300 MB of record size is not the same as 300 MB of file size.
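The counter logic described in the documentation above can be sketched roughly as follows. This only illustrates the documented behaviour together with the proposed override; the function name `records_profiled`, the `limit_bytes` parameter, and reading 300 MB as 300 × 1024 × 1024 bytes are assumptions, not Alteryx internals.

```python
from typing import Iterable, Optional

PROFILE_LIMIT_BYTES = 300 * 1024 * 1024  # assumed meaning of "300 MB"


def records_profiled(record_sizes: Iterable[int],
                     limit_bytes: Optional[int] = PROFILE_LIMIT_BYTES) -> int:
    """Count how many records get profiled before the size counter
    reaches the limit. limit_bytes=None models the proposed override
    (profile everything)."""
    counter = 0
    profiled = 0
    for size in record_sizes:
        if limit_bytes is not None and counter >= limit_bytes:
            break  # cap reached: stop processing further records
        counter += size
        profiled += 1
    return profiled


capped = records_profiled([100] * 10, limit_bytes=250)
unlimited = records_profiled([100] * 10, limit_bytes=None)
```

With ten 100-byte records and a 250-byte cap, only the first three records are profiled, while the override profiles all ten.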

This new limit can cause confusion when looking at the data profile (e.g. if you expect the sum to be $3 million, but the Browse tool is only profiling 2% of your total records, the profile sum may only show $60 thousand).

The sampled version with a cutoff of 300MB is rarely useful if you are using browse tools to get a quick sense of the variable profiles on medium sized datasets (around 1 million records) since this rarely will fit into the 300MB record size limit.

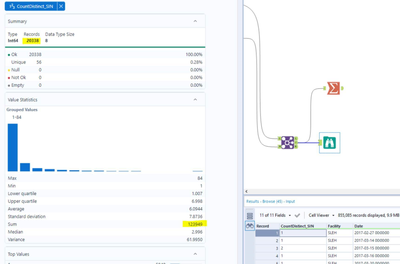

An example is shown in the image below, where the dataset contains 855,085 records, but the Browse tool is profiling only the first 20,338.

Again, being able to override this 300MB record size limit would fix the problem created in the 2020.3 change to the browse tool.

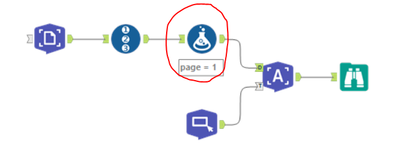

When using the text mining tools, I have found that the behaviour of using a template only applies to documents with the same page number.

So in my use case I've got a PDF file with 100+ claim statements which are all laid out the same (one page per statement). When setting up the template I used one page to set the annotations, and then input this into the T anchor of the Image to Text tool. Into the D anchor of this tool is my PDF document with 100+ pages. However when examining the output I only get results for page 1.

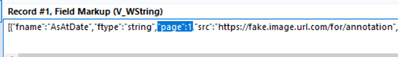

On examining the JSON for the template I can see that there is reference to the template page number:

And playing around with a generate rows tool and formula to replace the page number with pages 1 - 100 in the JSON doesn't work. I then discovered that if I change the page number on the image input side then I get the desired results.

However, since I suspect this is a common use case for the Image to Text tool, an improvement would be to add an option in its configuration to apply the same template to all pages.

Hello all,

Some databases, including Hive, natively support scheduled queries (yes, the scheduling configuration lives inside the database, not in an ETL/data prep system). I think this would be an interesting feature for in-DB workflow output: you run the workflow once and then only have to rerun it when it changes; the database does the scheduling.

https://cwiki.apache.org/confluence/display/Hive/Scheduled+Queries

Intro

Executing statements periodically can be useful in

- Pulling information from external systems

- Periodically updating column statistics

- Rebuilding materialized views

Best regards,

Simon

Alteryx doesn't support querying tables within Apache Ignite via the Ignite ODBC connector. Since Ignite is an in-memory database, supporting it in Alteryx via ODBC would improve connectivity.

Currently, if you download an Alteryx package from a different version, you cannot import it into a newer version.

Workflows allow this with a warning; it would be good to allow it for packages too.

The email tool, such a great tool! And such a minefield. Both of the problems below could and maybe should be remedied on the SMTP side, but that's applying a pretty broad brush for a budding Alteryx community at a big company. Read on!

"NOOOOOOOOOOOOOOOOOOO!"

What I said the first time I ran the email tool without testing it first.

1. Can I get a thumbs up if you ever connected a datasource directly to an email tool thinking "this is how I attach my data to the email" and instead sent hundreds... or millions of emails? Oops. Alteryx, what if you put an expected limit as is done with the append tool. "Warn or Error if sending more than "n" emails." (super cool if it could detect more than "n" emails to the same address, but not holding my breath).

2. Make spoofing harder. It's super useful but... well, my company frowns on this kind of thing.
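The "warn or error if sending more than n emails" check from item 1 could look something like the sketch below. This is only an illustration of the idea, not an existing Email tool option; the function name `check_email_volume` and its parameters are hypothetical.

```python
from collections import Counter


def check_email_volume(recipients, max_emails, max_per_address=None):
    """Return a list of problems; an empty list means it is OK to send.

    recipients: one address per email that would be sent.
    max_emails: overall limit ("n" in the idea above).
    max_per_address: optional limit on emails to the same address.
    """
    problems = []
    if len(recipients) > max_emails:
        problems.append(
            f"{len(recipients)} emails exceeds the limit of {max_emails}"
        )
    if max_per_address is not None:
        for address, count in Counter(recipients).items():
            if count > max_per_address:
                problems.append(
                    f"{count} emails to {address} exceeds the "
                    f"per-address limit of {max_per_address}"
                )
    return problems


problems = check_email_volume(["boss@example.com"] * 3, max_emails=2)
```

Whether a non-empty result becomes a warning or a hard error would be a configuration choice, as with the Append Fields tool.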

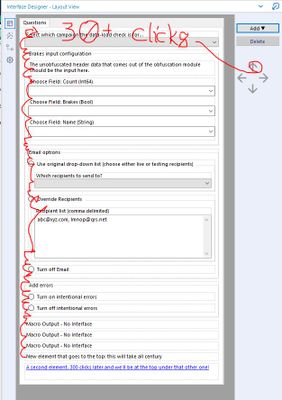

I've recently been delving into using the interface tools and there are a couple of glaring issues for me as a developer/designer, all having to do with the UI, ironically (yes, I used that correctly!) with the interface tools. The irony here is that the interface tools utilise a poor user interface.

Firstly, I finished this video to ensure I was indeed doing things correctly, and I was.

The UI for designer's interface tools is incredibly sluggish. In order to rearrange tools, each time you create a new one, you have to push the up arrow for each tool and you have to traverse the groupings.

Instead of this, I suggest two changes to the interface designer.

1. Allow a control-click in the interface designer layout view so that multiple elements can be selected and they can traverse the groupings and be moved together. When one has, say 4 elements in 2 nested groupings, that is a lot of clicks to get your new element to the top. Having to do it with its radio-button partner: pretty much infuriating.

ONE at a time, children. No control-clicking. That would lead to pandemonium?

2. Allow control-click in the tree view as well. The fact that we can only click one item at a time and move it one slot at a time is incredibly time consuming. It seems a no-brainer to at least allow a control-click here.

- Bonus: Include the ability to jump to the top and/or pop out a level with left/right arrows in the tree interface.

I know not everyone is building macros/apps and dealing with this, so I have little faith that this will jump to the top of your queue. But this is a painful part of the UI. I don't know if your UX designers could easily fix this or if it is more pain on your end than the pain you're giving me, but I just want to say: This hurts. 35 clicks every time I add a new element with no option to 'move to top' like you (wonderfully) do in the select tool is a big drag on my time (hint: maybe add that sort of functionality too; the select tool manages this stuff so well!). Which is supposedly valuable. In theory. But it certainly doesn't feel that way when I've spent 10 minutes clicking an up arrow (and yes, my UI is slow. And I may be exaggerating, but not by much!).

Thank you for your continued improvement!

-Çædric Justice

Alteryx Developer

Cambia Healthcare

Preface: I have only used the in-DB tools with Teradata so I am unsure if this applies to other supported databases.

When building a fairly sophisticated workflow using in-DB tools, sometimes the workflow may fail due to the underlying queries running up against CPU / memory limits. This is most common when doing several joins back to back, as Alteryx sends this as one big query with various nested subqueries. When working with datasets in the hundreds of millions and billions of records, this can be extremely taxing for the DB to run as one huge query. (It is possible to get around this by using an in-DB write out to a temporary table as an intermediate step in the workflow.)

When a routine does hit an in-DB resource limit and the DB kills the query, it causes Alteryx to immediately fail the workflow run. Any "temporary" tables Alteryx creates are in reality perm tables that Alteryx usually just drops at the end of a successful run. If the run does not end successfully due to hitting a resource limit, these "temporary" (perm) tables are not dropped. I only noticed this after building out a workflow and running up against a few resource limits; I then started getting database out-of-space errors. Upon looking into it, I found all the previously created "temporary" tables were still there and taking up many TBs of space.

My proposed solution is for Alteryx's in-DB tools to drop any "temporary" tables they have created when a run ends, regardless of whether the entire module finished successfully.
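The behaviour being asked for is essentially a "finally" block around the run. Here is a minimal sketch of that pattern, using Python's built-in `sqlite3` as a stand-in for Teradata (an assumption made purely so the example is runnable); the function name `run_workflow` and the table names are hypothetical.

```python
import sqlite3


def run_workflow(conn, steps, temp_tables):
    """Run the SQL steps in order and always drop the listed tables
    afterwards, whether or not every step succeeded."""
    try:
        for sql in steps:
            conn.execute(sql)
        return True
    except sqlite3.Error:
        # A failed step (e.g. a query killed by the database) ends the run.
        return False
    finally:
        # Cleanup happens on success and on failure alike.
        for table in temp_tables:
            conn.execute(f'DROP TABLE IF EXISTS "{table}"')
        conn.commit()


conn = sqlite3.connect(":memory:")
ok = run_workflow(
    conn,
    steps=[
        "CREATE TABLE tmp_stage (x INTEGER)",
        "INSERT INTO tmp_stage VALUES (1)",
        "SELECT * FROM table_that_does_not_exist",  # simulated failure
    ],
    temp_tables=["tmp_stage"],
)
leftover = conn.execute(
    "SELECT COUNT(*) FROM sqlite_master WHERE name = 'tmp_stage'"
).fetchone()[0]
```

Even though the third step fails, `tmp_stage` is gone afterwards, which is the outcome the idea asks for.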

Thanks,

Ryan

Please enhance the dynamic select to allow for dynamic change data type too. The use case can be by formula or update in an action for a macro. If you've ever wanted to mass change or take precision action in a macro, you're forced to use a multi-field formula. It would be rather helpful and appreciated.

Cheers,

Mark

Two additions to the formula tool that would be great to see:

- When I select a function (like DateTimeParse) and hit F1 for help - please could you take me to the help for this specific function?

- For the parameters which are ordinal or "magic value" type parameters - please can you create a simple formula builder so that we don't have to keep on going into the help text to find out which % flag to use for a month in MM format; and which is MMM format.

- What I'm thinking here is a simple pop-up box that allows you to create the parameter you want

- Alternatively - provide a direct hint-text for the parameter in question or inline intellitext like Visual Studio or Eclipse

- Overall though - the date functions seem to have grown up at different times - and so they treat dates in different ways - DateTimeDiff uses "Hours" which is pretty common, DateTimeParse uses magic values like "%Y", and the new date time conversion tool uses the standard form used in Windows of MM-YYYY etc. So it would be worth looking at a refactoring of the date functions to bring them all to a standard treatment of date parameters.
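As a sketch of what a small "format builder" could do behind the scenes, the snippet below translates a readable pattern such as MM-YYYY into % flags. It uses Python's `strptime` directives, which are assumed to be similar to, but not necessarily identical with, the flags DateTimeParse accepts; the token table and the name `to_strptime_format` are made up for illustration.

```python
import re
from datetime import datetime

# Hypothetical token table: readable tokens -> Python strptime directives.
TOKEN_TO_FLAG = {
    "YYYY": "%Y",  # four-digit year
    "MMMM": "%B",  # full month name
    "MMM": "%b",   # abbreviated month name
    "MM": "%m",    # two-digit month
    "DD": "%d",    # two-digit day
}

# Longer tokens come first so "MMM" is not read as "MM" plus "M".
_TOKEN_RE = re.compile("YYYY|MMMM|MMM|MM|DD")


def to_strptime_format(pattern):
    """Replace each readable token with its % flag; other text is kept."""
    return _TOKEN_RE.sub(lambda m: TOKEN_TO_FLAG[m.group(0)], pattern)


fmt = to_strptime_format("MM-YYYY")
parsed = datetime.strptime("03-2021", fmt)
```

A pop-up builder or inline hint could expose the same table to the user instead of sending them to the help text.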

Thank you

Sean

Hi Alteryx 🙂

When you set maximum records per file, the filename gets _# appended. Great! But in reality you get:

Filename.csv

Filename_1.csv

Filename_2.csv

The first filename doesn't get a number. I think that it should.
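To spell out the difference, here is a small Python sketch of the current naming as described above next to the requested one. The function name `numbered_filenames` is hypothetical, and starting the numbering at 1 is an assumption, since the post does not say whether the first file should be `_0` or `_1`.

```python
import os


def numbered_filenames(filename, parts, number_first=True, start=1):
    """List the output names for a file split into `parts` pieces.

    number_first=False mimics the behaviour described in the post
    (first file has no suffix); True is the requested behaviour.
    """
    stem, ext = os.path.splitext(filename)
    if number_first:
        return [f"{stem}_{i}{ext}" for i in range(start, start + parts)]
    return [filename] + [f"{stem}_{i}{ext}" for i in range(1, parts)]


current = numbered_filenames("Filename.csv", 3, number_first=False)
requested = numbered_filenames("Filename.csv", 3)
```

With every file numbered, a wildcard such as `Filename_*.csv` picks up the whole set, which the unnumbered first file currently breaks.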

Cheers,

Mark

The v10 formula configuration window had two very small advantages. First, it always had an extra 'line' for another output field (no pressing '+' required). Second, it defaulted to letting you immediately begin typing the name of the next column (no need to press 'Select Column' then 'Add New Column'). I know these are minor, but every little thing counts when you're doing heavy development.

It has been brought up that the following comments were given during the beta. While I appreciate the reasoning of requiring 'obvious intention,' my personal opinion is that it is overkill in this scenario. Even for new users, the old design was quite intuitive.

"Thanks for taking the time to provide feedback! This touches a conversation topic that has been ongoing here at Alteryx. While we want workflow development to be as fast as possible, we also are trying to address the overall usability of the tool and make sure it is very clear what we intend the user to do. We decided to have the UI ask for an explicit action (pick an existing field to edit or click to add a new field) to help make those options clear, as we have found that users don't always understand from the existing tool that this is the first decision they should make when using the tool. That being said, your feedback is definitely valuable. I will be sure to bring this up as we are making improvements to the new tool and see if there's a compromise that we can make on speed vs. obvious intention. Thanks for taking the time!"

I do a lot of work with SQL code in the PRE/POST SQL options, and when I get an error, it usually returns the entire code and a little bit about what is wrong. These long strings are hard to read in the current tooltip format because, when you hover to see the entire error, the tooltip goes away after 5 seconds. So I am frantically reading through lines of error code 5 seconds at a time. Can we make it so the tooltip just hangs out until I move my cursor off of it?

API Security requirements are constantly evolving and strengthening. As API architectures migrate from traditional authentication models (Basic, OAuth, etc.) to more secure, certificate-based models, like MTLS/MSSL, leveraging Alteryx Designer will become increasingly difficult, especially for larger organizations trying to scale the use of Alteryx across a large user base, with vastly diverse skillsets.

I realize issuing API calls with certificates is possible via the Run Command tool. We consider this a temporary workaround, and not a permanent, strategic solution. The Run Command tool can be clunky to use when passing in variables and passing the output back into the workflow for downstream processing.

Therefore, I would like to request a more scalable approach to issuing MTLS/MSSL API calls. Can an option be added to the Download Tool to allow for certificates to be passed on API calls?

Python pandas dataframes and data types (numpy arrays, lists, dictionaries, etc.) are much more robust in general than their counterparts in R, and they play together much more easily as well. Moreover, there are only a handful of packages that do everything a data scientist would need, including graphing, such as SciKit Learn, Pandas, Numpy, and Seaborn. After utilizing R, Python, and Alteryx, I'm still a big proponent of integrating with the Python language much like Alteryx has integrated with R. At the very least, I propose adding the ability to write custom code, such as a Python tool.

I often encounter situations where I need to apply the same formula to several columns. Doing this requires copy/pasting the formula several times and then updating the variable names in the formula for each output column. I wish there was a built in "Current Output Column" variable so that I could build one formula and use that for each column.
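The idea of writing one formula and reusing it per column can be sketched in Python as below. This is not Alteryx syntax; the function name `apply_to_columns` and the sample row are hypothetical, and the `value` passed to the formula plays the role of the wished-for "Current Output Column" variable.

```python
def apply_to_columns(rows, columns, formula):
    """Apply the same single-argument formula to each selected column
    of every row, leaving the other columns untouched."""
    return [
        {
            name: (formula(value) if name in columns else value)
            for name, value in row.items()
        }
        for row in rows
    ]


rows = [{"first": " Ann ", "last": " Lee ", "note": " keep "}]
cleaned = apply_to_columns(rows, {"first", "last"}, str.strip)
```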

For example:

Please consider adding the ability in the Power BI Output Tool to create/modify multiple tables per dataset - having to work with only single table datasets in Power BI is very limiting.

It's definitely not good UX that the full browse is now in the Output window. I usually have my Output on autohide, and it's a few extra clicks to see the browses now... Can we have the Browse Everywhere tab in both the Output window and the Configuration panel?
