Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?
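Until grayscale input is supported, one possible workaround is to replicate each gray intensity across three channels before handing the images to the tool. The snippet below is only a sketch of that idea on plain nested lists; `gray_to_rgb` is a hypothetical helper, not part of the Image Recognition Tool, and whether the tool trains well on such images is untested here.

```python
def gray_to_rgb(image):
    """Turn a 2-D grayscale image (rows of 0-255 ints) into an RGB image
    by repeating each intensity in the R, G and B channels."""
    return [[(value, value, value) for value in row] for row in image]


# A tiny 2x2 "image" standing in for an MNIST digit (hypothetical data).
gray = [[0, 128], [255, 64]]
rgb = gray_to_rgb(gray)
```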

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


...and now for probably the most trivial request in a long time, but also one of the most annoying things (for me anyway)..........

When viewing a browse window, it's so darn awesome to be able to sort and search. However, it would be even awesomeer (yes, I just made up a word) if when you actually conducted a sort or search, you could make your selection (for sorts) or type in your criteria (for searches) and simply press the "Enter" button on the keyboard and have it do the same thing that selecting "Apply" with the mouse does. This is common Windows functionality and I think should be easy to implement.

In PowerPoint, you can right-click on a picture and replace it with a different picture without losing formatting.

Similar functionality would be useful for replacing custom macros.

- I would like to be able to switch an old version of a custom macro with a new version in situ, without losing the connections to other tools, interface tools, or location in a container.

Currently, the only option is to insert the new custom macro and then reset all incoming and outgoing connections. Some downstream tools (e.g., crosstab) lose their existing settings and that has to be reset too. On complicated workflows, this can introduce silent errors.

This capability would be especially helpful for team coding, where different team members are revising different modules for a parent workflow.

Currently:

Right-clicking on the canvas shows Insert > Macro > (choose from list of open macros)

Right-clicking on an existing macro shows Open Macro

New functionality:

Right-clicking on an existing macro shows Replace/Change Macro > (choose from list of open macros)

A typical macro does the same job every time. I therefore want it to have the same annotation each time.

I want it to have a default annotation that I save in the Interface Designer. This annotation will be shown on the canvas whenever the macro is added.

We are experiencing performance issues with fetching schema/table/columns info on Alteryx Designer when using Vertica DB.

From troubleshooting with Alteryx Support, the query hitting "odbc_columns" is contributing to the performance issue. Our Vertica DBA suggests using "columns" instead of "odbc_columns". I am submitting this request to change the query.

Refer to case 00551930 for more info.

When I'm organizing my workflow, sometimes I want to move a whole tool container on the canvas. Currently, the only way to do this is to first find the header, then select and drag it. When the ends of the container are off screen, it can be hard to know how far I need to move the container to get it where I want it relative to the other tools around it. I feel like it would be nice to be able to select anywhere on the tool container and drag it around (possibly holding right click and dragging so that current tool selection capabilities aren't hindered).

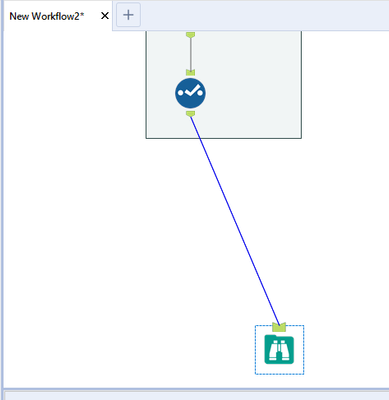

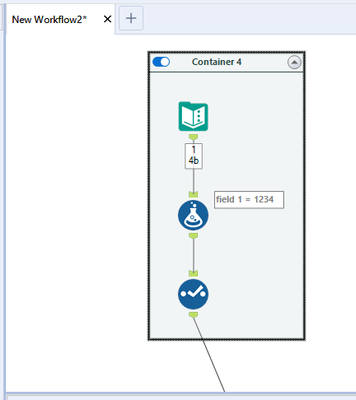

In the (simplified) images below, you'll see that I want my tool container to vertically align just above the browse tool:

I can't currently see the top of the tool container to move it, though, so I must first navigate to that part of the workflow to select the header.

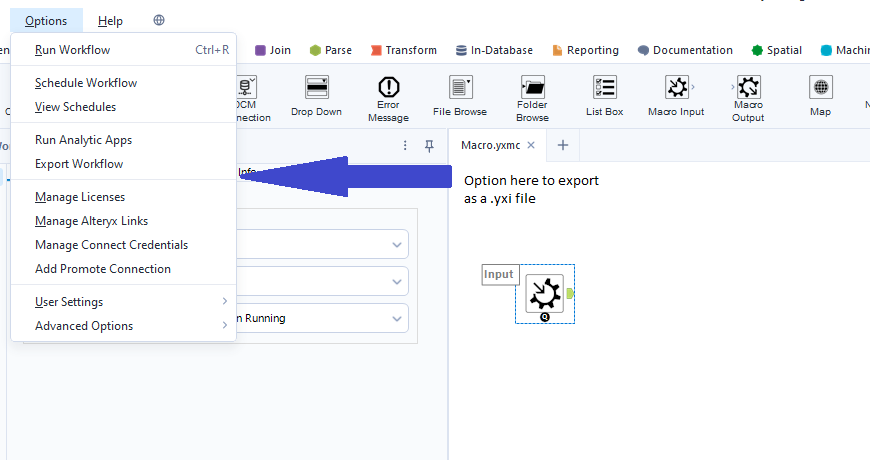

Creating .yxi files is currently a manual and somewhat fiddly process. It would be great to have a menu option that automatically packages the macro into a tool installer file.

Hi -

We are using the new(ish) Anaplan connector tools; in particular, the "Anaplan Output" tool (send data TO Anaplan).

The issue that I'm having is that the Anaplan Output Tool only accepts a CSV file. This means that I must run one workflow to create the CSV file, then another workflow to read the CSV file and feed the Anaplan Output Tool.

If it were possible to have an output anchor on the Output tool that would simply pass the CSV records through to the Anaplan Output tool, the workflows would be drastically simplified.

Thanks,

Mark Chappell

When switching modes, it sometimes reboots and loses all the code:

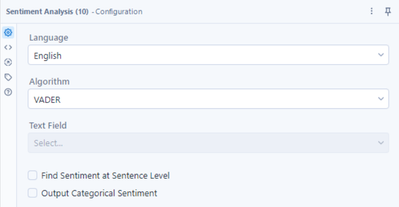

Currently only the VADER algorithm is available; however, other algorithms might be interesting alternatives. By other algorithms I mean TextBlob, Flair, and a Custom option.

Cheers,

Pawel

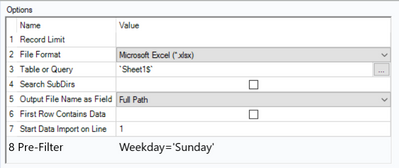

A Pre-Filter as a new option in the Input Tool might reduce the amount of imported data by allowing you to input only selected data (i.e., for a specific period or meeting certain conditions).

Cheers,

Pawel

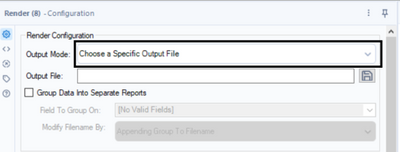

Currently, saving output as PowerPoint is possible only through a workaround using the Render Tool, as in Megan's article (link below). It might be more intuitive to add PowerPoint to the supported options in the "Output Mode" dropdown.

Cheers,

Pawel

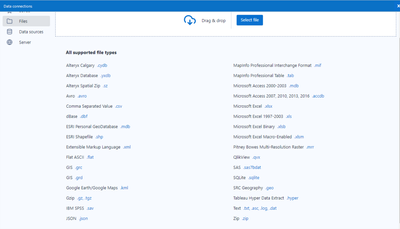

Add the PowerPoint file format (ppt/pptx) as a supported file type for direct connection in the Input Tool.

PS. I know there is a workaround for importing PowerPoint slides into Alteryx, but I'm describing an automated solution :)

Cheers,

Pawel

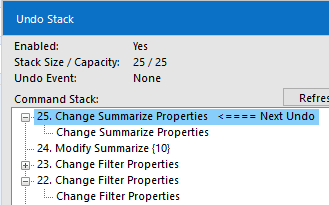

The Edit menu allows you to see what your next undo/redo actions are. This is super helpful; however, sometimes I decide to scrap an idea I was starting on and need to perform multiple undos in a row. It would be great if we could see a list of actions like in the debug undo/redo stack menu, then select how many steps we'd like to undo/redo.

For example, using the below actions, if I want to undo the Change Summarize Properties and also the Modify Summarize, currently I have to do that in two steps. I'd like to be able to click the Modify Summarize and have the workflow undo all commands up to and including that one.
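To make the requested behaviour concrete, here is a minimal sketch of an undo stack that can roll back everything up to and including a selected action. It is an illustration only and says nothing about how Designer stores its history; the `UndoStack` class, its method names, and the "Add Summarize" action are hypothetical.

```python
class UndoStack:
    """Keeps performed actions in order and supports multi-step undo."""

    def __init__(self):
        self._done = []    # performed actions, oldest first
        self._undone = []  # undone actions, available for redo

    def record(self, name):
        """Register a newly performed action; this clears the redo list."""
        self._done.append(name)
        self._undone.clear()

    def history(self):
        """Actions as a menu would list them, most recent first."""
        return list(reversed(self._done))

    def undo_through(self, name):
        """Undo every action up to and including the most recent `name`."""
        if name not in self._done:
            raise ValueError(f"no such action in history: {name!r}")
        undone = []
        while self._done:
            action = self._done.pop()
            self._undone.append(action)
            undone.append(action)
            if action == name:
                break
        return undone


stack = UndoStack()
for step in ["Add Summarize", "Modify Summarize", "Change Summarize Properties"]:
    stack.record(step)

# One click on "Modify Summarize" undoes it and everything after it.
undone = stack.undo_through("Modify Summarize")
remaining = stack.history()
```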

Currently the Databricks in-database connector allows for the following when writing to the database

- Append Existing

- Overwrite Table (Drop)

- Create New Table

- Create Temporary Table

This request is to add a 5th option that would execute

- Create or Replace Table

Why is this important?

- Create or Replace is similar to Overwrite Table (Drop) in that it fully replaces the existing table; however, the key differences are:

  - Drop table completely removes the table and its data from Databricks

    - Any users or processes connected to that table live will fail during the writing process

    - No history is maintained on the table, a key feature of the Databricks Delta Lake

  - Create or Replace does not remove the table

    - Any users or processes connected to that table live will not fail as the table is not dropped

    - History is maintained for table versions which is a key feature of Databricks Delta Lake

While this request was specific to testing on Azure Databricks the documentation for Azure and AWS for Databricks both recommend using "Replace" instead of "Drop" and "Create" for Delta tables in Databricks.
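The difference between the two write modes can be sketched as the SQL each one would send. This is a rough illustration under assumptions: the exact statements the in-database connector issues are not known here, and the function names, table name, and SELECT text are hypothetical.

```python
def drop_and_create(table, select_sql):
    """Overwrite Table (Drop): two statements, the table briefly does not exist."""
    return [
        f"DROP TABLE IF EXISTS {table}",
        f"CREATE TABLE {table} AS {select_sql}",
    ]


def create_or_replace(table, select_sql):
    """Requested mode: one statement, the table is never removed."""
    return [f"CREATE OR REPLACE TABLE {table} AS {select_sql}"]


# Hypothetical table and query used only for illustration.
current = drop_and_create("sales.daily_totals", "SELECT * FROM staging.daily_totals")
requested = create_or_replace("sales.daily_totals", "SELECT * FROM staging.daily_totals")
```

With the single-statement form there is no window in which the table is missing, which is why connected readers do not fail and Delta table history is kept.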

Hyperion Smartview Connect

Hey YXDB Bosses,

Let's move forward with our YXDB. Maybe give AMP a real edge over e1. Here are some things that could make YXDB super-powered:

- Metadata

  - Workflow information about what created that poorly named output file.

  - When was the file originally created/updated.

  - SORT order. If there is a sort order for the data, what is it?

- Other stuff

  - INDEX. Currently you get spatial indexes (or you can opt out). If I want to search through a 100+MM record file, it is a sequential read of all of the data. With an index I could grab data without the expense of a Calgary file creation. Don't go crazy on the indexing option, just allow users to set 1+ fields as index (takes more time to write).

  - I'm sure that you've been asked before, but CREATE DIRECTORY if the output directory doesn't exist.

- Old School - Crazy Idea

  - Generation Data Groups (GDG)

    This will likely make @NicoleJ's eyes roll 🙄 but back in the day, we could write our data to the SAME filename and the old data became 1 version older. You could read the (0) version of the file or read from 1, 2, 3 or more previous versions of the data using the same name (e.g. .\Customers|||3). The write of the output file would do all of the backing up of the data (easy to use) and when the initial defined limit expires, the data drops off.
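The generation idea, together with the create-directory request, could be sketched roughly as follows. This is not how YXDB or Designer works today; the numeric `.1`, `.2` suffix scheme and the `write_generation` / `read_generation` helpers are assumptions made for illustration, with plain text files standing in for YXDB.

```python
import os
import tempfile


def write_generation(path, data, keep=3):
    """Write `data` to `path`, ageing older copies to path.1, path.2, ...
    and dropping anything older than `keep` generations."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    parent = os.path.dirname(path)
    if parent:
        os.makedirs(parent, exist_ok=True)  # create the output directory if missing
    oldest = f"{path}.{keep}"
    if os.path.exists(oldest):
        os.remove(oldest)  # this generation falls off the end
    for gen in range(keep - 1, 0, -1):
        src = f"{path}.{gen}"
        if os.path.exists(src):
            os.replace(src, f"{path}.{gen + 1}")
    if os.path.exists(path):
        os.replace(path, f"{path}.1")
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(data)


def read_generation(path, generation=0):
    """Read the current file (generation 0) or an older generation."""
    name = path if generation == 0 else f"{path}.{generation}"
    with open(name, encoding="utf-8") as handle:
        return handle.read()


with tempfile.TemporaryDirectory() as folder:
    target = os.path.join(folder, "out", "Customers.txt")
    for version in ["v1", "v2", "v3", "v4"]:
        write_generation(target, version, keep=2)
    latest = read_generation(target, 0)
    previous = read_generation(target, 1)
    oldest_kept = read_generation(target, 2)
    v1_dropped = not os.path.exists(target + ".3")
```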

Just a little more craziness from me

cheers

Hi UX interested parties,

Here are some ideas for you to consider:

1. These lines are BORING and UNINFORMATIVE. I'd like to understand (pic = 1,000 words) more when looking at a workflow.

- A line could communicate:

- Qty of Records

- Size of Data

- Is the data SORTED

- What sort order

- Quality of Data

If you look at lines A, B, and C in the picture above, nothing is communicated. Weight of line, color of line, type of line, beginning line marker/ending line marker: these are all potential ways that we could see a picture of the data without having to get into browse everywhere to see the information. If we hover over the data connection, even more information could appear (e.g. # of records, size of file) without having to toggle the configuration parameters.

2. Wouldn't it be nice to not have to RUN a workflow to know last SAVED metadata (run) of a workflow? I'd like to open a "saved" workflow and know what to expect when I run the workflow. Heck, how long does it take the beast to run is something that we've never seen unless we run it.

3. I'd like to set the metadata to display SORT keys, order. Sort1 Asc, Sort 2 Desc .... This sort information is very helpful for the engine and I'll likely post about that thought. As a preview, when a JOIN tool has sorted data and one of the anchors is at EOF, then why do we need to keep reading from the other anchor? There won't be another matched record (J) anchor. In my example above, we don't ask for the L/R outputs, so why worry about the rest of the join?

4. Have you ever seen a map (online) that didn't display watermark information? I think that the canvas experience should allow for a default logo (like mine above, but transparent) in the lower right corner of the canvas that is visible at all times. Having the workflow name at the top in a tab is nice, but having it display as a watermark is handy.

5. Once the workflow has RUN, all anchors are the same color. How about providing GREY/White or something else on EMPTY anchors instead of the same color? This might help newbies find issues in JOIN configuration too.

6. If the tool has ERRORs you put a RED exclamation mark. I despise warnings, but how about a puke colored question mark? With conversion errors, the lines could be marked to let you know the relative quantity of conversion errors (system messages have a limit)
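The early-exit argument in point 3 can be illustrated with a small merge join over two inputs that are already sorted on the join key. This is a generic sketch of the technique, not the Alteryx engine's Join implementation; the function name and sample records are hypothetical. It produces only the matched (J) output and stops as soon as either side reaches EOF.

```python
def inner_merge_join(left, right, key=lambda rec: rec[0]):
    """Inner-join two lists sorted ascending by `key`.

    Returns the matched pairs plus how many records were consumed from
    each side, to show that the longer input is not read to the end."""
    joined = []
    i = j = 0
    while i < len(left) and j < len(right):  # stop at the first EOF
        left_key, right_key = key(left[i]), key(right[j])
        if left_key < right_key:
            i += 1
        elif left_key > right_key:
            j += 1
        else:
            # Find the run of equal keys on each side and pair them all.
            i_end = i
            while i_end < len(left) and key(left[i_end]) == left_key:
                i_end += 1
            j_end = j
            while j_end < len(right) and key(right[j_end]) == left_key:
                j_end += 1
            for left_rec in left[i:i_end]:
                for right_rec in right[j:j_end]:
                    joined.append((left_rec, right_rec))
            i, j = i_end, j_end
    return joined, i, j


left = [(1, "a"), (2, "b")]
right = [(2, "x"), (3, "y"), (4, "z"), (5, "w")]
matches, left_read, right_read = inner_merge_join(left, right)
```

Here the left input is exhausted after two records, so only one of the four right records is consumed. If the L/R outputs were requested, the remaining records would still have to be read.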

Just a few top of mind things to consider ....

Cheers,

Mark

Alteryx really needs to show a results window for the InDB processes. It is like we are creating blindly without it. Workarounds are too much of a hassle.

We really need a Block Until Done to process multiple calculations InDB without causing errors. I have heard that there is a Control Container potentially on the roadmap. That needs to happen ASAP!!!!

Hello!

A quite minor, pedantic issue from me today.

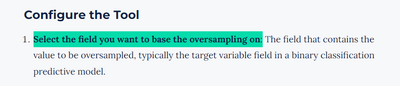

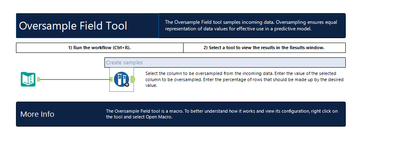

Currently, the Oversample Field Tool's naming and configuration suggest that the tool can over sample data:

However, I would argue the tool under samples data instead.

Here are a few sources that explain this much better than I can:

And an image is taken from Medium:

Effectively, the goal of either approach is to end up with a similar (or the same) number of records in each class. Under sampling is the process of taking samples from the majority class, ending up with a smaller dataset than you started with. Over sampling is the process of duplicating records within the minority class, which creates a larger dataset.
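The two definitions can be sketched in a few lines. This illustrates the general techniques only and is not the Oversample Field Tool's implementation; the helper names and the toy data are hypothetical.

```python
import random
from collections import defaultdict


def group_by_class(records, label):
    """Bucket records by their class label."""
    groups = defaultdict(list)
    for rec in records:
        groups[label(rec)].append(rec)
    return groups


def undersample(records, label, rng):
    """Randomly keep only as many records per class as the smallest class has."""
    groups = group_by_class(records, label)
    target = min(len(group) for group in groups.values())
    balanced = []
    for group in groups.values():
        balanced.extend(rng.sample(group, target))
    return balanced


def oversample(records, label, rng):
    """Duplicate random records in smaller classes up to the largest class size."""
    groups = group_by_class(records, label)
    target = max(len(group) for group in groups.values())
    balanced = []
    for group in groups.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced


# Toy data: 2 minority ("yes") records and 8 majority ("no") records.
data = [("yes", n) for n in range(2)] + [("no", n) for n in range(8)]
rng = random.Random(0)
under = undersample(data, label=lambda rec: rec[0], rng=rng)
over = oversample(data, label=lambda rec: rec[0], rng=rng)
```

Under sampling shrinks the 10-record input to 4 records, while over sampling grows it to 16.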

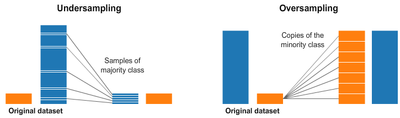

When using the Oversample tool within Alteryx, using the example workflow for reference:

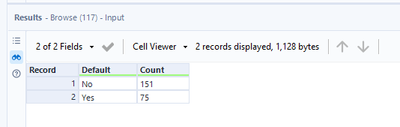

When summarizing the input:

And the output:

It's clear that the data has actually been under sampled, in that random samples have been taken from the majority class to match the minority, rather than creating duplicate minority records.

I would suggest a quick renaming of the tool to "Undersample Field Tool", along with a documentation update, so as not to confuse new users of the platform.

Kind Regards,

TheOC
