Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by listing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
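The second suggestion above (an equal number of images per label) can be approximated outside the tool. The following is a minimal sketch, assuming the training set is available as a list of (image path, label) pairs; the function name and input shape are hypothetical and not part of the Image Recognition Tool.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of images.

    `samples` is a list of (image_path, label) pairs. Both the name and
    the input shape are illustrative assumptions.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # The smallest class sets the size every other class is cut down to.
    target = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    balanced = []
    for label, paths in sorted(by_label.items()):
        for path in rng.sample(paths, target):
            balanced.append((path, label))
    return balanced


samples = [(f"cat_{i}.png", "cat") for i in range(5)]
samples += [(f"dog_{i}.png", "dog") for i in range(2)]
balanced = balance_by_label(samples)
```

Downsampling to the smallest class is only one option; it discards data, so oversampling the smaller classes may be preferable when images are scarce.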

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

I recently came to know that Alteryx doesn't support Denodo Data sources. We at our company are using Denodo as a data virtualization tool and also Alteryx is used for data blending. The request is for Alteryx to start supporting Denodo as a data source so that our company can reach out to Alteryx for any support related issues with Denodo.

Hello!

I remember a while ago running into a peculiar error:

'The R.exe exit code (4294967295) indicated an error'. This was peculiar, as the data output still seemed correct; however, the error made me double-check the community for answers.

There are some very technical sources here:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/R-tool-Fake-Errors/td-p/25163

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509

but in short, this seems to be caused by a return code from C++ libraries being interpreted by R as an error. It's a very inconsistent error, typically caused by low memory. This creates what most call a 'fake error': the code runs perfectly fine, but produces an error that doesn't actually indicate anything wrong.
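As a side note on the number itself: 4294967295 is the largest unsigned 32-bit value, which has the same bit pattern as -1 when read as a signed 32-bit integer. The sketch below only shows that reinterpretation; it says nothing about the root cause, and the helper name is hypothetical.

```python
def as_signed_32(code: int) -> int:
    """Reinterpret an unsigned 32-bit exit code as a signed 32-bit integer."""
    code &= 0xFFFFFFFF  # keep only the low 32 bits
    return code - 0x100000000 if code >= 0x80000000 else code


print(as_signed_32(4294967295))  # -1
print(as_signed_32(0))           # 0
```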

Within those threads, it's also stated that calling the garbage collection function (gc()) does tend to solve the problem on R exit. However, this requires a user to understand basic R and to have access to the macro in order to change the code, which makes predictive analytics more intimidating than it already is for new Alteryx users.

The first occurrence of this error seems to be way back in 2015, however the error is still being reported by users (see posts from 2020 and 2021):

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Password-protected-Excel-files-R-solut...

https://community.alteryx.com/t5/Alteryx-Designer-Knowledge-Base/Error-The-R-exe-exit-code-n-indicat...

An important issue with these 'fake errors' is not only that they cause confusion, but also that they can cause analytic apps and Server workflows to not work as expected, or to stop running, depending on the configuration.

My suggestion would be to revisit this issue, as by my understanding it occurs inconsistently, and calling garbage collection does not always seem to fix it. Even if the error message is still created, it may be worth Alteryx suppressing these errors in cases where they are not real errors.

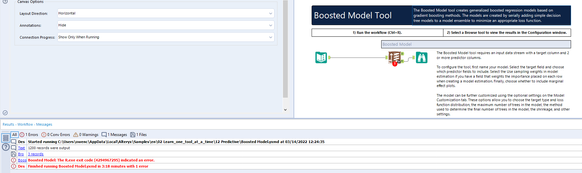

Steps to reproduce:

(as mentioned, it's very inconsistent)

1. Open the Boosted Model example workflow

2. Multiply the maximum number of trees in the model by 10 in the Boosted Model configuration (Model customization)

3. Run the workflow, inspect the results (which are seemingly correct), and the error message in the results window.

Hope this helps!

TheOC

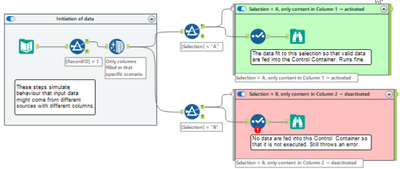

Sometimes, Control Containers produce error messages even if they are deactivated by feeding an empty table into their input connection.

(Note that this is a made up example of something which can happen if input tables might be from different sources and have different columns so that they need separated treatment.)

According to the product team, this is expected behaviour since a selection does not allow zero columns to be selected. This might be true (which I doubt a bit), but it is at least counter-intuitive. If this behaviour cannot be avoided entirely, I have a proposal which would improve the user experience without changing the entire workflow validation logic.

(The support engineer understands the point and has raised a defect.)

Instead of writing messages from inside Control Containers directly to the log output (on screen, in the log file) and marking the workflow as erroneous, I propose to introduce a message (message, warning, error) stack for tools inside Control Containers:

- When the configuration validation is executed:

  - Messages (messages, warnings, errors) produced outside of Control Containers are output to the screen log and to the log files (as today).

  - Messages (messages, warnings, errors) produced inside of Control Containers are not yet output but stored in a message stack.

- At the moment when it is decided whether a Control Container is activated or deactivated:

  - If the Control Container is activated: Write the previously stored message stack for this Control Container to the screen and to the log output, and increase the error and warning counts accordingly.

  - If the Control Container is deactivated: Delete the message stack for this Control Container (without reporting anything to the log and without increasing the error and warning counts).

This would result in a different sequence of messages than today (because everything inside activated Control Containers would be reported later than today). Since there’s no logical order of messages anyways, this would not matter. And it would avoid the apparently illogical case that deactivated Control Containers produce errors.
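The proposal above could look roughly like the following. This is only a sketch of the buffering idea under my own assumptions; the class and method names are hypothetical and do not reflect how the Alteryx engine is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    level: str  # "message", "warning" or "error"
    text: str


@dataclass
class WorkflowLog:
    """Stands in for the screen log / log file and the workflow counters."""
    lines: list = field(default_factory=list)
    errors: int = 0
    warnings: int = 0

    def write(self, msg: Message) -> None:
        self.lines.append(f"{msg.level}: {msg.text}")
        if msg.level == "error":
            self.errors += 1
        elif msg.level == "warning":
            self.warnings += 1


class ControlContainerBuffer:
    """Holds messages from tools inside one Control Container."""

    def __init__(self, log: WorkflowLog):
        self.log = log
        self.pending = []

    def report(self, msg: Message) -> None:
        # During validation: store the message instead of logging it.
        self.pending.append(msg)

    def resolve(self, activated: bool) -> None:
        # Once activation is known: flush the stack or silently drop it.
        if activated:
            for msg in self.pending:
                self.log.write(msg)
        self.pending.clear()


log = WorkflowLog()
log.write(Message("message", "Input: 0 records"))  # outside any container

inactive = ControlContainerBuffer(log)
inactive.report(Message("error", "Select: no fields selected"))
inactive.resolve(activated=False)  # dropped, no error counted

active = ControlContainerBuffer(log)
active.report(Message("warning", "Formula: type mismatch"))
active.resolve(activated=True)  # written to the log now

print(log.lines, log.errors, log.warnings)
```

In this sketch the deactivated container contributes nothing to the log or the counters, while the activated one reports its messages later than messages produced outside containers.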

As it stands now, only a file input tool can be used to pull data from Google BigQuery tables. The issue here is that the data is streamed and processed locally, meaning the power of BigQuery processing isn't actually being leveraged.

Adding BigQuery In-Database as a connection option would appeal to a wide audience. BigQuery's standard SQL is also compliant with the SQL 2011 standard, so this may make for an even easier integration.

The guideline for shapefile field names is quoted below. It recommends using only letters and numbers.

"Spaces and certain characters are not supported in field names. Special characters include hyphens such as in x-coordinate and y-coordinate; parentheses; brackets; and symbols such as $, %, and #. Essentially, eliminate anything that is not alphanumeric or an underscore."

However, many GIS tools can read and write 2-byte field names in shapefiles.

(e.g. QGIS https://qgis.org/en/site/index.html)

Esri Japan also says that shapefiles can use 2-byte field names.

https://www.esrij.com/gis-guide/esri-dataformat/shapefile/

We would like to be able to use 2-byte field names in shapefiles in Alteryx Designer.

(e.g. UTF-8, Shift-JIS)

Thanks,

Kajitani

I believe many have voiced this as a pain point within the Community. Essentially, there is no straightforward method to import multiple password-protected Excel files.

I understand that there is an R solution suggested by several users, however, that is not ideal as it can be difficult to obtain permission from internal Tech team to install the package on the users' computers.

Re-saving them without password is not only a hassle, but also raises concerns for data protection and security.

This may have been raised before, but we would like to see the equivalent of PRICE and YIELD formulas from Excel in Alteryx's Formula tool. I believe many users in the finance industry are using formulas like these frequently and it would be helpful to be able to replicate the formula in Alteryx.

Manually building the formula is possible; however, it is unnecessarily complicated, especially if you are working with different calendar bases, e.g. 30/360 European.
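To give a sense of what has to be rebuilt by hand today, here is a minimal sketch of just the 30/360 European day count, one building block that price and yield calculations rely on. It assumes the usual European rule (a day-of-month of 31 is treated as 30 for both dates); the function names are hypothetical, and this is not a full PRICE or YIELD implementation.

```python
from datetime import date


def days_30e_360(start: date, end: date) -> int:
    """Day count between two dates on the 30/360 European basis."""
    d1 = min(start.day, 30)  # the 31st is treated as the 30th
    d2 = min(end.day, 30)
    return (
        360 * (end.year - start.year)
        + 30 * (end.month - start.month)
        + (d2 - d1)
    )


def year_fraction_30e_360(start: date, end: date) -> float:
    """Fraction of a 360-day year between the two dates."""
    return days_30e_360(start, end) / 360


print(days_30e_360(date(2023, 1, 31), date(2023, 3, 31)))           # 60
print(year_fraction_30e_360(date(2023, 1, 15), date(2023, 7, 15)))  # 0.5
```

Each additional basis (US 30/360, actual/actual, and so on) needs its own rule set, which is where the manual approach becomes tedious.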

Thank you!

At the moment, if a part of your Python code takes more than 30s to run, Jupyter times out and Alteryx cancels the workflow. This makes the Python Tool unusable for anything intensive, and the timeout should be removed by default or be configurable per workflow.

I've made this idea as none of the solutions in these threads feel satisfactory:

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is specifically available for Snowflake environments that are hosted on AWS, but does not provide functionality for environments using Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However, DSN-linked connections do not work in a large server environment, because this would require administrators to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe because part of the configuration of your canvas is held in the DSN, so you cannot rely solely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string: they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
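The manual step described in the note can be sketched in a few lines. This assumes the file DSN is a plain INI-style text file with an `[ODBC]` section containing a `DRIVER` key; the function name and the sample values (including the server name) are hypothetical. It only covers file DSNs and does not interrogate the ODBC manager for system or user DSNs.

```python
import configparser


def file_dsn_to_connection_string(dsn_text: str) -> str:
    """Turn the text of a file DSN into a DSN-less connection string."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # keep attribute names in their original case
    parser.read_string(dsn_text)

    attrs = dict(parser["ODBC"])
    driver = attrs.pop("DRIVER")

    # The driver goes first, wrapped in braces; other attributes keep file order.
    parts = [f"DRIVER={{{driver}}}"]
    parts += [f"{key}={value}" for key, value in attrs.items()]
    return "odbc:" + ";".join(parts)


# Hypothetical file DSN contents, as they might appear in Notepad.
example = """[ODBC]
DRIVER=SnowflakeDSIIDriver
SERVER=example.snowflakecomputing.com
DATABASE=NewTestDB
SCHEMA=PUBLIC
WAREHOUSE=compute_wh
"""

conn = file_dsn_to_connection_string(example)
print(conn)
```

Credentials (UID, PWD) and the table reference would still need to be appended separately, as in the connection strings shown above.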

Thanks all

Sean

Hi all,

Something really interesting I found, and never knew about, is that there are actually In-DB predictive tools. You can find these by having a Connect In-DB tool on the canvas and dragging on one of the many predictive tools.

For instance:

Boosted Model dragged onto an empty canvas:

Boosted model tool deleted, connect in-db tool added to the canvas:

Boosted Model dragged onto the canvas the exact same:

This is awesome! I have no idea how these tools work, I have only just found out they are a thing. Are we able to unhide these? I actually thought I had fallen into an Alteryx Designer bug, however it appears to be much more of a feature.

Sadly, these tools are currently not searchable and do not show up under the In-DB section. I believe they need to be more accessible and well documented so that users can find them.

Cheers,

TheOC

Our company often builds applications where we need the ability for it to dynamically update dropdowns based on a user's previous selections.

For example:

- A user needs to select their Server, database, and table for analysis (3 dropdowns).

- When the user selects their server, a query is run to get a list of all databases on that server. Then the database dropdown will automatically populate with this list of databases.

- The user then makes a database selection, and a query is then run to get all tables within that database. The table dropdown will automatically populate with this list of tables.

- The user makes their table selection, and then runs their analysis using the server, database, and table variables with values that they have selected from each dropdown.
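The flow above can be sketched as follows. This is only an illustration of the dependency logic, not Alteryx Interface Designer code: the in-memory catalog stands in for the metadata queries, and all names are hypothetical.

```python
# Hypothetical catalog standing in for the queries run against each server.
CATALOG = {
    "server_a": {"sales": ["orders", "customers"], "hr": ["employees"]},
    "server_b": {"finance": ["ledger"]},
}


def database_options(server):
    """Databases to show once a server is chosen."""
    return sorted(CATALOG.get(server, {}))


def table_options(server, database):
    """Tables to show once a database is chosen."""
    return sorted(CATALOG.get(server, {}).get(database, []))


class Selection:
    """Tracks the three dropdowns; changing one resets those below it."""

    def __init__(self):
        self.server = self.database = self.table = None

    def choose_server(self, server):
        self.server, self.database, self.table = server, None, None
        return database_options(server)  # repopulates the database dropdown

    def choose_database(self, database):
        self.database, self.table = database, None
        return table_options(self.server, database)  # repopulates tables

    def choose_table(self, table):
        self.table = table
        return (self.server, self.database, self.table)


sel = Selection()
databases = sel.choose_server("server_a")
tables = sel.choose_database("sales")
final = sel.choose_table("orders")
print(databases, tables, final)
```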

We can do this in other programs, but unfortunately the lack of dynamic selections/dependent dropdowns is a big limitation for us when building Alteryx applications. Our current workarounds are chaining applications together, or using PyQt within the workflow. Chaining is clunky and often causes unforeseen issues when uploading to Server with errors that are non-descriptive, and using PyQt comes with Python versioning issues.

If this interactivity can somehow be added to Alteryx applications it would be a huge upgrade to our current Alteryx processes. Any suggestions for further workarounds would also be helpful!

Thank you,

Amanda

Hi,

This is a small thing, but it really messes with my OCD. It would be great if we could manually move the connection lines between tools; this would make large workflows a lot nicer to look at and easier to follow.

I am aware of the wireless option, but I like to see connections; I just want them a bit neater.

Thanks

Assuming some source control or versioning is in place, a formal compare tool would be a nice addition. This would be useful for determining what is different between two versions of a workflow, and that knowledge is very useful when modifying a production process: when formally moving a new (modified) process into production, part of the checks and balances would be to run a formal comparison against the workflow being replaced, and ensure that all differences are accounted for.

This sort of audit is notoriously difficult when the differences are buried deep in the configuration settings of various tools within Alteryx. I do see that the .yxmd files are XML based, so perhaps we could create our own compare tool based thereon, but it would be better (more trustworthy) to have one formally provided by Alteryx. Thanks!
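A home-grown comparison along those lines might start like this. It is a rough sketch that assumes each tool appears as a `Node` element with a `ToolID` attribute and a `Properties` child; the sample documents are made up, and real workflow files contain far more detail (positions, metadata, connections) than is compared here.

```python
import xml.etree.ElementTree as ET


def tool_configs(workflow_xml: str) -> dict:
    """Map each ToolID to the serialized text of its Properties element."""
    root = ET.fromstring(workflow_xml)
    configs = {}
    for node in root.iter("Node"):
        props = node.find("Properties")
        text = ET.tostring(props, encoding="unicode").strip() if props is not None else ""
        configs[node.get("ToolID")] = text
    return configs


def compare_workflows(old_xml: str, new_xml: str) -> dict:
    """Report tools that were added, removed, or whose properties changed."""
    old, new = tool_configs(old_xml), tool_configs(new_xml)
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(t for t in old.keys() & new.keys() if old[t] != new[t]),
    }


# Made-up, heavily simplified documents for illustration only.
old_doc = (
    '<AlteryxDocument><Nodes>'
    '<Node ToolID="1"><Properties><Configuration><Expr>a</Expr></Configuration></Properties></Node>'
    '<Node ToolID="2"><Properties /></Node>'
    '</Nodes></AlteryxDocument>'
)
new_doc = (
    '<AlteryxDocument><Nodes>'
    '<Node ToolID="1"><Properties><Configuration><Expr>b</Expr></Configuration></Properties></Node>'
    '<Node ToolID="3"><Properties /></Node>'
    '</Nodes></AlteryxDocument>'
)

diff = compare_workflows(old_doc, new_doc)
print(diff)
```

Comparing serialized text is sensitive to whitespace and attribute order, so a trustworthy audit tool would need a more careful, element-by-element comparison.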

Tableau v2018.3 introduced multiple table extracts. These are particularly useful for fact table to fact table joins and fact table to entitlement table joins for row-level security where the number of rows created by the join and/or size of join results would be prohibitively large. Also they are useful for fact table to spatial joins where we might have multiple spatial objects (for example custom province/district/health facility catchment) for each row of fact table data.

So in Alteryx I'd like to be able to specify 2+ tables & their join keys and then write out a .hyper multiple tables extract.

Jonathan

I would like Alteryx to offer a native Fuzzy Join tool that allows two datasets with completely different schemas to be joined using fuzzy matching logic (Dice coefficient algorithm, Levenshtein distance algorithm, etc.). Any matches would be output to a new table containing the primary and secondary records, flagged as either exactly matched or fuzzy matched. I want this tool to be supported by Server as well.
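For reference, the two measures named above can be sketched in plain Python, together with a naive join that flags each match as exact or fuzzy. This is a minimal illustration under my own assumptions (character bigrams for Dice, case-insensitive comparison, a fixed distance threshold); the function names are hypothetical, and it compares every pair, so it would not scale to large datasets.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def dice_coefficient(a: str, b: str) -> float:
    """Dice similarity over the sets of character bigrams."""
    grams_a = {a[i:i + 2] for i in range(len(a) - 1)}
    grams_b = {b[i:i + 2] for i in range(len(b) - 1)}
    if not grams_a and not grams_b:
        return 1.0 if a == b else 0.0
    return 2 * len(grams_a & grams_b) / (len(grams_a) + len(grams_b))


def fuzzy_join(left, right, max_distance=2):
    """Pair values whose edit distance is within the threshold."""
    matches = []
    for l_val in left:
        for r_val in right:
            dist = levenshtein(l_val.lower(), r_val.lower())
            if dist <= max_distance:
                matches.append((l_val, r_val, "exact" if dist == 0 else "fuzzy"))
    return matches


print(levenshtein("kitten", "sitting"))     # 3
print(dice_coefficient("night", "nacht"))   # 0.25
print(fuzzy_join(["Acme Corp", "Globex"], ["ACME Corp.", "Initech"]))
```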

Originally posted here: https://community.alteryx.com/t5/Data-Sources/Input-Data-Tool-Can-we-control-use-of-Cursors/m-p/5871...

Hi there,

I've profiled a simple query using SQL Server Profiler (query: Select * from northwind.dbo.orders; row limit: 107; read uncommitted: true). Interestingly, it opens a cursor if you connect via ODBC or SQL Native, but not via OleDB. Full queries and profile details are on the discussion thread above.

However, in some circumstances a cursor is not usable, e.g. https://community.alteryx.com/t5/Data-Sources/Error-SQL-Execute-Cursors-Not-supported-on-Clustered-C... because SQL Server doesn't allow cursors on columnstore indexed tables & columns.

Is there any way (even if we need to manually adjust via the XML settings) to ask Alteryx not to create the cursor and execute directly on the server as written?

Thank you

Sean

Instead of using the arrows, I think it would be nice to be able to drag and drop the questions to rearrange them in the Interface Designer. This would go more hand in hand with the drag and drop experience of Alteryx.

Additionally, when a lot of interface tools are on the canvas, Designer really slows down if you need to rearrange the order of the tools in the Interface Designer. I would like to see if there is any way that this can be sped up.

Thanks!

Hi Alteryx Devs -

I'm working on a simple but cumbersome workflow with a lot of database inputs. It was going slowly, and every time I tried to paste something into it from another workflow, it would lock up and I'd get the 'Workflow must be run for field meta info to be accurate' error. Google tells me that I need to check the 'Disable Auto Configuration' option. OK. It is in User Settings, but this means that my other workflows that don't have problems like this (i.e., 99% of my workflows) will have that functionality applied when it really is only a problem for the minority.

It should be a relatively simple fix to offer this option in the workflow properties instead of in User Settings.

Thanks.

brian

With the continued growth of graph databases, it would be nice for Alteryx to create a new tool set providing input/output connectors for graph databases like Neo4j, which software tools like Pentaho and Talend already have.

Keith.
