Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Hi UX interested parties,

Here are some ideas for you to consider:

1. These lines are BORING and UNINFORMATIVE. I'd like to understand (pic = 1,000 words) more when looking at a workflow.

- A line could communicate:

- Qty of Records

- Size of Data

- Is the data SORTED

- What sort order

- Quality of Data

If you look at lines A, B, C in the picture above. Nothing is communicated. Weight of line, color of line, type of line, beginning line marker/ending line marker, these are all potential ways that we could see a picture of the data without having to get into browse everywhere to see the information. If we hover over the data connection, even more information could appear (e.g. # of records, size of file) without having to toggle the configuration parameters.

2. Wouldn't it be nice to not have to RUN a workflow to know last SAVED metadata (run) of a workflow? I'd like to open a "saved" workflow and know what to expect when I run the workflow. Heck, how long does it take the beast to run is something that we've never seen unless we run it.

3. I'd like to set the metadata to display SORT keys, order. Sort1 Asc, Sort 2 Desc .... This sort information is very helpful for the engine and I'll likely post about that thought. As a preview, when a JOIN tool has sorted data and one of the anchors is at EOF, then why do we need to keep reading from the other anchor? There won't be another matched record (J) anchor. In my example above, we don't ask for the L/R outputs, so why worry about the rest of the join?

4. Have you ever seen a map (online) that didn't display watermark information? I think that the canvas experience should allow for a default logo (like mine above, but transparent) in the lower right corner of the canvas that is visible at all times. Having the workflow name at the top in a tab is nice, but having it display as a watermark is handy.

5. Once the workflow has RUN, all anchors are the same color. How about providing GREY/White or something else on EMPTY anchors instead of the same color? This might help newbies find issues in JOIN configuration too.

6. If the tool has ERRORs you put a RED exclamation mark. I despise warnings, but how about a puke colored question mark? With conversion errors, the lines could be marked to let you know the relative quantity of conversion errors (system messages have a limit)
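The early-exit idea in item 3 can be sketched in a few lines. This is only an illustration of a sort-merge inner join over already-sorted inputs, not how the Alteryx engine implements the Join tool; the function names and the sample rows are made up for the example.

```python
def counting(rows, counter):
    """Yield rows one by one, counting how many were actually read."""
    for row in rows:
        counter[0] += 1
        yield row


def sorted_inner_join(left, right):
    """Inner-join two iterables of (key, value) rows sorted ascending by key.

    Only matched (J) rows are produced. As soon as either input is
    exhausted the loop ends, so the rest of the other input is never read.
    """
    left_it, right_it = iter(left), iter(right)
    l = next(left_it, None)
    r = next(right_it, None)
    while l is not None and r is not None:
        if l[0] < r[0]:
            l = next(left_it, None)
        elif l[0] > r[0]:
            r = next(right_it, None)
        else:
            key = l[0]
            # Collect every right row sharing this key (handles duplicates).
            right_group = []
            while r is not None and r[0] == key:
                right_group.append(r)
                r = next(right_it, None)
            # Pair each left row with the same key against that group.
            while l is not None and l[0] == key:
                for rr in right_group:
                    yield (key, l[1], rr[1])
                l = next(left_it, None)


# Hypothetical sample data: the left input ends long before the right one.
left_rows = [(1, "a"), (2, "b")]
right_rows = [(1, "x"), (2, "y"), (3, "z"), (4, "w"), (5, "v")]
right_reads = [0]
joined = list(sorted_inner_join(left_rows, counting(right_rows, right_reads)))
```

In this sample the join stops once the left input hits EOF, so the last two right-hand rows are never pulled from the source. That only works when the L/R outputs are not requested, as in the example from the post.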

Just a few top of mind things to consider ....

Cheers,

Mark

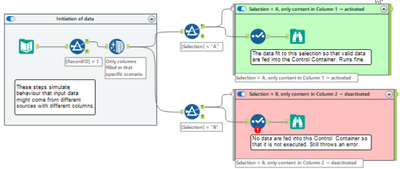

Sometimes, Control Containers produce error messages even if they are deactivated by feeding an empty table into their input connection.

(Note that this is a made up example of something which can happen if input tables might be from different sources and have different columns so that they need separated treatment.)

According to the product team, this is expected behaviour since a selection does not allow zero columns selected. This might be true (which I doubt a bit), but it is at least counter-intuitive. If this behaviour cannot be avoided in total, I have a proposal which would improve the user experience without changing the entire workflow validation logic.

(The support engineer understands the point and has raised a defect.)

Instead of writing messages inside Control Containers directly to the log output (on screen, in logfile) and to mark the workflow as erroneous, I propose to introduce a message (message, warning, error) stack for tools inside Control Containers:

- When the configuration validation is executed:

- Messages (messages, warnings, errors) produced outside of Control Containers are output to the screen log and to the log files (as today).

- Messages (messages, warnings, errors) produced inside of Control Containers are not yet output but stored in a message stack.

- At the moment when it is decided whether a Control container is activated or deactivated:

- If Control Container activated: Write the previously stored message stack for this Control Container to the screen and to the log output, and increase error and warning counts accordingly.

- If Control Container deactivated: Delete the message stack for this Control Container (w/o reporting anything to the log and w/o increasing error and warning count).

This would result in a different sequence of messages than today (because everything inside activated Control Containers would be reported later than today). Since there’s no logical order of messages anyways, this would not matter. And it would avoid the apparently illogical case that deactivated Control Containers produce errors.
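The proposed message stack can be sketched roughly as follows. This is a minimal illustration of the idea, not Alteryx code: the class `ValidationLog`, its methods, and the container ids are all hypothetical names chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Message:
    level: str  # "message", "warning" or "error"
    text: str


class ValidationLog:
    """Defers messages raised inside Control Containers until their state is known."""

    def __init__(self):
        self.output = []  # what reaches the screen log and log file
        self.counts = {"message": 0, "warning": 0, "error": 0}
        self._deferred = defaultdict(list)  # container id -> stored messages

    def report(self, msg, container_id=None):
        """Emit at once outside containers; store on the stack inside one."""
        if container_id is None:
            self._emit(msg)
        else:
            self._deferred[container_id].append(msg)

    def resolve(self, container_id, activated):
        """Call when it is decided whether the container runs."""
        pending = self._deferred.pop(container_id, [])
        if activated:
            for msg in pending:
                self._emit(msg)
        # Deactivated: the stored messages are dropped without being counted.

    def _emit(self, msg):
        self.output.append(msg)
        self.counts[msg.level] += 1


log = ValidationLog()
log.report(Message("message", "Input: 0 records read"))
log.report(Message("error", "Select: no columns selected"), container_id="cc1")
log.report(Message("warning", "Formula: type mismatch"), container_id="cc2")
log.resolve("cc1", activated=False)  # empty input, container is skipped
log.resolve("cc2", activated=True)
```

After both containers are resolved, the workflow ends with zero errors and one warning, because the error raised inside the deactivated container never reaches the log.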

Would love to have the ability to connect S3 to Alteryx using an AWS IAM role instead of needing an AWS access key/secret key.

IT will not hand out the Access/secret key so it would be great to connect to S3 without needing a password.

Hello,

According to wikipedia :

A partition is a division of a logical database or its constituent elements into distinct independent parts. Database partitioning is normally done for manageability, performance or availability reasons, or for load balancing. It is popular in distributed database management systems, where each partition may be spread over multiple nodes, with users at the node performing local transactions on the partition. This increases performance for sites that have regular transactions involving certain views of data, whilst maintaining availability and security.

Well, basically, you split your table into several parts, according to a field. It's very useful in terms of performance when your workflows are in delta or when all your queries are based on a date (e.g. my table helps me to follow my sales month by month, so I partition my table by month).

So the idea is to support that in Alteryx. It would add good value, especially in In-DB workflows.

Best regards,

Simon

Alteryx Server is great, but very costly. It would help to have the ability to install the Alteryx engine without the Designer, allowing you to share Workflows/Apps with users directly. This could be licensed on a per-user basis as well, but at a reduced cost.

This also allows for some more advanced workflows that do not work in the Gallery.

At the moment containers either expand and overlap other tools, or you have to leave space for them (defeating the original purpose of using them). Is there a way we can have the container's expansion shift the workflow so the other tools shift down / right to account for this expansion?

It is my understanding that hidden in each yxdb is metadata. The following use case is common:

As an Alteryx Developer/Designer I want to know the source of a yxdb.

Ideally, I would know as much about the workflow (name, path, workflow version, AYX version, userid) as possible.

It would be awesome to be able to construct a workflow that would allow me to search the metadata of yxdb's on my client computers quickly.

Cheers,

Mark

We all love seeing this. And, it's fairly easy to fix, just go find the macro and insert a new copy. But, then you have to remember the configuration and hope that it was simple.

With the tool that's there, the XML still contains the configuration, all that's missing is the tool path. It would be great to be able to right click and repair the path from the context of the missing macro.

Who needs a 1073741823 sized string anyways? No one, or close enough to no one. But, if you are creating some fancy new properties in the formula tool and just cranking along and then you see that your **bleep** data stream is 9G for nine rows of data, you find yourself wondering what the hell is going on. And then you walk your way down the workflow for a while finding slots where the default 1073741823 value got set, changing them to non-insane sized strings, and then your data flow is more like 64kb and your workflow runs in 3 seconds instead of 30 seconds.

Please set the default size for formula tools to a non-insane value that won't need changing in 99.99999% of use cases. Thank you.
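For what it's worth, the "9G for nine rows" figure lines up with simple arithmetic if the stream size is estimated from the declared field width. That is an assumption about how the number is derived, not a statement about how the engine stores strings, and the helper name below is made up.

```python
DEFAULT_SIZE = 1_073_741_823  # the default string size quoted in the post (2**30 - 1)


def declared_bytes(rows, field_size, bytes_per_char=1):
    """Upper bound on stream size if every row is counted at its declared width."""
    return rows * field_size * bytes_per_char


nine_rows = declared_bytes(9, DEFAULT_SIZE)
nine_rows_gib = nine_rows / 2**30  # just under 9 GiB
```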

I would like to suggest creating a fix to allow the In-DB Connect tool's custom SQL to read Common Table Expressions. As of 2018.2, the SQL fails because In-DB tools wrap everything in a select * statement. Since CTEs need to start with WITH, this causes the SQL to error out. This would be a huge help instead of having to write nested sub selects in long, complex SQL code!
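To make the problem and the current workaround concrete, here is a small sketch using Python's built-in `sqlite3`. The `sales` table, the queries, and the `wrap_like_indb` helper are hypothetical; the exact wrapper text the In-DB tools generate is an assumption based on the description above. SQLite itself accepts a WITH clause inside a subquery, so the wrapped text is only built as a string here, not used to reproduce the failure.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 10), ("north", 5), ("south", 7)],
)

# The query as one would like to write it, with a CTE.
cte_sql = """
WITH totals AS (
    SELECT region, SUM(amount) AS total FROM sales GROUP BY region
)
SELECT region, total FROM totals WHERE total > 8
"""

# The workaround: the same logic as a nested sub select.
nested_sql = """
SELECT region, total FROM (
    SELECT region, SUM(amount) AS total FROM sales GROUP BY region
) AS totals WHERE total > 8
"""


def wrap_like_indb(custom_sql):
    """Hypothetical stand-in for the select * wrapper described in the post."""
    return f"SELECT * FROM ({custom_sql.strip()}) AS t"


# WITH now sits inside the parentheses, which some databases reject.
wrapped = wrap_like_indb(cte_sql)

cte_rows = conn.execute(cte_sql).fetchall()
nested_rows = conn.execute(nested_sql).fetchall()
```

Both forms return the same rows, so the nested version works today, but it gets hard to read once several CTEs build on each other.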

Currently the install of AIS is tied to a specific version of Designer. However due to the feature changes being brought to AIS it would be great to be able to upgrade the AIS install separate to the version of Designer.

It might be that this is only possible for a certain number of releases, due to underlying dependencies such as the Python version, but it would be great if, for example, I could get the newer AIS features without needing to upgrade Designer (which is set by IT policy).

At the moment, at least for Postgres and ODBC connections, the DCM only supports a named DSN that must be installed on each machine running Designer or Server. However, the ODBC admin function is admin-only within my company, which makes DCM more trouble than it is worth to use.

Connection strings work well in the workflows, have been implemented on the gallery before, and do not require access to the ODBC admin to implement. Could DCM please be improved to support native connection strings?

When an email body is imported using the latest version of the Outlook 365 tool, the tool removes the new line separators from the message body, which makes it difficult to parse relevant information out of it. The new line separators are present before the message is imported into Alteryx, as can be verified by importing the same message using different tools (for example, Python or Power Automate). Without new line separators it is not possible to accurately parse the message body using Alteryx. Please enhance the Outlook 365 tool so that it doesn't remove new line separators from the message body.

This limitation of the Outlook 365 tool has been discussed in the community

Please add official support for newer versions of Microsoft SQL Server and the related drivers.

According to the data sources article for Microsoft SQL Server (https://help.alteryx.com/current/DataSources/SQLServer.htm), and validation via a support ticket, only the following products have been tested and validated with Alteryx Designer/Server:

Microsoft SQL Server

Validated On: 2008, 2012, 2014, and 2016.

- No R versions are mentioned (2008 R2, for instance)

- SQL Server 2017, which was released in October of 2017, is notably missing from the list.

- SQL Server 2019, while fairly new (~6 months old), is also missing

This is one of the most popular data sources, and the lack of support for newer versions (especially a 2+ year old product like SQL Server 2017) is hard to fathom.

ODBC Driver for SQL Server/SQL Server Native Client

Validated on ODBC Driver: 11, 13, 13.1

Validated on SQL Server Native Client: 10,11

- ODBC Driver 17+ is not mentioned, even though it was released in February of 2018. https://docs.microsoft.com/en-us/sql/connect/odbc/windows/release-notes-odbc-sql-server-windows?view...

- SQL Server Native Client is deprecated. It is being replaced by Microsoft OLE DB Driver for SQL Server. However, there is not a mention of Microsoft OLE DB Driver for SQL Server. The latest version of this is 18.3.0. https://docs.microsoft.com/en-us/sql/connect/oledb/release-notes-for-oledb-driver-for-sql-server?vie...

...and now for probably the most trivial request in a long time, but also one of the most annoying things (for me anyway)..........

When viewing a browse window, it's so darn awesome to be able to sort and search. However, it would be even awesomeer (yes, I just made up a word) if when you actually conducted a sort or search, you could make your selection (for sorts) or type in your criteria (for searches) and simply press the "Enter" button on the keyboard and have it do the same thing that selecting "Apply" with the mouse does. This is common Windows functionality and I think should be easy to implement.

A common problem with the R tool is that it outputs "False Errors" like the following: "The R.exe exit code (4294967295) indicated an error"

I call this a false error because data passes out of the R script the same as if there were no error. As such, this error can generally be ignored. In my use case, however, my R tool is embedded within an iterative macro, and the error causes the iterator to stop running.

I was able to create a workaround by moving the R tool to a separate workflow and calling it from the CReW runner macro within my iterator, effectively suppressing the error message, but this solution is a bit clumsy, requires unnecessary read/writes, and uses nonstandard macros.

I propose the solution suggested by @mbarone (https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509) to only generate an error when the R return code is 1, indicating a true error, and to either ignore these false errors or pass them as warnings. This will allow R scripts and R-based tools to be embedded within iterative macros without breaking.
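As a rough sketch of that rule: the code 4294967295 in the message is simply -1 read as an unsigned 32-bit number, and the proposal is to treat only a return code of 1 as a real error. The function names and the three result labels below are invented for illustration.

```python
def to_signed32(code):
    """Reinterpret an unsigned 32-bit exit code as a signed value."""
    code &= 0xFFFFFFFF
    return code - 0x1_0000_0000 if code >= 0x8000_0000 else code


def classify_exit(code):
    """Proposed rule: 0 is success, 1 is a true error, anything else a warning."""
    if code == 0:
        return "ok"
    if code == 1:
        return "error"
    return "warning"


false_error = 4294967295  # the code from the message above
signed = to_signed32(false_error)    # -1
outcome = classify_exit(false_error)  # downgraded to a warning
```

With a rule like this, an iterative macro would keep running on the false error and stop only when R reports a return code of 1.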

In order to make the connections between Alteryx and Snowflake even more secure we would like to have the possibility to connect to snowflake with OAuth in an easier way.

The connections to snowflake via OAuth are very similar to the connections Alteryx already does with O365 applications. It requires:

- Tenant URL

- Client ID

- Client Secret

- Get Authorization token provided by the snowflake authorization endpoint.

- Give access consent (a browser popup will appear)

- With the Authorization Code, the client ID and the Client Secret make a call to retrieve the Refresh Token and TTL information for the tokens

- Get the Access Token every time it expires

With this an automated workflow using OAuth between Alteryx and Snowflake will be possible.
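The steps above could look roughly like this in code. It only builds the requests and does not send them. The endpoint paths (`/oauth/authorize`, `/oauth/token-request`) and the parameter names are my reading of Snowflake's OAuth documentation and should be checked against it; the tenant URL, client id, secret, and redirect URI are placeholders.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def authorization_url(tenant_url, client_id, redirect_uri):
    """URL to open in the browser so the user can give access consent."""
    query = urlencode(
        {"client_id": client_id, "response_type": "code", "redirect_uri": redirect_uri}
    )
    return f"{tenant_url.rstrip('/')}/oauth/authorize?{query}"


def token_request(tenant_url, client_id, client_secret, form):
    """POST to the token endpoint, authenticated with client id and secret."""
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    return Request(
        f"{tenant_url.rstrip('/')}/oauth/token-request",
        data=urlencode(form).encode(),
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def exchange_code(tenant_url, client_id, client_secret, code, redirect_uri):
    """Trade the authorization code for the refresh token and TTL information."""
    form = {"grant_type": "authorization_code", "code": code, "redirect_uri": redirect_uri}
    return token_request(tenant_url, client_id, client_secret, form)


def refresh_access_token(tenant_url, client_id, client_secret, refresh_token):
    """Get a new access token whenever the current one expires."""
    form = {"grant_type": "refresh_token", "refresh_token": refresh_token}
    return token_request(tenant_url, client_id, client_secret, form)


# Placeholder values, for illustration only.
TENANT = "https://example.snowflakecomputing.com"
consent_url = authorization_url(TENANT, "my-client-id", "https://localhost/callback")
refresh_req = refresh_access_token(TENANT, "my-client-id", "my-secret", "r1")
```

Only the consent step needs a browser. Once the refresh token is stored, the refresh call can run unattended, which is what makes a scheduled workflow possible.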

You can find a more detailed explanation in the attached document.

When you render out to an Excel file, the Excel file is created as a new file. You cannot render to an existing Excel file.

I'd like to see this functionality. I have a client who has a workbook with multiple formatted sheets and they'd like to render an additional sheet of formatted data out from Alteryx into the existing workbook.

Our company has a need to link a new data source in Athena. We have been able to establish a connection using the input functionality however the connection is so slow it is unusable. We need to have Alteryx build an In Database option for Athena to allow us to link our data lake to Alteryx.

At present, Alteryx allows for users to run 2 versions of Alteryx at once - one installed using the "Admin Installer" and one via the "non-admin installer"

However, in corporate environments, only the Admin Installer can be used (all installers are repackaged for corporate environment / endpoint management)

This leads to a situation where we cannot run two or more different versions of Alteryx on one machine (like you can with Visual Studio or other platforms). This also prevents us from participating in the BETA program because the BETA version would overwrite the user's current version. Finally, this also makes version upgrades more risky since we cannot run the new version in parallel for a period to evaluate and identify any issues.

Request: Please can you change the installer for Alteryx to default to parallel install per version - so that a user can run 2019.1; 2019.2; and 2019.2 BETA on one machine in a way that is fully isolated (i.e. no shared components - have to be able to uninstall one instance cleanly and leave the others in a fully functional state).

Many thanks

Sean
