Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))
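Until the tool offers a built-in split, the balancing described above (an equal number of images per label) could be approximated outside the tool. This is only a rough sketch: the `balanced_sample` helper, the file names, and the downsampling strategy are assumptions for illustration, not how the Image Recognition tool works.

```python
import random
from collections import defaultdict


def balanced_sample(items, seed=0):
    """Downsample (path, label) pairs so every label has the same count."""
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    # The smallest class sets the per-label quota.
    quota = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], quota):
            balanced.append((path, label))
    return balanced


# Hypothetical file names: three images labelled "0", one labelled "1".
images = [("a.png", "0"), ("b.png", "0"), ("c.png", "0"), ("d.png", "1")]
print(balanced_sample(images))
```

Downsampling discards data from the larger classes; oversampling the smaller ones would be the alternative if images are scarce.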


The email tool, such a great tool! And such a minefield. Both of the problems below could and maybe should be remedied on the SMTP side, but that's applying a pretty broad brush for a budding Alteryx community at a big company. Read on!

"NOOOOOOOOOOOOOOOOOOO!"

What I said the first time I ran the email tool without testing it first.

1. Can I get a thumbs up if you ever connected a data source directly to an email tool thinking "this is how I attach my data to the email" and instead sent hundreds... or millions of emails? Oops. Alteryx, what if you added an expected limit, as is done with the Append tool: "Warn or Error if sending more than n emails." (It would be super cool if it could also detect more than n emails to the same address, but I'm not holding my breath.)

2. Make spoofing harder. It's super useful, but... well, my company frowns on this kind of thing.
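The limit proposed in point 1 could look roughly like the check below. It is a sketch in plain Python, not part of the Email tool: the function name `check_email_volume` and both thresholds are assumptions made up for illustration.

```python
from collections import Counter


def check_email_volume(recipients, max_total=10, max_per_address=1):
    """Return warnings about a pending send; nothing is actually sent."""
    warnings = []
    if len(recipients) > max_total:
        warnings.append(
            f"{len(recipients)} emails exceed the limit of {max_total}"
        )
    # Compare addresses case-insensitively so A@x.com and a@x.com match.
    per_address = Counter(address.lower() for address in recipients)
    for address, count in per_address.items():
        if count > max_per_address:
            warnings.append(f"{count} emails addressed to {address}")
    return warnings


# One row per record, all pointing at the same (placeholder) address.
print(check_email_volume(["boss@example.com"] * 3, max_total=2))
```

Whether a non-empty result should warn or stop the run would be a configuration choice, as it is on the Append tool.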

-

Category Reporting

-

Desktop Experience

-

Feature Request

-

Tool Improvement

Bring back the Cache checkbox for Input tools. It's cool that we can cache individual tools in 2018.4.

The catch is that for every cache point I have to run the entire workflow. With large workflows that can take a considerable amount of time, and it hinders development because I have to run the workflow over and over just to cache all my data.

Add the cache checkbox back for input tools to make the software more user friendly.

-

Tool Improvement

Frequently, with more complicated tool configurations, I end up having to set up certain elements over and over again. It would be great to have a one-click "use this as the default" configuration that would follow my profile and apply to all future drags of that tool onto the workflow. Configuration elements that depend on the input fields would not be impacted.

An "apply all" feature that applies the same configuration elements to all tools of the same type would also be useful.

Example Configuration Elements

Comment Tool - Shape, Font, text Color, Background Color, Alignment...

Tool Container - Text Color, fill color , border color, transparency, margin

Table Tool - Default Table Settings

Union Tool - "Auto config by name", Actions when fields differ

Data Cleansing - all configuration elements

Sample - all configuration elements

-

Setup & Configuration

-

Tool Improvement

-

Tool Improvement

I would like to request that the Python tool metadata either be automatically populated after the code has run once, or a simple line of code added in the tool to output the metadata. Also, the metadata needs to be cached just like all of the other tools.

As it sits now, the Python tool is nearly unusable in a larger workflow. This is because it does not save or pass metadata in a workflow. Most other tools cache temporary metadata and pass it on to the next tool in line. This allows for things like selecting columns and seeing previews before the workflow is run.

Each time an edit is made to the workflow, the workflow must be re-run to update everything downstream of the Python tool. As you can imagine, this can get tedious (unusable) in larger workflows.

Alteryx support has replied with "this is expected behavior" and "It is giving that error because Alteryx is doing a soft push for the metadata but unfortunately it is as designed."

-

Category Predictive

-

Desktop Experience

-

Tool Improvement

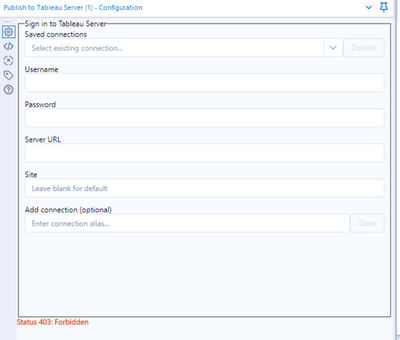

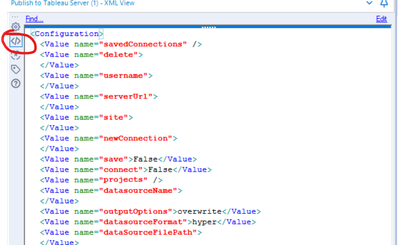

Version 2.0 of the publish to Tableau tool does not work for the initial authentication if Tableau Server has CORS enabled.

This only impacts the UI for the tool that completes the sign in to Tableau Server and provides back the list of projects, data source names, etc.

When CORS is enabled, a 403 error is received with a response of invalid CORS request.

If the XML on the tool is manually edited and the tool is run, it works fine to publish to Tableau, with or without CORS enabled.

Version 1.0.9 also works with no issues, but it is not the ideal solution when users are on newer versions of Alteryx Designer.

Additionally, calls to the REST API from a local desktop using Postman or Python work with or without CORS enabled.

Based on conversations with Alteryx support, the tool was not tested with CORS enabled, thus the bundle.js file completing the authentication for the GUI must not account for Tableau Servers with CORS enabled.

For those who build solutions with Tableau Server that use the REST API (e.g. custom portals), CORS must be enabled for them to function, but enabling it limits the ability to use version 2.0 of the publish to Tableau tool.

-

Tool Improvement

API Security requirements are constantly evolving and strengthening. As API architectures migrate from traditional authentication models (Basic, OAuth, etc.) to more secure, certificate-based models, like MTLS/MSSL, leveraging Alteryx Designer will become increasingly difficult, especially for larger organizations trying to scale the use of Alteryx across a large user base, with vastly diverse skillsets.

I realize issuing API calls with certificates is possible via the Run Command tool. We consider this a temporary workaround, and not a permanent, strategic solution. The Run Command tool can be clunky to use when passing in variables and passing the output back into the workflow for downstream processing.

Therefore, I would like to request a more scalable approach to issuing MTLS/MSSL API calls. Can an option be added to the Download Tool to allow for certificates to be passed on API calls?
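For reference, this is roughly what a certificate-based (mutual TLS) call involves when written by hand with Python's standard library. It is a sketch of the general technique, not a description of how the Download tool would implement it; the helper name `build_mtls_opener`, the file paths, and the URL are placeholders.

```python
import ssl
import urllib.request


def build_mtls_opener(cert_file, key_file, ca_file=None):
    """Build a urllib opener that presents a client certificate."""
    # Verify the server against the default (or a supplied) CA bundle.
    context = ssl.create_default_context(cafile=ca_file)
    # Present our own certificate so the server can authenticate the client.
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    handler = urllib.request.HTTPSHandler(context=context)
    return urllib.request.build_opener(handler)


# Usage (placeholder paths and URL):
# opener = build_mtls_opener("client.pem", "client.key")
# with opener.open("https://api.example.com/v1/data") as response:
#     body = response.read()
```

The only inputs beyond an ordinary HTTPS call are the certificate and key paths, which is why an option on the Download tool seems like a natural fit.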

-

API

-

Tool Improvement

-

Tool Improvement

Maybe it was a lack of planning, but I've had a need to rename a variable within a workflow and would like to (outside of the XML view) be able to rename the variable so that downstream tools don't have to be reconfigured (e.g. formula, join, union).

-

General

-

Tool Improvement

Hello .. me again!

Please can you fix the copy and paste of renames across fields. It's a behavior that I see in many tools' grids and it drives me mad. It's not just the Select tool.

Take the attached screenshot. In the Select tool, I've renamed "test 2" to "rename2". Fine, it works. No issue.

I then copy rename2 and paste it into the test3 field, and it copies the entire row's data (and metadata) into that little box: tabs, spaces, the lot. I end up with something like the screenshot. I'm really not sure it was meant to be designed this way, as I can't see the point.

Please can you fix this bug.

Jay

-

Tool Improvement

-

User Experience Design

Now

File Specification: Specify the type of files to return.

- *.*: Return all file types in the specified directory. The default value.

- *.csv: Return all csv files in the specified directory.

- temp*.*: Return all files that begin with temp in the file name: temp1.txt and temp_file.yxdb

Idea

Currently, the file specification option can only be a single pattern or all files.

Would it be possible to specify multiple file types, for example *.csv or *.xlsx?
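To illustrate the requested behavior, here is a small sketch of matching a file name against several wildcard patterns at once. The `|` separator and the `match_any` helper are assumptions chosen for the example; the Directory tool does not accept this syntax today.

```python
from fnmatch import fnmatchcase


def match_any(filename, spec):
    """True if filename matches any pattern in a '|'-separated spec."""
    patterns = [p.strip() for p in spec.split("|") if p.strip()]
    # Lower-case both sides so the match is case-insensitive on every OS.
    return any(fnmatchcase(filename.lower(), p.lower()) for p in patterns)


files = ["sales.csv", "sales.xlsx", "notes.txt", "temp1.txt"]
print([f for f in files if match_any(f, "*.csv|*.xlsx")])
# → ['sales.csv', 'sales.xlsx']
```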

-

Tool Improvement

Alteryx should raise a Conversion Error if re-sizing of a string field in a Select tool results in data truncation. It does this for integers but if a string is truncated there is no indication of this in the workflow output.
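The check being asked for is simple to state in code. The sketch below is plain Python rather than anything from the Select tool, and the `resize_strings` name and message wording are made up for illustration: it truncates each value to the new size and records which rows lost data.

```python
def resize_strings(values, size):
    """Truncate each string to `size` characters and report what was lost."""
    resized, truncated_rows = [], []
    for row, value in enumerate(values):
        if len(value) > size:
            truncated_rows.append(row)  # remember where data was cut
        resized.append(value[:size])
    return resized, truncated_rows


resized, truncated = resize_strings(["Alteryx", "Select", "ok"], 6)
if truncated:
    print(f"Conversion warning: {len(truncated)} value(s) truncated, rows {truncated}")
```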

-

Tool Improvement

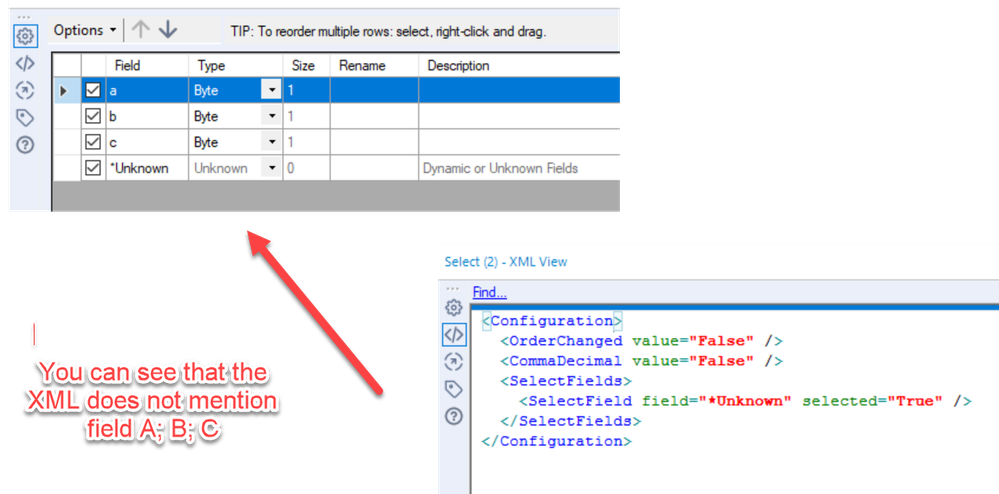

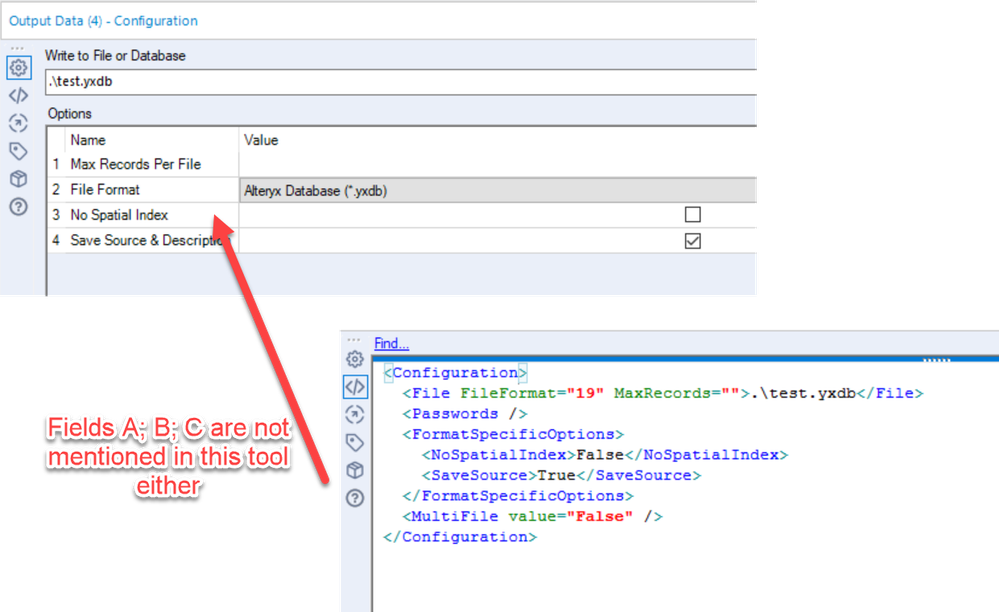

We have a need to be able to trace the lineage of fields being processed through Alteryx - and it has to be done at a field level to satisfy our regulator. In essence, we have to be able to show exactly where a particular field came from, and demonstrate that we can trust this field. NOTE: If we could do this - then we could also use this information to make EVERY canvas faster, by checking for unused fields and making suggestions to remove these unused fields early in the flow.

In order to do this - it would be great if there were an option to force explicit field names in the Alteryx XML so that we can trace a specific field.

- Alteryx currently only makes a note of a field name if it is changing that field which is very economical - but it makes tracing fields impossible.

- Desire is for every tool to write the field list to the XML definition for every field that it knows about (just like it looks in the UI)

cc: @AdamR_AYX @jpoz @Claje

Example:

Here's a simple canvas:

- 3 fields coming in from an input

- Select tool with these 3

- output of three fields into a file

These three fields are not mentioned anywhere in the XML
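If every tool wrote its field list to the XML, tracing a field would reduce to a small parsing job. The sketch below shows the idea on a made-up fragment: the `Fields`/`Field` elements are hypothetical and are not the real Alteryx workflow schema, which (as noted above) does not record these names today.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified fragment in which each tool lists its fields.
WORKFLOW_XML = """
<Nodes>
  <Node ToolID="1">
    <Fields><Field name="id"/><Field name="name"/><Field name="amount"/></Fields>
  </Node>
  <Node ToolID="2">
    <Fields><Field name="id"/><Field name="amount"/></Fields>
  </Node>
</Nodes>
"""


def fields_by_tool(xml_text):
    """Map each ToolID to the field names that tool declares."""
    root = ET.fromstring(xml_text)
    return {
        node.get("ToolID"): [f.get("name") for f in node.iter("Field")]
        for node in root.iter("Node")
    }


def dropped_between(lineage, upstream, downstream):
    """Fields present at the upstream tool but absent downstream."""
    return [f for f in lineage[upstream] if f not in lineage[downstream]]


lineage = fields_by_tool(WORKFLOW_XML)
print(dropped_between(lineage, "1", "2"))
```

The same lookup would support the performance suggestion: a field that is dropped and never used in between is a candidate for removal earlier in the flow.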

-

General

-

Tool Improvement

-

User Experience Design

Hello Dev Gurus -

The message tool is nice, but anything you want to learn about what is happening is problematic because the messages you are writing to try to understand your workflow are lost in a sea of other messages. This is especially problematic when you are trying to understand what is happening within a macro and you enable 'show all macro messages' in the runtime options.

That being said, what would really help is for messages created with the message tool to be tagged as user-created messages. Then, at message evaluation time, you get all errors / all conversion warnings / all warnings / all user-defined messages. That way, when you write an iterative macro and are giving yourself the state of the data on a run-by-run basis, you can just go to a panel that shows only your messages, and not the entire syslog, which is like drinking out of a fire hose.

Thank you for attending my TED Talk regarding Message Tool Improvements.

-

API SDK

-

Category Developer

-

Feature Request

-

Tool Improvement

The new Paste Before/After feature is awesome, as is the Cut & Connect Around.

https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Paste-Before-After/idc-p/510292#M12071

What would be even better is to allow the combination of the two. For example, it is not currently possible to copy or cut multiple tools and paste before/after, as this functionality only works when a single tool is copied.

Thanks,

Joe

-

Feature Request

-

General

-

Tool Improvement

-

User Experience Design

I see many posts where users want to view numeric or string data as monetary values. I think that it would be friendly to have a masking option (like Excel) where you could choose a format or customize one for display. The next step would be to apply the formatting to the workflow so that folks who want to export the data can do so.

cheers,

mark

-

Feature Request

-

Localization

-

Tool Improvement

-

User Experience Design

Sometimes I want to test portions of a workflow independently of other portions. I find myself adding containers just so I can disable some of the time-consuming portions that are not part of my test. It would be nice to be able to enable/disable any portion of a workflow on the fly. Or maybe just disable/enable any connection with a right-click.

Thanks!

Gary

-

Tool Improvement

At the moment we are using Alteryx and Tableau to publish data from the Azure environment. Our focus is on publishing the data with the ADLS Connector. It would be perfect for us if Parquet were supported as well. In the end, we are competing against Power BI, which does support Parquet files.

-

Tool Improvement

When the append tool detects no records in the source, it throws a warning. I would like to have the ability to suppress this warning. In general, all tools should have similar warning/error controls.

-

Category Join

-

Desktop Experience

-

Tool Improvement

Idea:

I know cache-related ideas have already been posted (cache macros; cache tools), but I would like it if cache were simply built into every tool, similar to the way it is on the Input Tool.

Reasoning:

During workflow development, I'll run the workflow repeatedly, and especially if there is sizeable data or an R tool involved, it can get really time-consuming.

Implementation ideas:

I can see where managing cache could be tricky: in a large workflow processing a lot of data, nobody would want to maintain dozens of copies of that data. But there may be ways of just monitoring changes to the workflow in order to know if something needs to be rebuilt or not: e.g. suppose I cache a Predictive Tool, and then make no changes to any tool preceding it in the workflow... the next time I run, the engine should be able to look at "cache flags" and/or "modified tool flags" to determine where it should start: basically start at the "furthest along cache" that has no "modified tools" preceding it.

Anyway, just a thought.
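The "furthest along cache with no modified tools preceding it" rule can be written down in a few lines. This is only a sketch of the idea for a strictly linear workflow; the function name and the flag sets are assumptions for illustration, and it says nothing about how the engine tracks state internally.

```python
def first_tool_to_run(tools, cached, modified):
    """Return the index of the first tool that must be re-run.

    tools:    tool names in execution order (a linear workflow is assumed)
    cached:   set of tool names whose output is saved
    modified: set of tool names changed since the last run
    """
    start = 0
    for index, name in enumerate(tools):
        if name in modified:
            break  # caches at or after a modified tool are stale
        if name in cached:
            start = index + 1  # resume just after this cached tool
    return start


tools = ["Input", "Join", "Predictive", "Output"]
cached = {"Input", "Predictive"}
print(first_tool_to_run(tools, cached, modified={"Output"}))  # → 3
print(first_tool_to_run(tools, cached, modified={"Join"}))    # → 1
```

A real workflow is a graph rather than a list, so the same rule would have to be applied per branch, but the decision at each tool stays the same.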

-

Engine

-

Feature Request

-

Tool Improvement
