Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


The "Manage Data Connections" tool is fantastic for saving credentials alongside the connection, so you don't have to worry that you've embedded a password when you save the workflow.

Imagine if there were a similar utility to handle credentials/environment variables:

- I could create an entry, give it a description, a username, and an encrypted password stored in my options, then refer to that for configurations/values throughout my workflows.

- Tableau credentials in the publish to tableau macro

- Sharepoint Credentials in the sharepoint list connector

- When my password changes I only have to change it in one place

- If I hand off the workflow to another user, I don't have to scan the XML to make sure I'm not passing them my password

- When a user who doesn't have a corresponding entry in their credentials manager opens my workflow, they would be prompted, using my description, to add it.

- Entries could be exported and shared as well (with passwords scrubbed)

Example Entry Tableau:

| Field | Value |
|---|---|
| Alias | Tableau Prod |
| Description | Tableau Production Server |
| UserID | JPhillips |
| Password | ********* |
| + | |

Then when configuring a tool you could put in something like [Tableau Prod].[Password] and it would read in the value.

Or maybe for Sharepoint:

| Field | Value |
|---|---|
| Alias | TeamSP |
| Description | Team sharepoint location |
| UserID | JPhillips |
| Password | ********* |
| URL | http://sharepoint.com/myteam |
| + | |

Or perhaps for a team file location:

| Field | Value |
|---|---|
| Alias | TeamFiles |
| Description | Root directory for team files |
| Path | \\server.net\myteam\filesgohere |
| + | |

Any of these values could be referenced in tool configurations, formulas, and macro inputs by specifying the Alias and field.
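To make the `[Alias].[Field]` idea concrete, here is a minimal Python sketch of how such references might be resolved against a store of entries, and how entries could be exported with passwords scrubbed. Everything in it is an assumption for illustration: the dictionary layout, the `resolve` and `scrub` helpers, and the sample password are hypothetical and do not describe any existing Alteryx feature.

```python
import re

# Hypothetical credential store: alias -> {field: value}.
CREDENTIALS = {
    "Tableau Prod": {
        "Description": "Tableau Production Server",
        "UserID": "JPhillips",
        "Password": "s3cret",  # made-up value, for illustration only
    },
    "TeamFiles": {
        "Description": "Root directory for team files",
        "Path": r"\\server.net\myteam\filesgohere",
    },
}

# Matches references of the form [Alias].[Field].
REFERENCE = re.compile(r"\[([^\[\]]+)\]\.\[([^\[\]]+)\]")


def resolve(text, store):
    """Replace every [Alias].[Field] reference in text with its stored value."""
    def lookup(match):
        alias, field = match.group(1), match.group(2)
        if alias not in store:
            # This is where a user could be prompted to create the entry.
            raise KeyError(f"No credential entry named {alias!r}")
        if field not in store[alias]:
            raise KeyError(f"Entry {alias!r} has no field {field!r}")
        return store[alias][field]
    return REFERENCE.sub(lookup, text)


def scrub(store):
    """Return a copy of the store that is safe to share: passwords blanked."""
    return {
        alias: {k: ("" if k == "Password" else v) for k, v in fields.items()}
        for alias, fields in store.items()
    }
```

With this sketch, `resolve("[Tableau Prod].[UserID]", CREDENTIALS)` looks up the user ID, and a reference to a missing alias raises an error at the point where a real implementation could prompt the user instead.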

- Feature Request
- General
- Tool Improvement

I love this tool, but I think it would be improved by an option to create one column per delimiter found. This could be added to the number-of-columns selector box. Where one row has more delimiters than another, null columns can be created. Without this option you have to count the delimiters with a Regex, select the maximum, embed the Text to Columns tool in a macro, and then pass the maximum column count as a parameter. It would be nice to resolve all this in the main tool.
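The requested behaviour can be sketched in a few lines of Python. This is only an illustration of the logic (find the largest delimiter count, then pad shorter rows with nulls); the function name `split_to_columns` is hypothetical and `None` stands in for a null column.

```python
def split_to_columns(rows, delimiter=","):
    """Split each string into as many columns as the widest row needs."""
    # One more column than the largest delimiter count found in any row.
    width = max((row.count(delimiter) for row in rows), default=0) + 1
    result = []
    for row in rows:
        parts = row.split(delimiter)
        # Pad shorter rows with None, standing in for null columns.
        result.append(parts + [None] * (width - len(parts)))
    return result
```

Every row comes back with the same number of columns, so no separate counting pass or wrapper macro is needed.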

Thanks, nick

- Feature Request
- Tool Improvement

I personally think it would work better if Tab moved from 'Select Column' straight to 'Enter Expression Here' rather than to the 'Functions' list, since people who are tabbing probably want to start typing the formula immediately rather than going through functions, fields, etc.

- General
- Tool Improvement
- User Experience Design

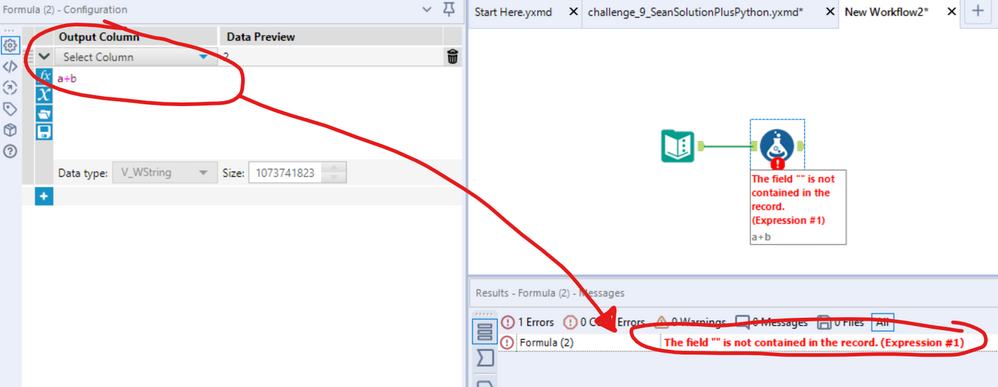

If you forget to put a name on a new column in the formula tool - the error message is

The field "" is not contained in the record. (Expression #1)

Please could you replace this with a user-friendly, self-descriptive message like:

"Please provide a name for the new column created in expression 1"?

- Tool Improvement

Please add the ability to globally, within a module, forget all missing fields.

- Tool Improvement

A common problem with the R tool is that it outputs "False Errors" like the following: "The R.exe exit code (4294967295) indicated an error"

I call this a false error because data passes out of the R script the same as if there were no error. As such, this error can generally be ignored. In my use case, however, my R tool is embedded within an iterative macro, and the error causes the iterator to stop running.

I was able to create a workaround by moving the R tool to a separate workflow and calling it from the CReW runner macro within my iterator, effectively suppressing the error message, but this solution is a bit clumsy, requires unnecessary read/writes, and uses nonstandard macros.

I propose the solution suggested by @mbarone (https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509) to only generate an error when the R return code is 1, indicating a true error, and to either ignore these false errors or pass them as warnings. This will allow R scripts and R-based tools to be embedded within iterative macros without breaking.
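The proposed rule could be sketched like this in Python. It is an illustration only: the function names and the "ok"/"warning"/"error" labels are hypothetical, not part of the R tool. One detail worth noting is that 4294967295 equals 2^32 - 1, which is -1 when read as a signed 32-bit integer.

```python
def to_signed_32(code):
    """Interpret an unsigned 32-bit exit code as a signed value."""
    return code - 2**32 if code >= 2**31 else code


def classify_exit_code(code):
    """Apply the proposed rule: only a return code of 1 is a true error."""
    if code == 0:
        return "ok"
    if code == 1:
        return "error"
    # Any other non-zero code is downgraded to a warning so that an
    # enclosing iterative macro would not be stopped by it.
    return "warning"
```

Under this rule the code from the message above would be reported as a warning rather than an error.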

- Category Macros
- Desktop Experience
- Tool Improvement

I propose another wildcard, %ErrorLog%, that would simply output the error codes and narratives instead of having to use the %OutputLog% to see these. I'd rather not have a 4 MB text email depicting every line of code and action in the module when all I really need to see are the errors.
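As a rough sketch of what an %ErrorLog% wildcard might contain, the Python below filters a full output log down to its error lines. It assumes log lines shaped like `00:00:23.555 - Error - ToolId 106: ...`; that layout, the `error_log` function name, and the sample messages are assumptions for illustration, not a documented format.

```python
import re

# Assumed line layout: "HH:MM:SS.mmm - Error - <message>".
ERROR_LINE = re.compile(r"^\d{2}:\d{2}:\d{2}\.\d{3} - Error - ")


def error_log(output_log):
    """Keep only the lines of the full output log that report an error."""
    return "\n".join(
        line for line in output_log.splitlines() if ERROR_LINE.match(line)
    )


sample = "\n".join([
    "00:00:01.002 - Info - ToolId 3: 120 records were read",
    "00:00:02.410 - Error - ToolId 7: Field not found",
    "00:00:02.977 - Warning - ToolId 9: Value was truncated",
])
print(error_log(sample))
```

Only the second sample line survives the filter, which is the kind of short email body the idea asks for.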

- General
- Tool Improvement

Hi Alteryx community,

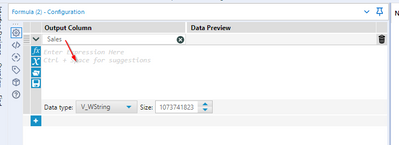

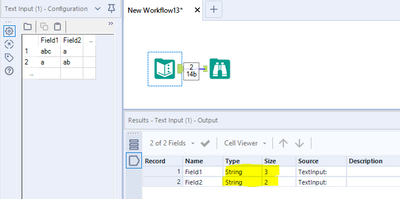

It would be really nice to have v_string/v_wstring with the maximum character size as the standard for text columns.

I have lost count of how many times an error turned out to be a string truncation caused by the string size limit from the text input.

Thumbs up if you lost your mind after discovering that this was the error! 😄

- Category Input Output
- Data Connectors
- Tool Improvement

This has probably been mentioned before, but in case it hasn't....

Right now, if the Dynamic Input tool skips a file (which it often does!), it just raises a warning and continues processing. While continuing is still useful, could an 'error if files are skipped' option be added to the tool?

As it stands, it is easy to miss that this is happening, and in production or on Server you may want the process to stop.

Thanks,

Andy

Please add support for xlsx files in the OneDrive input/output tool.

- Tool Improvement

Currently, when you add an event to notify you of workflow failure / success, you have to enter the SMTP settings every time. It would be more efficient to set this up once as a user setting that serves as the default across all canvases that this user creates.

- General
- Tool Improvement

To debug a call to a REST API, it is often necessary to take the web call and paste it into a web browser. Could you add a RestAPI tool (a derivative of the Download tool) with a second output that provides the full web call that was made, including the full parameterised URL? This would make it MUCH easier to debug REST API calls.

cc: @TashaA

Similar to this idea https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Download-tool-Request-and-Response-details/i...

except my preference would be to pull Rest API calls into a more specific tool and give a second output for the responses
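For illustration, this is roughly the value such a second output could carry: the base URL combined with its encoded query parameters. The sketch uses Python's standard `urllib.parse`; the function name `full_request_url` and the example endpoint are hypothetical.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit


def full_request_url(base_url, params):
    """Return the complete URL, with params encoded into the query string."""
    parts = urlsplit(base_url)
    query = urlencode(params)
    if parts.query:
        # Keep any query string already present on the base URL.
        query = f"{parts.query}&{query}" if query else parts.query
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, query, parts.fragment)
    )


# Hypothetical endpoint and parameters.
print(full_request_url("https://api.example.com/v1/items",
                       {"q": "red shoes", "page": 2}))
```

The printed string can be pasted straight into a browser, which is the debugging step described above.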

- Tool Improvement

Hi there,

We often get the following error message from the download tool

"00:00:23.555 - Error - ToolId 106: Error in libCURL: You have found a bug. Replicate, then let us know. We shall fix it soon."

Unfortunately this seems to be a transient error so we've not been able to replicate this in a useful & repeatable way. However - we see this happening at least a few times per week on one of our servers, so this is a continuing issue.

Please could you provide more detailed error messaging on the download tool so that this error can be debugged and/or replicated?

Many thanks

Sean

- Tool Improvement

It would be great if there was an output option for excel files where you could overwrite the data in the sheet, but keep the formatting in the sheet. Similar to how the Paste Values option works in Excel. This would allow me to create a template with data validation, conditional formatting, column widths, cell fill colors, etc and set a workflow to run on a schedule and just paste the data into the existing template.

To get around this right now, I have to output the data to a separate tab and then paste the columns as values over the existing template. This is fine unless I am out of the office and need to bother someone else to do it. There have been many times, beyond the report I am currently building, when I wished this was an option. I am honestly surprised I couldn't find an idea already submitted about this!

Thanks,

Wes

- Tool Improvement

Some of the workflows I use have multiple inputs that can take a long time to load initially. The new cache function has been amazing, but there is one big drawback for me: I can't cache multiple tools at the same time. Alteryx will eventually let me cache all of the tools I want cached, but only after running the workflow multiple times. This still saves me time in the end, but it feels a bit cumbersome to set up.

- Feature Request
- Tool Improvement

TIBCO Data Virtualization is a Data Virtualization product focused on creating a virtual data store consolidating data from throughout the enterprise. It can be accessed via a SQL query engine, and has a variety of supported connectors, including an ODBC driver.

This data source can be connected to via ODBC in Alteryx today, but error messaging is unclear/unhelpful, and attempting to use the Visual Query Builder causes Alteryx to crash.

Adding TIBCO Data Virtualization as a supported ODBC connection would empower business users to leverage this product and easily utilize this enterprise data store, enhancing the value of the Alteryx platform as a consumer of this data.

The Source field of the field metadata is very useful, but has some problems.

- It is repetitious. A long connection string repeated for many fields from the same source can bloat a workflow to more than 10 MB; with the Source values removed, the same workflow is around 0.5 MB.

- It exposes sensitive information about a company's infrastructure, such as server names, ports, user ids, and proprietary data structures.

I first started paying attention when we found a user's password in the metadata because they had passed it as a string to the Dynamic Input Tool (separate Idea submitted for that - LINK). Then when I had to share an App with the Alteryx Support team for support with an issue, I thought to check the metadata, and I noticed that the file was too big and was exposing information that I would not normally share with another company.

I'm not sure how you want to handle this, but here are some thoughts:

- Default the Source field to 'off' and provide users the option to turn it 'on' in the workflow/app settings.

- Provide a mechanism to strip the 'Source' field at time of saving or exporting the workflow.

- If nothing else, provide education to users on the implications of including this information in the file.
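The second option (stripping the Source information when saving or exporting) could look something like the Python sketch below. It assumes the metadata is stored as `Field` elements carrying a `source` attribute; that layout, the `strip_source` function name, and the sample XML are assumptions for illustration, so check a real workflow file before relying on it.

```python
import xml.etree.ElementTree as ET


def strip_source(workflow_xml):
    """Remove the assumed `source` attribute from every Field element.

    Returns the cleaned XML text and the number of attributes removed.
    """
    root = ET.fromstring(workflow_xml)
    removed = 0
    for field in root.iter("Field"):
        if field.attrib.pop("source", None) is not None:
            removed += 1
    return ET.tostring(root, encoding="unicode"), removed


# Made-up fragment standing in for saved field metadata.
sample = (
    '<MetaInfo><RecordInfo>'
    '<Field name="id" source="odbc:DSN=prod;UID=jdoe" type="Int32" />'
    '<Field name="total" type="Double" />'
    '</RecordInfo></MetaInfo>'
)
cleaned, count = strip_source(sample)
print(count)
```

Field names and types are left untouched; only the connection details are dropped.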

Thanks for listening!

Cameron

- Feature Request
- Tool Improvement

I recently learned that Alteryx doesn't support Denodo data sources. Our company uses Denodo as a data virtualization tool and Alteryx for data blending. The request is for Alteryx to start supporting Denodo as a data source so that our company can reach out to Alteryx for any support issues related to Denodo.

- Tool Improvement

At the moment if a part of your python code takes more than 30s to run, Jupyter times out and Alteryx cancels the workflow. This makes the Python Tool unusable for anything intensive and the timeout should be removed by default or be configurable per workflow.

I've made this idea as none of the solutions in these threads feel satisfactory:

- API SDK
- Category Developer
- Tool Improvement

I find the Run Command tool to be counter-intuitive: rather than supplying a required I/O parameter (in at least one of "Write Source" and/or "Read Results"), I would rather just use a "Block Until Done" approach to 1. write file, 2. issue custom system command, 3. read file. An even simpler example is the case where I don't need I/O to/from the system command... in that case, I just want to issue the command, nothing more. But the current tool will require me to specify a dummy file, which is counter-intuitive and also leaves that unnecessary file somewhere.

To fix this up without breaking existing user implementations, the "idea" is:

- Do not require either "Write Source" or "Read Result" ... allow both to be blank.

- Allow (but don't require) any of "Command," "Command Arguments," and "Working Directory" to be dynamically populated from fields in the data streamed into the tool.

So... any existing user implementation should be unaffected... but these changes would allow users to implement system commands in a more intuitive manner, and even allow for very dynamic system commands based on the workflow.
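The "write file, issue command, read file" sequence with both I/O steps optional can be sketched in Python as follows. This is an illustration of the requested behaviour, not of how the Run Command tool works internally; the `run_command` function and its parameter names are hypothetical.

```python
import subprocess
from pathlib import Path


def run_command(command, arguments=(), working_directory=None,
                write_source=None, read_results=None):
    """Run a system command, with optional file output before and input after.

    write_source: optional (path, text) pair written before the command runs.
    read_results: optional path whose contents are returned afterwards.
    """
    # 1. Write the input file, only if one was requested.
    if write_source is not None:
        path, text = write_source
        Path(path).write_text(text)

    # 2. Issue the command; subprocess.run blocks until it has finished,
    #    and check=True raises if the command reports a failure.
    subprocess.run([command, *arguments], cwd=working_directory, check=True)

    # 3. Read the results file, only if one was requested.
    if read_results is not None:
        return Path(read_results).read_text()
    return None
```

Calling it with neither `write_source` nor `read_results` simply issues the command, with no dummy file left behind. Because `command`, `arguments`, and `working_directory` are ordinary parameters, they could equally be filled from fields in the incoming data.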

Thanks!

- Tool Improvement
