Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


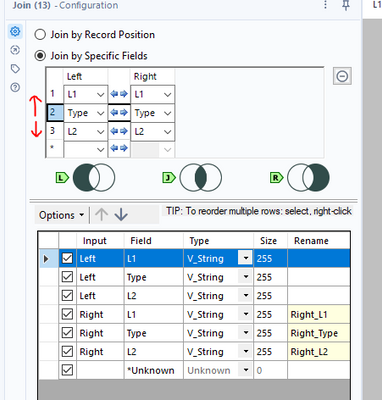

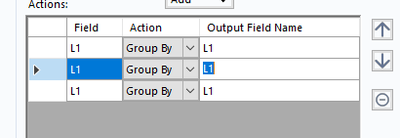

The order of the join fields affects the ordering of the output.

For more complex joins it would be nice to have up and down arrows much like the summarise tool:

- Enhancement

- New Request

- UX

The data view of any anchor is searchable. I want to search the metadata view please.

- Enhancement

- New Request

- UX

It would be really great to have a Dynamic Detour tool where you could specify the detour direction as an input to the tool rather than as an embedded control.

This would allow workflow branching.

Using a filter for this passes a dataset with no rows in it which causes dynamic in-db or dynamic input tools to error.

- API SDK

- Category Developer

- New Request

Hello,

This is a feature I haven't seen in any data preparation/ETL tool. The core feature is to detect the unique key in a dataframe. More often than not, you have to deal with a dataset without knowing what makes a row unique. This can lead to misinterpreting the data, Cartesian products at join time, and other funny stuff.

How do I imagine it?

A specific tool in the Data Investigation category.

Input: one dataframe, with the ability to select fields or check all, and the ability to specify a maximum number of fields per combination (empty or 0 = no maximum).

Algorithm: it compares the distinct count of every combination of fields against the row count.

Result: one row per field combination that works. If there is no result: "no field combination is unique. check for duplicate or need for aggregation upstream".

ex :

| order_id | line_id | amount | customer | site |
|---|---|---|---|---|
| 1 | 1 | 100 | A | U_250 |
| 1 | 2 | 12 | A | U_250 |
| 1 | 3 | 45 | A | U_250 |
| 2 | 1 | 75 | A | U_250 |
| 2 | 2 | 12 | A | U_250 |
| 3 | 1 | 15 | B | U_250 |
| 4 | 1 | 45 | B | U_251 |

The user will select every field except Amount (they know that Amount would make no sense in a key).

The algorithm will test the following keys:

- each separate field

- each combination of two fields

- each combination of three fields

- each combination of four fields

to see which ones match the number of rows (7).

It then gives something like this:

| choice | number of fields | field combination |
|---|---|---|
| very good | 2 | order_id, line_id |
| average | 3 | order_id, line_id, customer |
| average | 3 | order_id, line_id, site |
| bad | 4 | order_id, line_id, site, customer |
| … | … | … |
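The algorithm described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not how an Alteryx tool would be built: the function name `find_unique_keys`, the `max_fields` parameter, and the representation of rows as dictionaries are all hypothetical. It uses the sample data from the table above and a brute-force test of every field combination.

```python
from itertools import combinations

# Sample rows from the example above (amount is present but will not be tested).
ROWS = [
    {"order_id": 1, "line_id": 1, "amount": 100, "customer": "A", "site": "U_250"},
    {"order_id": 1, "line_id": 2, "amount": 12, "customer": "A", "site": "U_250"},
    {"order_id": 1, "line_id": 3, "amount": 45, "customer": "A", "site": "U_250"},
    {"order_id": 2, "line_id": 1, "amount": 75, "customer": "A", "site": "U_250"},
    {"order_id": 2, "line_id": 2, "amount": 12, "customer": "A", "site": "U_250"},
    {"order_id": 3, "line_id": 1, "amount": 15, "customer": "B", "site": "U_250"},
    {"order_id": 4, "line_id": 1, "amount": 45, "customer": "B", "site": "U_251"},
]


def find_unique_keys(rows, fields, max_fields=0):
    """Return every combination of `fields` whose distinct count equals the row count.

    max_fields=0 (or None) means "no maximum", as in the proposal.
    """
    total = len(rows)
    limit = min(max_fields, len(fields)) if max_fields else len(fields)
    keys = []
    for size in range(1, limit + 1):
        for combo in combinations(fields, size):
            # Count distinct value tuples for this combination of fields.
            distinct = {tuple(row[f] for f in combo) for row in rows}
            if len(distinct) == total:
                keys.append(combo)
    return keys


if __name__ == "__main__":
    selected = ["order_id", "line_id", "customer", "site"]
    for key in find_unique_keys(ROWS, selected):
        print(len(key), ", ".join(key))
```

Because combinations are tested from smallest to largest, the shortest keys come first, which matches the "very good / average / bad" ranking in the table. The number of combinations grows exponentially with the number of selected fields, so the maximum-fields setting matters on wide datasets.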

Best regards,

Simon

- Category Data Investigation

- Desktop Experience

- New Request

Whenever I overwrite an Excel sheet with data of the same format just different values (e.g. Q2 data versus Q1 data) all of my Pivot Tables break and I have to manually recreate them even though the schema didn't change. Somehow the Table is being deleted/removed and replaced with a completely different Table which is what causes the Pivot Tables to break. The only way to avoid this is to manually set the Cell Range, but who has time for that? The only solution I have found is to manually copy all values and paste them over the existing data which is very inefficient the more sheets you are working with.

- Category Input Output

- Data Connectors

- New Request

The basic premise is this:

Phantom spacing. Basically something that looks like it has spaces on Excel but is actually formatted as an indentation.

Unfortunately, to read the indentation we need either a VBA prep step or to read the XML inside the file, the latter of which is difficult.

As to VBA, the general steps are to create an indentation formula in order to see the numbers, then go from there. The idea is credited to @clmc9601 as we discussed privately.

As of now, I do not see any way to do this in Alteryx as a function or even an expression. It would be very helpful, especially when reading trial balances or even Bloomberg outputs, as they are formatted with indentation.

Reading indentation from Excel or any other file within Alteryx will be much appreciated, especially in actuarial and finance spaces.

- Category Preparation

- Desktop Experience

- New Request

It would be great if you could include a new Parse tool in the next version of Alteryx to process data set descriptions (metadata) formatted using the DCAT (W3C) standard.

DCAT is a standard for the description of data sets. It provides a comprehensive set of metadata that can be used to describe the content, structure, and lineage of a data set.

We believe that supporting DCAT in Alteryx would be a valuable addition to the product. It would allow us to:

- Improve the interoperability of our data sets with other systems (M2M)

- Make it easier to share and reuse our data sets

- Provide a more consistent way to describe our data sets

- Bring down the costs of describing and developing interfaces with other Government Entities

- Work on some parts of making our data Findable – Accessible – Interoperable – Reusable (FAIR)

We understand that implementing support for this standard requires some development effort (possibly done in stages, building from minimal viable support to full-blown support). However, we believe that the benefits to the Alteryx Community worldwide and to Alteryx as a top-quality data preparation tool outweigh the cost.

I also expect the effort to be manageable (perhaps a macro will do as a start) when you see the standard RDF syntax being used, which is similar to JSON.

DCAT, which stands for Data Catalog Vocabulary, is a W3C Recommendation for describing data catalogs in RDF. It provides a set of classes and properties for describing datasets, their distributions, and their relationships to other datasets and data catalogs. This allows data catalogs to be discovered and searched more easily, and it also makes it possible to integrate data catalogs with other Semantic Web applications.
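To give an idea of the kind of parsing involved, here is a minimal Python sketch. It assumes the DCAT description arrives as JSON-LD (the JSON serialization of RDF) in compacted form with `dcat:` and `dct:` prefixes. The sample catalog, its URLs, and the function name `distribution_rows` are hypothetical and for illustration only. A real implementation would need a proper RDF/JSON-LD library to handle other serializations and prefix mappings.

```python
import json

# Hypothetical sample catalog in compacted JSON-LD; titles and URLs are made up.
SAMPLE = """
{
  "@context": {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dct": "http://purl.org/dc/terms/"
  },
  "@type": "dcat:Catalog",
  "dct:title": "Example catalog",
  "dcat:dataset": [
    {
      "@type": "dcat:Dataset",
      "dct:title": "Orders",
      "dcat:distribution": [
        {"@type": "dcat:Distribution",
         "dcat:downloadURL": "https://example.org/orders.csv",
         "dcat:mediaType": "text/csv"},
        {"@type": "dcat:Distribution",
         "dcat:downloadURL": "https://example.org/orders.json",
         "dcat:mediaType": "application/json"}
      ]
    }
  ]
}
"""


def as_list(value):
    """JSON-LD allows a single object where a list is expected; normalise to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def distribution_rows(document):
    """Flatten a catalog into one row per (dataset, distribution) pair."""
    catalog = json.loads(document)
    rows = []
    for dataset in as_list(catalog.get("dcat:dataset")):
        for dist in as_list(dataset.get("dcat:distribution")):
            rows.append({
                "dataset": dataset.get("dct:title"),
                "download_url": dist.get("dcat:downloadURL"),
                "media_type": dist.get("dcat:mediaType"),
            })
    return rows


if __name__ == "__main__":
    for row in distribution_rows(SAMPLE):
        print(row)
```

The flat rows are the shape a workflow would typically want downstream, for example to feed the download URLs into a dynamic input.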

DCAT is designed to be flexible and extensible, so it can be used to describe a wide variety of data sets. It is also designed to be interoperable, so it can be used together with other vocabularies to create rich and interconnected descriptions of data and knowledge.

Here are some of the benefits of using DCAT:

- Improved discoverability: DCAT makes it easier to discover and use KOS, as they provide a standard way of describing their attributes.

- Increased interoperability: DCAT allows KOS to be integrated with other Semantic Web applications, making it possible to create more powerful and interoperable applications.

- Enhanced semantic richness: DCAT provides a way to add semantic richness to KOS , making it possible to describe them in a more detailed and nuanced way.

Here are some examples of how DCAT is being used:

- The DataCite metadata standard uses DCAT to describe data catalogs.

- The European Data Portal uses DCAT to discover and search for data sets.

- The Dutch Government made it a mandatory standard for all Dutch Government Agencies.

As the Semantic Web continues to grow, DCAT is likely to become even more widely used.

DCAT

- Reference Page: https://www.w3.org/TR/vocab-dcat/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/dcat-ap-donl

- Wikipedia on DCAT: https://en.wikipedia.org/wiki/Data_Catalog_Vocabulary

RDF

- Reference Page: https://www.w3.org/TR/REC-rdf-syntax/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/rdf

- Wikipedia on RDF: https://en.wikipedia.org/wiki/Resource_Description_Framework

- Category Parse

- New Request

Can we have an option to disable all tool containers at once? Similar to disable all browse tools or tools that write output.

- Engine

- New Request

Once I've built a workflow I often have to go through the process of removing and combining tools such as selects and formula tools which could be simplified to just one tool. It would be great to have an automated feature which could detect groups of tools which could be simplified and then automatically combine them into one step, improving/simplifying my workflow.

- New Request

- UX

Most people who have been around for more than one version change of Alteryx will be familiar with the standard dreaded error pop-up box:

"There was an error opening [workflow X]. This workflow was created by a more recent version of Alteryx..."

The pop-up box is generated as many times as there are assets potentially affected. You click once to acknowledge you're aware there is a problem with asset 1A, then you click again when the 1B pop-up appears, then you keep clicking until you reach W76. Or that's what the software expects you to do and seems to figure is the graceful way to handle potential problems associated with missing assets (it's far from certain there are even any problems with running the specific code referred to on the older version; this is a warning-level notification where stuff might not work which has been 'promoted' to a full-fledged error that you are requested to address at the asset level).

If you work somewhere where there is a large community of Alteryx users sharing assets widely with each other (all making use of large shared macro repositories) the software's choice of notifying you at the asset level is, not to mince words, completely insane. You could do everything right, have exactly the recommended version from the perspective of Alteryx sys-management, the one that corresponds to the corporate server version executing the scheduled workflows, and still be bombarded with 15 notifications at start-up if you're away for a few days and in the time you were away one or two new guys at the (very large) company decided to create a few new assets with the latest version of the software and share them with their colleagues (the latest version was not yet implemented server-side, so some of those tools might fail for those users - but the tools become everybody's problem the second they're stored in the shared location).

The notifications at startup make no distinction between relevant and irrelevant messages, you can start an empty new workflow and still get messages related to macros you don't care about, because they're located somewhere where Alteryx has been told to look for them even if they're not loaded/included in the workflow.

Every single asset Alteryx might in theory make use of during the session that is starting up will spark an individual message that cannot be ignored or skipped without acknowledging its existence, even if many of the assets will work just fine with an older version. This setup scales ...badly.

I can think of at least two solutions which would in many ways be preferable to the current structure. One would be to 'batch' the notifications prior to creating the pop-up box (one pop-up per start-up, not per asset). What might be included in such a pop-up could for instance be a grouped output with the Alteryx versions that did not match the active version ('workflows developed in version 'XX56' and 'XX57' were identified and these may fail to load', or whatever). Another option would be to have a setting in Designer where you tell Alteryx you don't want to see these notifications at start-up.

- New Request

- UX

It would be nice to have an option to distribute tools with the fixed default space of three in between each tool. Now it just distributes based on the available space, which can be inconsistent throughout the workflow.

- New Request

- UX

Adding "Lightning Bolt" connectors to the standard workflow tools to allow dynamic automation of the settings would be a game changer. I believe that this would enable us to create universally dynamic and adaptive workflows which could be used as drop-in solutions for most datasets. This would turn the standard tools into dynamic ones, dramatically reduce the tool count needed to accomplish dynamic tasks, and make complex workflows much easier to internalize. Making standard workflow tools more dynamic would allow us to easily and dynamically incorporate conditional tests / values / fieldname selections / bypass / etc. into tools like detour / filter / formula / unique / transpose / crosstab / summarize / Outputs / etc. I would also like to see the ability to utilize a bool field to bypass any given tool in a workflow. That way we could do things like conditionally bypass an entire formula tool, which would dramatically simplify complex formula construction, turn inputs / outputs on and off, simplify error avoidance, etc.

In order to build complex dynamic conditional workflows with the current tool capabilities, most of us are forced to use custom macros (often a multitude of workflow specific ones as well), constantly add and remove formula created fields for message relay, and create complex multi-routings / tests / unions in a standard workflow with large numbers of tools and containers. This hides many of our tasks within short-term use fields / custom macros and it makes the rest of our workflows voluminous and less intuitive.

On the User Interface side, I recommend a simple approach. Next to the standard tool setting there should be a dynamic input option which allows you to select the source field in the lightning bolt connector. Next to that, there should be an icon that can be clicked on to pop up a short text description and a basic screen shot of data in the correct format for dynamic input. I would also like to see a check box at the bottom for manual tool "bypass" which can also be dynamically controlled. (This would especially be helpful on outputs, but it would also be helpful to allow formulas and filters to be kept in place for future use even when they should not currently be used) Turned off tools could be highlighted in a red background or something.

This would be useful for anyone creating dynamic and adaptive workflows, but it would especially expand Alteryx Designer's capability to attract more custom software developers like me. It would dramatically reduce the need for a large number of complex workflow specific macros that clutter our systems. Users that find the traditional workflow tool approach easier for them could easily use the tools as normal by simply using the standard manual settings. Advanced users could simplify the creation of universally dynamic and self adaptive workflows.

- Category Macros

- Desktop Experience

- Enhancement

- New Request

The most difficult part about quickly sharing Community Questions and Solutions is constructing representative "Dummy" data values as static Text Inputs that can be packaged in a workflow. Most of us are almost exclusively working with sensitive client and company data that cannot be shared. It would be great to have a tool that converted values over to dummy values based on the type of data in that field. Kind of like a dynamic find and replace that randomizes values, replaces occurrences with similar dummy values, or scrambles string values in an indecipherable way.

The tool output could directly update a connected "Create Text Input" tool, or it could be connected to a browse tool that could quickly be converted to a Text Input.
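As a rough illustration of the "scramble by data type" behaviour described above, here is a minimal Python sketch. The function names `scramble_value` and `scramble_rows` and the sample row are hypothetical. It keeps the type and rough shape of each value (string length, letter case, digit positions, number of digits) but replaces the content with random characters. It is a sketch, not a vetted anonymization method: the same input value does not map to the same dummy value, so relationships between rows are not preserved.

```python
import random
import string


def scramble_value(value, rng):
    """Return a dummy value with the same type and rough shape as `value`."""
    if isinstance(value, bool):
        return rng.choice([True, False])
    if isinstance(value, int):
        # Keep the sign and the number of digits, randomise the digits.
        digits = len(str(abs(value)))
        low = 10 ** (digits - 1) if digits > 1 else 0
        dummy = rng.randint(low, 10 ** digits - 1)
        return -dummy if value < 0 else dummy
    if isinstance(value, float):
        # Keep a similar magnitude; the sign is not preserved in this sketch.
        return round(rng.uniform(0, 2 * abs(value) or 1.0), 2)
    if isinstance(value, str):
        out = []
        for ch in value:
            if ch.isdigit():
                out.append(rng.choice(string.digits))
            elif ch.isalpha():
                letters = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
                out.append(rng.choice(letters))
            else:
                out.append(ch)  # keep punctuation and spaces so formats stay recognisable
        return "".join(out)
    return value  # leave unknown types (None, dates, ...) untouched


def scramble_rows(rows, seed=None):
    """Scramble every value in a list of row dictionaries; a seed makes it repeatable."""
    rng = random.Random(seed)
    return [{field: scramble_value(v, rng) for field, v in row.items()} for row in rows]


if __name__ == "__main__":
    sample = [{"customer": "Acme-01", "amount": 1250, "rate": 0.35}]
    print(scramble_rows(sample, seed=1))
```

The scrambled rows could then be pasted into a Text Input tool to share a reproducible example without exposing the original values.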

- New Request

- UX

I would love a tool to be created for looking up a value in a table based on a condition. It could be called "Lookup." One input to the tool would be the lookup list, the other is the main database. Inside the tool you could enter functions that can query the lookup table and return the results either as an overwrite of an existing field in the main DB or as a new field in the main DB, similar to the options in the Multi-Row Formula tool.

Here is a link to my post in Community that explains the problem. The solution, in a nutshell, was to create a Join (which resulted in millions of additional rows), run the conditional formula, then filter to get rid of the millions of rows that were created by the Join so only those that met the condition remained (the original database rows).

Here is the text of my Community post describing my project (slightly modified for clarity):

Table 1: A list of Pay Dates (the lookup table)

Table 2: Daily timekeeper data with Week Start and Week End Date fields.

The goal: To find the Pay Date in Table 1 that is greater than the Week Start Date in Table 2 and no more than 13 days after the Week End Date in Table 2.

[Table 2: Week Start Date] < [Table 1: Pay Date]

and [Table 2: Week End Date] < [Table 1: Pay Date]

and DateTimeDiff([Table 1: Pay Date], [Table 2: Week End Date], 'Days') <= 13

There are many different flows I could use this type of tool for that would save time and simplify the flow.

Thanks!

- Category Join

- New Request

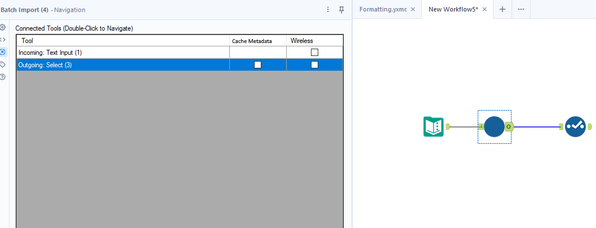

In short:

Add an option to cache the metadata for a particular tool so that it isn't forgotten when using tools that have dynamic metadata (such as batch macros) or tools whose metadata the Alteryx metadata engine can't resolve (such as the Python tool).

Longer explanation:

The Problem:

One of the issues I often encounter when making dynamic workflows or ones that require calling external services is that Alteryx often forgets the metadata of what columns to expect. This causes the workflow to forget configuration of downstream tools when a workflow is first opened or when the metadata engine refreshes. There is currently the option to disable the metadata engine from automatically refreshing but this isn't a good option because you miss out on much of the value it brings.

Some of the common tools where I encounter this issue:

- Json parse

- Batch macros

- Python tool

- Regex parsing to rows

Solution:

Instead, could we add an option to cache the metadata for a particular tool? This would save the metadata from the last time the workflow ran within the workflow's XML so that it persists when the workflow is closed and reopened. Then, when the metadata engine reaches this tool, it would use the saved version in the XML instead of resolving the metadata from the tool. Obviously, an actual run would ignore the cached metadata, and any errors would still occur.

This could be an option in the navigation pane of each tool. Mockup below:

This would make developing dynamic workflows far easier and resolve issues of configuration being lost when the metadata changes and Alteryx forgets the options.

- Engine

- New Request

Hi there,

When you connect to a DB using a connection string or an alias, this shows up in the Workflow Dependencies in a way that is very useful for identifying impacts if a DB is moved or migrated.

However, in 2023.1, if you use DCM then the database dependencies just show up as .\ which makes dependency management much more difficult.

Please could you add the capability to view the DCM dependencies correctly in the dependency window?

BTW - this Workflow Dependencies window would be a great place to build a simple process to move existing DB connections to a DCM connection!

CC: @wesley-siu @_PavelP

- Category Connectors

- Enhancement

- New Request

- Scheduler

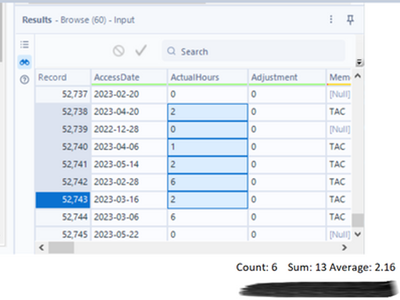

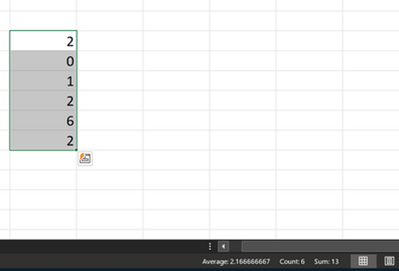

Alteryx should seriously consider incorporating certain Excel features into its Browse tool, as they greatly enhance usability and functionality.

Currently, when selecting specific records in the Browse tool, users are unable to obtain important metrics such as sum, average, or count without resorting to additional steps, such as adding a Summarize tool or filters.

A concise bar below the results window that shows these statistics for the selected records would be immensely beneficial to users and would take the Browse tool to the next level.

By implementing this enhancement, Alteryx would make a significant impact and establish the Browse tool as a must-have resource.

- Enhancement

- New Request

- UX

Hello All,

I am not sure whether my idea makes sense or not.

In today's advanced analytics world, we use RPA for various kinds of automation, process simplification, etc. There are co-bots that are designed to run Alteryx workflows as well. Through RPA we are able to log in to systems and tools like Cognos, Oracle, TM1, and so on.

So I am wondering whether Alteryx could develop RPA as a tool in Alteryx Designer, alongside other tools such as Join, Transform, ML, Computer Vision, Interface, etc.

I believe the implementation of RPA in Alteryx would prove an asset and make Alteryx more powerful.

Thanks,

Mayank

- Enhancement

- New Request

- Scheduler

For very complex canvases and API data pulls that take a long time, it would be great if, as we work through the canvas, we could set flags or some setting that would allow us to keep data already pulled into a tool. This way I can set a certain tool to keep all of its data, and then all tools I work on from that point forward will pull from that tool rather than from the beginning of the canvas.

For example:

input tool --> api tool --> formatting tools --> new tools being worked on

If I can set the end of the formatting tools to keep all data, then when I run the canvas only the new tools being worked on would get refreshed.

I hope that's clear... Currently it's very frustrating that for any small change I make, I have to rerun the whole canvas, and that takes a while.

- New Request

- UX

Please add in a feature to connect to S3 via AWS IAM roles.

- New Request

- UX
