Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I think the undo/redo capabilities in Alteryx could be greatly improved. Here is an idea that I think would be beneficial...

I'd like to see which exact tools are affected by my undo/redo actions. An idea was suggested a couple years ago to move your location on the canvas, but that was not added to the roadmap. Instead, is it possible to add the tool ID to the undo menu so that it is obvious which tool each line is detailing?

This is the current debug menu that shows your previous actions:

When a tool is created, the ID can be displayed in this menu, but this is not shown when a change is made to an existing tool. My suggestion is that the menu would say:

4. Change Sort (3) Properties

This same change should be made in the Edit dropdown menu.

- Enhancement

- UX

To make it easier to find workflow logs and analyze them, we would suggest some changes:

- Instead of a log name like "alteryx_log_1634921961_1.log", the log name should be the queue_id, for example: "6164518183170000540ac1c5.log"

This would make it easier to find the job logs.

To facilitate reading the log we would suggest the following changes:

- Add the timestamp

- Add error level

For an example of the current and suggested log, please consult the attached document.

In the suggested format the log would be [TIMESTAMP] [ERRORLEVEL] [ELAPSEDTIME] [MESSAGE]
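As a rough illustration of the suggested naming and line format, here is a minimal Python sketch. The function names, the ISO 8601 timestamp, and the seconds-based elapsed time are assumptions made for the example; the post only specifies the order of the four bracketed fields and the use of the queue_id as the file name.

```python
from datetime import datetime, timezone


def log_file_name(queue_id: str) -> str:
    """Name the log after the job's queue_id, as the post suggests."""
    return f"{queue_id}.log"


def format_log_line(timestamp: datetime, level: str,
                    elapsed_seconds: float, message: str) -> str:
    """Render one line as [TIMESTAMP] [ERRORLEVEL] [ELAPSEDTIME] [MESSAGE].

    The ISO 8601 timestamp and the "1.500s" elapsed format are assumptions.
    """
    return (f"[{timestamp.isoformat()}] [{level.upper()}] "
            f"[{elapsed_seconds:.3f}s] [{message}]")


file_name = log_file_name("6164518183170000540ac1c5")
line = format_log_line(
    datetime(2021, 10, 22, 16, 59, 21, tzinfo=timezone.utc),
    "error",
    1.5,
    "Tool #3: file not found",  # hypothetical message text
)
print(file_name)
print(line)
```

With a fixed field order like this, logs can be filtered by error level or sorted by timestamp with ordinary text tools.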

- Desktop Experience

- Enhancement

- User Settings

The interactive results pane is great, but wouldn't it be cool if you could interact directly with the results pane to do things like filtering?

The current method has a few too many steps: you need to either copy the value or type it into the filter pop-up. A simple right-click and "filter on selected value" would be a big UX improvement.

- Enhancement

- UX

With the new keyboard shortcuts in 2021.1, I would love to see this same functionality added to the global search. I would like:

1) The global search bar to be accessible via a keyboard shortcut

2) To navigate through the results with the arrow keys, and

3) To press Enter on a tool and have it added to the canvas, just like the tool palette now functions in 21.1.

cc: @A11yKyle

- Enhancement

- UX

Hello,

In cases where more than one field is being used in a join, the "Join (Tool ID) String fields can only be joined to other string fields" error message could be improved by indicating which field has a mismatch.

For example, if I'm joining Fields A, B, C, D... to fields Z, Y, X, W... in Join tool 24, and for some reason Field Z gets changed from String to Double, it'd be nice to see a message like:

"Join (24) (Field 1) String Fields can only be joined to other String fields"

or

"Join (24) String Fields can only be joined to other String fields (A)"

So that I know I need to go to a select tool and change the type of either A or Z.

Otherwise I look at the Join tool output and try to figure out which pair no longer has matching types, which can take a minute when dealing with a multiple-point join.
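To make the request concrete, here is a small Python sketch of how the mismatched pair could be located and named in the message. It is not how the Join tool is implemented; the function names and the exact message wording are hypothetical, and only the four Alteryx string type names are taken from the product.

```python
# Alteryx string field types; every other type is treated as non-string.
STRING_TYPES = {"String", "WString", "V_String", "V_WString"}


def find_type_mismatches(left_types, right_types, join_pairs):
    """Return (position, left_field, right_field) for each join pair
    where one side is a string type and the other is not."""
    mismatches = []
    for position, (left, right) in enumerate(join_pairs, start=1):
        left_is_string = left_types[left] in STRING_TYPES
        right_is_string = right_types[right] in STRING_TYPES
        if left_is_string != right_is_string:
            mismatches.append((position, left, right))
    return mismatches


def describe(tool_id, mismatches):
    """Build one message per mismatched pair (hypothetical wording)."""
    return [
        f"Join ({tool_id}) (Field {position}) String fields can only be "
        f"joined to other String fields ({left} / {right})"
        for position, left, right in mismatches
    ]


left_types = {"A": "V_String", "B": "V_String"}
right_types = {"Z": "Double", "Y": "V_String"}  # Z was changed to Double
pairs = [("A", "Z"), ("B", "Y")]

mismatches = find_type_mismatches(left_types, right_types, pairs)
for message in describe(24, mismatches):
    print(message)
```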

Thank you!

- Category Join

- Desktop Experience

- Enhancement

The Dynamic Input tool fails when attempting to input a set of Excel files with the following error:

Error: Dynamic Input (1): The file "Test2.xlsx|||<List of Sheet Names>" has a different schema than the 1st file in the set.

Each spreadsheet contains two tabs and all tabs contain the same columns.

The root cause of the schema error is that the maximum sheet name length in the two spreadsheets is different. The first spreadsheet uses "East" and "West" for sheet names. The second spreadsheet uses "North" and "South" for sheet names. The Dynamic Input tool uses the longest sheet name when defining the effective schema.

Excel limits sheet name length to 31 characters. It would be helpful if the Dynamic Input tool used 31 as the minimum string length when defining a schema from Excel sheet names.

The Input Data tool exhibits similar behavior when using a wildcard in the filename and the "Import only the list of sheet names" option.

A batch macro can be used as a workaround.
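The behaviour described above can be modelled in a few lines of Python. This is only a sketch of the sizing rule as the post describes it, not of Alteryx internals; the function names are hypothetical.

```python
EXCEL_MAX_SHEET_NAME_LENGTH = 31  # Excel's limit on sheet name length


def observed_field_size(sheet_names):
    """Field size as described in the post: the longest sheet name."""
    return max(len(name) for name in sheet_names)


def proposed_field_size(sheet_names):
    """Suggested rule: never size the field below Excel's 31-character limit."""
    return max(EXCEL_MAX_SHEET_NAME_LENGTH, observed_field_size(sheet_names))


first_file = ["East", "West"]
second_file = ["North", "South"]

# Today: sizes 4 and 5 differ, so the two schemas do not match.
print(observed_field_size(first_file), observed_field_size(second_file))

# Proposed: both files yield 31, so the schemas agree.
print(proposed_field_size(first_file), proposed_field_size(second_file))
```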

- API SDK

- Category Developer

- Enhancement

In 24.1 the email tool was adjusted such that any error in the workflow prevents the email tool from sending any emails. Previously, if AMP was enabled, the email tool could still send emails even if the workflow contained an error, but this is no longer the case. There are many cases where this is not ideal, one example being:

In larger workflows, I have multiple data streams which, after a point, operate independently. Even if one stream errors, I would like emails to be sent from the other streams. I have tried nesting the email tool within multiple layers of macros, but if any of the parent or child workflows/macros contain an error, the email tool will not send any emails.

I would like a checkbox option in the email tool or workflow configuration that will still allow emails to be sent if the workflow errors. Then, with the use of control containers, I will have full control over email distribution with errors.

- Category Input Output

- Data Connectors

- Enhancement

Hello,

Here is the proposal about an issue that I face frequently at work.

Problem Statement -

Workflows that are scheduled or run manually on Server frequently fail because the Excel input file is open: another user has it open, someone forgot to close it before leaving the office, or some other reason.

Proposed Solution -

The Input Data and Dynamic Input tools should be able to read Excel files even when they are open, so that workflows do not fail. This would save a lot of time and avoid the need to regularly monitor scheduled workflows.

- Category Input Output

- Data Connectors

- Enhancement

Hello all,

As you all know, you can use API with the Alteryx Download tool. However, this tool is not that easy to configure.

On the other hand, the API world makes heavy use of tools such as Postman or Bruno (an open source clone), which make it easy to test, debug... I use one every time I have to work on a REST API, and then I try to translate the request to the final tool (such as the Alteryx Download tool). Both tools offer "collections" (sets of requests) and also environment configuration. Here are some examples from the project I'm working on:

And you can even get some code.

I would like to leverage those collections in my Download tool configuration; that would be much easier to use!

Best regards,

Simon

- Category Connectors

- Data Connectors

- Enhancement

We have lots of tools that create new column(s) from the Inputs, e.g., Generate Rows. It'd be very nice if the new column(s) is/are highlighted in the Output. This makes it a lot easier for users when developing the workflow.

- Enhancement

- UX

Please consider implementing a consistent case-sensitive option for all tools and functions.

To compare string values, including case-sensitivity: This post had a good description of the challenge, but the post has been archived:

For all the time I've used Alteryx, I thought that IF "test" = "TEST" would evaluate to false. Today I realised that isn't the case and I was surprised. I'm very surprised that "equals" performs like it does.

A few existing Ideas request case-sensitivity for individual tools:

Case insensitive option while joining two data sets

https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/Case-insensitive-option-while-joinin...

Unique tool enhancement - deal with case sensitive data

https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/Unique-tool-enhancement-deal-with-ca...

This new Idea requests system-wide consideration for case-sensitivity, for all tools and functions.

Current state:

These tools and functions are case-sensitive:

- Tool: Join

- Tool: Tile

- Function: FindString

- Functions: MD5_ASCII, MD5_UNICODE, MD5_UTF8

These tools and functions are NOT case-sensitive:

- Tool: Unique

- Function: CompareDictionary

These tools and functions can be either case-sensitive or NOT case-sensitive, depending on the options used:

- Function: Contains

- Function: EndsWith

- Function: StartsWith

- Functions: REGEX_Match, REGEX_Replace, REGEX_CountMatches

Current Challenges:

How do we easily identify Lower Case, Upper Case, Mixed Case?

How do we easily compare strings for equality, using case sensitivity?

Request:

Ensure all tools and functions include an option to ignore or consider Case

Create new functions for IsUpperCase, IsLowerCase, IsMixedCase

Create a new function for IsEqual, with an option to ignore or consider Case

See attached workflow, which

- uses REGEX_Match to create 3 new fields: IsUpperCase, IsLowerCase, IsMixedCase

- creates a field [Flag: Original value IsEqual, case-sensitive], to compare strings for equality, using case sensitivity
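The attached workflow is not reproduced here, but the requested functions can be sketched in Python to show the intended semantics. The names mirror the request (IsUpperCase, IsLowerCase, IsMixedCase, IsEqual); the ASCII-only patterns and the treatment of digits and punctuation as case-neutral are assumptions made for this example.

```python
import re


def is_upper_case(value: str) -> bool:
    """At least one A-Z letter and no a-z letters."""
    return re.fullmatch(r"[^a-z]*[A-Z][^a-z]*", value) is not None


def is_lower_case(value: str) -> bool:
    """At least one a-z letter and no A-Z letters."""
    return re.fullmatch(r"[^A-Z]*[a-z][^A-Z]*", value) is not None


def is_mixed_case(value: str) -> bool:
    """Contains both an upper-case and a lower-case letter."""
    return (re.search(r"[A-Z]", value) is not None
            and re.search(r"[a-z]", value) is not None)


def is_equal(left: str, right: str, ignore_case: bool = False) -> bool:
    """Equality with an explicit option to ignore or consider case."""
    if ignore_case:
        return left.casefold() == right.casefold()
    return left == right


print(is_upper_case("TEST 1"), is_lower_case("test"), is_mixed_case("Test"))
print(is_equal("test", "TEST"), is_equal("test", "TEST", ignore_case=True))
```

Making the case option an explicit parameter, rather than a per-tool default, is the point of the request: the caller always states which comparison is meant.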

- Category Preparation

- Enhancement

Hello All,

I'm using the Dynamic Input tool for SQL requests in my workflow (WF).

I'm using "Replace a Specific String" to replace elements in the SQL statement dynamically, depending on the results of previous tools, user input, etc.

So the statement looks like

select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'

Since Schema_Name_xx is not a valid schema in the database, the statement (= validation) won't work. It only works if I replace Schema_Name_xx with e.g. Invoice_Data_Current; the same goes for the invoice number, where invoice_number_xx is replaced with e.g. 4711.

Therefore, validation makes no sense and will never work. Only when the WF is running is the correct schema inserted into the SQL statement by the "Replace a Specific String" function.

It would be great to be able to disable validation in the user settings or elsewhere in Designer; changing a config file would also be fine :-)

Please note: I'm not thinking (since I'm not allowed anyway ;-)) about changing/disabling anything in the Alteryx Server settings.
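A short Python sketch shows why the template cannot be validated on its own: it only becomes a real statement once the placeholders are substituted at run time. The function name is hypothetical, and plain string replacement is used because that is what "Replace a Specific String" is described as doing here.

```python
def replace_placeholders(template: str, replacements: dict) -> str:
    """Substitute each placeholder string with its run-time value."""
    sql = template
    for placeholder, value in replacements.items():
        sql = sql.replace(placeholder, value)
    return sql


template = ("select * from Schema_Name_xx "
            "where invoice_number = 'invoice_number_xx'")

# Values that are only known while the workflow is running.
runtime_values = {
    "Schema_Name_xx": "Invoice_Data_Current",
    "invoice_number_xx": "4711",
}

final_sql = replace_placeholders(template, runtime_values)
print(final_sql)
```

Validating `template` against the database fails because Schema_Name_xx does not exist; only `final_sql` is meaningful to the server.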

Reason:

1. Speed: Validating a WF with SQL statements that don't work takes time (every time I save it); sometimes I even get a timeout...

2. WF error entries: Each upload with a failed validation creates an entry in the WF result list, which makes it harder to separate them from the "real" WF errors...

Thanks & Best Regards,

Thomas

- Enhancement

- User Settings

When I import an Excel file into Alteryx I get an error: “shared strings root=x:sst” and Alteryx cannot read the file.

I can work around this by manually opening and saving the Excel file before importing it into Alteryx, but this is not ideal, especially considering the automation implications.

I believe this may be happening because the XLSX generated by the source of the report has a prefix “x:” in all the tags in the Shared String XML embedded in Excel. See: https://learn.microsoft.com/en-us/office/open-xml/working-with-the-shared-string-table

Essentially, it would appear Alteryx is not able to read generated Excel files that have the prefix "x:" (e.g. from a bot). The second file, which has been opened and saved in Excel manually, can be read by Alteryx correctly.

Example of file as exported from ”BOT”:

How the same file looks once it is manually opened and saved:

Ideally Alteryx would read the file as is, i.e. with the "X:SST" tag seen above, as having to manually open and save the Excel file before it can be read is rather clunky.
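If the prefix is indeed the cause, the two files are equivalent XML: "x:" is just a namespace prefix bound to the same SpreadsheetML namespace that Excel writes as the default namespace. The Python sketch below uses the standard library's namespace-aware parser to read both forms identically. The two XML snippets are simplified, hypothetical stand-ins for the sharedStrings.xml part, not the actual files from the post.

```python
import xml.etree.ElementTree as ET

SPREADSHEETML_NS = "http://schemas.openxmlformats.org/spreadsheetml/2006/main"


def read_shared_strings(xml_text: str) -> list:
    """Return the text of each <si> entry, regardless of namespace prefix."""
    root = ET.fromstring(xml_text)
    strings = []
    for item in root.findall("m:si", {"m": SPREADSHEETML_NS}):
        # An entry may hold several <t> runs; join them into one string.
        runs = item.iter(f"{{{SPREADSHEETML_NS}}}t")
        strings.append("".join(run.text or "" for run in runs))
    return strings


# As exported by the bot: every tag carries the "x:" prefix.
prefixed = (
    f'<x:sst xmlns:x="{SPREADSHEETML_NS}">'
    "<x:si><x:t>East</x:t></x:si><x:si><x:t>West</x:t></x:si>"
    "</x:sst>"
)

# After a manual open and save in Excel: default namespace, no prefix.
unprefixed = (
    f'<sst xmlns="{SPREADSHEETML_NS}">'
    "<si><t>East</t></si><si><t>West</t></si>"
    "</sst>"
)

print(read_shared_strings(prefixed))
print(read_shared_strings(unprefixed))
```

A reader that matches on the literal tag name "sst" instead of the namespace-qualified name would accept only the second form, which is consistent with the error reported.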

Thanks!

- Enhancement

- Scheduler

Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads - JDBC is very common in large enterprises - and in many cases is better supported by the technology teams / developer community and so is much easier to make a connection. Added to this - there are many databases (e.g. DB2) where JDBC connections are just much easier

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP

- Category Connectors

- Enhancement

- New Request

- Scheduler

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However, DSN-linked connections do not work in a large server environment, because this would require administrators to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe because part of the configuration of your canvas is held in the DSN, and so you cannot just rely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully-declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string - they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
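The manual step in the note can be sketched in Python. This is an illustration of the idea, not an Alteryx feature: it assumes the file DSN is an INI-style text file with an [ODBC] section and a DRIVER key, and the function name, sample attributes, and server name are hypothetical.

```python
import configparser


def file_dsn_to_connection_string(file_dsn_text: str, credentials: dict) -> str:
    """Expand the attributes of a file DSN into a DSN-less connection string."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep attribute names exactly as written
    parser.read_string(file_dsn_text)

    attributes = dict(parser["ODBC"])
    driver = attributes.pop("DRIVER")

    parts = [f"DRIVER={{{driver}}}"]
    parts += [f"{key}={value}" for key, value in credentials.items()]
    parts += [f"{key}={value}" for key, value in attributes.items()]
    return "odbc:" + ";".join(parts)


# Hypothetical file DSN contents, as they might appear in Notepad.
sample_file_dsn = """\
[ODBC]
DRIVER=SnowflakeDSIIDriver
SERVER=example.snowflakecomputing.com
DATABASE=NewTestDB
SCHEMA=PUBLIC
"""

connection_string = file_dsn_to_connection_string(
    sample_file_dsn,
    {"UID": "Username", "PWD": "__EncPwd1__"},
)
print(connection_string)
```

Because every attribute ends up in the string itself, the resulting connection can be kept under version control with the canvas and needs no DSN on the worker nodes.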

Thanks all

Sean

- Admin Settings

- Category Developer

- Enhancement

- User Settings

Apologies if this has been suggested already - did a search and didn't see anything similar.

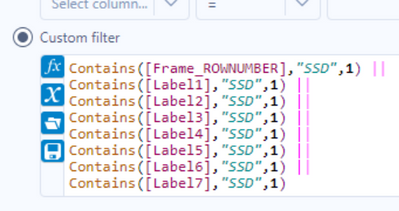

This is a quality of life/UX idea. The search functionality in the results pane essentially does a 'contains' search on all of the columns (see the screenshots below for the filter inserted by the 'apply data manipulations' button). As I build workflows and profile the data, it'd be helpful if I could click one or more columns and limit the search bar to just those fields.

Right now, depending on the dataset, I could get rows returned by the search because the search term appears in columns that aren't relevant. To work around this I could add Select tools to limit the columns or build more robust filters in a Filter tool, but having it built in would be very helpful.
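The difference between the current and requested behaviour can be shown with a small Python sketch over rows held as dictionaries. The function name and sample data are hypothetical, and the case-insensitive match is an assumption; the post only says the search behaves like a 'contains' across all columns.

```python
def search_rows(rows, term, columns=None):
    """Return rows where `term` appears in any of the given columns.

    With columns=None every column is searched (the current behaviour);
    passing a list limits the search to those fields (the request).
    """
    needle = term.lower()
    matches = []
    for row in rows:
        fields = columns if columns is not None else row.keys()
        if any(needle in str(row[field]).lower() for field in fields):
            matches.append(row)
    return matches


rows = [
    {"Name": "East", "Region": "North"},
    {"Name": "North", "Region": "West"},
]

all_columns = search_rows(rows, "north")            # matches both rows
name_only = search_rows(rows, "north", ["Name"])    # matches the second row only
print(len(all_columns), len(name_only))
```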

- Enhancement

- UX

Currently, you have two choices for Auto Configure while working on workflows:

- Auto Configure switched on: After every change, the configurations (= columns) of tools are re-evaluated for the entire workflow (at least, this is how it feels).

- Auto Configure switched off: Configuration of tools is only re-evaluated when pressing F5 (or when using the clipboard).

Pros and Cons of both:

- Auto Configure switched on:

  - Configuration in each tool is always accurate, so working on tools is straightforward.

  - Editing workflows gets annoyingly slow for complex workflows, especially when data sources from network locations or macros are used. Sometimes I have to wait a minute between two mouse clicks.

- Auto Configure switched off:

  - Editing workflows is faster (at least in theory).

  - I have to press F5 all the time (because I nearly always change the output configuration of tools when working on workflows). Even after pressing F5, Alteryx does not always succeed in calculating the correct configuration of a tool.

  - Working with the clipboard and loading or saving workflows is still slow.

I would love to have something in between, a kind of intelligent Auto Configure with the following features:

- F5 still starts full configuration evaluation.

- Configuration of input tools is frozen (unless F5 pressed) so that no network access is started during editing the workflow.

- Check for update of macro files is switched off (unless F5 pressed).

- After changing a tool configuration, either a flag is set that this tool was changed but no re-assessment of the workflow configuration is run (approach 1), or only downstream configuration is updated (approach 2). Whether approach 1 or 2 is started could be decided on various criteria: Number of downstream tools (or other measure of complexity), how many "change flags" according to approach 1 are already set, etc.

- If approach 1 was chosen: If you edit a tool which is downstream of another one for which the change flag is set, re-evaluate only the portion of the workflow between the previously changed upstream tool and the tool about to be edited.

- Using Clipboard should not invoke full re-configuration.

- Before saving a file, full re-configuration needs to be run (as already now).

This idea will add quite some complexity into the logic of Auto Configure but should have quite some potential to speed up editing workflows because network access and number of re-evaluated tools in each editing step will be reduced.
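The choice between the two approaches can be sketched in Python by treating the workflow as a directed graph of tool IDs. Everything here is hypothetical: the function names, the dictionary representation of connections, and the threshold of 50 downstream tools are illustrative choices, not Alteryx behaviour.

```python
from collections import deque


def downstream_tools(connections, changed_tool):
    """Breadth-first list of every tool reachable from the changed tool."""
    ordered = []
    visited = set()
    queue = deque(connections.get(changed_tool, []))
    while queue:
        tool = queue.popleft()
        if tool in visited:
            continue
        visited.add(tool)
        ordered.append(tool)
        queue.extend(connections.get(tool, []))
    return ordered


def plan_reconfiguration(connections, changed_tool, max_downstream=50):
    """Pick approach 1 (flag only) or approach 2 (update downstream only)."""
    affected = downstream_tools(connections, changed_tool)
    if len(affected) > max_downstream:
        return "flag_only", []            # approach 1: defer the work
    return "update_downstream", affected  # approach 2: re-evaluate now


# Tool IDs and their outgoing connections.
connections = {1: [2], 2: [3, 4], 3: [5], 4: [5], 5: []}

print(downstream_tools(connections, 2))
print(plan_reconfiguration(connections, 2))
print(plan_reconfiguration(connections, 2, max_downstream=2))
```

In both cases tool 1, which sits upstream of the change, is never touched, which is where the saving over a full re-evaluation would come from.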

- Enhancement

- UX

CI / CD is critical to any production-level process, especially when multiple authors are contributing new features to the same workflow. Currently, multi-author editing of workflows is extremely difficult, and it would be aided greatly by using git to control different branches of ongoing work. Luckily, that's something we can already do today! However, the ability to test before merging a pull request is critical to modern CI / CD pipelines. For this, we need to be able to run a headless workflow from a CI / CD environment. Also, having the ability to pass in parameters to the workflow would allow for robust integration testing - something that isn't straightforward today without running on production environments.

- Engine

- Enhancement

Often I need to add filters or other tools early in a workflow after it has already been mostly built. If a tool connects to only one other tool, I can drag the filter over the connecting line and add the filter seamlessly. However, in large workflows there is often this situation:

The Filter will only connect to one of the lines I'm hovering over. It would be awesome if I could connect to all lines simultaneously and drop in the tool to achieve this:

- Enhancement

- New Request

- UX

In workflow Constants, it would be really useful to be able to populate a new field associated with each user created constant.

E.g. Type, Name, Value, "Description"

The description could be left blank but also populated by workflow designers to attach commentary / business logic to the constant.

E.g. Type = User, Name = MyUserConstant, Value = 0.25, Description = "This describes the weighting factor used in Product Calculations"

- Engine

- Enhancement
