Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


It would be oh so nice to be able to copy a container's properties and paste those formatting options onto other containers. It could be accomplished through a Paint Brush icon, or through Ctrl+C to copy and a right-click to paste the format. Either way, it would save setting the Color (multi-step select), Margin, and Transparency each time.

Cheers,

Mark

- Enhancement

- New Request

- UX

Hi all,

When debugging an error, we need to verify tool by tool in a sequence to better understand what is really going on.

Sometimes the tools are miles away from each other. Imagine a gigantic workflow with a lot of connections going back and forth and wireless connections everywhere to help the workflow organization. Here is an example with more than 1300 tools:

My idea is to have a shortcut showing all the previous/next tools and by selecting the previous/next one you go directly to them.

Something like this:

What do you guys think about that?

Best,

Fernando Vizcaino
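To make the previous/next idea above a bit more concrete, here is a minimal sketch in Python. It is not how Designer stores connections; the `build_neighbors` helper and the tool IDs are hypothetical, and a workflow is assumed to be reducible to a list of (source tool, target tool) pairs, wireless connections included.

```python
from collections import defaultdict


def build_neighbors(connections):
    """Map each tool ID to its directly upstream and downstream tool IDs."""
    upstream, downstream = defaultdict(list), defaultdict(list)
    for source, target in connections:
        downstream[source].append(target)
        upstream[target].append(source)
    return upstream, downstream


# Hypothetical connections as (source tool ID, target tool ID) pairs.
connections = [(1, 2), (2, 3), (2, 1300), (7, 1300)]
upstream, downstream = build_neighbors(connections)

previous_tools = upstream[1300]  # candidates for "jump to previous tool"
next_tools = downstream[2]       # candidates for "jump to next tool"
```

A shortcut could then list `previous_tools` or `next_tools` for the selected tool and centre the canvas on whichever one the user picks.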

- Enhancement

- UX

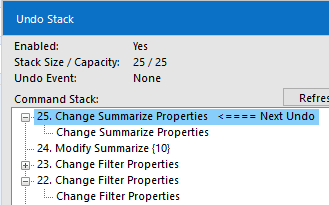

The Edit menu allows you to see what your next undo/redo actions are. This is super helpful; however, sometimes I decide to scrap an idea I was starting on and need to perform multiple undos in a row. It would be great if we could see a list of actions, like in the debug undo/redo stack menu, and then select how many steps we'd like to undo/redo.

For example, using the below actions, if I want to undo the Change Summarize Properties and also the Modify Summarize, currently I have to do that in two steps. I'd like to be able to click the Modify Summarize and have the workflow undo all commands up to and including that one.
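The behaviour described above can be sketched roughly as follows. This is only an illustration in Python, not Designer's undo implementation: the `UndoStack` class and the "Add Summarize" action are hypothetical, and actions are assumed to be plain labels.

```python
from dataclasses import dataclass, field


@dataclass
class UndoStack:
    """Hypothetical undo history; the most recent action is last in `done`."""
    done: list = field(default_factory=list)
    undone: list = field(default_factory=list)

    def record(self, action):
        self.done.append(action)
        self.undone.clear()  # a new action invalidates the redo history

    def undo_through(self, index):
        """Undo every action from the newest back to done[index], inclusive."""
        if not 0 <= index < len(self.done):
            raise IndexError("no such action in the undo history")
        reverted = []
        while len(self.done) > index:
            action = self.done.pop()
            self.undone.append(action)
            reverted.append(action)
        return reverted


stack = UndoStack()
for name in ["Add Summarize", "Modify Summarize", "Change Summarize Properties"]:
    stack.record(name)

# Clicking "Modify Summarize" (index 1) reverts it and everything after it.
reverted = stack.undo_through(1)
```

Keeping the reverted actions in `undone` means a matching multi-step redo could be offered in the same way.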

- Enhancement

- UX

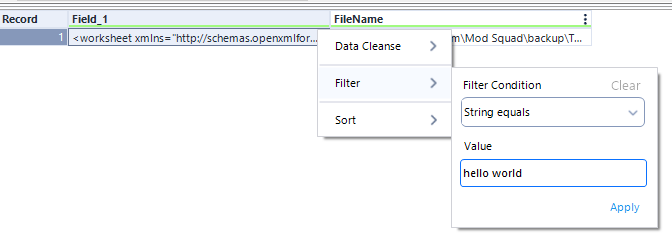

After I type something into the filter box, I should be able to hit Enter and have it apply my change (i.e. Enter triggers the Apply button). It used to work this way, but it has not worked since 2021.2. This feels like a very tiny move in the wrong direction. Currently Enter does nothing. It looks like if I hit Tab twice and then Enter, it finds the Apply button. I shouldn't have to hit Tab twice.

- Enhancement

- UX

Today, there is a checkbox to "Disable All Tools that Write Output" within the Runtime settings for a workflow. Setting this option requires at least 3 clicks:

- Click on the canvas

- Click the "Runtime" tab in the Configuration pane

- Click the checkbox

Could a keyboard shortcut be added for this? I've spoken to several users who leverage this feature and, while it is already a time saver, it seems helpful enough that a keyboard shortcut is warranted.

- Enhancement

- UX

Note: This idea doesn't strictly fit into any given category as it involves enabling support for something that affects numerous aspects of Alteryx's already existing spatial features.

I live in Australia. As do a large number of your users. Like me, many of those users use Alteryx to process spatial data. There is only one problem; we live on a roving continent. Every year our continent shifts ever so slightly but over time that shift becomes significant. For this reason we have our own continental system of spatial coordinate projections. It's called the Geocentric Datum of Australia or GDA.

Since 2000, the official Australian geodetic datum has been GDA94. However, according to the Intergovernmental Committee on Surveying and Mapping (ICSM), because the coordinates of features on our maps, such as roads, buildings and property boundaries (and so on), are all based on GDA94, they do not change over time. This is why they have since adopted a new datum: GDA2020. This has now become the standard for mapping in Australia, bringing Australia’s national coordinates into line with global satellite positioning systems.

A more detailed explanation of this can be found on the ICSM's website: What is changing and why? | Intergovernmental Committee on Surveying and Mapping (icsm.gov.au).

Of course Alteryx supports the more global WGS84 standard, which like GDA94 is a fixed datum. But there is up to a 1.8 metre discrepancy between GDA94 (and WGS84) and GDA2020. For spatial analysis projects that don't require metre accuracy that's not a problem. But imagine you are building a bridge, plotting the lanes of a road or programming a GPS enabled tractor. That 1.8 metre discrepancy between the real world coordinates and the projection is enough to cause problems.

And it is. Which is why we request that Alteryx include support for GDA2020 in its existing selection of spatial projections.

This would mean that spatial datasets configured in GDA2020 no longer need to be converted, a step that risks corruption or error. It includes providing the ability to configure GDA2020 as the spatial projection in the Input tool and all spatial tools.

Doing so would go a long way to supporting your ever growing Australian user base and maintaining Alteryx's position as a trusted software for processing spatial data.

- Enhancement

- UX

I will start off with a story. I have built a process to manage batch API requests. It's an iterative process that checks on the progress of an export by calling an API and reading the returned status. It runs and waits, over and over, until the export is ready to be downloaded. Sometimes, however, the jobs don't finish and the status comes back as something like "failed" or "cancelled". When this happens, my process (a small batch macro) raises an error using the nifty Error Message tool. After some time I noticed that it was a PAIN to go back and figure out which of my requests had failed, so I decided to add some messaging around where the failure occurred to make auditing easy. So I went back into my tool and, much to my chagrin, found that I cannot pass variables into the message section. I would expect it to work something like this:

"Record "+[#2]+" is not 'A'"

Can we please get this changed? It would save a lot of time and energy if we could create a dynamic error message.

TL;DR Please allow us to use formulas in the "If expression is true, display error message:" settings area.
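For illustration only, the kind of per-record message being asked for could look like the Python sketch below. It is not Alteryx formula syntax; the `error_messages` helper, the record layout and the field names are all hypothetical.

```python
def error_messages(records, is_error, build_message):
    """Return one message per record for which `is_error` is true."""
    return [build_message(record) for record in records if is_error(record)]


# Hypothetical export-status records returned by the polling loop.
records = [
    {"request_id": "1", "status": "complete"},
    {"request_id": "2", "status": "failed"},
    {"request_id": "3", "status": "cancelled"},
]

messages = error_messages(
    records,
    is_error=lambda r: r["status"] in {"failed", "cancelled"},
    build_message=lambda r: "Record " + r["request_id"] + " has status '" + r["status"] + "'",
)
```

With messages built from field values like this, the failed requests can be identified directly from the log instead of being traced by hand.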

- Category Interface

- Desktop Experience

- Enhancement

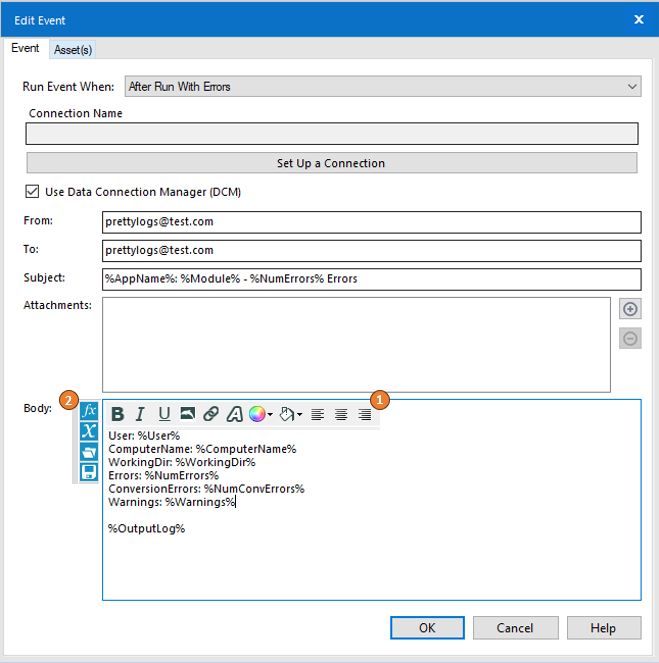

Everyone knows the importance of adding the appropriate controls and governance to your workflows - and often, this means including events that will generate notifications if a workflow is running with errors.

But who is the audience of that email? If it's not a developer, will that person know what they are reading and where to focus?

How about a developer that would like to customize the message that the end user will receive?

Porting some existing functionality from other tools in the Alteryx toolkit to the Events page could easily provide added flexibility to event generation:

1) Add a formatting bar to the tool, as shown in the image below

-- Style changes

-- Alignment

-- Highlighting

-- Coloring

-- Images

2) Add a function bar to the tool, as shown in the image below

-- Ability to view all available variables

-- Ability to apply formulas using variables

-- Ability to save formulas

What do you think? Give this post a thumbs up if you find the post helpful!

- Enhancement

- UX

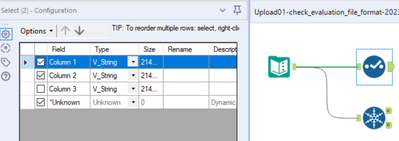

In some cases, the information about a tool's incoming columns is (temporarily) forgotten, e.g. if Autoconfig is switched off, if the incoming connection is temporarily missing, or if column names are generated dynamically and the workflow has not yet been executed.

Many tools deal with that situation well, e.g. Select, Formula, or Summarize. In these cases, the tools tell the user that they cannot find the incoming columns, but they preserve the configuration, so the user can still (at least partially) work on these tools and important configuration information is not lost:

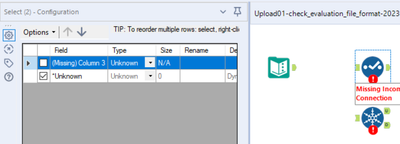

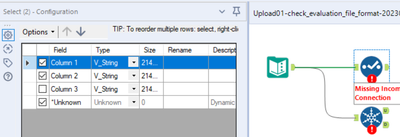

Example Select Tool

- First step: Connections present, configuration typed in:

- Second step: Connection cut, configuration opened. The configuration looks broken but implicitly contains all settings:

- Third step: Connection re-connected. The configuration is as before:

Other tools behave the opposite way, for example Unique or Macro Input (and surely many other tools). If the incoming columns are currently unknown to Designer and you click once on the tool, its entire configuration is lost. You might try to get the configuration back by pressing undo, but in most cases this does not work. Or, even worse, you only find out what happened later, when it's too late for undo. In that case, you either need an old version of the workflow to look up the configuration or you have to re-develop it. Either way, this is unnecessary and time-consuming software behaviour.

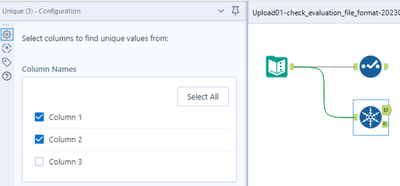

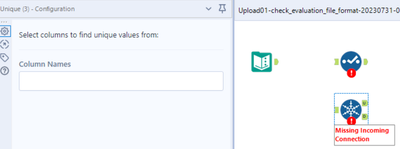

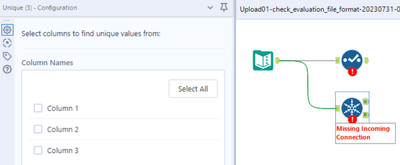

Example Unique Tool

- Step 1: Connections present, configuration typed in:

- Step 2: Connection cut, configuration opened. The configuration is empty:

- Step 3: Connection re-connected: The entire configuration is permanently lost:

I wasn't sure whether I should report this as a bug or a feature enhancement. It is somehow in between. Two aspects tell me that this should be changed:

- Inconsistent behaviour of different tools for no reason,

- Easy loss of programming work, resulting in time-consuming bug fixing.

Please make sure that all tools preserve their configuration also if information on incoming columns is temporarily lost.
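The requested behaviour can be expressed as a small rule: validate the configuration against the incoming columns when they are known, but never discard it. The Python sketch below only illustrates that rule; `check_configuration` and the field names are hypothetical and say nothing about how Designer tools are actually implemented.

```python
def check_configuration(configured_fields, incoming_fields):
    """Return (configuration, missing) without ever dropping configured fields.

    `incoming_fields` is None when the upstream metadata is unknown, for
    example because the connection was cut or the workflow has not run yet.
    """
    if incoming_fields is None:
        # Nothing can be validated, so nothing is flagged and nothing is lost.
        return list(configured_fields), []
    known = set(incoming_fields)
    missing = [name for name in configured_fields if name not in known]
    return list(configured_fields), missing


# Connection cut: the configuration survives untouched.
kept, missing = check_configuration(["CustomerID", "Region"], None)

# Connection restored with one column gone: flag it, but keep the setting.
kept_after, missing_after = check_configuration(["CustomerID", "Region"], ["CustomerID"])
```

Flagging missing fields rather than deleting them matches what the post describes for the Select tool.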

- Enhancement

- UX

Hello,

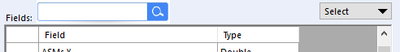

It would be very helpful to have a search box for field names in the Summarize tool. I think it would reduce errors caused by mistakenly selecting fields with similar names, and it would save a couple of seconds when looking for a specific field, particularly in datasets with lots of fields.

Like this:

- Category Transform

- Desktop Experience

- Enhancement

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow more people to understand the many use cases made possible by image recognition.

Thank you again,

Kévin VANCAPPEL (France ;-))
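Two of the points above (grayscale input and balanced training data) can be worked around outside the tool in the meantime. The following is a minimal Python sketch under stated assumptions: images are plain nested lists of gray values, samples are (item, label) pairs, and both helper names are hypothetical rather than part of any Alteryx tool.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Turn a 2-D grid of gray values into a grid of (R, G, B) triples."""
    return [[(value, value, value) for value in row] for row in image]


def balance_by_label(samples, seed=0):
    """Downsample so every label keeps as many items as the rarest label."""
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    keep = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    return {label: rng.sample(items, keep) for label, items in by_label.items()}


rgb = gray_to_rgb([[0, 255]])
balanced = balance_by_label([("a.png", "0"), ("b.png", "0"), ("c.png", "1")])
```

Repeating the gray value across three channels adds no information, but it produces the RGB shape that the tool reportedly requires.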

- Enhancement

- Machine Learning

The idea behind encrypting or locking a workflow is good for users to maintain the workflow as designed.

However, when a user reaches a level of maturity equivalent to that of the builder or more, or even when changes are required - the current practice is to keep a locked and unlocked version of the workflow so that it allows for a change in the future.

It would be much simpler if we can have the power to lock and unlock workflows with a password. Users can then maintain and keep the passwords so that they can continue with the workflow.

Not everybody is on Server yet so this feature is very helpful for control before Server migration. Otherwise it’s just password protecting a folder containing the workflow package, then re-locking a new save file each time a change is made or when someone new takes over on prem.

- Desktop Experience

- Enhancement

- User Settings

- UX

Hello,

This is one thing that my OCD cannot cope with.

Some tools, like the Union tool, allow you to 'Ignore warnings', like when fields are missing.

Some other tools however don't give the option. Date time tool for instance. Sometimes I feel like yelling at Alteryx that "I know that field already exists! I want to change it!". Or the join tool, when you join on a double.

I know that these warnings don't really affect anything, and they may be useful to highlight something that may be best to be changed, but pleeeeaaassee give us a tick box or something like the union tool where we can ignore warnings. It makes my workflow messy.

(I'm on designer v 2021.1 btw, so if this has already been done, then please ignore my rant. 😁 )

Thanks

Edit: What I'm talking about

- Enhancement

- UX

Need a way to highlight lines whether that means right-clicking and selecting a color or what-not, but just having the lines become black & BOLD doesn't cut it. It's not easy on the eyes. If I could click this line/connector and make it bright green that would be ideal and then I can see where it connects better when zooming out.

- Enhancement

- UX

Currently, when a Unique tool is used and one of its fields is removed upstream, the workflow fails. If you have only one or two Unique tools, it is no big deal, but in a very complex workflow you have to click into each of those tools to update it. This can be very problematic, and it takes a lot of time to follow all the branches that are connected after the first Unique tool. My suggestion is to make this a warning instead of a failure, or to offer an option to choose between failing and warning, as the Union tool does. This way people can decide how they want the tool to react when fields are removed.
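As a rough sketch of the suggested option (not the Unique tool's real behaviour), the Python below deduplicates rows on a set of key fields and lets the caller choose whether a missing key field raises an error or only a warning. The `unique_rows` function, its `on_missing` parameter and the sample data are hypothetical.

```python
import warnings


def unique_rows(rows, key_fields, on_missing="error"):
    """Keep the first row per key; `on_missing` is "error" or "warn"."""
    present = set().union(*(row.keys() for row in rows)) if rows else set()
    missing = [name for name in key_fields if name not in present]
    if missing:
        message = "Unique: field(s) not found upstream: " + ", ".join(missing)
        if on_missing == "error":
            raise KeyError(message)
        warnings.warn(message)
    # Deduplicate on whichever key fields still exist.
    usable = [name for name in key_fields if name not in missing]
    seen, result = set(), []
    for row in rows:
        key = tuple(row.get(name) for name in usable)
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


rows = [
    {"id": 1, "city": "Paris"},
    {"id": 1, "city": "Lyon"},
    {"id": 2, "city": "Paris"},
]
# "region" was removed upstream; with on_missing="warn" the run continues.
deduplicated = unique_rows(rows, ["id", "region"], on_missing="warn")
```

One design question this leaves open is what to do when every key field is missing; in this sketch all rows then share the same empty key, so only the first row is kept.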

- Category Preparation

- Desktop Experience

- Enhancement

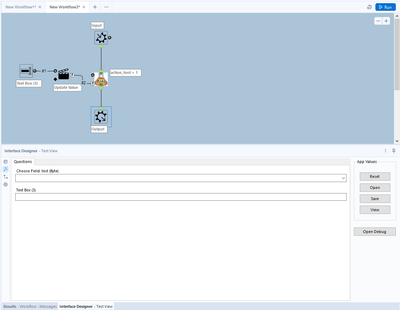

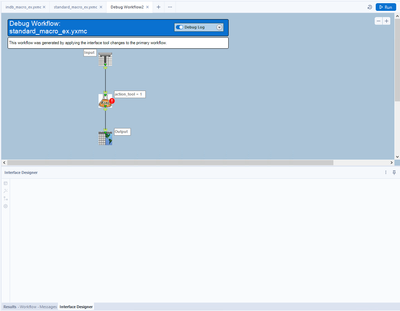

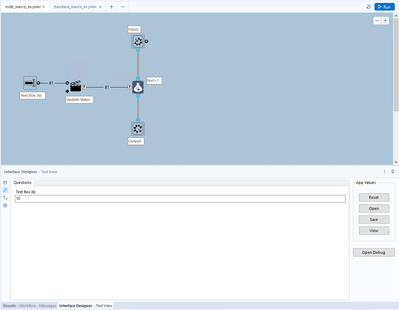

When making any type of macro, it's important to test the functionality of the macro via a debug. This is accomplished successfully with normal tools, however there's a bug that will not allow the user to debug In-DB macros that use either of the following standard Alteryx tools:

- Macro Input In-DB

- Macro Output In-DB

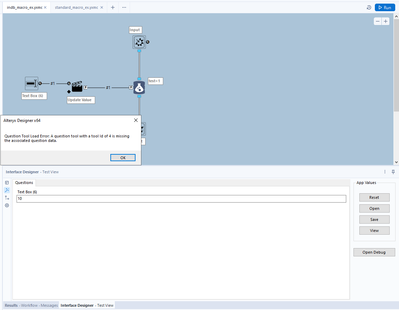

If either of these tools is included in the macro you are building, an error message will appear and prevent you from opening a debug workflow.

Error message: Question Tool Load Error: A question tool with a tool id of XXX is missing the associated question data.

Of course, Macro input and output tools do not require any specific action/question tool associated with it. This is a bug. A user pointed out the XML issue almost 3 years ago here:

In summary: "It appears that the tool itself inserts a hidden Question attribute into the XML which can also be seen in Workflow Configuration"

Source:

Examples....

A normal macro, using standard tools:

After debugging a standard macro, the Macro Input/Output tools correctly change to a Text Input and a Browse tool. This allows the macro author to test the macro.

However, when trying the same thing with In-DB tools in a macro, an error message appears:

In-DB macro 1:

In-DB Macro error message (after clicking "Open Debug"):

- Category In Database

- Enhancement

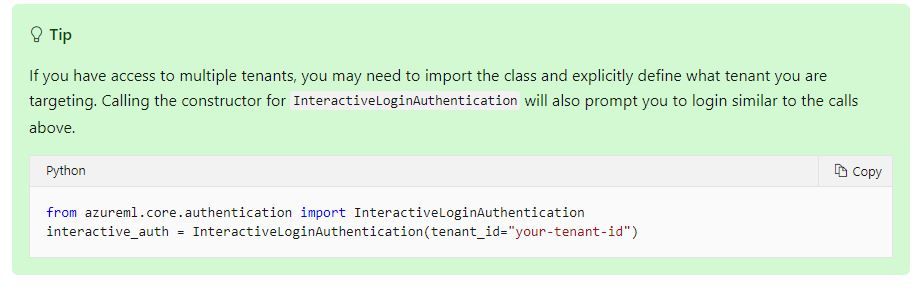

Introducing: The Azure Machine Learning Training and Scoring Tools

We tried to use this tool but can't log in to Azure ML correctly. We have several tenant IDs, and the tool logs in to a different tenant (the one for Office 365) rather than the one for Azure ML.

====================== <Error Message> ==========================================================

Message: You are currently logged-in to 55f0a...-.............................................. tenant. You don't have access to d846a...-............................................. subscription, please check if it is in this tenant. All the subscriptions that you have access to in this tenant are =

[SubscriptionInfo(subscription_name='Microsoft Azure Enterprise', subscription_id='754c5...-...........................')].

Please refer to aka.ms/aml-notebook-auth for different authentication mechanisms in azureml-sdk.

InnerException None

ErrorResponse

=======================================================================================================

Microsoft states that the tenant needs to be specified if we have access to multiple tenants:

Set up authentication for Azure Machine Learning resources and workflows

Could you add Tenant ID into Azure credentials so that we can use this tool?

- Category Connectors

- Data Connectors

- Enhancement

At the moment, at least for Postgres and ODBC connections, the DCM only supports a named DSN that must be installed on each machine running Designer or Server. However, the ODBC admin function is admin-only within my company, which makes DCM more trouble than it is worth to use.

Connection strings work well in the workflows, have been implemented on the gallery before, and do not require access to the ODBC admin to implement. Could DCM please be improved to support native connection strings?
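For readers unfamiliar with the distinction, a DSN-less connection string carries the driver name and server details itself, so nothing has to be registered in the ODBC administrator (the driver still has to be installed). The Python sketch below only assembles such a string; the `build_connection_string` helper, the server values and the assumption that the driver is registered as "PostgreSQL Unicode" are illustrative, and it does not escape values that contain semicolons.

```python
def build_connection_string(driver, **settings):
    """Assemble a DSN-less ODBC connection string from key/value pairs."""
    parts = ["Driver={" + driver + "}"]
    parts += [f"{key}={value}" for key, value in settings.items()]
    return ";".join(parts) + ";"


conn_str = build_connection_string(
    "PostgreSQL Unicode",    # assumed driver name as registered on the machine
    Server="db.example.com",  # hypothetical host
    Port=5432,
    Database="analytics",     # hypothetical database
)
```

Credentials are deliberately left out here; in the DCM scenario they would be supplied by the stored credential rather than embedded in the string.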

- Enhancement

- UX

We aren't getting a huge amount of help from support on this, so I'm posting this idea to raise awareness for the product teams responsible for the Salesforce connectors and the embedded Python environment.

This post from user Dubya describes the issue in detail:

I have a workflow with several salesforce tools in it, which works fine on my machine. But we need another alteryx user in our office to be able to access, run and maintain the workflow too, via their machine and copy of alteryx designer.

However we're finding that the salesforce inputs and outputs can only be authenticated on one machine at a time.

When the other new user opens the original workflow from the shared network location, the salesforce tools display an error "Salesforce Input (1): {'error': 'invalid_grant', 'error_description': 'authentication failure'}" and the tools fail to load any data. But we can see the full query in the tool, and we can even set the custom query option and validate the query successfully, which suggests the source is being correctly connected to and queried; we just can't run the tool.

The only way to run the tool successfully is to change the credentials and re-authenticate the tool. However, this then de-authenticates the original machine, and when we open the workflow there and try to run it, the workflow brings back the same error.

We've both tried this authentication back and forth on our own machines and each time one of us re-authenticates, it de-authenticates the other, leading to it triggering the error.

Can someone help explain what's going on and how to fix it, as this doesn't bode well for our collaboration.

We're both running:

The latest build of Designer 2021.2 (original machine also running Desktop Automation)

Salesforce Input Tool v4.1.0

Salesforce Output Tool v1.3.0

My response here identifies that this is a problem for our organization as well:

We're experiencing the same issue. It appears to be related to how the tool handles password and security token decryption. I've found that when you modify the related registry entry from "true" to "false", you can see in the tool's xml that the encrypted password and security token are still in there. I'm not sure what else is going on behind the scenes beyond that, but that ought to be addressable by the product teams handling the Salesforce connectors and the Python installation embedded in Designer.

The only differences in our environment compared to u/Dubya's are that we're running on 2020.4 and attempting to use Salesforce Input Tool v4.2.4.

This is a must have for anyone who needs the ability to share workflows among multiple users. This is part of a series of problems that these updated connectors have been plagued with since introducing them years ago, and no one at Alteryx seems to care enough to truly fix the problems. Salesforce is a core system for our organization, so having tools that utilize the latest version of Salesforce's APIs is very important to us. The additional features that the Input tool provides are welcome, but these bugs have to be sorted out in order for us to extract any kind of value out of them. If the "deprecated" Salesforce tools were ever to be removed from Designer while there are issues with the "new" connectors, we would have no choice other than to never upgrade Designer/Server again and be forced to look for another product to serve as our ETL platform.

Please, please, please address this.

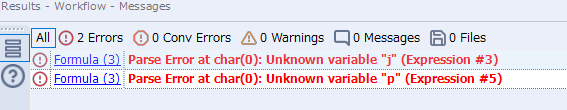

When I see the following error message, I want to jump to expression #3 of Formula (3). Currently I can jump to Formula (3), but only expression #1 is opened, not #3. If I have 30 expressions, it is hard to find #20 in 30s.

- Enhancement

- UX
