Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?
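To make the second and third suggestions concrete, here is a minimal sketch in plain Python. It is not how the Image Recognition tool works internally; `balance_by_label` and `gray_to_rgb` are hypothetical helper names, and downsampling to the smallest class is just one assumed way of getting an equal number of images per label.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of items.

    `samples` is a list of (item, label) pairs. Downsampling to the
    smallest class is an assumption made for this illustration.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    # The smallest class sets the quota for every label.
    quota = min(len(items) for items in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, quota):
            balanced.append((item, label))
    return balanced


def gray_to_rgb(image):
    """Copy one grayscale channel into three identical RGB channels.

    `image` is a list of rows of integer pixel values (0-255).
    """
    return [[(v, v, v) for v in row] for row in image]


# Made-up file names, three "cat" images against one "dog" image.
samples = [("a.png", "cat"), ("b.png", "cat"), ("c.png", "cat"), ("d.png", "dog")]
balanced = balance_by_label(samples)
rgb = gray_to_rgb([[0, 255], [128, 64]])
```

With the sample data above, `balanced` keeps one image per label, and each grayscale pixel becomes a triple of equal values, which is the usual way to feed MNIST-style images to a model that expects RGB.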

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi,

I was told this feature is working as intended/designed, so I decided to post an idea to see if there was interest in the feature I'm looking for.

In debugging a workflow that another Alteryx user at my company was developing, we realized that an in-db connection was inadvertently pointing to the wrong environment. Because of how in-db tools output messages, there wasn’t anything visual pointing to this as an issue without manually inspecting the connection in “Manage In-DB Connections”. Since I’ve encountered some challenges with In-DB connections before, I suggested using the Dynamic Output In-DB tool, which I’ve used before for the “Input Connection String” option.

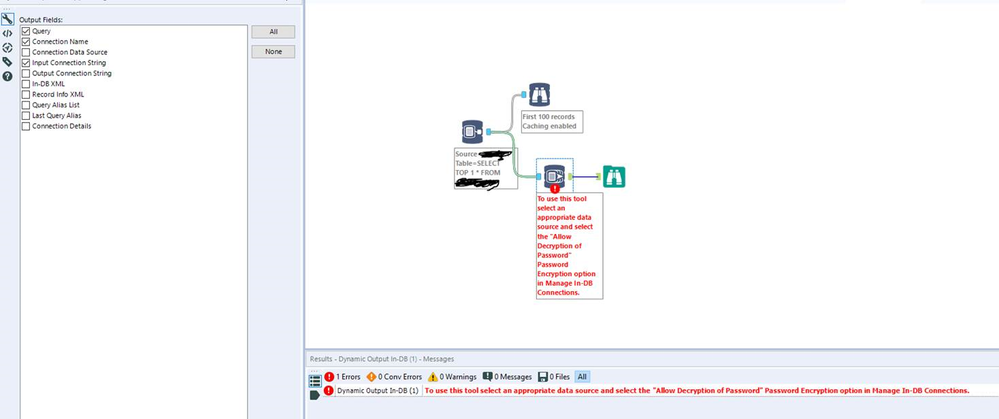

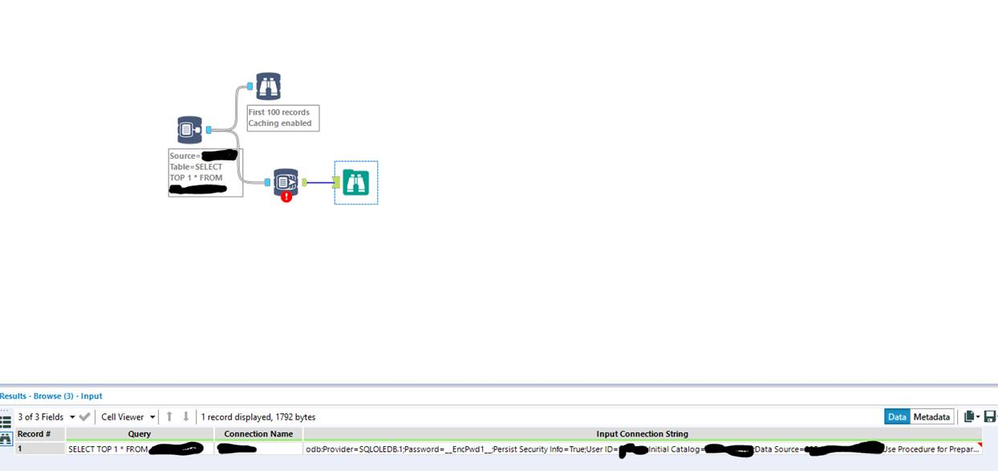

What I had never done before, and didn’t realize until I tested this, was use this with a connection that leverages an embedded SQL Server account username/password. When using this type of connection, an error is thrown that says: “Dynamic Output In-DB (1) To use this tool select an appropriate data source and select the "Allow Decryption of Password" Password Encryption option in Manage In-DB Connections.” However, the tool still works and appropriately pulls the connection as it exists.

For security reasons, we don’t want decrypted passwords floating around in this information, and we can’t enable “allow decryption of password” in our server environment.

So, my request would be to either add logging to In-Database tools to pass the connection string information (similar to regular input tools), or to add a method for outputting a connection string without a decrypted password that doesn't cause an error in Alteryx.
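As a rough illustration of the second option (a connection string that can be logged without exposing the password), here is a small Python sketch. It is not an Alteryx API: `redact_connection_string`, the list of secret keys, and the sample connection string are all assumptions made for the example.

```python
import re

# Keys whose values should never appear in logs (assumed list).
_SECRET_KEYS = ("pwd", "password")

_SECRET_PATTERN = re.compile(
    r"(?i)\b(" + "|".join(_SECRET_KEYS) + r")\s*=\s*([^;]*)"
)


def redact_connection_string(conn_str, mask="***"):
    """Return conn_str with the value of every secret key replaced by mask.

    Assumes simple key=value pairs separated by semicolons; a password
    that itself contains a semicolon is not handled by this sketch.
    """
    return _SECRET_PATTERN.sub(lambda m: f"{m.group(1)}={mask}", conn_str)


# Made-up connection string, for illustration only.
example = "odbc:DRIVER={SQL Server};SERVER=dev-db;DATABASE=sales;UID=svc_user;PWD=s3cret;"
redacted = redact_connection_string(example)
```

The server and database names stay visible in `redacted`, which is exactly what is needed to spot a connection pointing at the wrong environment, while the password value is masked.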

Screenshots below for reference.

- Category In Database
- Data Connectors
- Feature Request
- Tool Improvement

It would be good to expose the column metadata (data types) of Hive tables when viewed using the "Select In-DB" tool, similar to the standard Select tool.

As a consumer of Hive tables from Alteryx via the In-DB tools, it adds value to understand the semantics (at least the field types, to start with) of the Hive table from within Alteryx.

Regards,

Sandeep.

- Category In Database
- Category Input Output
- Data Connectors

The Summarize tool should have a drag-and-drop facility, plus cross-checking and suggestions for the types of aggregation that can be applied based on the data type.

E.g., let there be two different stacks: one to be used for Group By, another for aggregation.

We should be able to drag fields to these sections.

When we drag something to the Aggregation stack, a small suggestion list of possible aggregations to choose from should appear, based on the data type.

There should also be a small validation of the data type against the aggregation if we define the aggregation manually.
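The suggestion list and the validation could be driven by a simple lookup, roughly like the Python sketch below. The type families and aggregation names are hypothetical placeholders, not the Summarize tool's actual configuration.

```python
# Hypothetical mapping from broad field-type families to aggregations.
SUGGESTED_AGGREGATIONS = {
    "numeric": ["Sum", "Avg", "Min", "Max", "Count"],
    "string": ["Count", "Count Distinct", "Concatenate", "First", "Last"],
    "date": ["Min", "Max", "Count"],
}


def suggest(field_type):
    """Return the aggregations to offer when a field is dropped on the stack."""
    # Unknown types fall back to the one aggregation that always applies.
    return list(SUGGESTED_AGGREGATIONS.get(field_type, ["Count"]))


def validate(field_type, aggregation):
    """Check a manually chosen aggregation against the field's type."""
    return aggregation in suggest(field_type)


string_options = suggest("string")
sum_on_string_ok = validate("string", "Sum")
sum_on_number_ok = validate("numeric", "Sum")
```

Here `validate("string", "Sum")` is rejected while `validate("numeric", "Sum")` passes, which is the kind of small check described above.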

I can provide mock ups if anyone is interested.

- Category In Database
- Category Preparation
- Data Connectors
- Desktop Experience

Right now there is no exception join in the In-DB tools, which means that if I want to remove records I have to join and then filter on NULL, and with large tables this is really inefficient.
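For readers unfamiliar with the pattern, the sketch below shows the current workaround (left join, then filter on NULL) next to an explicit anti-join written with `NOT EXISTS`. It uses Python's built-in `sqlite3` module purely as a stand-in database; the table and column names are made up, and how any given database optimizes the two forms will vary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER, customer TEXT);
CREATE TABLE blocked (customer TEXT);
INSERT INTO orders VALUES (1, 'ann'), (2, 'bob'), (3, 'cy');
INSERT INTO blocked VALUES ('bob');
""")

# Current workaround: left join, then keep rows where the right side is NULL.
left_join_null = conn.execute("""
    SELECT o.id, o.customer
    FROM orders o
    LEFT JOIN blocked b ON o.customer = b.customer
    WHERE b.customer IS NULL
    ORDER BY o.id
""").fetchall()

# Exception (anti) join: keep orders with no matching row in blocked.
not_exists = conn.execute("""
    SELECT o.id, o.customer
    FROM orders o
    WHERE NOT EXISTS (
        SELECT 1 FROM blocked b WHERE b.customer = o.customer
    )
    ORDER BY o.id
""").fetchall()
```

Both queries return the same rows (orders 1 and 3); an exception join option in the In-DB Join tool would let the workflow express the second form directly.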

- Category Data Investigation
- Category In Database
- Category Preparation
- Data Connectors

We are seeing a trend where database teams are using certificate authentication with process ids instead of passwords. It would be extremely beneficial if Alteryx supported this type of authentication so that users can utilize the benefits of process ids instead of their individual ids which require password changes every 45 days. The database team that is currently switching to this type of authentication is the Mainframe DB2 team but it would be great if Alteryx enabled this for all database platforms.

- Category Connectors
- Category In Database
- Category Input Output
- Data Connectors

Hi,

I recently started working with Alteryx and would like to ask whether it is possible to have a preview of the data when using the In-Database tools on database tables.

I have to spend more time viewing results through Browse tools, which slows query performance a bit just to see the results. Also, a Browse tool has to be added at each step.

I have worked heavily with Power BI and see this as a prime need: in Power BI you can work on the visible data itself and figure out right away how the transformations are working. If a preview feature with cache options were provided, I think it would be a big boost for Alteryx users.

Thanks,

Brij

- Category In Database
- Data Connectors

When connecting to Oracle through In-DB, I came across an awkward issue: somehow Alteryx can't read the TNS entry that I've defined.

Eventually I found a solution, but it was not as straightforward as it should be...

1) There should be an easy way to edit, update, and add TNS records; finding the file, understanding it, and updating it is not straightforward for a non-IT person to tackle.

Here's a Tableau link on how to do it... http://kb.tableau.com/articles/howto/setting-an-oracle-connection-to-use-tnsnames-ora-or-ldap-ora

2) In particular, the In-DB tool doesn't even look at that file...

It says to enter a "TNS Server Name", but it actually asks for an IP address and some credentials like

1.0.0.0.1/oracl,

This needs to be written in the Help file.

You need a better UI/UX design that helps the end user...
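To show the gap between the two formats, here is a rough Python sketch that reads a tnsnames.ora-style entry and builds a `host:port/service` string in Oracle's Easy Connect style. The sample entry, host names, and the helper `tns_to_easy_connect` are made up for illustration, and whether the In-DB dialog accepts exactly this form is an assumption based on the description above.

```python
import re

# Made-up tnsnames.ora entry, for illustration only.
TNS_ENTRY = """
ORCL =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = dbhost.example.com)(PORT = 1521))
    (CONNECT_DATA = (SERVICE_NAME = orcl.example.com))
  )
"""


def tns_to_easy_connect(entry):
    """Build 'host:port/service' from a single tnsnames.ora entry."""

    def field(key):
        # Match "(KEY = value)" with optional spaces, case-insensitively.
        match = re.search(
            r"\(\s*" + key + r"\s*=\s*([^)\s]+)\s*\)", entry, re.IGNORECASE
        )
        return match.group(1) if match else None

    host, port, service = field("HOST"), field("PORT"), field("SERVICE_NAME")
    if host is None or service is None:
        raise ValueError("entry needs both HOST and SERVICE_NAME")
    return f"{host}:{port}/{service}" if port else f"{host}/{service}"


easy_connect = tns_to_easy_connect(TNS_ENTRY)
```

A helper like this only handles the simplest single-address entries, which is part of the point: the translation is easy for software to do and awkward for a non-IT user to do by hand.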

Best

- Category In Database
- Data Connectors

Hi Support,

Please consider this Idea in your future release of Alteryx based on the following details:

- Once an In-DB Write action is processed, the process is based only on an internal table (creation of a temporary table within Hive / Warehouse depository, load data inpath…, then rename and move this table to the location specified in the "Table Name" option).

- The customer requirement is to have this process available for external tables, with options similar to those available in the In-Memory tool.

- The work-around proposed from your side is satisfactory only as a temporary solution:

  - write to a table in the Write In-DB tool;

  - stream out 1 record from that table;

  - write that to a "fake" temporary table in the regular Output tool;

  - and then use a post-SQL statement to create the external table and move the data from the table created with Write In-DB to the external table.

Thanks & Regards,

YRA

- Category In Database
- Data Connectors

During my time with Alteryx, I've largely been able to accomplish all of my data processing jobs using the in-database toolset.

One exception is when it comes to window functions/multi-row formulas. When window functionality is needed, an Alteryx approach ends up looking something like this:

- Stream data out of database to an intermediate table

- Run a pre-written window function over dataset

- Store results into another intermediate table

- Load intermediate table into separate Alteryx workflow to continue further processing

While it may be possible to use a self-join as a workaround, it results in a bottlenecked, inefficient process. The same could be said for streaming the dataset out of database to use the non-in-database multi-row formula built in to Alteryx.
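For context, this is the kind of query an in-database multi-row tool would need to generate. The sketch uses Python's built-in `sqlite3` module as a stand-in database (window functions need SQLite 3.25 or later); the `sales` table and its columns are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, day INTEGER, amount INTEGER);
INSERT INTO sales VALUES
    ('east', 1, 10), ('east', 2, 15),
    ('west', 1, 7),  ('west', 2, 3);
""")

# LAG gives the previous row's value within each region (a multi-row
# formula), and SUM ... OVER gives a running total, with no self-join.
rows = conn.execute("""
    SELECT region, day, amount,
           amount - LAG(amount) OVER (PARTITION BY region ORDER BY day) AS change,
           SUM(amount) OVER (PARTITION BY region ORDER BY day) AS running_total
    FROM sales
    ORDER BY region, day
""").fetchall()
```

Each row carries the change from the previous day and a running total per region, computed entirely inside the database rather than through intermediate tables.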

If anyone knows of an existing solution, please let me know - otherwise I believe many users would greatly benefit from this added functionality.

- Category In Database
- Data Connectors

Hi,

It would be great if we could get the collection name through an engine variable in the workflow. We could then use collections extensively for client-specific categorization. If we create a collection for each client and can retrieve the name of the collection from which the workflow was executed, we can track which client a given workflow run was performed for. This would also solve database connection setup: based on the collection name (i.e., the client name), the same workflow could build the respective client's database connection.

- Category In Database
- Data Connectors

As my Alteryx workflows become more complex and involve integrating and conforming more and more data sources, it is becoming increasingly important to be able to communicate what the output fields mean and how they were created (i.e., transformation rules) as output for end-user consumption, particularly for the file target state output.

It would be great if Alteryx could do the following:

1. Produce a simple data dictionary from the Select tool and the Output tool. The Select tool more or less contains everything that is important to the business user. It would be awesome to know of a way to export this along with the actual data produced by the Output tool (hopefully this is something I've overlooked and is already offered).

Examples:

- Using Excel, produce the output data set in one sheet and the data dictionary for all of its attributes in a second sheet.

- For an ODBC output, you could load the data set to the database and have the option to create the data dictionary as either a database table or a CSV file (you'd also want to offer the ability to append that data to the existing dictionary file or table).

2. This one is more complex, but would be awesome: exporting the workflow into a spreadsheet in Source to Target (S2T) format, along with supporting metadata / data dictionary for every step of the ETL process. This is necessary when I need to communicate my ETL processes to someone who cannot afford to purchase an Alteryx licence but is required to review and approve the ETL process that I have built. I'd be happy to provide examples of how someone would likely want to see that formatted.
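As a sketch of what the simple data dictionary in item 1 might look like as a CSV file, here is a short Python example. The field metadata, column names, and `write_data_dictionary` helper are hypothetical; they only illustrate the shape of the output, not anything Alteryx produces today.

```python
import csv
import io

# Hypothetical field metadata, similar to what a Select tool displays.
fields = [
    {"name": "customer_id", "type": "Int64", "size": 8,
     "description": "Source system key"},
    {"name": "full_name", "type": "V_String", "size": 100,
     "description": "first + ' ' + last"},
]

COLUMNS = ["name", "type", "size", "description"]


def write_data_dictionary(fields, handle):
    """Write one CSV row per output field, with a header row first."""
    writer = csv.DictWriter(handle, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(fields)


# An in-memory buffer stands in for the dictionary file.
buffer = io.StringIO()
write_data_dictionary(fields, buffer)
dictionary_csv = buffer.getvalue()
```

Opening the target file in append mode and skipping the header would cover the "append to the existing dictionary" case mentioned above.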

- Category Data Investigation
- Category Documentation
- Category In Database
- Category Reporting

Alteryx has proven very useful in connecting to and processing data in a local Cloudera HDFS cluster. However, like most companies, we are moving much of our processing/storage to cloud environments like AWS and Azure. A typical security setting is to have this data encrypted within HDFS. Unfortunately, Alteryx does not support this environment. This could quickly force us to look for different platforms to use unless Alteryx can evolve to support this.

- Category In Database
- Data Connectors

MySQL released version 8 for general availability in April 2018. It would be nice if Alteryx installed LUA scripts for MySQL 8 by default. Currently, only LUA scripts for MySQL 5 are installed.

- Category In Database
- Category Input Output
- Data Connectors

It seems that I'm unable to use my mouse wheel to scroll through or "Control + A" to select all in the "Table or Query" field of the "Connect In-DB" tool. I have to click the top of it and drag down the text to highlight it all.

- Category In Database
- Data Connectors

I realize a true "In-DB" version of the SharePoint tool may not be possible due to the complexity and layers to get to an actual SharePoint database. However, would it be possible to add some parameters for pre-filtering? The O365 version of SharePoint has some timeout limitations that cause the SharePoint tool to fail randomly. If I could specify parameters (such as a date minus some number of days or hours on a particular date field) to filter the dataset, or specify record ID ranges to pull records in batches, this would allow users to work around this issue.
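The two pre-filters described here are simple to compute; a minimal Python sketch follows. `id_batches` and `cutoff` are hypothetical helper names, and nothing here calls SharePoint: it only produces the ranges and the date threshold that such parameters would carry.

```python
from datetime import datetime, timedelta


def id_batches(first_id, last_id, batch_size):
    """Yield inclusive (start, end) record ID ranges covering first_id..last_id."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    start = first_id
    while start <= last_id:
        end = min(start + batch_size - 1, last_id)
        yield (start, end)
        start = end + 1


def cutoff(now, days=0, hours=0):
    """Return the earliest timestamp to keep: now minus the given window."""
    return now - timedelta(days=days, hours=hours)


batches = list(id_batches(1, 10, 4))
since = cutoff(datetime(2020, 1, 10, 12, 0), days=7)
```

Pulling ten records in batches of four gives three requests, each small enough to stay under a timeout, and `since` is the threshold a date filter would compare against.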

- Category In Database
- Data Connectors

Looking forward to the addition of in-database tools for Sybase IQ (SAP IQ).

Please star this idea so Alteryx product management can prioritize the request accordingly...

Notes on SAP IQ:

- SAP IQ is a database server optimized for analytics/BI; it is a columnar RDBMS optimized for Big Data analytics

- IQ is very good for ad-hoc queries that would be difficult to optimize in a legacy transactional RDBMS...

- It's ranked 48th amongst all DBMS and 27th amongst relational DBMS

- SAP IQ16 has gained R language support

Currently we can connect with ODBC: http://community.alteryx.com/t5/Data-Sources/sap-sybase-iq-ODBC/m-p/9716/highlight/true#M700

- Category In Database
- Data Connectors

In the In-DB Join tool, add the option to perform a left-except or right-except join in addition to the 4 available options.

- Category In Database
- Category Join
- Data Connectors
- Desktop Experience

Please let me know if I've overlooked a suggestion and I'll be glad to upvote that post.

Just as we're able to right-click an input and turn it into a macro input, for example, it would be helpful to turn a Formula tool into an In-DB Formula tool, and the same goes for all the other tools that are available both inside and outside In-DB.

I created several fields inside a Formula tool and later on had to do the same In-DB. The field names and rules were the same; if I could, I would just have turned the one I already had into an In-DB tool.

Thanks.

- Category In Database
- Data Connectors

In the great workaround In-Database Sorting, the author explains how to push the sorting into the database.

Unfortunately, the Percent option is not available for a Hive connection (I'm using a Simba Hive ODBC driver v2.1 connection).

This is a behavior that my users would like to use in order to reduce the duration of the workflow (so they do not need to stream data in and out).

We are using Alteryx 10.6 x64.

What do you think about my need?

- Category In Database
- Data Connectors

I find it a little strange that we need to utilize a different set of tools (the In-DB tools) in order to achieve push-down optimization. This is not the case, for instance (as far as I know), in SPSS, perhaps others.

My request is to automatically push logic to the database layer if and when it's possible to do so.

In order to keep behavior similar to today's, it could be implemented as an overall workflow setting (on or off), such that leaving it turned off would result in tools continuing to behave as they do today. Turning it on would simply enact a process of combining as many tools as possible into SQL that is pushed to the database server, and would assume whatever permissions are necessary to make that happen (e.g., the ability to write temp tables).

Thanks!

- Category In Database
- Data Connectors
