Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))
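The balanced split requested above (an equal number of images for each label) could be sketched roughly as follows. This is only an illustration, not how the Image Recognition tool works: `balanced_split`, the `(path, label)` sample format, and the choice to downsample every label to the size of the rarest one are all assumptions.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same number of images."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)
    # Assumption: balance by downsampling every label to the size of the rarest one.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, test = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)
        train += [(p, label) for p in chosen[:n_train]]
        test += [(p, label) for p in chosen[n_train:]]
    return train, test


# Hypothetical file names: 10 cat images and 4 dog images.
samples = [(f"cat_{i}.png", "cat") for i in range(10)]
samples += [(f"dog_{i}.png", "dog") for i in range(4)]
train, test = balanced_split(samples, train_fraction=0.75)
```

With these inputs each label is capped at 4 images, so both the training and the test set contain the same number of cats and dogs.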

While it is good that the Test tool can stop outputs based on tests, it gives users little insight into the full details of the resulting error messages. It would be more useful to have an output location for a test when these errors appear, so that end users can troubleshoot instead of the workflow developer having to work through all of the error messaging.

There are workarounds for this that can be used, but they are often extensive and require the addition of significant logic. Adding optional outputs to the test tool would be a simple fix that could save a lot of hours of debugging when end users find an error.

- API SDK

- Category Developer

- Enhancement

Cheers,

Mark

- API SDK

- Category Developer

It would be very helpful if there was a tool that could stop the workflow without throwing an error. Currently, you can use the message tool to throw an error on a certain condition, and then enable the "Cancel Running Workflow on Error" option in the Runtime settings, but when the workflow is stopped in this way, many other tools don't function such as the Output Data and Email tools. Simply adding a tool that stops the workflow without erroring that also allows the other tools to finish their job would be great.

- API SDK

- Category Developer

- New Request

The Download tool is great and easy to use. However, if there is a connection problem with a request, the workflow errors out. Having an option to not error out, the ability to skip failed records, and retrying records that failed would be A LIFE CHANGER. Currently I use a Python tool to create multi-threaded requests, which has proven to be time-consuming.
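As a rough idea of the requested behaviour (retry, then skip failed records rather than stopping), here is a minimal single-threaded sketch using only the Python standard library. The names `http_get` and `download_all`, the retry count, and the linear backoff are assumptions for illustration; this is not how the Download tool is implemented.

```python
import time
import urllib.error
import urllib.request


def http_get(url, timeout=10):
    """Default fetcher: a plain GET via the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def download_all(urls, fetch=http_get, retries=3, backoff_seconds=1.0):
    """Fetch every URL, retrying failures; return (results, failed) instead of raising."""
    results, failed = {}, {}
    for url in urls:
        for attempt in range(1, retries + 1):
            try:
                results[url] = fetch(url)
                break
            except (urllib.error.URLError, TimeoutError, ConnectionError) as exc:
                if attempt == retries:
                    # Give up on this record only; the remaining URLs still run.
                    failed[url] = str(exc)
                else:
                    time.sleep(backoff_seconds * attempt)
    return results, failed
```

The `failed` dictionary plays the role of a separate output for records that never succeeded, so they can be logged or retried later without failing the whole run.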

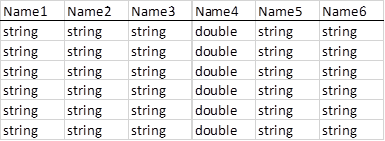

I'm converting some old macros that were built in 8.6 for use in 10.6 and found something that could potentially be changed about the Alteryx .xlsx drivers. Specifically this refers to the field types passed from a Dynamic Input tool when the data fields are blank when using an .xlsx file as the data source template.

When the new .xlsx file has fields populated, it works fine. If data is not populated in the new file, the field types are converted to Double[8]. This doesn’t cause a problem for the Dynamic Input, but it can cause problems for downstream tools. In the second case below, the field names are retained but the downstream Join tool errors when trying to join this now double field to a string (rather than returning no results from the join output as desired). This also occurs when a single field is empty, only that field will be converted to a Double[8] field. When the legacy .xlsx drivers are used, the field types are retained from the data source template.

File Source Template vs. file that is returned upon running

There are other solutions for this scenario such as using a Select tool with a loaded .yxft file of the correct field types, or selecting the Legacy .xlsx drivers from the File Format dropdown when configuring the Dynamic Input. However I thought this is something that could be improved about the Alteryx .xlsx drivers.

- API SDK

- Category Developer

It would be great to make the R tool in Alteryx closer in interface to, let's say, RStudio. By this I mean: can we please have code auto-completion, color highlighting of formulas/dataset names, and other useful interface details that make coding easier?

- API SDK

- Category Developer

This suggestion is particularly relevant for macros and custom tools created with the Python SDK, but I think it can apply to other tools as well.

When searching for tools in Alteryx, I can easily find tools I want fairly quickly. However, I often don't know which tool category it is in, which can sometimes slow me down (it is sometimes faster/easier for me to go to the tool category, rather than search for the tool I want).

As a quick example, I just installed the Word Cloud tool that @NeilR shared here: https://community.alteryx.com/t5/Dev-Space/Python-Tool-Challenge-BUILD-a-Python-tool-and-win-a-prize... . I was able to find the tool really easily using search once it was installed, but in order to find the tool category, I either had to unzip the .yxi file and find out where it was, or click around through the tool categories until I found it (it was in the Reporting tools, which makes a lot of sense).

Could we add something either to the search window or to the description/config of tools which calls out where a given tool is in the Tool Palette?

- API SDK

- Category Developer

Give me the ability to select a date range that limits the available selections for a date tool.

The limitations should include:

Future dates only

Past dates only

Dates between [startdate] and [enddate]

Future/Previous # years

Future/Previous # months

Future/Previous # weeks

Future/Previous # days
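The limitations listed above could be expressed as an allowed date window. The sketch below is only one possible reading: the rule names, the `allowed_range` and `is_selectable` helpers, and the decision to treat today as selectable in both the future and past rules are assumptions.

```python
import calendar
from datetime import date, timedelta


def shift_months(d, months):
    """Move a date by whole months, clamping the day to the target month's length."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def shift(d, amount, unit):
    if unit == "days":
        return d + timedelta(days=amount)
    if unit == "weeks":
        return d + timedelta(weeks=amount)
    if unit == "months":
        return shift_months(d, amount)
    if unit == "years":
        return shift_months(d, 12 * amount)
    raise ValueError(f"unknown unit: {unit}")


def allowed_range(rule, today, start=None, end=None, amount=0, unit="days"):
    """Return inclusive (earliest, latest) bounds; None means unbounded on that side."""
    if rule == "future":
        return today, None
    if rule == "past":
        return None, today
    if rule == "between":
        return start, end
    if rule == "next":
        return today, shift(today, amount, unit)
    if rule == "previous":
        return shift(today, -amount, unit), today
    raise ValueError(f"unknown rule: {rule}")


def is_selectable(candidate, bounds):
    earliest, latest = bounds
    return (earliest is None or candidate >= earliest) and (
        latest is None or candidate <= latest
    )
```

For example, `allowed_range("previous", today, amount=2, unit="weeks")` yields the window a date control would offer for "Previous 2 weeks".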

- API SDK

- Category Developer

- Category Interface

- Desktop Experience

Hello AlteryxDevs -

Back when I used to do more coding, some of the ORMs had the ability to return back to you a natively generated primary key for new rows created; this could be really useful in situations wherein you wanted / needed to create a parent / child relationship or needed to pass the value back to another process for some reason.

As it stands now, the mechanism to achieve this in Alteryx is kind of clunky; all I have been able to figure out is the following:

1) Block until done 1.

1a) Create parent record. Hopefully it has an identifying characteristic that can be attached to.

2) Block until done 2.

2a) Use a dynamic select to go get the parent record and get the id generated by the database.

3) Block until done 3.

3a) Append your primary key found in 2a. Create your children records.

I mean it works. But it is clunky, not graceful, and does not give you any control over the transaction, though that is kind of a more complicated feature request.
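For comparison, this is roughly what the "return the generated key" pattern looks like in code. The sketch uses Python's built-in `sqlite3` module with an in-memory database; the `parent` and `child` tables and the `insert_with_children` helper are made up for illustration, and other databases expose the generated key differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT)")
conn.execute(
    "CREATE TABLE child ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "parent_id INTEGER NOT NULL REFERENCES parent(id), "
    "item TEXT)"
)


def insert_with_children(conn, parent_name, items):
    """Insert a parent row, read back its generated key, then insert the children."""
    with conn:  # one transaction: commits on success, rolls back on an exception
        cursor = conn.execute("INSERT INTO parent (name) VALUES (?)", (parent_name,))
        parent_id = cursor.lastrowid  # key generated by the database for this insert
        conn.executemany(
            "INSERT INTO child (parent_id, item) VALUES (?, ?)",
            [(parent_id, item) for item in items],
        )
    return parent_id


parent_id = insert_with_children(conn, "order-1", ["a", "b"])
```

The parent key comes straight back from the insert, so no second lookup is needed, and both inserts share one transaction.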

Thanks!

- API SDK

- Category Developer

- Category Input Output

- Data Connectors

Simple - Add an option that currently exists in the Analytic App properties to "On Success - Run Another Analytic App". Instead this option would be to Run Another Module

Complex - Create a new tool that would have a single input that would accept a list of filepaths to Alteryx modules. The modules would be run sequentially (module 2 run once module 1 was finished).
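Outside of Designer, the "Complex" option amounts to a loop like the one below. This is a sketch only: `run_sequentially` is a hypothetical helper, and the idea that each module can be launched as a command line (for example through a command-line runner, where one is licensed) is an assumption rather than something the proposed tool would necessarily do.

```python
import subprocess


def run_sequentially(commands, run=subprocess.run):
    """Run each command in order; stop at the first one that exits with a non-zero code.

    Returns (completed_commands, failed_command_or_None).
    """
    completed = []
    for command in commands:
        result = run(command)  # blocks until this module has finished
        if result.returncode != 0:
            return completed, command
        completed.append(command)
    return completed, None
```

Each entry in `commands` would be an argument list such as a runner executable followed by a module path. Stopping at the first failure mirrors the "On Success" behaviour described in the "Simple" option.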

Cheers,

John Hollingsworth

- API SDK

- Category Developer

Hello gurus -

I think it would be an important safety valve if, at application start-up time, duplicate macros found in the 'classpath' (i.e., https://help.alteryx.com/current/server/install-custom-tools) generated a warning to the user. I know that if you have the same macro in the same folder you can get a warning at load time, but it doesn't seem to propagate out to different tiers on the macro loading path. As such, the developer can find themselves with difficult-to-diagnose behavior wherein the tool seems to be confused as to which macro metadata to use. I also imagine someone could arrive at a situation where a developer was not using the version of the macro they were expecting unless they go to the workflow tab for every custom macro on their canvas.
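Until something like this exists, a check of this kind can be scripted. The sketch below scans a list of folders for macro files that share a file name; `find_duplicate_macros` is a hypothetical helper, and matching on the lower-cased `.yxmc` file name alone is an assumption about what counts as a duplicate.

```python
from collections import defaultdict
from pathlib import Path


def find_duplicate_macros(search_dirs, pattern="*.yxmc"):
    """Map each macro file name found in more than one place to all of its locations."""
    seen = defaultdict(list)
    for directory in search_dirs:
        for path in sorted(Path(directory).rglob(pattern)):
            # Compare case-insensitively, as Windows file names are.
            seen[path.name.lower()].append(path)
    return {name: paths for name, paths in seen.items() if len(paths) > 1}
```

Passing the folders on the macro search path and printing the result gives the kind of warning list described above.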

Thank you for attending my TED talk on the upsides of providing warnings at startup of duplicate macros in different folder locations.

- API SDK

- Category Developer

- Category Macros

- Desktop Experience

Hi all,

I'm trying my best to think of the most secure way to do this and struggling within Alteryx using the Download tool in its current format.

I am using an Internal API Manager to retrieve data but this particular API requires additional "Headers" values for username and pw beyond my standard OAuth2 flow to the API Manager. Now I can run this locally but in order to save this down to our network as a workflow or to ideally run it from Gallery I should not be leaving credentials in open text anywhere so that anyone looking at the workflow or the underlying xml can grab these creds. Surely quite an easy one to mask or can this be made more dynamic to retrieve credentials from a Key Vault for example? e.g. Azure Key Vault?

Can we add masking to the Download Tool Header Values?
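One general pattern for keeping credentials out of a saved workflow is to resolve them at run time and mask them wherever they are displayed. The sketch below reads them from environment variables; the variable names `API_USERNAME` and `API_PASSWORD`, the header names, and both helper functions are hypothetical, and a key vault client could replace the environment lookup.

```python
import os


def build_auth_headers(env=os.environ):
    """Build header values from the environment so nothing secret is stored in the file."""
    try:
        return {"username": env["API_USERNAME"], "password": env["API_PASSWORD"]}
    except KeyError as missing:
        raise RuntimeError(f"Missing credential environment variable: {missing}") from None


def masked(headers, secret_keys=("password",)):
    """Return a copy that is safe to log or display."""
    return {key: ("****" if key in secret_keys else value) for key, value in headers.items()}
```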

Thanks,

Ciaran

- API SDK

- Category Developer

Many times, we come across scenarios where the Formula tool fails due to a change in the data type of the input fields.

For instance, a numerical calculation would fail or would not give the correct result if the data type of a field was changed for some reason (from double to string, for example).

In such cases, we might have to change the data type in a Select tool or add ToNumber() to the field's expression in the Formula tool to make it work.

My proposal is to have a Formula tool that is dynamic enough to identify the purpose of an expression and either add the ToNumber() expression at execution time or convert the data type of the fields as required by the expressions in the formula.
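The idea of coercing inputs before a numeric expression runs can be illustrated in a few lines of Python. This is not the Formula tool's logic; `to_number` is a hypothetical helper, and returning a default for unparseable values is an assumed policy.

```python
def to_number(value, default=None):
    """Return value as a number, parsing strings; fall back to default if that fails."""
    if isinstance(value, (int, float)) and not isinstance(value, bool):
        return value
    try:
        return float(str(value).strip())
    except ValueError:
        return default


# The same field arriving as a double in one run and as a string in another.
as_double = [10.5, 2.0]
as_string = ["10.5", " 2 "]
total_a = sum(to_number(v) for v in as_double)
total_b = sum(to_number(v) for v in as_string)
```

Both totals come out the same, which is the behaviour the proposal asks the tool to provide automatically.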

- API SDK

- Category Developer

- Category Preparation

- Desktop Experience

1) Add cURL Options support from within the Download Tool

The Download tool allows adding Header and Payload (data), which are key components of the cURL command structure. However, there is no avenue to include any of the cURL options in the Download tool. Most of the 'solutions' found have been to abandon the use of the Download tool and run the cURL EXE through the Run Command tool. However, that introduces many other issues such as sending passwords to the Run Command tool, etc. etc.

With the variation and volume of connection requests that are being funneled through the Download tool, users are really looking for it to have the flexibility to do what they need it to do with the Options that they need to send to cURL.

2) Update cURL version used by Alteryx Download tool to a recent version that allows passing host keys

cURL added the option to pass host keys starting in version 7.17.1 (available 10/29/2007). The option is --hostpubmd5 <md5>:

https://curl.haxx.se/docs/manpage.html

And it appears that the cURL shipped with Alteryx is 7.15.1 (from 12/7/2005):

PS C:\Program Files\Alteryx\bin\RuntimeData\Analytic_Apps> .\curl -V

curl 7.15.1 (i586-pc-mingw32msvc) libcurl/7.15.1 zlib/1.2.2

Protocols: tftp ftp gopher telnet dict ldap http file

Features: Largefile NTLM SSPI libz
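The version comparison above can be checked mechanically. This small sketch parses the first line of the `curl -V` output shown and compares it with 7.17.1, the version cited above for `--hostpubmd5`; `parse_curl_version` is a hypothetical helper.

```python
import re


def parse_curl_version(banner):
    """Extract (major, minor, patch) from the first line of `curl -V` output."""
    match = re.match(r"curl (\d+)\.(\d+)\.(\d+)", banner)
    if match is None:
        raise ValueError(f"unrecognized curl banner: {banner!r}")
    return tuple(int(part) for part in match.groups())


banner = "curl 7.15.1 (i586-pc-mingw32msvc) libcurl/7.15.1 zlib/1.2.2"
REQUIRED = (7, 17, 1)  # version cited above as the first with --hostpubmd5
supports_host_keys = parse_curl_version(banner) >= REQUIRED
```

For the banner above the check comes out false, which matches the gap described.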

Thanks for considering!

Cameron

- API SDK

- Category Developer

- Category Input Output

- Data Connectors

Currently pip is the package manager in place within the Designer. Unfortunately this is something that doesn't fit our requirements as Data Scientists. We prefer using conda due to the following reasons:

- conda also manages non-Python library dependencies. This way conda can be used to manage R packages as well, which comes in quite handy (even though not all packages from the CRAN repository are available)

- conda provides a very simple way of creating conda envs (similar to virtualenv but with conda one can also install and manage pip packages --> virtualenv cannot install conda packages!) to isolate required packages (with specific versions) used in a workflow (e.g. for a Python Tool in Designer).

So I would like to have conda instead of, or in addition to, pip, and would like to create my own conda envs where I install the packages I need for a specific task within my workflow. Moreover, if you are thinking about offering an R Jupyter notebook capability (like the Python Tool), it could be beneficial to change from pip to conda for managing packages in both worlds.

- API SDK

- Category Developer

Problem:

Dynamic Input tool depends on a template file to co-relate the input data before processing it. Mismatch in the schema throws an error, causing a delay in troubleshooting.

Solution:

It would be great if the users got an enhancement in this Tool, wherein they could Input Text or Edit Text without any template file. (Similar to a Text Editor in Macro Input Tool)

- API SDK

- Category Developer

- Category Input Output

- Data Connectors

Give me the ability to show/hide, enable/disable user interface tools via a control parameter.

- API SDK

- Category Developer

- Category Interface

- Desktop Experience

Figuring out who is using custom macros and/or governing the macroverse is not an easy task currently.

I have started shipping Alteryx logs to Splunk to see what could be learned. One thing that I would love to be able to do is understand which workflows are using a particular macro, or any custom macros for that matter. As it stands right now, I do not believe there is a simple way to do this by parsing the log entries. If, instead of just saying 'Tool Id 420', it said 'Tool Id 420 [Macro Name]' that would be very helpful. And it would be even *better* if the logging could flag out of the box macros vs custom macros. You could have a system level setting to include/exclude macro names.
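As a stopgap, a Tool Id to macro name lookup can be built from the workflow file itself and joined to the log data. The sketch below assumes the workflow XML stores each tool as a `Node` element with a `ToolID` attribute and that macro tools carry an `EngineSettings` child with a `Macro` attribute; the sample document is a simplified stand-in for that assumed shape, not a real workflow.

```python
import xml.etree.ElementTree as ET


def macros_by_tool_id(workflow_xml):
    """Return {tool_id: macro_path} for every node that references a macro."""
    root = ET.fromstring(workflow_xml)
    usage = {}
    for node in root.iter("Node"):
        settings = node.find("EngineSettings")
        if settings is not None and settings.get("Macro"):
            usage[node.get("ToolID")] = settings.get("Macro")
    return usage


# Simplified, hypothetical workflow fragment used only to exercise the function.
sample = """
<AlteryxDocument>
  <Nodes>
    <Node ToolID="1"><EngineSettings EngineDll="Base.dll" /></Node>
    <Node ToolID="420"><EngineSettings Macro="CleanNames.yxmc" /></Node>
  </Nodes>
</AlteryxDocument>
"""
lookup = macros_by_tool_id(sample)
```

The resulting dictionary could be loaded into the log platform as a lookup table so that 'Tool Id 420' can be shown alongside its macro name.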

Thanks for listening.

brian

- API SDK

- Category Developer

- Category Macros

- Category Reporting

I would love the ability to double-click an unnamed tab and rename it for 'temp' workflows.

eg - "New Workflow*" to "working on macro update"...

Reason:

- When Designer crashes, it is a huge pain to go through autosaves with "New Workflow*" names to find the one you need.

- I work on a lot of projects at once, pull bits of code out to work on a small subset, and then get distracted and have to move over to another project. With multiple windows and tabs open, it gets confusing with 10 'New Workflow' tabs open.

- Allows for better organization of open tabs: you can drag tabs into groups and put them in order, so you know where to start from last time.

- API SDK

- Category Developer

All the tools in the Interface category should be able to be populated and have their values assigned/selected dynamically.

We now have the option of populating a set of values for Tree/List/Dropdown.

But we do not have the option of selecting some of them by default while the app is loading.

Other controls like TextBox/NumericUpDown/CheckBox/RadioButton should also be controllable by values from a database.

For example, if I have a set of three radio buttons, I should be able to select a radio button based on my database values while the workflow is loading.

- API SDK

- Category Developer

- Category In Database

- Category Interface
