Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition" tool for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that there is an equal number of images for each label),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
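The balanced split requested in the second point can be sketched as follows. This is a minimal Python sketch, not how the Image Recognition tool works internally: it assumes the training data is a list of (image path, label) pairs, and `balanced_split` is a hypothetical helper that down-samples every label to the size of the smallest one and then splits each label with the same training fraction.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same number
    of images to training and to validation.

    Each label is first down-sampled to the size of the smallest label,
    then split with the same fraction.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    smallest = min(len(paths) for paths in by_label.values())
    n_train = int(smallest * train_fraction)

    train, valid = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, smallest)  # down-sample to the smallest label
        train += [(p, label) for p in chosen[:n_train]]
        valid += [(p, label) for p in chosen[n_train:]]
    return train, valid
```

With 10 "cat" images, 6 "dog" images and the default fraction of 0.8, each label contributes 4 images to training and 2 to validation.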

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely believe it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))

Simple - Add an option like the existing "On Success - Run Another Analytic App" option in the Analytic App properties, except that it would run another module.

Complex - Create a new tool with a single input that accepts a list of file paths to Alteryx modules. The modules would run sequentially (module 2 runs once module 1 has finished).
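The "Complex" option amounts to a sequential runner over a list of module paths. A minimal Python sketch, assuming each module can be launched by some external command: `run_sequentially` and `build_command` are hypothetical names, and the placeholder command in the example only runs Python, not Alteryx.

```python
import subprocess
import sys


def run_sequentially(module_paths, build_command):
    """Run each module in order: module N+1 starts only after module N has
    finished successfully. Stops at the first failure.

    build_command(path) -> list[str] is a hypothetical hook returning the
    command line that runs one module.
    """
    completed = []
    for path in module_paths:
        result = subprocess.run(build_command(path))  # blocks until the module finishes
        if result.returncode != 0:
            raise RuntimeError(f"{path} failed with exit code {result.returncode}")
        completed.append(path)
    return completed


if __name__ == "__main__":
    # Placeholder command: swap in whatever actually runs a module in your setup.
    print(run_sequentially(["module1.yxmd", "module2.yxmd"],
                           lambda p: [sys.executable, "-c", f"print('running {p}')"]))
```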

Cheers,

John Hollingsworth

Hello,

What about a new tool to handle file actions such as deleting, renaming, and moving files? Today we do this with cmd, which is not the easiest way. I have developed a tool in Amphi that gives a good idea of what we could have in Alteryx.

Here is the result:

This could help clean up temporary or unneeded files, and probably has other use cases.
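A file-action tool could take one row per action. The sketch below is a minimal Python illustration, assuming a hypothetical input of (action, source, target) rows with only the three actions named in the post; `apply_file_actions` is a hypothetical name, and errors are logged per row instead of stopping the whole run.

```python
import shutil
from pathlib import Path


def apply_file_actions(actions):
    """Apply rows of (action, source, target).

    Supported actions: "delete", "rename" (target = new file name in the same
    folder) and "move" (target = destination folder, created if missing).
    Returns (action, source, status) rows for logging.
    """
    log = []
    for action, source, target in actions:
        src = Path(source)
        try:
            if action == "delete":
                src.unlink()
            elif action == "rename":
                src.rename(src.with_name(target))
            elif action == "move":
                Path(target).mkdir(parents=True, exist_ok=True)
                shutil.move(str(src), str(Path(target) / src.name))
            else:
                raise ValueError(f"unknown action {action!r}")
            log.append((action, source, "ok"))
        except (OSError, ValueError) as exc:
            log.append((action, source, f"error: {exc}"))
    return log
```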

Best regards,

Simon

We could really use a proper API input tool, rather than relying on curl queries and the like, which end up requiring many tools to parse into a proper table, even with the JSON tools!

I, for one, regularly deal with cloud APIs and pull data from them. We need an API Input tool that can handle various auth methods, headers, params, body data, etc., and that will ALSO convert the typical output (JSON) into two outputs: meta info and table-compatible info.

I'm moving from direct SQL queries to using APIs, and I literally have 15 tools and steps to create the same table data that the single SQL query tool gave me. In one case, I need an 18-tool container just to get a Bearer Token before I can pass it on to another container that actually does the curl query, and another 15 tools to turn the output JSON into proper table-style data. (Yes, I already use the JSON tools, but the data requires massaging before that tool works properly.)

As an add-on, we should also be able to create aliases for the API connection so we don't have to put username/password information into the workflow at any point. Interface tools are nice, but not really useful in automated workflow runs.

There's got to be a better way!
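The two-output conversion described above (meta info plus table-compatible rows) can be sketched in Python. This is only an illustration under assumptions: `split_api_response` is a hypothetical helper, the name of the key holding the records (`items` in the test) differs between APIs, nested objects are flattened to dotted column names, and authentication and the HTTP call itself are left out.

```python
import json


def split_api_response(payload, records_key):
    """Split a JSON API response into two outputs:
    - meta: top-level scalar fields (counts, paging info); nested objects are left out
    - rows: one flat dict per record in payload[records_key], with nested
      objects flattened to dotted column names (lists are kept as-is)
    """
    data = json.loads(payload) if isinstance(payload, str) else payload

    def flatten(obj, prefix=""):
        flat = {}
        for key, value in obj.items():
            name = f"{prefix}{key}"
            if isinstance(value, dict):
                flat.update(flatten(value, name + "."))
            else:
                flat[name] = value
        return flat

    meta = {k: v for k, v in data.items()
            if k != records_key and not isinstance(v, (dict, list))}
    rows = [flatten(record) for record in data.get(records_key, [])]
    return meta, rows
```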

In the standard Output Data tool, when the file type is CSV, you can choose a custom delimiter. It would be great to have the same option in the Azure Data Lake output tool so that, for example, you can write a pipe-delimited file to your ADLS storage account.
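For reference, writing a pipe-delimited file only requires a different delimiter in the CSV writer. A minimal Python sketch using the standard `csv` module; `to_delimited` is a hypothetical helper name, and the upload to ADLS is not shown.

```python
import csv
import io


def to_delimited(rows, header, delimiter="|"):
    """Render rows as delimited text (pipe by default) with a header line.
    Fields that contain the delimiter are quoted automatically."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()
```

A value such as `a|b` is written as `"a|b"`, so the file still parses correctly with a pipe delimiter.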

Hi Team,

I am starting to use Alteryx as the platform for our daily data load process. Much of the data is sent via SFTP, and it would be a lot simpler if the following features were directly available instead of having to use the Run Command tool and/or scripting.

1. Ability to use a wildcard in the download instead of explicitly defining the filenames (this can be done indirectly, but requires multiple tools: two downloads, a parse, etc.).

2. Ability to delete files after they have been downloaded successfully.

- I have seen a couple of posts in the Community trying to do this but haven't found one that worked for me (again, these used the Run Command tool, scripts, and other utility programs).

I'd appreciate it if you could look into this request!
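Both requested features combine into one loop: filter the remote listing with a wildcard, and delete each file only after its own download has succeeded. A minimal Python sketch; `download_matching` is a hypothetical name, and `list_remote`, `download` and `delete` are hypothetical callables standing in for whatever SFTP client is used, so no particular SFTP library is assumed.

```python
from fnmatch import fnmatchcase


def download_matching(list_remote, download, delete, pattern):
    """Download every remote file whose name matches a wildcard pattern,
    deleting each remote file only after its download has succeeded.

    If a download raises, that file (and any not yet processed) stays on
    the server.
    """
    downloaded = []
    for name in sorted(list_remote()):
        if not fnmatchcase(name, pattern):  # case-sensitive on every OS
            continue
        download(name)
        delete(name)                        # reached only if download succeeded
        downloaded.append(name)
    return downloaded
```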

Hi,

I didn't find this mentioned before, but apologies if it has been. A few clients and I have found that the Download tool's 'Query String/Body' field is quite difficult to use from a UX perspective. If you have a long query (or, in fact, anything over three short rows), it's almost impossible to navigate easily to the part you want: you have to highlight and move the cursor manually or use the up and down arrows, while only ever seeing a few lines of the query.

My suggestion(s) would be to:

1: include a scroll bar as a minimum

and ideally in addition, either:

2a: give the box itself more real estate (fixed) in the Payload tab, or

2b: allow the user to resize this box in the tool config (probably more complicated?)

Specific problem below, including my (terrible!) drawings on it:

I appreciate there are other methods, including taking the query from a field, but many users, particularly newer ones, prefer to copy a query into this String/Body field and edit it as needed, and right now that approach is almost unmanageable.

Thanks,

Andy

It is important to be able to test for heteroscedasticity, so a tool for this test would be much appreciated.

In addition, I strongly believe the ability to calculate robust standard errors should be included as an option in existing regression tools, where applicable. This is a standard feature in most statistical analysis software packages.
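To make the request concrete for a regression with a single predictor, the sketch below computes the White (HC0) robust standard error of the slope, Var(b) = sum((x_i - x_bar)^2 * e_i^2) / Sxx^2, and Koenker's n*R^2 form of the Breusch-Pagan test (regressing the squared residuals on x). It is a plain-Python sketch with no statistics library and a hypothetical function name, not how Alteryx's regression tools compute anything; the returned statistic is compared with a chi-squared distribution with 1 degree of freedom (about 3.84 at the 5% level).

```python
def ols_with_diagnostics(x, y):
    """Simple linear regression y = a + b*x, plus:
    - the White (HC0) heteroscedasticity-robust standard error of b
    - Koenker's Breusch-Pagan statistic n*R^2 from regressing e^2 on x
    Returns (a, b, robust_se_b, bp_stat).
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]

    # HC0 sandwich estimator for the slope
    var_b_hc0 = sum((xi - x_bar) ** 2 * e ** 2 for xi, e in zip(x, resid)) / sxx ** 2
    robust_se_b = var_b_hc0 ** 0.5

    # Auxiliary regression of squared residuals on x; R^2 = SS_reg / SS_tot
    e2 = [e ** 2 for e in resid]
    e2_bar = sum(e2) / n
    b_aux = sum((xi - x_bar) * (ei - e2_bar) for xi, ei in zip(x, e2)) / sxx
    ss_tot = sum((ei - e2_bar) ** 2 for ei in e2)
    r2_aux = (b_aux ** 2 * sxx) / ss_tot if ss_tot else 0.0
    bp_stat = n * r2_aux
    return a, b, robust_se_b, bp_stat
```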

Many thanks!

It would be nice to improve upon the 'Block Until Done' tool.

Additional Features I could see for this tool:

1: Allow any tool (even an Output tool) to be linked as an incoming connection to a 'Block Until Done' tool.

2: Allow multiple tools to be linked to a 'Block Until Done' tool (similar to the 'Union' tool).

The purpose is to let Alteryx enforce the order of operations for workflows, allowing people to automate processes in the same order they used to perform them manually. I understand there's a workaround using CReW macros (Runner/Conditional Runner) that can essentially accomplish this; however (and I may be wrong), it feels like a workaround rather than the tool working the way one would expect, and I lose the ability to track/log/troubleshoot my workflow as it progresses (or if it has an issue).

I'd be happy to hear if something like that already exists. I'm just looking for ways to ensure the order of operations is followed for a particular workflow I am managing.
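The ordering requested for a multi-input Block Until Done is a dependency graph. A minimal Python sketch using the standard `graphlib` module (Python 3.9+); `execution_stages` and the tool names are hypothetical, each tool maps to the set of tools that must finish first, and each returned stage lists the tools whose inputs have all completed.

```python
from graphlib import TopologicalSorter


def execution_stages(dependencies):
    """Group tools into stages: a tool appears only after every tool it
    depends on (its incoming connections) has finished.

    dependencies maps a tool name to the set of tools that must finish
    first. Raises graphlib.CycleError if the dependencies loop.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all inputs of these tools are done
        stages.append(ready)
        sorter.done(*ready)                 # mark the whole stage as finished
    return stages
```

For example, `execution_stages({"Output B": {"Output A"}, "Email": {"Output A", "Output B"}})` returns `[["Output A"], ["Output B"], ["Email"]]`.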

Thanks,

Randy

As a security enhancement, the default password setting should be to encrypt for the user. Although this is critical for security, my users have overlooked it even with training. They truly aren't culpable if they forget. If encryption were the default, they would have to consciously change it to an insecure setting.

From a security perspective the current default setting is backwards.

Grant Hansen

A lot of popular machine learning systems use a computer's GPU to speed up some of the math to a huge degree. The header image of this article on Medium shows a 15x difference between a high-end CPU and a high-end GPU. GPU support could also speed up the spatial tools. Perhaps Alteryx should add this functionality to speed up these tools, which I imagine are currently some of the slowest.

When moving external data into the database, the underlying SQL looks like:

CREATE GLOBAL TEMPORARY TABLE "AYX16020836880b41e08246b59ee8c"

...

My client would like to add a schema prefix to the table name, as in:

CREATE GLOBAL TEMPORARY TABLE MMMM999_DM_USER."AYX16020836880b41e08246b59ee8c"

where MMMM999_DM_USER is supplied in the configuration.

A service account automatically sets the current session's schema to something like MMMM999 (alter session set current_schema=MMMM999;).
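The configuration-supplied prefix boils down to qualifying the generated table name. A minimal Python sketch: `temp_table_ddl` is hypothetical, it only builds the statement header shown above (the column list stays elided), and it checks the schema against Oracle's rules for unquoted identifiers so the configured value cannot inject SQL.

```python
import re

# Unquoted Oracle identifiers: a letter followed by letters, digits, _, $ or #
_SCHEMA_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_$#]*")


def temp_table_ddl(table_name, schema=None):
    """Return the CREATE GLOBAL TEMPORARY TABLE header for a staging table,
    optionally qualified with a schema supplied in the configuration."""
    quoted_table = '"' + table_name.replace('"', '""') + '"'
    if schema is None:
        return f"CREATE GLOBAL TEMPORARY TABLE {quoted_table}"
    if not _SCHEMA_NAME.fullmatch(schema):
        raise ValueError(f"unsupported schema name: {schema!r}")
    return f"CREATE GLOBAL TEMPORARY TABLE {schema}.{quoted_table}"
```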

I would like to suggest adding a configuration option to the Block Until Done tool that allows the user to prioritize the release of data streams through multiple Block Until Done tools in the same module.

In the example below, the objective is to update multiple sheets in a single Excel workbook. Each sheet is a different data stream, and the streams cannot be unioned together, so the usual solution of filtering a single stream and feeding it into multiple Block Until Done tools is not possible.

What I would like to be able to do is have a configuration where Block Until Done #2 will not allow its data stream to pass through until Block Until Done #1 is complete, then Block Until Done #3 will not pass its data stream through until Block Until Done #2 is complete, and so on through all the Block Until Done instances.
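The chained release can be sketched with one thread per stream and one event per Block Until Done, where stream N waits for the event set when stream N-1 finishes. A minimal Python sketch; `write_in_order` and the writer callables are hypothetical, and a failure in one stream skips the remaining ones.

```python
import threading


def write_in_order(writers):
    """Run one writer per data stream, releasing stream i only after stream
    i-1 has finished, like chained Block Until Done #1 -> #2 -> #3.

    writers: list of zero-argument callables (e.g. one per Excel sheet).
    If a writer raises, later writers are skipped and the first error is
    re-raised.
    """
    finished = [threading.Event() for _ in writers]
    errors = []

    def run(i):
        if i > 0:
            finished[i - 1].wait()  # hold this stream until the previous one completes
        try:
            if not errors:          # skip later sheets once an earlier one failed
                writers[i]()
        except Exception as exc:
            errors.append(exc)
        finally:
            finished[i].set()       # always release the next stream

    # All threads start at once; only the events enforce the order.
    threads = [threading.Thread(target=run, args=(i,)) for i in range(len(writers))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    if errors:
        raise errors[0]
```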

When working with APIs, it is quite common to use the JSON Parse tool to parse the data returned by the API. However, the JSON data may be missing key:value pairs that are simply not present in the response, which causes issues in downstream tools that expect those fields. The current workaround is either to use the CReW Ensure Fields macro or to union a Text Input containing the expected fields, forcing the missing fields downstream.

The issues with this are:

1) Users may not be aware of the requirement to ensure fields are present

2) You need to know the names of all the fields to include in the ensure fields macro

Therefore the feature request is to add an option to the JSON parse tool to add the model schema as an input.

For example, with the UK Companies House API, the model schema for getting a list of all the directors at a company is:

{
  "active_count": "integer",
  "etag": "string",
  "items": [
    {
      "address": {
        "address_line_1": "string",
        "address_line_2": "string",
        "care_of": "string",
        "country": "string",
        "locality": "string",
        "po_box": "string",
        "postal_code": "string",
        "premises": "string",
        "region": "string"
      },
      "appointed_on": "date",
      "country_of_residence": "string",
      "date_of_birth": {
        "day": "integer",
        "month": "integer",
        "year": "integer"
      },
      "former_names": [
        {
          "forenames": "string",
          "surname": "string"
        }
      ],
      "identification": {
        "identification_type": "string",
        "legal_authority": "string",
        "legal_form": "string",
        "place_registered": "string",
        "registration_number": "string"
      },
      "links": {
        "officer": {
          "appointments": "string"
        },
        "self": "string"
      },
      "name": "string",
      "nationality": "string",
      "occupation": "string",
      "officer_role": "string",
      "resigned_on": "date"
    }
  ],
  "items_per_page": "integer",
  "kind": "string",
  "links": {
    "self": "string"
  },
  "resigned_count": "integer",
  "start_index": "integer",
  "total_results": "integer"
}

But fields such as "resigned_on" are not present in the data if no directors have resigned. To avoid users overlooking fields that need to be added, an optional input that takes the model schema and creates any missing fields would greatly improve the API development process and minimise errors once a workflow is in production.
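The optional schema input could fill in missing keys before parsing. A minimal Python sketch, assuming the response and the model schema have already been loaded as dictionaries; `ensure_fields` is a hypothetical helper. Missing scalar fields become `None`, missing objects are rebuilt from the schema, and each object inside a list is filled the same way.

```python
def ensure_fields(record, schema):
    """Return a copy of record in which every key of the model schema exists.

    - scalar fields ("string", "integer", "date", ...) default to None
    - objects are filled recursively, even when absent from the record
    - lists of objects are filled element by element (a missing list becomes [])
    The input record is not modified.
    """
    result = dict(record)
    for key, spec in schema.items():
        value = result.get(key)
        if isinstance(spec, dict):
            result[key] = ensure_fields(value if isinstance(value, dict) else {}, spec)
        elif isinstance(spec, list):
            item_spec = spec[0] if spec else None
            items = value if isinstance(value, list) else []
            if isinstance(item_spec, dict):
                items = [ensure_fields(item if isinstance(item, dict) else {}, item_spec)
                         for item in items]
            result[key] = items
        else:
            result.setdefault(key, None)
    return result
```

For example, an officer record without `resigned_on` comes back with `"resigned_on": None`, so the column always exists downstream.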

Currently the R-based predictive tools are single-threaded, which means that to use multi-threading we need to separately download a third-party R distribution such as Microsoft R Client.

Given that this is a better option, shouldn't it be the default installed with Alteryx?

The Dynamic Input tool fails with the following error when attempting to input a set of Excel files:

Error: Dynamic Input (1): The file "Test2.xlsx|||<List of Sheet Names>" has a different schema than the 1st file in the set.

Each spreadsheet contains two tabs and all tabs contain the same columns.

The root cause of the schema error is that maximum sheet name length in the two spreadsheets is different. The first spreadsheet uses "East" and "West" for sheet names. The second spreadsheet uses "North" and "South" for sheet names. The Dynamic Input tool uses the longest sheet name when defining the effective Schema.

Excel limits sheet name length to 31 characters. It would be helpful if the Dynamic Input tool used 31 as the minimum string length when defining a schema from Excel sheet names.

The Input Data tool exhibits similar behavior when using a wildcard in the filename and the "Import only the list of sheet names" option.

A batch macro can be used as a workaround.
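The root cause can be expressed as a field-size rule. A minimal Python sketch contrasting the current behaviour described above with the proposed 31-character minimum; `sheet_name_field_size` is a hypothetical helper, not part of the Dynamic Input tool.

```python
EXCEL_MAX_SHEET_NAME = 31  # Excel's limit on sheet-name length


def sheet_name_field_size(sheet_names, use_excel_limit=True):
    """Size of the string field holding sheet names for one workbook.

    use_excel_limit=False mimics the current behaviour (size = longest name
    in this workbook, so "East"/"West" and "North"/"South" differ);
    True uses Excel's 31-character limit as the minimum, so every workbook
    yields the same schema.
    """
    longest = max(len(name) for name in sheet_names)
    return max(longest, EXCEL_MAX_SHEET_NAME) if use_excel_limit else longest
```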

It would be incredibly helpful if Alteryx canvases auto-populated some metadata about each canvas to track its origin and updates.

The metadata fields I'm specifically thinking about are:

- Author

- Date Created

- Date Last Updated

Similar to how pip can be used through the ayx `installPackages` function, there needs to be a way to upgrade Python itself. Some important packages, such as Keras, have errors in Python 3.6 that are not present with 3.7, so it should really be up to the user which Python version to use. Another solution would be to allow users to point to their own local installation of Python, so that they can keep their own local site-packages consistent with the ones Alteryx uses.

My company performs installs with admin rights, but the end user does not actually have admin rights on the laptop. Therefore, when attempting to add modules to the Python developer tool, pip install fails. The failure occurs because Designer is installed in Program Files, where a non-admin user cannot write; the usual workaround is also not possible, since the installed version is the admin version of Designer rather than the non-admin version.

Could the tool be more flexible from the get-go? Currently the only way around this is to work through articles about the SDK and create custom requirements.txt files. My goal was just to be able to use Python with Alteryx and add modules as I need them. I'm using the very cool updates in 3.5, but thought this conundrum might happen to others in the same situation: an admin install with non-admin rights. Thanks.

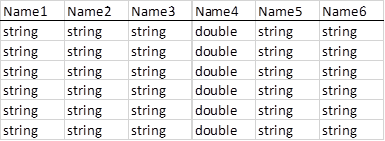

I'm converting some old macros that were built in 8.6 for use in 10.6 and found something that could potentially be changed about the Alteryx .xlsx drivers. Specifically this refers to the field types passed from a Dynamic Input tool when the data fields are blank when using an .xlsx file as the data source template.

When the new .xlsx file has fields populated, it works fine. If data is not populated in the new file, the field types are converted to Double[8]. This doesn't cause a problem for the Dynamic Input itself, but it can cause problems for downstream tools. In the second case below, the field names are retained, but the downstream Join tool errors when trying to join this now-Double field to a string field (rather than returning no results from the Join output, as desired). This also occurs when a single field is empty: only that field is converted to Double[8]. When the legacy .xlsx drivers are used, the field types are retained from the data source template.

File Source Template vs. file that is returned upon running

There are other solutions for this scenario, such as using a Select tool with a loaded .yxft file of the correct field types, or selecting the Legacy .xlsx drivers from the File Format dropdown when configuring the Dynamic Input. However, I thought this is something that could be improved in the Alteryx .xlsx drivers.
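The requested improvement amounts to a type-resolution rule. A minimal Python sketch; `resolve_field_types` is hypothetical and type names are passed around as plain strings: a field whose values are all blank keeps the template's type instead of the inferred default.

```python
def resolve_field_types(template_types, inferred_types, columns):
    """Pick a type for each field of a newly read file.

    template_types / inferred_types: field name -> type name.
    columns: field name -> list of values read from the new file.
    A field whose values are all blank (None or whitespace) keeps the
    template's type instead of falling back to a numeric default.
    """
    resolved = {}
    for name, inferred in inferred_types.items():
        values = columns.get(name, [])
        all_blank = all(v is None or str(v).strip() == "" for v in values)
        if all_blank and name in template_types:
            resolved[name] = template_types[name]
        else:
            resolved[name] = inferred
    return resolved
```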

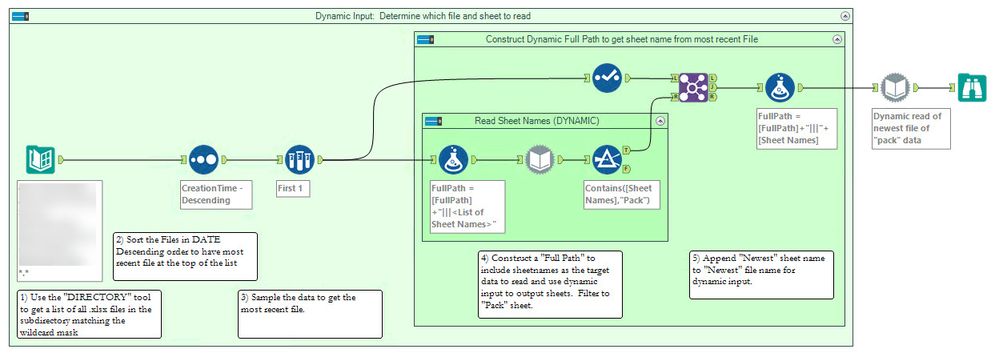

This wasn't pretty (actually, it was challenging and pretty when I was done with it)!

My client receives files whose names include a static and a dated portion (e.g. Data for 2018 July.xlsx), and each file contains multiple sheets. One sheet contains a keyword (e.g. Reported Data), but the sheet name also includes a variable component (e.g. July Reported Data). I first needed to read a directory to find the most recent file; then, when I wanted to supply the Dynamic Input with the sheet name, I wasn't able to use a pattern.

The solution was to use a Dynamic Input tool just to read the sheet names and then append the filtered name to the original full path.

[FullPath] + "|||<List of Sheet Names>"

This could then feed a Dynamic Input.

Given the desire to automate the reading of newly received Excel data and the fluidity of the naming of both files and sheets, more flexibility in the Dynamic Input is requested.
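The two steps described above (find the most recent file, then pick the sheet by keyword) can be sketched in Python. This assumes the file names follow the `Data for YYYY Month.xlsx` pattern from the post and that month names are in English; `latest_file` and `sheet_path` are hypothetical helpers, and the `FullPath|||SheetName` format only mirrors the expression above, so the exact sheet syntax should be checked against a path produced by an Input Data tool.

```python
import re
from datetime import datetime
from pathlib import PureWindowsPath

FILE_PATTERN = re.compile(r"Data for (\d{4} [A-Za-z]+)\.xlsx$")


def latest_file(paths):
    """Pick the file whose name carries the most recent 'YYYY Month' date."""
    dated = []
    for path in paths:
        match = FILE_PATTERN.search(PureWindowsPath(path).name)
        if match:
            dated.append((datetime.strptime(match.group(1), "%Y %B"), path))
    return max(dated)[1] if dated else None


def sheet_path(full_path, sheet_names, keyword="Reported Data"):
    """Build 'FullPath|||SheetName' for the first sheet containing keyword."""
    for name in sheet_names:
        if keyword.lower() in name.lower():
            return f"{full_path}|||{name}"
    return None
```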

Cheers,

Mark
