Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


If a tool fails, there should be a way to customise the error message. Currently, one way to do it is to log all messages in a file, read that file with another workflow, and then customise the messages (Alteryx workflow error handling - Alteryx Community). However, there should be a more convenient solution. We should be able to:

- Find/replace parts of a message.

- Specify which tools' messages to modify.

- Change the message type.

- Change the order of the messages in the results window, to prioritise the critical ones.

- Pick which messages cannot be hidden by "xxx more errors not displayed".

This would especially help for macros, as sometimes a specific tool fails within a macro and produces a message that is not user-friendly.
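The wish list above can be sketched as a small rule engine. This is only an illustration of the idea, not an Alteryx API: the `Message` and `Rule` records, the `customise` function and the `PRIORITY` ordering are all hypothetical names invented for this example.

```python
import re
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical message record (assumption, not an Alteryx structure).
@dataclass(frozen=True)
class Message:
    tool_id: int
    level: str   # "error", "warning" or "info"
    text: str

# Hypothetical rule: which tool it applies to, what to find, what to put
# in its place, and optionally a new message type.
@dataclass(frozen=True)
class Rule:
    tool_id: int
    pattern: str
    replacement: str
    new_level: Optional[str] = None

# Lower number = shown earlier in the results list.
PRIORITY = {"error": 0, "warning": 1, "info": 2}

def customise(messages, rules):
    """Apply find/replace rules per tool, then put critical messages first."""
    out = []
    for msg in messages:
        for rule in rules:
            if rule.tool_id == msg.tool_id and re.search(rule.pattern, msg.text):
                msg = replace(
                    msg,
                    text=re.sub(rule.pattern, rule.replacement, msg.text),
                    level=rule.new_level or msg.level,
                )
        out.append(msg)
    # sorted() is stable, so messages of equal level keep their original order.
    return sorted(out, key=lambda m: PRIORITY[m.level])

messages = [
    Message(3, "warning", "Conversion warning in field Amount"),
    Message(12, "error", "ParseError: unexpected token at 0"),
]
rules = [Rule(12, r"ParseError: .*", "The input file is not valid JSON.")]
result = customise(messages, rules)
```

Here the error from tool 12 is rewritten into a friendlier sentence and moved ahead of the warning from tool 3.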

Labels: API SDK, Category Developer

In the Test tool, the default is for the "don't report errors if there are other errors in the workflow" box to be checked. I think the default should be for it to be unchecked - it is very aggravating to think that you have found the problem with the workflow only for another to pop up.

Labels: API SDK, Category Developer

When I run this command in a Python tool:

```python
from ayx import Package

Package.installPackages(package='pandas', install_type='install --upgrade')
```

in Alteryx, it only updates to 0.25, but the latest version is 1.1.2.

When I try to upgrade from the Python side, I get the following:

ERROR: ayx 1.0.54 has requirement pandas<0.25.0,>=0.24.2, but you'll have pandas 1.1.2 which is incompatible.

Can you please make sure we can upgrade to the latest version of pandas without any compatibility issue?

This is important because of json_normalize. Really useful tool, available from pandas 1.0.3!

Labels: API SDK, Category Developer, Engine, Enhancement

Hello Dev Gurus -

The message tool is nice, but anything you want to learn about what is happening is problematic because the messages you are writing to try to understand your workflow are lost in a sea of other messages. This is especially problematic when you are trying to understand what is happening within a macro and you enable 'show all macro messages' in the runtime options.

That being said, what would really help is for messages created with the message tool to be tagged as user-created messages. Then, at message evaluation time, you get all errors / all conversion warnings / all warnings / all user-defined messages. In this way, when you write an iterative macro and are giving yourself the state of the data on a run-by-run basis, you can just go to a panel that shows you only your messages, and not the entire syslog, which is like drinking out of a fire hose.

Thank you for attending my TED talk regarding Message Tool Improvements.

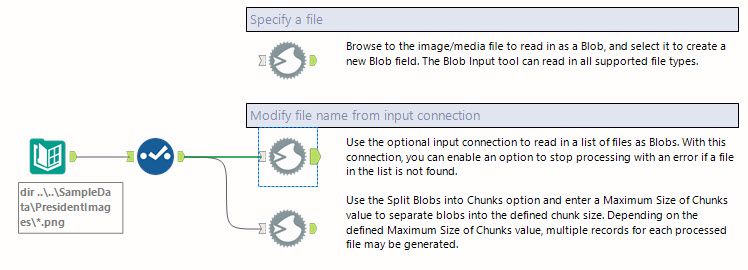

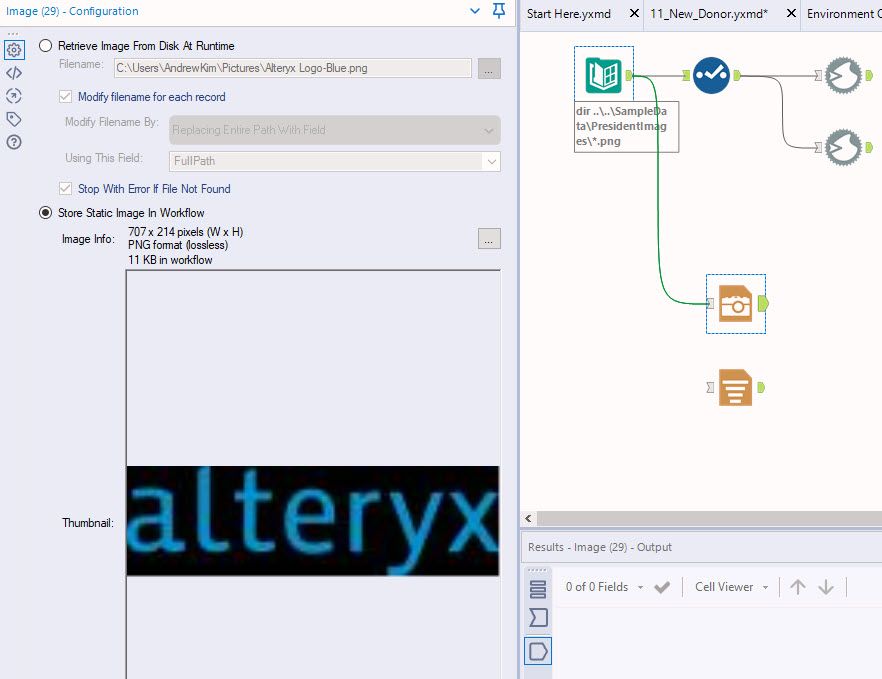

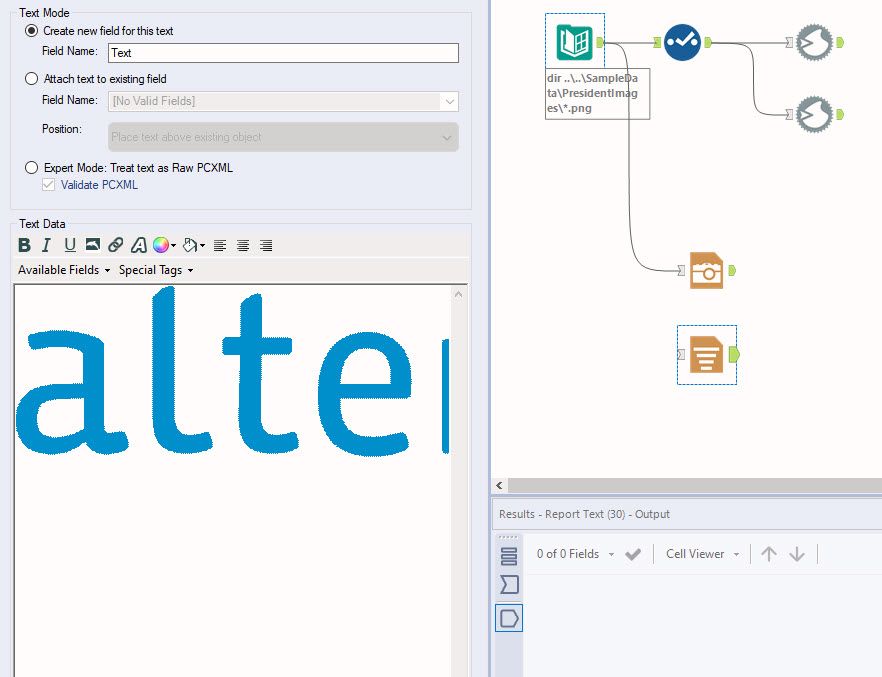

With the new Intelligence Suite there is much heavier use of blob files, and we would like to be able to input them as a regular input instead of having to use non-standard tools like Image, Report Text, or a combination of Directory/Blob or Input/Download to pull in images, etc. I would like to see the standard Input tool capable of bringing in blob files as well.

There is a great question in the Designer space right now asking about saving logs to a database: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Save-workflow-messages-log-in-database...

This got me to think a little more about localized logging options in Alteryx.

At a high level, there are ways to accomplish this in Designer at a User or System level by enabling a Logging directory and then parsing those logs with a separate Alteryx job. However, this would involve logging ALL Designer executions, which seems like it may be overkill for this need. A user can also manually save a log after each execution, although this requires manual intervention.

I think adding an option in the Runtime settings for Workflow Configuration to Enable Logging and (optionally) specify a Logging directory would be a great feature add for Designer. In my opinion this should not apply once a workflow runs on Server (Server logging should be handled in a fully standardized way), but should apply to designer "UI" execution. Having the ability to add a logging naming convention (perhaps including a workflow name and run date in the log name) would be icing on the cake.

This would allow for a piecemeal logging solution to log specific flows or processes that might be high visibility or high importance, while avoiding saving hundreds or thousands of logs daily for less important processes and for dev testing. It would also reduce or eliminate the manual process of saving these logs individually.
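The naming convention suggested above (workflow name plus run date in the log name) could look something like the following. This is a minimal sketch; `log_file_path`, the `.log` extension and the timestamp format are assumptions made for illustration and are not existing Designer settings.

```python
from datetime import datetime
from pathlib import PurePath

def log_file_path(log_dir, workflow_path, run_time):
    """Build '<log_dir>/<workflow name>_<YYYYMMDD_HHMMSS>.log'."""
    workflow_name = PurePath(workflow_path).stem        # file name without extension
    stamp = run_time.strftime("%Y%m%d_%H%M%S")          # sortable run timestamp
    return PurePath(log_dir) / f"{workflow_name}_{stamp}.log"

example = log_file_path("logs", "daily_sales.yxmd", datetime(2024, 1, 31, 6, 30, 0))
```

Because the timestamp sorts lexically, the logs of one workflow would list in run order inside the chosen directory.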

Labels: API SDK, Category Developer

I recently came across a limitation in Alteryx: we can't download non-CSV files using the Amazon S3 Download tool. There is currently support only for CSV and a couple of other formats, but we use JSON files (.jl) extensively, and not being able to download these files into the workflow is disappointing, as I now have to build custom code outside Alteryx to do that before I can start my workflow.

Can this please be given prompt attention and prioritized accordingly?

Labels: API SDK, Category Developer

The Dynamic Input will not accept inputs with different record layouts. The "brute force" solution is to use a standard Input tool for each file separately and then combine them with a Union Tool. The Union Tool accepts files with different record layouts and issues warnings. Please enhance the Dynamic Input tool (or, perhaps, add a new tool) so that it combines the Dynamic Input functionality with the more laid-back, inclusive approach of the Union tool. Thank you.
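The "inclusive union" behaviour requested here can be illustrated outside Alteryx. The sketch below is an assumption about what such a mode might do, not a description of how the Union Tool is implemented: `union_by_name` is a hypothetical helper that aligns fields by name, fills gaps with `None`, and reports a warning instead of failing.

```python
def union_by_name(*tables):
    """Stack tables (lists of dicts) with different layouts, aligning fields by name."""
    fields = []                      # union of field names, in first-seen order
    for table in tables:
        for row in table:
            for name in row:
                if name not in fields:
                    fields.append(name)

    rows, warnings = [], []
    for index, table in enumerate(tables):
        present = {name for row in table for name in row}
        missing = [name for name in fields if name not in present]
        if missing:
            # Warn rather than fail when a layout lacks some fields.
            warnings.append(f"input {index}: missing fields {missing} set to None")
        for row in table:
            rows.append({name: row.get(name) for name in fields})
    return rows, warnings

jan = [{"id": 1, "amount": 10.0}]
feb = [{"id": 2, "amount": 12.5, "region": "EU"}]
rows, warnings = union_by_name(jan, feb)
```

The January file lacks `region`, so its row gets `None` for that field and a single warning is recorded.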

Labels: API SDK, Category Developer

Hi all,

As per the post here: https://community.alteryx.com/t5/Data-Preparation-Blending/Dynamic-input-not-respecting-data-sort/td... - there are situations where you need to use something like a dynamic input to query data, but need it to be brought back in the order that you specified on the input stream.

The Dynamic Input tool sorts the input stream deliberately, to check for duplicate queries so that it doesn't waste time bringing back duplicate data.

It would be great if we can extend the dynamic input tool to allow users to specify that they wish the data unsorted, and that they are OK with the consequences of possibly running the same query twice. Even if this is a setting that can only be set through XML, it would still be helpful.
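As an aside, avoiding duplicate queries does not strictly require sorting. The sketch below is not how the Dynamic Input tool works internally; it only shows, with a hypothetical `run_in_input_order` helper and a stand-in `execute` callable, that each distinct query can be run once while results still come back in the order of the input stream.

```python
def run_in_input_order(queries, execute):
    """Run each distinct query once, but return results in the order given."""
    cache = {}
    results = []
    for query in queries:
        if query not in cache:          # first time we see this query: run it
            cache[query] = execute(query)
        results.append(cache[query])    # duplicates reuse the cached result
    return results

calls = []                              # records which queries were really executed

def fake_execute(query):
    """Stand-in for a database call, for demonstration only."""
    calls.append(query)
    return f"rows for {query}"

ordered = run_in_input_order(["Q3", "Q1", "Q3", "Q2"], fake_execute)
```

Four results come back in input order, while only three queries are actually executed.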

Many thanks

Sean

Labels: API SDK, Category Developer

The dynamic input tool allows some fairly complex transformations to the underlying query - but it's not always easy to debug this when it doesn't behave as expected.

Could we add the ability to inspect the resulting query (just like you can on the InDB queries using the dynamic output component)?

It is currently possible to see this in the results / messages pane, but I can't find a way to get this into a data-stream to persist it or manipulate it.

Labels: API SDK, Category Developer

When we try to call an external website from the Alteryx Designer Download tool, our company proxy server fails the authentication because Alteryx uses basic login/password authentication. This has happened to multiple applications that need to interact with external partners. We would like to request an enhancement to enable Alteryx to authenticate using Kerberos or NTLM.

One of the common things that we need to do is to take a delta copy of a file or a DB table into the staging area of the analytical database.

This always looks very similar, so it would be useful to make this a wizard-based process so that teams can build these very quickly rather than having to hand-build each one:

Process:

- Check which primary keys exist - fill the gaps where they don't

- Are there any rows that update over time (or is this insert-only) - if they update over time, which column is the "updated date" column so that we can spot updates - if there is no update date; then we need to do a column by column check of some kind (like a hash or a checksum)

- Do you want to sync deletes?

- Do you want to keep updates?

Outputs:

- Target table in staging area which is now updated compared to the source

- Logging done (similar to what Kimball recommends in the ETL Handbook) with the run date/time; summary stats; and any errors

- Errors table for any errors that arose with row numbers

- Tables in target created (with history table if requested)
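The core of the process above, for the case where there is no "updated date" column, can be sketched as a key comparison plus a per-row checksum. This is a simplified in-memory illustration under stated assumptions: `row_hash` and `delta` are hypothetical helpers, rows are plain dicts, and logging, the errors table and history tables are left out.

```python
import hashlib

def row_hash(row, key):
    """Checksum of all non-key columns, used when there is no 'updated date' column."""
    payload = "|".join(f"{name}={row[name]!r}" for name in sorted(row) if name != key)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def delta(source, target, key, sync_deletes=False):
    """Compare two lists of dict rows and classify the differences by primary key."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    inserts = [src[k] for k in src if k not in tgt]                 # fill the gaps
    updates = [src[k] for k in src
               if k in tgt and row_hash(src[k], key) != row_hash(tgt[k], key)]
    deletes = [tgt[k] for k in tgt if k not in src] if sync_deletes else []
    return {"insert": inserts, "update": updates, "delete": deletes}

source = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}, {"id": 3, "name": "Cy"}]
target = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Rob"}, {"id": 4, "name": "Dee"}]
plan = delta(source, target, "id", sync_deletes=True)
```

Row 3 is new, row 2 has changed, and row 4 is only removed because `sync_deletes` was requested, which mirrors the "Do you want to sync deletes?" question in the wizard.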

While In-DB tools are very helpful and cut down the time needed to write complex SQL, some steps are faster to write directly in SQL, such as window functions: OVER (PARTITION BY ...). In Alteryx, we need to create multiple joins and summaries to perform a window function. It would be immensely helpful if there was a SQL editor tool for In-DB workflows where we can edit the SQL code at any point in the workflow or, even better, an "edit" function on every In-DB tool where we can customize the generated SQL code and then send it to the next tool.

This will cut down the time immensely and streamline the workflow to make Alteryx a true contender for the ETL solution space.
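For readers less familiar with window functions, here is the kind of statement the post has in mind, run against an in-memory SQLite database purely for illustration. The `sales` table and its columns are made up, and the sketch assumes a SQLite build of 3.25 or later, which is when window functions were added.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, day INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EU", 1, 10.0), ("EU", 2, 5.0), ("US", 1, 7.0), ("US", 2, 3.0)],
)

# Running total per region: one window function instead of a join plus a summary.
running = conn.execute(
    """
    SELECT region, day,
           SUM(amount) OVER (PARTITION BY region ORDER BY day) AS running_total
    FROM sales
    ORDER BY region, day
    """
).fetchall()
```

A single `OVER (PARTITION BY ...)` clause replaces the self-join and summarize steps that the post describes.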

Labels: API SDK, Category Developer

Alteryx hosting CRAN

Installing R packages in Alteryx has been a tricky issue with many posts over the years and it fundamentally boils down to the way the install.packages() function is used; I've made a detailed post on the subject. There is a way that Alteryx can help remedy the compatibility challenge between their updates of Predictive Tools and the ever-changing landscape that is open-source development. That way is for Alteryx to host their own CRAN!

The current version of Alteryx runs R 4.1.3, which is considered an 'old release', and there are over 18,000 packages on CRAN for this version of R. By the time you read this post, there is likely a newer version of one of these packages that the package author has submitted to the R Foundation's CRAN. There is also a good chance that package isn't compatible with any Alteryx tool that uses R. What if you need that package for a macro you've downloaded? How do you get the old version, the one that is compatible? This is where Alteryx hosting CRAN comes into full fruition.

Alteryx can host their own CRAN, one that is not updated by one of many package authors throughout its history, and the packages will remain unchanged and compatible with the version of Predictive Tools that is released. All we need to do as Alteryx users is direct install.packages() to the Alteryx CRAN to get our new packages, like so,

```r
install.packages(pkg_name, repos = "https://cran.alteryx.com")
```

There is an R package to create a CRAN directory, so Alteryx can get R to do the legwork for them. Here is a way of using the miniCRAN package,

```r
library(miniCRAN)
library(tools)

path2CRAN <- "/local/path/to/CRAN"
ver <- paste(R.version$major, strsplit(R.version$minor, "\\.")[[1]][1], sep = ".") # ver = 4.1
repo <- "https://cran.r-project.org" # R Foundation's CRAN

m <- available.packages(repos = repo) # a matrix of all packages and their meta data from repo
pkgs4CRAN <- m[, "Package"] # character vector of all packages from repo

makeRepo(pkgs = pkgs4CRAN, path = path2CRAN, type = c("win.binary", "source"), repos = repo) # makes the local repo
write_PACKAGES(paste(path2CRAN, "bin/windows/contrib", ver, sep = "/"), type = "win.binary") # creates the PACKAGES file for package binaries
write_PACKAGES(paste(path2CRAN, "src/contrib", sep = "/"), type = "source") # creates the PACKAGES files for package sources
```

It will create a directory structure that replicates R Foundation's CRAN, but just for the version that Alteryx uses, 4.1/.

Alteryx can create the CRAN, host it somewhere meaningful (like https://cran.alteryx.com), update Predictive Tools to use the packages downloaded with the script above, and then release the new version of Predictive Tools and announce the CRAN. Users like you and me just need to tell the R Tool (for example) to install from the Alteryx repo rather than any others, which may have package dependency conflicts.

This is future-proof too. Let's say Alteryx decide to release a new version of Designer and Predictive Tools based on R 4.2.2. What do they do? Download R 4.2.2, run the above script, it'll create a new directory called 4.2/, update Predictive Tools to work with R 4.2.2 and the packages in their CRAN, host the 4.2/ directory to their CRAN and then release the new version of Designer and Predictive Tools.

Simple!

When dealing with very large tables (100M rows plus), it's not always practical to bring the entire table back to the designer to profile and understand the data.

It would be very useful if the power of the field summary tool (frequency analysis; evaluating % nulls; min & max values; length of strings; evaluating if the type is appropriate or could be compressed; whether there is whitespace before or after) could be brought to large DB tables without having to bring the whole table back to the client.

Given that each of these profiling tasks can be done as a discrete SQL query; I would think that this would be MASSIVELY faster than doing this client-side; but it would be a bit of a pain to write this tool.
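To make the "discrete SQL query" point concrete, here is a minimal sketch of one such profiling query, run against an in-memory SQLite database. The `profile_column` helper, the table and the column are hypothetical; a real in-database version would need dialect-specific SQL and proper identifier quoting.

```python
import sqlite3

def profile_column(conn, table, column):
    """Profile one column with a single aggregate query, so only one row comes back."""
    # Identifiers are interpolated directly: this sketch assumes trusted names.
    sql = f"""
        SELECT COUNT(*)                                            AS row_count,
               SUM(CASE WHEN "{column}" IS NULL THEN 1 ELSE 0 END) AS null_count,
               COUNT(DISTINCT "{column}")                          AS distinct_count,
               MIN("{column}")                                     AS min_value,
               MAX("{column}")                                     AS max_value,
               MAX(LENGTH("{column}"))                             AS max_length,
               SUM(CASE WHEN "{column}" <> TRIM("{column}")
                        THEN 1 ELSE 0 END)                         AS untrimmed_count
        FROM "{table}"
    """
    cursor = conn.execute(sql)
    names = [d[0] for d in cursor.description]
    return dict(zip(names, cursor.fetchone()))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?)",
                 [("Ann",), (" Bob ",), (None,), ("Ann",)])
profile = profile_column(conn, "customers", "name")
```

However large the table is, the client only receives one summary row per column, which is the source of the speed-up the post anticipates.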

If there is interest in this - I'm more than happy to work with the Alteryx team to look at putting together an initial mockup.

Cheers

Sean

When the Python Tool operates, it seems to always ingest all the data before processing any of it (i.e. no batch processing). Python can handle this type of functionality with generators. Can we update the tool so that it may do some preprocessing (like imports and data prep) and allow a defined generator function to be called repeatedly from a separate input handle, providing batch data frames on output for more parallel-like processing of data?

The Python Tool could be updated as such:

- Multi-Input - Same functionality as now, and also allow this data to be used for preprocessing and setting up the Python functions and a single batch function.

- Data Input - Ingests data in batches (as most other tools operate) where each batch passes in a dataframe (in this case, a subset of processed entries) into an existing Python function (with a name that is in globals()), and returns another dataframe with that desired output. This can give the option of adding/removing rows as necessary to a subset of the data.

- Data Output - Partial set of data after data processing to allow tools further in the chain to process in parallel.

- "On Complete" Multi-Outputs - Same functionality as now, to pass process-complete data to the next tool once all data ingested has been processed. Perhaps give the option to pass the complete set from Data Output.

A simple use-case, if a user wanted to use only the Python Tool:

Let's say a user wants to get all URLs from every post in a thread (containing millions of posts) that are in blacklisted domains.

- Data prep that sends the list of blacklisted domains into the Python Tool's Multi-Input handle, and that data is transformed and stored in a set within the Python tool once.

- A series of posts (strings) are sent in batches (let's say ~10000) to the Data Input of the Python Tool. The tool calls a defined Python function that extracts all the URLs, and filters those in the blacklist.

- That data is then transformed into a DataFrame which is then sent to the Data Output of the Python Tool, and only contains results corresponding to the small batch of posts that were ingested. Alteryx can also use this to track progress during execution.

- Once all posts have been processed, one of the Python Tool's Multi-Outputs can return a total count of URLs found that were NOT in the blacklist (sure this can be a part of the Data Output, but just for the sake of this example). Could also be used to trigger "on-complete events."

I know I used the term "generators" above, and the design could probably be simplified to instead call an Alteryx Python function that yields from a function to await input from the next batch to use actual Python generators. However, I feel my initial approach could be thought of as a simpler process since generators are more of an intermediate functionality.
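The use case above can be sketched in plain Python to show the shape of the proposed flow. Nothing here is an existing Python Tool feature: `batches`, `blacklisted_urls`, the URL pattern and the example domains are assumptions for illustration, with a batch size of 2 standing in for the ~10000 mentioned above.

```python
import re
from urllib.parse import urlparse

URL_PATTERN = re.compile(r"https?://\S+")

def batches(items, size):
    """Yield successive lists of at most `size` items (a plain Python generator)."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:                      # final, possibly shorter, batch
        yield batch

def blacklisted_urls(posts, blacklist):
    """Return the URLs in one batch of posts whose host is in the blacklist."""
    found = []
    for post in posts:
        for url in URL_PATTERN.findall(post):
            if urlparse(url).hostname in blacklist:
                found.append(url)
    return found

blacklist = {"bad.example"}        # prepared once, like the Multi-Input step
posts = [
    "see http://bad.example/a and https://good.example/b",
    "nothing here",
    "also https://bad.example/c",
]
# Each element corresponds to what one Data Output batch would carry.
per_batch = [blacklisted_urls(batch, blacklist) for batch in batches(posts, 2)]
```

Each batch result is available as soon as that batch is done, which is what would let downstream tools start work before the whole input has been read.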

I hope this makes sense and is elaborate enough to pursue. Thanks for the consideration!

Labels: API SDK, Category Developer

It seems that the Python tool currently raises a `FileNotFoundError` exception when there is no data incoming on an input connection. I have, for example, a Filter tool before the Python tool, and sometimes there is just no data coming to the Python tool, as intended.

Unfortunately, the Python tool gives me an error message in those cases, with this message before the error:

This is only the case when there is no data incoming. In all other cases, the tool works fine.

Since this is not really an error, a way to either catch this before using `Alteryx.read("#1")` or just having `Alteryx.read()` return an empty DataFrame (as I would expect it to do) would be appreciated.
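Until something like that exists, one possible workaround is to wrap the read in a small helper that catches the exception described above. This is a minimal sketch: `read_or_default` is a hypothetical helper, and `fake_read` is a stand-in so the example runs outside Designer.

```python
def read_or_default(read, connection, make_empty):
    """Call read(connection); if the input produced no data, return make_empty()."""
    try:
        return read(connection)
    except FileNotFoundError:
        # Per the post, this is what the Python tool raises for an empty input.
        return make_empty()

def fake_read(connection):
    """Stand-in reader for demonstration only: connection "#2" has no incoming data."""
    if connection == "#2":
        raise FileNotFoundError(connection)
    return [{"id": 1}]

with_data = read_or_default(fake_read, "#1", list)
without_data = read_or_default(fake_read, "#2", list)
```

Inside the Python tool, the call would presumably look like `read_or_default(Alteryx.read, "#1", pandas.DataFrame)`, so that downstream code always receives a DataFrame.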

Labels: API SDK, Category Developer, Tool Improvement

Hello,

As of today, only a few packages are bundled with the Alteryx Python tool. However:

1/ Python is becoming more and more popular. We will use this tool intensively in the coming years.

2/ Python is built on its existing packages. This is the strength of the language.

3/ In Alteryx, adding a package is not that easy: you need admin rights, and if you want your colleagues to open your workflow, they have to install it themselves. In corporate environments, this means losing time, several days on a project.

Personally, I would like Polars, DuckDB, etc., which are much faster than pandas.

Labels: API SDK, Category Developer, Enhancement

The Python tool has been a tremendous boon in being able to add capability that is not yet available in the Alteryx platform.

It would make the Python Tool much more usable and useful if you could define the inputs explicitly rather than relying on the good behaviour of both the user and the Python code that reads the inbound data (`Alteryx.read('#1')`).

This is not something that the Jupyter notebook code interface can handle directly (because the Jupyter notebook has no privileged knowledge of the workflow outside it), so this may be best handled by the container itself.

The key here is that if my python app requires 2 inputs - it should be possible to define these explicitly so that we can test; and also so that we can prevent errors and make this more bullet-proof.

The same would apply on the outbound nodes for the Python tool.
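One way to picture explicitly defined inputs is as a small contract that is checked before any user code runs. The sketch below is purely illustrative: `check_inputs`, the connection names and the column names are hypothetical, and in practice the container would supply the `available` mapping.

```python
def check_inputs(declared, available):
    """Compare declared inputs against what is actually connected.

    declared:  {connection name: list of required column names}
    available: {connection name: list of column names actually present}
    Returns a list of problems; an empty list means the contract is met.
    """
    problems = []
    for name, required in declared.items():
        if name not in available:
            problems.append(f"input {name} is not connected")
            continue
        missing = [col for col in required if col not in available[name]]
        if missing:
            problems.append(f"input {name} is missing columns {missing}")
    return problems

declared = {"#1": ["customer_id", "amount"], "#2": ["customer_id", "segment"]}
available = {"#1": ["customer_id", "amount", "date"]}
problems = check_inputs(declared, available)
```

With a declaration like this, a missing second input is reported up front rather than surfacing as a failure somewhere inside the notebook code.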

Labels: API SDK, Category Developer, Feature Request, General
