Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be nice if, when Alteryx crashes, there were an autosave capability so that when you re-open Alteryx it shows you the list of workflows that were autosaved and gives you the immediate option to select which ones you want to save, similar to what Excel does.

I know about the current autosave feature, but I would still like it to pop up (or have the option to make it pop up) so I can select which workflows to keep and which to discard.


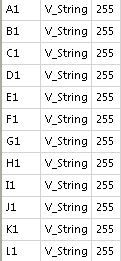

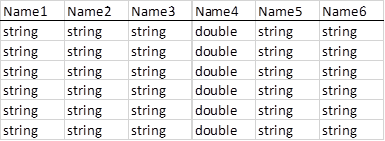

I set up a generic template because the Excel files do not have a consistent schema. This is the template,

which is interpreted as text:

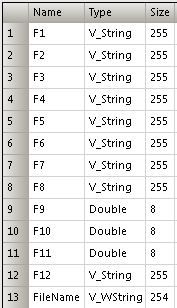

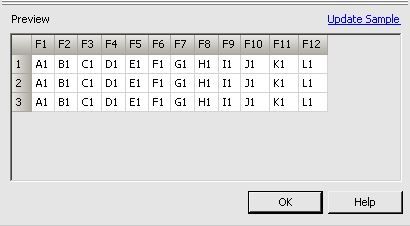

When I feed files into the Dynamic Input tool, the output configuration is:

This is the schema of the first file read by the DI tool. Why didn't it use the template?

The requirement that all schemas match is difficult to meet, especially with Excel.

I would like to suggest that the Input tool have a checkbox to override the data driver and interpret all columns as text.

https://www.connectionstrings.com/ace-oledb-12-0/treating-data-as-text/

I was trying to check the validity of multiple URLs using the Download tool connected to a parsing tool, so that I could check the download status and split the records into good and bad based on their HTTP status codes. To my surprise, the tool tolerates at most 2 errors and then stops checking the remaining records, which is not useful for me. I asked whether someone could help; as @JordanB said, this is the default behavior of the tool and cannot currently be changed. I hope you add an error-handling feature in your next release.
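As a minimal sketch of the requested behavior (outside Alteryx, in Python), the loop below checks every URL and records a failure per row instead of stopping after a few errors. `check_urls` and `fetch_status` are hypothetical names; the injectable `fetch` parameter is an assumption added so the logic can be exercised without network access.

```python
import urllib.error
import urllib.request
from typing import Callable, List, Tuple


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url (4xx/5xx responses included)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # HTTPError carries the server's status code; treat it as a result, not a crash.
        return err.code


def check_urls(
    urls: List[str], fetch: Callable[[str], int] = fetch_status
) -> Tuple[List[Tuple[str, int]], List[Tuple[str, str]]]:
    """Split URLs into good (2xx) and bad, continuing past any number of errors."""
    good: List[Tuple[str, int]] = []
    bad: List[Tuple[str, str]] = []
    for url in urls:
        try:
            status = fetch(url)
        except Exception as exc:  # timeouts, DNS failures, malformed URLs, ...
            bad.append((url, repr(exc)))
            continue  # record the failure and move on to the next URL
        if 200 <= status < 300:
            good.append((url, status))
        else:
            bad.append((url, f"HTTP {status}"))
    return good, bad
```

Because every exception is caught per URL, one unreachable host cannot halt the remaining checks. Note that `urlopen` follows redirects by default, so a 3xx response normally surfaces as the final status of the redirected request.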


I'm converting some old macros that were built in 8.6 for use in 10.6 and found something that could be improved in the Alteryx .xlsx drivers. Specifically, this concerns the field types passed from a Dynamic Input tool when the data fields are blank and an .xlsx file is used as the data source template.

When the new .xlsx file has fields populated, it works fine. If data is not populated in the new file, the field types are converted to Double[8]. This doesn't cause a problem for the Dynamic Input itself, but it can cause problems for downstream tools. In the second case below, the field names are retained, but the downstream Join tool errors when trying to join this now-Double field to a string (rather than returning no results from the join output, as desired). This also occurs when a single field is empty: only that field is converted to a Double[8] field. When the legacy .xlsx drivers are used, the field types are retained from the data source template.

File Source Template vs. file that is returned upon running

There are other solutions for this scenario, such as using a Select tool with a loaded .yxft file of the correct field types, or selecting the Legacy .xlsx drivers from the File Format dropdown when configuring the Dynamic Input. However, I thought this was something that could be improved in the Alteryx .xlsx drivers.


It would be great if it were possible in the future to customize Analytic App buttons.

For example, "Run" instead of "Finish", and so on.

Best regards

Mathias

It would be a handy feature to be able to choose the data type an Input tool reads the data as. For example, if a dataset has multiple fields with different data types, it would be useful to make the Input tool read and output them all as strings when needed. This could also make a handy standalone tool: a blanket data conversion that converts all fields to a specified type.

It would be great if we could set the default size of the window presented to the user upon running an Analytic App. Better yet, there could be an option to have it sized dynamically (auto-sized to the number of input fields required).

Please ignore this post! I just discovered the amazing dynamic input tool can accomplish exactly this 🙂

This is no longer a "good future idea" but simply a solution already provided by Alteryx! Thanks, Alteryx!

Hello everyone. I think it would be incredibly helpful if all input tools (database, CSV files, etc.) had an input stream for controlling data processing.

This input stream would cause the tool to fire if and only if at least one row of data was passed into it. This would work similarly to the Email tool, which simply does not fire if no records are passed into it.

With this change, Alteryx users could control the order of execution when reading from and writing to database tables and files without having to bring in macros or chained applications.

The problem with using batch macros and Block Until Done to solve this is that it obscures the workflow logic, making it much harder for another developer to look at the workflow and understand what is happening. Below is an extremely simple example of where this is necessary.

Example: any standard dimensional warehouse ETL.

Step #1: Write data to a table with an auto-incremented key.

Step #2: Read back from that table to get the key you just generated.

Step #3: Use that key as a foreign key in your primary fact table.

Without this enhancement you need at least two different workflows (chained apps or macros) to accomplish the task above; with this simple enhancement, the task could be accomplished with three tools.

This task is the basic building block of almost all ETLs, so in my opinion this enhancement would be very useful.
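The three steps can be sketched with Python's built-in `sqlite3` module, where the required ordering is simply sequential code: the fact insert cannot run until the dimension row and its generated key exist. The table and column names (`dim_customer`, `fact_sales`, `customer_key`) and the helpers are hypothetical, and SQLite stands in for whatever warehouse database is actually used.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a hypothetical dimension table and a fact table referencing it."""
    conn.executescript(
        """
        CREATE TABLE dim_customer (
            customer_key INTEGER PRIMARY KEY AUTOINCREMENT,
            name TEXT NOT NULL UNIQUE
        );
        CREATE TABLE fact_sales (
            sale_id INTEGER PRIMARY KEY AUTOINCREMENT,
            customer_key INTEGER NOT NULL REFERENCES dim_customer (customer_key),
            amount REAL NOT NULL
        );
        """
    )


def load_sale(conn: sqlite3.Connection, customer: str, amount: float) -> int:
    """Run the three ETL steps in order and return the generated surrogate key."""
    # Step 1: write the dimension row; the database assigns the auto-incremented key.
    conn.execute("INSERT INTO dim_customer (name) VALUES (?)", (customer,))
    # Step 2: read the key back from the table (name is UNIQUE, so this is one row).
    (key,) = conn.execute(
        "SELECT customer_key FROM dim_customer WHERE name = ?", (customer,)
    ).fetchone()
    # Step 3: use that key as the foreign key in the fact table.
    conn.execute(
        "INSERT INTO fact_sales (customer_key, amount) VALUES (?, ?)", (key, amount)
    )
    conn.commit()
    return key
```

SQLite also exposes the generated key directly through `cursor.lastrowid`; the explicit `SELECT` is kept here only to mirror Step #2 as written.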

As a security enhancement, the default password setting should be "Encrypt for User". Although this is critical for security, my users have overlooked it even with training. They truly aren't culpable if they forget. If it were the default, they would have to consciously change it to an insecure setting.

From a security perspective the current default setting is backwards.

Grant Hansen

With SSIS, you can use precedence constraints so that downstream flows do not run until one or more other flows complete. A simple connector should allow you to do this. Right now, I keep my workflows in containers and have to disable/enable different workflows, which can be time-consuming. Below is a better definition:

Precedence constraints link executables, containers, and tasks in packages in a control flow, and specify conditions that determine whether executables run. An executable can be a For Loop, Foreach Loop, or Sequence container; a task; or an event handler. Event handlers also use precedence constraints to link their executables into a control flow.
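To make the idea concrete, here is a minimal Python sketch of success-based precedence constraints, assuming each task is a callable returning `True` on success: a task runs only if all of its predecessors ran and succeeded. It uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+); `run_with_precedence` is a hypothetical name, and SSIS also offers failure and completion constraints, which this sketch omits.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_with_precedence(
    tasks: Dict[str, Callable[[], bool]], depends_on: Dict[str, Set[str]]
) -> List[str]:
    """Run tasks in dependency order; skip any task whose predecessors did not all succeed.

    Assumes every name used in depends_on is also a key of tasks.
    """
    # Every task becomes a node, even if it has no predecessors.
    graph = {name: depends_on.get(name, set()) for name in tasks}
    ran: List[str] = []
    succeeded: Set[str] = set()
    for name in TopologicalSorter(graph).static_order():
        if graph[name] <= succeeded:  # all predecessors succeeded
            ran.append(name)
            if tasks[name]():
                succeeded.add(name)
    return ran
```

A failed task blocks everything downstream of it, while independent branches still run, which is the behavior the containers-and-toggles workaround tries to emulate by hand.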


DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to update a DB table either with an update or by deleting rows. I can't delete all of the data. My workaround will be to create a table, insert the keys I want to delete into it, and then use an Input/Output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool would be best.

Thanks,

Mark
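The workaround described above (stage the keys to delete in a table, then run a `DELETE ... WHERE ID IN (SELECT ...)` statement) can be sketched with Python's built-in `sqlite3`. The table names come from the post; the `stage_keys`/`delete_flagged` helpers are hypothetical, and SQLite stands in for the actual target database.

```python
import sqlite3
from typing import Iterable, Tuple


def stage_keys(conn: sqlite3.Connection, rows: Iterable[Tuple[int, str]]) -> None:
    """Load (ID, FLAG) pairs into a temporary key table."""
    conn.execute(
        "CREATE TEMP TABLE IF NOT EXISTS My_Temp_Table (ID INTEGER PRIMARY KEY, FLAG TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO My_Temp_Table (ID, FLAG) VALUES (?, ?)", rows)


def delete_flagged(conn: sqlite3.Connection) -> int:
    """Delete only the Source_Data rows whose ID is flagged 'Y'; return the count."""
    cur = conn.execute(
        "DELETE FROM Source_Data WHERE ID IN "
        "(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
    )
    conn.commit()
    return cur.rowcount
```

Only the flagged rows are removed; everything else in `Source_Data` is left untouched, which is the partial delete the post needs.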

When moving external data into the database, the underlying SQL looks like:

CREATE GLOBAL TEMPORARY TABLE "AYX16020836880b41e08246b59ee8c"

...

My client would like to add a prefix to the table as:

CREATE GLOBAL TEMPORARY TABLE MMMM999_DM_USER."AYX16020836880b41e08246b59ee8c"

where MMMM999_DM_USER is supplied in the configuration.

A service account automatically sets the current schema for the session to something like MMMM999 (ALTER SESSION SET CURRENT_SCHEMA = MMMM999;).
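A minimal sketch of the requested option, assuming the tool builds the statement from a generated table name and an optional, user-configured schema: when a schema is supplied it is prepended unquoted, exactly as in the client's example. `temp_table_ddl_prefix` is a hypothetical helper; the rest of the statement (elided above) is left to the caller.

```python
from typing import Optional


def quote_ident(name: str) -> str:
    """Double-quote an identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def temp_table_ddl_prefix(table: str, schema: Optional[str] = None) -> str:
    """Build the start of the CREATE statement, optionally schema-qualified."""
    qualified = quote_ident(table)
    if schema:  # e.g. a value supplied in the tool configuration
        qualified = f"{schema}.{qualified}"
    return f"CREATE GLOBAL TEMPORARY TABLE {qualified}"
```

Qualifying the name explicitly places the temporary table in MMMM999_DM_USER even though the service account's session schema is MMMM999.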

If you have a complex SQL query with a number of dynamic substitutions (e.g. Update WHERE Clause, Replace a Specific String), it would be nice to be able to optionally output the SQL that is being executed. This would be particularly useful for debugging.

When a custom macro (bespoke for @Chrislove) is created, I would like the option to create an annotation that goes along with the tool. This is entirely cosmetic, but it might help users recognize the macro.
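As a rough illustration of the requested debug option, the sketch below applies simple string substitutions (similar in spirit to a "Replace a Specific String" setting) and, when `log_sql` is set, logs the final statement before it would be executed. `build_sql` and its parameters are hypothetical, and plain string replacement is used only to mirror the substitution idea; it is not a safe way to inject untrusted values.

```python
import logging
from typing import Mapping

logger = logging.getLogger("sql_debug")


def build_sql(template: str, replacements: Mapping[str, str], log_sql: bool = False) -> str:
    """Apply each placeholder -> value substitution in order; optionally log the result."""
    sql = template
    for placeholder, value in replacements.items():
        sql = sql.replace(placeholder, value)
    if log_sql:
        # The exact statement that would be sent to the database.
        logger.info("Executing SQL: %s", sql)
    return sql
```

Calling `logging.basicConfig(level=logging.INFO)` once before `build_sql(..., log_sql=True)` makes the logged statement visible on the console.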

Thanks,

Mark


I've seen this question before and have run into it myself. I'd like to see a new tool that would allow a workflow developer to choose a logic path based on criteria known only during the execution of a module.

IF count of LEFT input records < 10,000 THEN Path 1 (e.g., use a Calgary join)

ELSE Path 2 (e.g., use a standard join)

ENDIF

Thanks,

Mark
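The pseudocode above amounts to a runtime dispatch on the size of the left input. A minimal Python sketch, assuming both paths are functions taking the left and right inputs; `choose_join_path` and the two path functions are hypothetical stand-ins for the Calgary-based and standard join branches.

```python
from typing import Callable, Sequence, TypeVar

L = TypeVar("L")
R = TypeVar("R")
Out = TypeVar("Out")


def choose_join_path(
    left: Sequence[L],
    right: Sequence[R],
    small_path: Callable[[Sequence[L], Sequence[R]], Out],
    large_path: Callable[[Sequence[L], Sequence[R]], Out],
    threshold: int = 10_000,
) -> Out:
    """Pick a branch using the record count, which is only known at run time."""
    if len(left) < threshold:
        return small_path(left, right)  # Path 1, e.g. a Calgary join
    return large_path(left, right)  # Path 2, e.g. a standard join
```

Only the chosen branch is executed, which is the key difference from building both paths in a workflow and filtering one of them out afterwards.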


It would be particularly interesting to develop WMS support in Alteryx, to include other maps such as Bing, Google, or HERE instead of CloudMade for displaying geographic information.

Mathias

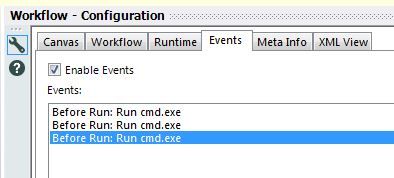

I've been using Events a fair bit recently to run batches through cmd.exe and to call Alteryx modules.

Unfortunately, the default is that the events are named by when the action occurs and what is entered in the Command line.

When you've got multiple events, this can become a problem -- see below:

It would be great if there was the ability to assign custom names to each event.

It looks like I should be able do this by directly editing the YXMD -- there's a <Description> tag for each event -- but it doesn't seem to work.


I would like to suggest considering the option of adding a new SDK based on the Lua language.

Why Lua?

o It is open source (MIT license)

o It is portable

o It is fast

o It is powerful and simple

o Lua has been used to extend programs written in C, C++, Java, C#, Smalltalk, Fortran, Ada, and Erlang


I am trying to use the Dynamic Replace tool to selectively update records in a set of variables from survey data. That is, I do not have all potential values in the “R” input of Dynamic Replace. Instead, I have a list of values that I would like changed from their current values, by respondent (RespondentID) and question number (Q#). Currently, when I run the workflow, any Q#/RespondentID combinations that are not in my “R” input are replaced with blanks. However, I would like an option that keeps the original data when there is nothing to replace it with. Without this option, there are few workarounds (I'm still working on some) to ensure the integrity of the data.

Matt
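The requested option is essentially a lookup with a fallback to the existing value. A minimal Python sketch, assuming records keyed by (RespondentID, Q#) and a hypothetical `Response` field holding the value to replace; `replace_keep_original` is not an Alteryx function.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (RespondentID, Q#)


def replace_keep_original(
    records: List[Dict[str, str]], replacements: Dict[Key, str]
) -> List[Dict[str, str]]:
    """Replace Response where a (RespondentID, Q#) match exists; otherwise keep it."""
    result = []
    for rec in records:
        updated = dict(rec)  # copy so the input records are not modified
        key = (rec["RespondentID"], rec["Q#"])
        # dict.get falls back to the original value instead of a blank.
        updated["Response"] = replacements.get(key, rec["Response"])
        result.append(updated)
    return result
```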


It would be nice if Alteryx had the ability to run a Teradata stored procedure and/or macro with the ability to accept input parameters. This ability appears to exist for MS SQL Server. It seems odd that I can issue a SQL statement to the database via a pre- or post-processing command on an Input or Output tool, but can't call a stored procedure or execute a macro. The only way we can seem to call a stored procedure is by creating a Teradata BTEQ script and using the Run Command tool to execute it. That works, but it is a bit messy and doesn't quite fit the no-coding theme of Alteryx.
