Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
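Until single-channel input is supported, one possible workaround for the RGB requirement is to replicate the grayscale channel three times before the images reach the tool. The following is only a minimal sketch in plain Python, assuming an image is held as nested lists of 0-255 intensities; the name `gray_to_rgb` is illustrative and not part of Alteryx.

```python
from typing import List

Gray = List[List[int]]        # rows of 0-255 intensities
RGB = List[List[List[int]]]   # rows of [r, g, b] pixels


def gray_to_rgb(image: Gray) -> RGB:
    """Copy each grayscale intensity into three identical channels."""
    return [[[value, value, value] for value in row] for row in image]


# Tiny 2x2 stand-in for an MNIST-style grayscale image (hypothetical data).
digit = [[0, 128], [255, 64]]
rgb = gray_to_rgb(digit)
```

The result carries no extra information, it only satisfies a three-channel input requirement.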

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Alteryx Server was recently updated to allow TLS-mediated connections to the MongoDB persistence layer. This allowed us to switch off of the embedded MongoDB to a highly-available MongoDB Atlas cluster. To our surprise after the switch, when we went to edit our workflows that make use of the persistence layer's data (Server Usage Report, etc.) to hit the new Atlas cluster, we found that the MongoDB Input tool does not support TLS connections. This absolutely needs to be changed. Based on organizational constraints, Atlas is our only option for a HA persistence layer. We absolutely have to have TLS support for the MongoDB Input tool. There is no other way for us to natively query our server persistence layer in Designer. Please bring the MongoDB Input tool into alignment with the MongoDB connections that are supported by Alteryx Server.

It would be very helpful to be able to output a workflow as a step-by-step document, so that someone who does not have access to Alteryx can understand the steps taken to create the flow and therefore the result or output.

Tableau v2018.3 introduced multiple table extracts. These are particularly useful for fact table to fact table joins and fact table to entitlement table joins for row-level security where the number of rows created by the join and/or size of join results would be prohibitively large. Also they are useful for fact table to spatial joins where we might have multiple spatial objects (for example custom province/district/health facility catchment) for each row of fact table data.

So in Alteryx I'd like to be able to specify 2+ tables & their join keys and then write out a .hyper multiple tables extract.

Jonathan

At the moment, if a part of your Python code takes more than 30s to run, Jupyter times out and Alteryx cancels the workflow. This makes the Python Tool unusable for anything intensive. The timeout should be removed by default or be configurable per workflow.

I've made this idea as none of the solutions in these threads feel satisfactory:

It'd be great to have all DCM connections available in the Data connections window.

And when Use Data Connection Manager (DCM) is ticked, the screen should default to the DCM connection list.

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is specifically available for Snowflake environments that are hosted on AWS, but does not provide functionality for environments hosted on Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

Hello!

A quite minor, pedantic issue from me today.

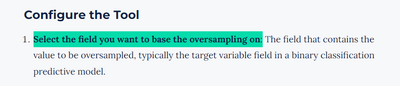

Currently, the Oversample Field Tool's naming and configuration suggest that the tool oversamples data:

However, I would argue the tool undersamples data instead.

Here are a few sources that explain this much better than I can:

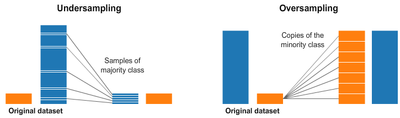

And an image taken from Medium:

Effectively, the goal of either approach is to end up with a similar (or the same) number of records in each class. Undersampling is the process of taking samples from the majority class, ending up with a smaller dataset than you started with. Oversampling is the process of duplicating records within the minority class, which creates a larger dataset.
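To make the distinction concrete, here is a minimal sketch of both techniques in plain Python. It assumes records are dictionaries with a class label under a given key; the function names and sample data are hypothetical and do not reflect how the Alteryx tool is implemented.

```python
import random
from collections import defaultdict


def split_by_class(records, label_key):
    """Group records by their class label."""
    groups = defaultdict(list)
    for record in records:
        groups[record[label_key]].append(record)
    return groups


def undersample(records, label_key, seed=0):
    """Randomly drop majority-class records down to the smallest class size."""
    rng = random.Random(seed)
    groups = split_by_class(records, label_key)
    target = min(len(group) for group in groups.values())
    result = []
    for group in groups.values():
        result.extend(rng.sample(group, target))
    return result


def oversample(records, label_key, seed=0):
    """Duplicate minority-class records up to the largest class size."""
    rng = random.Random(seed)
    groups = split_by_class(records, label_key)
    target = max(len(group) for group in groups.values())
    result = []
    for group in groups.values():
        result.extend(group)
        result.extend(rng.choices(group, k=target - len(group)))
    return result


# 2 minority records and 8 majority records (hypothetical data).
data = [{"id": i, "label": "yes"} for i in range(2)]
data += [{"id": i, "label": "no"} for i in range(2, 10)]

under = undersample(data, "label")  # 2 + 2 records: smaller than the input
over = oversample(data, "label")    # 8 + 8 records: larger than the input
```

Comparing the record count before and after is the quickest way to tell which of the two a tool performs.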

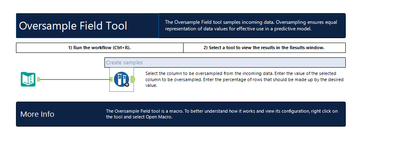

When using the Oversample tool within Alteryx, using the example workflow for reference:

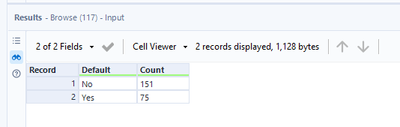

When summarizing the input:

And the output:

It's clear that the data has actually been under sampled, in that random samples have been taken from the majority class to match the minority, rather than creating duplicate minority records.

I would suggest a quick renaming of the tool to "Undersample Field Tool", along with a documentation update, to avoid confusing new users of the platform.

Kind Regards,

TheOC

As it stands now, only a file input tool can be used to pull data from Google BigQuery tables. The issue here is that the data is streamed and processed locally, meaning the power of BigQuery processing isn't actually being leveraged.

Adding BigQuery In-Database as a connection option would appeal to a wide audience. BigQuery's standard SQL is also compliant with the SQL 2011 standard, so this may make for an even easier integration.

I decided to get real fancy when building a standard macro the other day. I checked the box on my macro input that made the connection optional:

It worked really well. My macro then became more complex, so I changed it to a batch macro. To my great surprise/astonishment/shock, the optional incoming connection is no longer optional:

The standard macro is working as expected on the left, but the batch macro is producing an error because my optional connection is requiring that something be connected to it.

I've been told that the code to make it optional is not there for batch macros and that this would be a product feature/improvement.

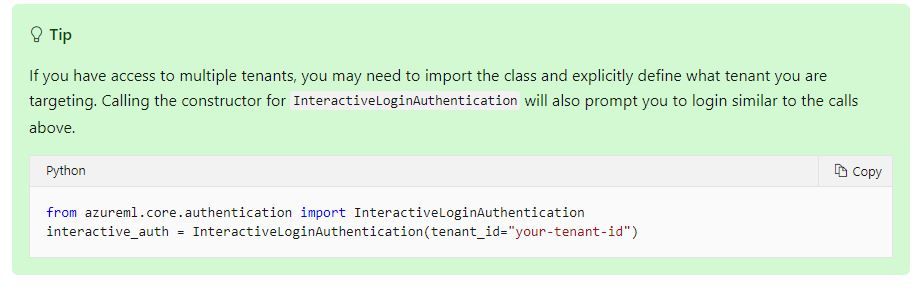

Introducing: The Azure Machine Learning Training and Scoring Tools

We tried to use this tool but can't log in to Azure ML correctly. We have several tenant IDs, and the login goes to another tenant (the one for Office 365) rather than the one for Azure ML.

====================== <Error Message> ==========================================================

Message: You are currently logged-in to 55f0a...-.............................................. tenant. You don't have access to d846a...-............................................. subscription, please check if it is in this tenant. All the subscriptions that you have access to in this tenant are =

[SubscriptionInfo(subscription_name='Microsoft Azure Enterprise', subscription_id='754c5...-...........................')].

Please refer to aka.ms/aml-notebook-auth for different authentication mechanisms in azureml-sdk.

InnerException None

ErrorResponse

=======================================================================================================

Microsoft states that tenant needs to be specified if we have access to multiple tenants.

Set up authentication for Azure Machine Learning resources and workflows

Could you add Tenant ID into Azure credentials so that we can use this tool?

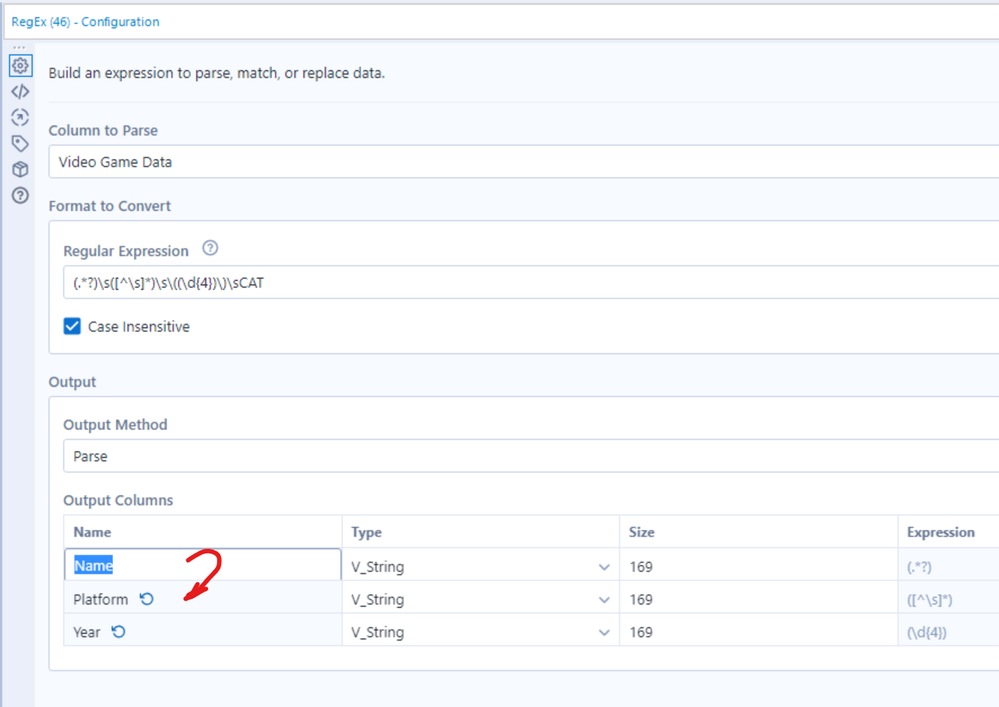

When entering a number of column names in the RegEx parse mode - please can you allow either Enter or down-arrow to move down to the next cell (standard windows convention)?

Currently Enter just exits the edit mode, and down-arrow does nothing.

cc: @Hollingsworth

When training people on the use of action tools, something that I always have to hit on is that when you are telling the tool which piece of the XML that you are adjusting, it's sort of difficult to tell what you have selected, and super easy to accidentally select something else.

Example:

When you initially select the action to take it's this nice Blue Color. However, it still doesn't feel exactly like you have actually selected anything or told the Action Tool what to do, since it's so easy to just select any other one of these actions.

A slightly different problem is that if you are selecting an action that has been previously configured, it is just this light grey color. So it can be easy to accidentally change your settings because you may not realize it's actually set up.

Here is a recent community post that sort of outlines a few of these problems.

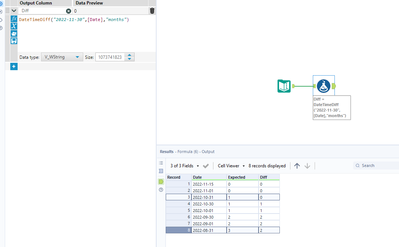

As highlighted in this post (Solved: DateDiff question - Alteryx Community), the DateTimeDiff function does not work as you would expect under certain conditions, and I suspect most people would not notice the inaccuracy.

Here is the formula for the Results Column below:

DateTimeDiff("2022-11-30",[Date],"months")

| Date | Expected | Result |
| --- | --- | --- |
| 2022-11-15 | 0 | 0 |
| 2022-10-31 | 1 | 0 |
| 2022-09-30 | 2 | 2 |
| 2022-08-31 | 3 | 2 |
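The table can be reproduced with a small Python sketch. The first function uses a plain day-of-month comparison, which happens to yield the Result column; that this is how DateTimeDiff works internally is an assumption, not something the post confirms. The second function treats an end date on the last day of its month as completing the month, which yields the Expected column. Both function names are hypothetical, and both assume the end date is not earlier than the start date.

```python
import calendar
from datetime import date


def months_day_rule(end: date, start: date) -> int:
    """Whole months, subtracting one when the end day is before the start day."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1
    return months


def months_month_end_aware(end: date, start: date) -> int:
    """Same rule, except an end date on the last day of its month counts as a full month."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    last_day = calendar.monthrange(end.year, end.month)[1]
    if end.day < start.day and end.day != last_day:
        months -= 1
    return months


end = date(2022, 11, 30)
starts = [date(2022, 11, 15), date(2022, 10, 31), date(2022, 9, 30), date(2022, 8, 31)]

result_column = [months_day_rule(end, s) for s in starts]           # [0, 0, 2, 2]
expected_column = [months_month_end_aware(end, s) for s in starts]  # [0, 1, 2, 3]
```

The two rules only disagree when the start date falls on a day of the month that the end month does not have, which is why the discrepancy is easy to miss.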

When creating a workflow I generally open a "TEMPLATE" first and then immediately save it to the "NEW WORKFLOW NAME". My template includes all my preferences that aren't set naturally within the user settings and won't get RESET by them either. It has a comment box and containers as well as logos and copyrights. It would be nice to have ready access to this feature. Maybe others have standards that they want applied to all users and their workflows too.

Thanks,

Mark

Please add a data validator workflow.

Suggested features will be the following:

1. Add a validation name and set the field(s) of your data you want to validate. (One workflow can have more than one validation name.)

2. For the selected validation name, add features that check/validate the following:

A. Data type

B. Whether the field contains nulls

C. Maximum and minimum string length

D. Allowed values only; any other value gives an error

E. A regex the value is expected to match, and a regex it is not allowed to match

3. It should have two (2) outputs: True (records that pass) and False (records that fail/error).
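The requested behavior could look roughly like the following Python sketch. It is only an illustration of the feature list above, not an existing Alteryx tool: the `Validation` class, its parameter names, and the sample rules are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Any, Optional, Sequence


@dataclass
class Validation:
    name: str                                      # validation name (item 1)
    field: str                                     # field to validate (item 1)
    data_type: Optional[type] = None               # A. expected data type
    allow_null: bool = True                        # B. whether nulls are accepted
    min_length: Optional[int] = None               # C. minimum string length
    max_length: Optional[int] = None               # C. maximum string length
    allowed_values: Optional[Sequence[Any]] = None # D. allowed values only
    must_match: Optional[str] = None               # E. regex the value must match
    must_not_match: Optional[str] = None           # E. regex the value must not match

    def check(self, record: dict) -> bool:
        value = record.get(self.field)
        if value is None:
            return self.allow_null
        if self.data_type is not None and not isinstance(value, self.data_type):
            return False
        text = str(value)
        if self.min_length is not None and len(text) < self.min_length:
            return False
        if self.max_length is not None and len(text) > self.max_length:
            return False
        if self.allowed_values is not None and value not in self.allowed_values:
            return False
        if self.must_match and not re.fullmatch(self.must_match, text):
            return False
        if self.must_not_match and re.search(self.must_not_match, text):
            return False
        return True


def validate(records, validations):
    """Split records into a True output (all checks pass) and a False output."""
    passed, failed = [], []
    for record in records:
        ok = all(v.check(record) for v in validations)
        (passed if ok else failed).append(record)
    return passed, failed


rules = [
    Validation("country_code", "country", data_type=str, allow_null=False,
               min_length=2, max_length=2, allowed_values=["FR", "US"]),
    Validation("order_format", "order_id", data_type=str,
               must_match=r"ORD-\d+", must_not_match=r"TEST"),
]
rows = [
    {"country": "FR", "order_id": "ORD-1"},
    {"country": "DE", "order_id": "ORD-2"},   # fails: value not allowed
    {"country": None, "order_id": "ORD-3"},   # fails: null not allowed
]
true_output, false_output = validate(rows, rules)
```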

Currently, you have two choices for Auto Configure while working on workflows:

- Auto Configure switched on: After every change, the configurations (= columns) of tools are re-evaluated for the entire workflow (at least, this is how it feels).

- Auto Configure switched off: Configuration of tools is only re-evaluated when pressing F5 (or when using the clipboard).

Pros and Cons of both:

- Auto Configure switched on:

  - Pro: Configuration in each tool is always accurate, so working on tools is straightforward.

  - Con: Editing workflows gets annoyingly slow for complex workflows, especially when data sources from network locations or macros are used. Sometimes I have to wait a minute between two mouse clicks.

- Auto Configure switched off:

  - Pro: Editing workflows is faster (at least in theory).

  - Con: I have to press F5 all the time (because I nearly always change the output configuration of tools when working on workflows). Even after pressing F5, Alteryx does not always succeed in calculating the correct configuration of a tool.

  - Con: Working with the clipboard and loading or saving workflows is still slow.

I would love to have something in between the two, a kind of intelligent Auto Configure with the following features:

- F5 still starts full configuration evaluation.

- Configuration of input tools is frozen (unless F5 pressed) so that no network access is started during editing the workflow.

- Check for update of macro files is switched off (unless F5 pressed).

- After changing a tool configuration, either a flag is set that this tool was changed but no re-assessment of the workflow configuration is run (approach 1), or only downstream configuration is updated (approach 2). Whether approach 1 or 2 is started could be decided on various criteria: Number of downstream tools (or other measure of complexity), how many "change flags" according to approach 1 are already set, etc.

- If approach 1 was chosen: If you edit a tool which is downstream to another one for which the change flag is set, re-evaluate only the portion of the workflow between the previously changed upstream tool and the tool supposed to be edited.

- Using Clipboard should not invoke full re-configuration.

- Before saving a file, full re-configuration needs to be run (as is already the case).

This idea will add quite some complexity into the logic of Auto Configure but should have quite some potential to speed up editing workflows because network access and number of re-evaluated tools in each editing step will be reduced.
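The two approaches described above can be sketched on a toy workflow graph. This is only an illustration of the idea in Python, not how Designer works internally; the `Workflow` class, its method names, and the tool names are hypothetical.

```python
from collections import deque


class Workflow:
    def __init__(self, edges):
        # Map each tool to the tools directly downstream of it.
        self.downstream = {}
        for src, dst in edges:
            self.downstream.setdefault(src, []).append(dst)
            self.downstream.setdefault(dst, [])
        self.changed = set()  # "change flags" from approach 1

    def descendants(self, tool):
        """All tools downstream of `tool`, in breadth-first order."""
        seen, queue = [], deque(self.downstream[tool])
        while queue:
            current = queue.popleft()
            if current not in seen:
                seen.append(current)
                queue.extend(self.downstream[current])
        return seen

    def mark_changed(self, tool):
        """Approach 1: only set a flag, re-evaluate nothing yet."""
        self.changed.add(tool)

    def update_downstream(self, tool):
        """Approach 2: return only the tools downstream of the edited tool."""
        return self.descendants(tool)

    def tools_to_refresh_before_edit(self, tool):
        """Approach 1 follow-up: tools between a flagged upstream tool and `tool`."""
        refresh = []
        for flagged in sorted(self.changed):
            below = self.descendants(flagged)
            if tool in below:
                for t in below:
                    on_path = t == tool or tool in self.descendants(t)
                    if on_path and t not in refresh:
                        refresh.append(t)
        return refresh

    def full_refresh(self):
        """F5: everything is re-evaluated, so all change flags are cleared."""
        self.changed.clear()
        return list(self.downstream)


wf = Workflow([("Input", "Select"), ("Select", "Formula"),
               ("Formula", "Output"), ("Select", "Summarize")])
wf.mark_changed("Select")
partial = wf.tools_to_refresh_before_edit("Output")  # skips the Summarize branch
downstream_only = wf.update_downstream("Formula")
```

A real implementation would also need to evaluate the selected tools in topological order; the breadth-first order used here is a simplification.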

As we do more work analyzing the canvasses that our folk are producing, it's becoming more and more necessary to have a well documented definition and schema for the XML that is used for Alteryx canvasses.

Please could you publish the full XML definition and schema for Alteryx canvasses? This would allow groups to perform deeper analytics on how people are using Alteryx, automate quality checks, look for learning gaps, scan for dependencies, etc.

Note: this relates to an idea from @dataprep here: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Documentation-tool-list-fileformat/idi-p/184...
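As an illustration of the kind of analysis a published schema would make reliable, here is a minimal Python sketch that counts tools by plugin in a workflow file. The element and attribute names (`Nodes`, `Node`, `ToolID`, `GuiSettings`, `Plugin`) and the sample plugin strings are assumptions based on what saved workflows commonly look like, not an official definition, which is exactly the gap this idea describes.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical, heavily simplified workflow XML used only for this example.
SAMPLE = """<AlteryxDocument yxmdVer="2022.1">
  <Nodes>
    <Node ToolID="1"><GuiSettings Plugin="AlteryxBasePluginsGui.DbFileInput.DbFileInput" /></Node>
    <Node ToolID="2"><GuiSettings Plugin="AlteryxBasePluginsGui.Filter.Filter" /></Node>
    <Node ToolID="3"><GuiSettings Plugin="AlteryxBasePluginsGui.DbFileInput.DbFileInput" /></Node>
  </Nodes>
</AlteryxDocument>"""


def count_tools(xml_text: str) -> Counter:
    """Count how often each plugin name appears among the Node elements."""
    root = ET.fromstring(xml_text)
    plugins = Counter()
    for node in root.iter("Node"):
        gui = node.find("GuiSettings")
        plugin = gui.get("Plugin") if gui is not None else None
        plugins[plugin or "(unknown)"] += 1
    return plugins


usage = count_tools(SAMPLE)
```

Without a documented schema, any script like this has to guess at the structure and may break between versions.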

It would be extremely useful to quickly find which of my many workflows feed other workflows or reports.

A quick and easy way to do this would be to export the dependencies of a list of workflows in a spreadsheet format. That way users could create their own mapping by linking outputs of one workflow, to inputs of another.

Looking at the simple example below, the Customers workflow would feed the Market workflow.

| Workflow | Dependency | Type |
| --- | --- | --- |
| Customers | SQL Table 1 | Input |
| Customers | SQL Table 2 | Input |
| Customers | Excel File 1 | Input |
| Customers | Excel File 2 | Input |
| Customers | Excel File 3 | Output |
| Market | Excel File 3 | Input |
| Market | SQL Table 3 | Output |

It would be CRAZY AWESOME if we could get a report like this for all scheduled workflows in the scheduler.
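Given an export shaped like the example table, the mapping between workflows could be derived by matching each Output to the Inputs that share its name. A minimal Python sketch, using the rows from the table; the function name `workflow_links` is hypothetical.

```python
# (workflow, dependency, type) rows copied from the example table.
rows = [
    ("Customers", "SQL Table 1", "Input"),
    ("Customers", "SQL Table 2", "Input"),
    ("Customers", "Excel File 1", "Input"),
    ("Customers", "Excel File 2", "Input"),
    ("Customers", "Excel File 3", "Output"),
    ("Market", "Excel File 3", "Input"),
    ("Market", "SQL Table 3", "Output"),
]


def workflow_links(rows):
    """Return (producer, dependency, consumer) for every output read by another workflow."""
    producers = {}
    for workflow, dependency, kind in rows:
        if kind == "Output":
            producers.setdefault(dependency, []).append(workflow)

    links = []
    for workflow, dependency, kind in rows:
        if kind == "Input":
            for producer in producers.get(dependency, []):
                if producer != workflow:
                    links.append((producer, dependency, workflow))
    return links


links = workflow_links(rows)
```

Here the only link found is Customers feeding Market through Excel File 3, matching the example.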

Hello all,

Some databases, including Hive, natively support scheduled queries (yes, the scheduling configuration lives inside the database, not in an ETL/data prep system). I think this would be an interesting feature for In-DB workflow output: you run the workflow once and then only have to run it again when it changes; the database does the scheduling.

https://cwiki.apache.org/confluence/display/Hive/Scheduled+Queries

Intro

Executing statements periodically can be useful in

- Pulling information from external systems

- Periodically updating column statistics

- Rebuilding materialized views

Best regards,

Simon
