Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images)
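Until the tool accepts single-channel input, one possible workaround is to expand each grayscale image to three identical channels before passing it in. Below is a minimal sketch in plain Python. It assumes each image is a nested list of 0-255 intensities; the `gray_to_rgb` helper is hypothetical and not part of the Image Recognition tool, and reading or writing the actual image files is left out.

```python
from typing import List, Tuple

GrayImage = List[List[int]]                   # rows of 0-255 intensities
RgbImage = List[List[Tuple[int, int, int]]]   # rows of (R, G, B) pixels


def gray_to_rgb(image: GrayImage) -> RgbImage:
    """Copy each grayscale intensity into the R, G and B channels."""
    return [[(pixel, pixel, pixel) for pixel in row] for row in image]


# A tiny 2x2 "image" standing in for a 28x28 MNIST digit.
sample = [[0, 128], [255, 64]]
rgb = gray_to_rgb(sample)
```

The result looks identical to the original image; only the channel count changes, which is what an RGB-only model input expects.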

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

The TO field (and I assume other fields) in the Email tool seems to have a 254-character limit. This should be increased substantially, as many distribution lists exceed this limit!

- Solved: Email tool recipients list truncating emails - Alteryx Community

- Solved: Email Widget: Cut off all the emails in the "To" r... - Alteryx Community

- Re: Email Address Truncated in the "To" Field - Alteryx Community

A distribution list works but is not ideal. Thumbs up if you like this idea!
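Until the limit is raised, another workaround is to split the recipients into batches that each fit within the limit and send one email per batch. Below is a minimal sketch in plain Python. It assumes the 254-character limit reported above and a semicolon separator; the `batch_recipients` helper is hypothetical and not an Alteryx function.

```python
from typing import List


def batch_recipients(addresses: List[str], limit: int = 254, sep: str = ";") -> List[str]:
    """Group addresses into separator-joined strings no longer than `limit`."""
    batches: List[str] = []
    current = ""
    for address in addresses:
        if len(address) > limit:
            raise ValueError(f"address longer than {limit} characters: {address}")
        candidate = address if not current else current + sep + address
        if len(candidate) <= limit:
            current = candidate       # still fits, keep filling this batch
        else:
            batches.append(current)   # batch is full, start a new one
            current = address
    if current:
        batches.append(current)
    return batches


# Example with 30 made-up addresses.
recipients = [f"user{i:02d}@example.com" for i in range(30)]
to_fields = batch_recipients(recipients)
```

Each string in `to_fields` can then feed one row of the Email tool, so no address is silently cut off.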

In the tools that embed the "Rename" option (Select, Append Fields, Join, Join Multiple), copying the new name copies the entire field configuration: tick/untick, original field name, type, size, new name and description.

In my opinion, it should copy only the new name. This would be useful, especially because when you change the name of a field, it isn't automatically changed in subsequent tools, so copying it to replace it in those tools is faster than retyping it every time.

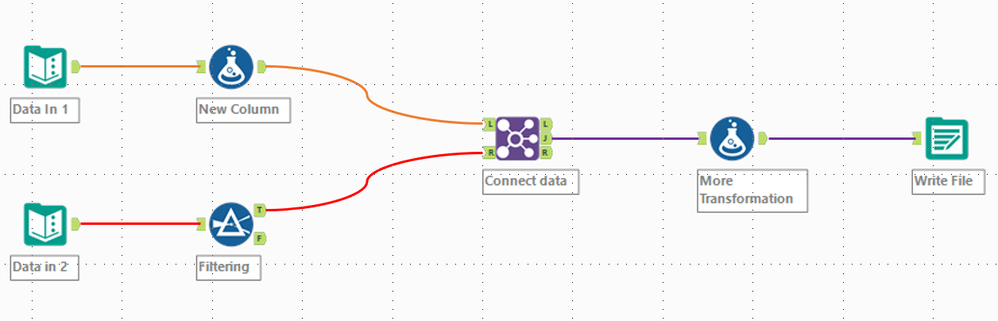

The idea is quite simple. I am sure a lot of Alteryx enthusiasts use containers frequently. These can also be color coded for better overview and readability of your workflows. However, while connections between tools can be named, they cannot be colored.

Therefore, I propose adding an option to color these connections. This would make workflows even more readable. Especially if a workflow contains multiple separate streams of data, this could help to navigate and keep track of how and where data is flowing.

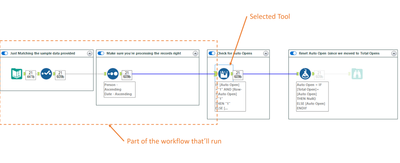

The idea is to have a Run option where the workflow runs everything up to the selected tool (like the Cache functionality does).

You select the tool, hit Run Up, and the workflow executes everything "before" the selected tool.

That'll make developing much easier, especially when dealing with big workflows and constantly changing data.

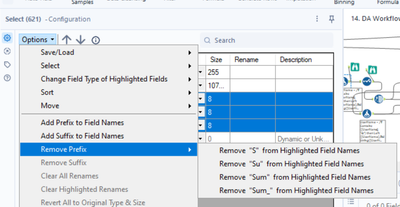
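To make "everything before the selected tool" concrete, it can be read as the set of tools reachable by following connections backwards from the selection. Below is a minimal sketch in plain Python. It assumes the workflow is given as a list of `(source_tool_id, target_tool_id)` connections; this representation and the `upstream_tools` helper are illustrative only and do not reflect how Designer stores or runs workflows.

```python
from collections import deque
from typing import Dict, Iterable, List, Set, Tuple


def upstream_tools(connections: Iterable[Tuple[int, int]], selected: int) -> Set[int]:
    """Return the selected tool plus every tool that feeds into it."""
    parents: Dict[int, List[int]] = {}
    for source, target in connections:
        parents.setdefault(target, []).append(source)

    to_run = {selected}
    queue = deque([selected])
    while queue:                      # breadth-first walk against the data flow
        tool = queue.popleft()
        for parent in parents.get(tool, []):
            if parent not in to_run:
                to_run.add(parent)
                queue.append(parent)
    return to_run


# Tools 1 -> 2 -> 3, with 2 and 5 also feeding tool 4.
workflow = [(1, 2), (2, 3), (2, 4), (5, 4)]
run_up_to_3 = upstream_tools(workflow, 3)
```

With tool 3 selected, only tools 1, 2 and 3 would run; the branch through tools 4 and 5 would be skipped.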

As part of the options of the Select tool, it would be really helpful if 'Change Field type of Highlighted Fields' included a Forced option, which would detect the current type of each highlighted field and change it to the forced version of that type. Currently we need to go through each column to achieve this, and with a lot of columns (that are not consistent across different sheets, so a .yxft is not suitable) this is a massive pain. It seems fairly straightforward to add this as an option called 'forced' or something similar alongside the other data types.

I am working with complex workflows which use multiple files as input, located on network drives. Input tools are Input Data, Directory, Wildcard Input, Wildcard XLSX Input (from CReW macros).

Regularly, Designer becomes very slow when I work on these workflows, and the tools mentioned above make slow progress when running, especially when I work from home. Switching off Auto Configure did not really help, because the column list sometimes does not converge even after pressing F5 multiple times, and when actively working on workflows, I have to press F5 all the time...

In order to speed up both working on workflows and running them, I would like to propose a function "Cache all File Inputs" which loads and caches all file inputs at once. To achieve this state today, I have to use Cache and Run Workflow once for every file input.

Currently the Select tool can reorder data by sorting, or by selecting a field and then clicking the up or down button. This is tedious when you have a data set with 400 fields. I suggest adding drag-and-drop functionality to the field list to make reordering easier.

The constant [Engine.GuiInteraction] can be used to determine whether a workflow was run in Designer or in the Gallery. Currently, there's no way to also find out whether a workflow was initiated by a schedule or run manually in the Gallery. The information is available in the Gallery but is not passed into the workflow.

Please introduce a new variable [Engine.ScheduledRun] (or similar) which determines whether the workflow was initiated by a schedule (value "true" if boolean or "schedule" if string type) or manually (value "false" or "manual").

I would like a way to disable all containers within a workflow with a single click. It could be a simple disable/enable all option, or a series of check boxes, one for each container, where you can choose to disable/enable all or a chosen selection.

In large workflows with many containers, if you want to run a single container while testing, it can take a while to scroll up and down the workflow disabling each container in turn.

Hi! I noticed that there is currently no way to use the debug function when working on an analytic app workflow that contains control containers. I'm running 2024.1, and I use the debug feature on workflows without control containers to troubleshoot when data changes in a dynamic workflow. Currently, when running in test mode, I have no way to review the data step by step in the flow when it is selected dynamically through the interface apps. I can only view the final output and make tweaks.

Hello all,

As of today, Alteryx offers the Intelligence Suite with amazing tools never seen in a data tool, such as OCR and image analysis: https://www.alteryx.com/fr/products/intelligence-suite

But... these wonderful tools are part of a paid add-on. And this is what is problematic:

- Alteryx is already an expensive tool. With huge value, but honestly expensive.

- The tools in Intelligence Suite are not common in data tools because you won't use them often. And paying for tools you use once or twice a month is not easy to justify.

So, I suggest incorporating Intelligence Suite into the core product. The benefit for Alteryx users is evident, so let's look at the benefits for Alteryx:

- more user satisfaction

- a simpler catalog

- adding a lot of value to Designer, with the ability to communicate widely on the topic.

- almost no cost: most customers won't buy the Intelligence Suite anyway.

Best regards,

Simon

In 24.1 the email tool was adjusted such that any error in the workflow prevents the email tool from sending any emails. Previously, if AMP was enabled, the email tool could still send emails even if the workflow contained an error, but this is no longer the case. There are many cases where this is not ideal, one example being:

In larger workflows, I have multiple data streams which, after a point, operate independently. Even if one stream errors, I would like emails to be sent from the other streams. I have tried nesting the email tool within multiple layers of macros, but if any of the parent or child workflows/macros contain an error, the email tool will not send any emails.

I would like a checkbox option in the email tool or workflow configuration that will still allow emails to be sent if the workflow errors. Then, with the use of control containers, I will have full control over email distribution with errors.

My organization uses the SharePoint Files Input and SharePoint Files Output tools (v2.1.0) and connects with the Client ID, Client Secret, and Tenant ID. After a workflow is saved and scheduled on the server, users receive the error "Failed to connect to SharePoint AADSTS700082: The refresh token has expired due to inactivity" every 90 days. My organization is not able to extend the 90-day limit or create non-expiring tokens.

It would be great if the SharePoint connectors could automatically refresh the token when it expires so users don't have to open the workflow and do it manually.

Hello,

As of today, only a few packages are bundled with the Alteryx Python tool. However:

1/ Python is becoming more and more popular. We will use this tool intensively in the coming years.

2/ Python is based on existing packages. This is the strength of the language.

3/ In Alteryx, adding a package is not that easy: you need admin rights, and if you want your colleagues to open your workflow, they have to install the package themselves too. In corporate environments, this means losing time, sometimes several days on a project.

Personally, I would like Polars and DuckDB, which are way faster than pandas.

Hello,

There are several dozen data sources... maybe it would be useful to be able to search them?

Best regards,

Simon

Hello all,

As of today, you can populate the Drop Down tool in the Interface category with a query launched from an in-memory connection. I would really appreciate the ability to use an in-DB connection instead.

Why?

Today, this means managing two connections instead of one, and finding ways to manage both of them on Server, etc. Simplicity is key.

Best regards,

Simon

Hi there

My idea is to have an option to copy and paste a tool's configuration to a different tool of the same type somewhere else on the canvas.

Example:

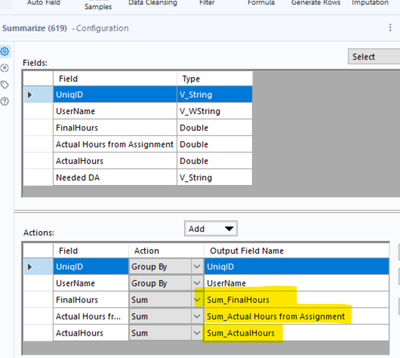

Say I have four summarise tools dealing with four different data streams. I envision a 'Copy Tool Configuration' option after right-clicking on a tool, and then a 'Paste Tool Configuration' option which can be applied across the multiple instances of the summarise tool by overwriting. This would preserve each tool's anchors and its incoming and outgoing connections.

Benefit:

This would increase the speed of developing workflows. Naturally, this would be significantly quicker than copying and pasting tools and then re-wiring anchors. Additionally, it would potentially reduce human error when iteratively developing workflows.

Regards - Rhys Cooper

Hello all,

We all know for sure that != is the Alteryx operator for inequality. However, I suggest the implementation of <> as another operator for inequality. Why?

<> is a very common operator in most languages/tools such as SQL, Qlik or Tableau. It's far more intuitive than != and it would help interoperability and copy/paste of expressions between tools, or between in-database mode and in-memory mode.
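For now, an expression pasted from one of those tools has to be adjusted by hand. The rewrite is mechanical, as the following minimal sketch in plain Python suggests. It assumes string literals are delimited by single or double quotes with no embedded escapes; the `sql_neq_to_alteryx` helper is hypothetical and not an Alteryx function.

```python
import re

# Match a quoted string literal (captured, so it can be kept as-is)
# or a bare <> operator outside of any literal.
_TOKEN = re.compile(r"""('[^']*'|"[^"]*")|<>""")


def sql_neq_to_alteryx(expression: str) -> str:
    """Replace <> with != everywhere except inside quoted string literals."""
    def swap(match: "re.Match[str]") -> str:
        literal = match.group(1)
        return literal if literal is not None else "!="

    return _TOKEN.sub(swap, expression)


converted = sql_neq_to_alteryx("[Status] <> 'a<>b' AND [Qty] <> 0")
```

Here `converted` keeps the text inside the quotes untouched and only swaps the two operators, which is the behaviour a native <> operator would make unnecessary.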

Best regards,

Simon

Hello,

As of now, you can't choose the DCM connections to synchronize. It's either all or none.

However, I have one Designer and two Servers (Sandbox/Production). Most connections must be common to both, but not all.

Best regards,

Simon
