Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


This idea arose from a conversation with a colleague, @Carlithian, where we were trying to work out a way to remove tools from the canvas which might be redundant. For example, have you added a Select tool to the canvas which hasn't been configured to change a data type or rename a field? So we were looking for ways of identifying, in the workflow XML, tools which didn't have a configuration applied to them.

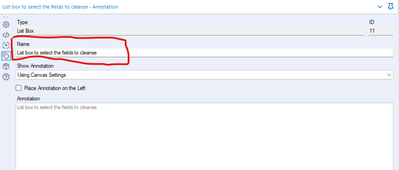

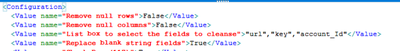

This highlighted to me an issue with something like the data cleanse tool, which is a standard macro.

The xml view of the data cleanse configuration looks like this:

    <Configuration>
      <Value name="Check Box (135)">False</Value>
      <Value name="Check Box (136)">False</Value>
      <Value name="List Box (11)">""</Value>
      <Value name="Check Box (84)">False</Value>
      <Value name="Check Box (117)">False</Value>
      <Value name="Check Box (15)">False</Value>
      <Value name="Check Box (109)">False</Value>
      <Value name="Check Box (122)">False</Value>
      <Value name="Check Box (53)">False</Value>
      <Value name="Check Box (58)">False</Value>
      <Value name="Check Box (70)">False</Value>
      <Value name="Check Box (77)">False</Value>
      <Value name="Drop Down (81)">upper</Value>
    </Configuration>

As it is a macro, the default labelling of the drop downs is specified in the XML. If you wanted to do something useful with it, wouldn't it be much nicer if the interface tools were named properly, such as:

So when you look at the XML of the workflow, it's clearer to the user what is actually specified.
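To illustrate the problem, here is a minimal Python sketch (not part of Alteryx) that scans a `<Configuration>` block like the Data Cleanse one and lists the values that still carry a default interface-tool label. The function name `find_default_labels`, the label pattern, and the properly named value `Remove Null Rows` are assumptions made up for this example.

```python
import re
import xml.etree.ElementTree as ET

# Assumed pattern for default interface-tool labels, e.g. "Check Box (135)".
DEFAULT_LABEL = re.compile(r"^(Check Box|List Box|Drop Down) \(\d+\)$")


def find_default_labels(configuration_xml):
    """Return the names of <Value> elements that still use a default label."""
    root = ET.fromstring(configuration_xml)
    return [
        value.get("name")
        for value in root.iter("Value")
        if DEFAULT_LABEL.match(value.get("name", ""))
    ]


# "Remove Null Rows" is a hypothetical, properly named interface tool.
sample = """
<Configuration>
  <Value name="Check Box (135)">False</Value>
  <Value name="Drop Down (81)">upper</Value>
  <Value name="Remove Null Rows">False</Value>
</Configuration>
"""

print(find_default_labels(sample))
```

Anything the function reports is a setting whose meaning cannot be read from the XML alone.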

The Download tool allows for encrypted SFTP connections, but I recently discovered (the hard way) that the Alteryx capabilities are incomplete and the algorithms not fully up to date. Just adding an additional updated algorithm or two to the 4 available for message authentication would bring it up to date.

As back story, our firm has onboarded a new SFTP server, and all of a sudden my Alteryx SFTP workflows didn't work when I pointed them at the new server. After going back and forth extensively with the helpful folks at Alteryx, we discovered there's a gap in Alteryx's current capabilities.

Basically, the Alteryx download tool can use the old encryption algorithm and half of the new version, and half of the new version is like having half a bridge.

Up until 2017, SHA-1 was the most common hash used for cryptographic signing. Since then it's been slowly getting supplanted by SHA-2.

Alteryx can use SHA-2 for key exchanges, but not for message authentication (the HMAC algorithm). The internet seems to swear up and down that the old SHA-1 algorithm works just fine for message authentication, but I don't have the luxury of caring about that. All I know is that as of March 2019 the SFTP server I have to connect with has deprecated Alteryx's SHA-1 algorithm as being too out of date and only allows the new SHA-2 message authentication.

Alteryx CAN use the up to date SHA-2 for key exchange (GOOD, halfway there!) but can only use (old) ways of doing message authentication that do NOT include SHA-2 (NOT GOOD!). Please add updated SHA-2 algorithms (hmac-sha2-512, hmac-sha2-256) to the HMAC mix too!
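For readers unfamiliar with the term, the sketch below shows what "message authentication with SHA-1 versus SHA-2" means at the hash level, using only Python's standard library. It is an illustration under assumptions: the key and message are placeholders, and it does not reproduce how the Download tool or an SFTP server negotiates algorithms.

```python
import hmac


def message_tag(key: bytes, message: bytes, digest: str) -> bytes:
    """Compute an HMAC tag for `message` using the named hash function."""
    return hmac.new(key, message, digestmod=digest).digest()


key = b"example-session-key"      # placeholder, not a real SSH session key
message = b"example SFTP packet"  # placeholder payload

sha1_tag = message_tag(key, message, "sha1")      # hash behind hmac-sha1
sha256_tag = message_tag(key, message, "sha256")  # hash behind hmac-sha2-256
sha512_tag = message_tag(key, message, "sha512")  # hash behind hmac-sha2-512

# Tag lengths in bytes differ with the underlying hash function.
print(len(sha1_tag), len(sha256_tag), len(sha512_tag))
```

A server that only accepts the SHA-2 variants will reject a client that can only produce the SHA-1 tag, which is the situation described above.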

Many thanks,

Josiah

Could you expose a link to the Keyboard Shortcuts (which is here: https://help.alteryx.com/2019.4/HotKeys_Shortcuts.htm?Highlight=keyboard%20shortcuts) on the primary help menu (screenshot below)?

This will allow people to get quicker in Alteryx by exposing these shortcuts to more users.

(1) I would like to have more text formatting options available in the Comment Tool, such as:

- set different formats for selected words (color, bold, underline, size...)

- indenting

- bullet list or numbered list

(2) Option to remove or recolor the blue outline of the comment box. (Especially when I have a comment in a color-filled comment box, I would prefer a comment box without a dark outline.)

(3) UX - Add an arrow cursor to indicate resizing functionality

I know it sounds trivial, but I hate having to do the extra click to get the browse tool to pop out. Just upgraded from 2020.2 to 2021.3. Before, you could pop out a browse window in 2 clicks:

Now you need 3 clicks:

Like I said, I know it sounds trivial, but when you do this dozens of times a day, it adds up to a big annoyance.

Anyway, was just wondering if enough others felt the same and if so, hopefully the browser behavior could be pushed back to a 2 click pop out.

Although I must say that I just LOVE the comma inserter.

Currently the InDB tools require you to select a DSN that is defined on your computer.

This makes any workflow which uses a DSN incredibly painful to deploy to the server, since the DSN needs to be created on every worker node and for a large server environment this can mean creating DSN entries on 6+ worker nodes in prod plus prod server plus dev/UAT environment plus dev/UAT worker nodes.

Could we please change the InDB tools to default to DSN-less connections, where the connection persists the connection details in-line so that it can deploy to the server without a DSN setup (since all connection details are contained within the connection string)?
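As a rough sketch of the difference, the Python below builds both kinds of ODBC connection string. The helper names, server, and database are made up for illustration, and the exact keywords accepted (`Server`, `Database`, and so on) vary by ODBC driver, so treat the output as an example of the shape rather than something to paste into a workflow.

```python
def dsn_connection_string(dsn_name):
    """Connection that depends on a DSN existing on every machine."""
    return f"DSN={dsn_name};"


def dsnless_connection_string(driver, server, database, **extra):
    """Connection that carries its own details in-line (no DSN lookup)."""
    parts = {"Driver": "{" + driver + "}", "Server": server, "Database": database}
    parts.update(extra)
    return "".join(f"{key}={value};" for key, value in parts.items())


# Hypothetical values for illustration only.
with_dsn = dsn_connection_string("SalesWarehouse")
without_dsn = dsnless_connection_string(
    "ODBC Driver 17 for SQL Server", "db.example.com", "sales"
)

print(with_dsn)
print(without_dsn)
```

The first string only works where a DSN named `SalesWarehouse` has been created; the second needs only the driver to be installed on each worker node.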

Thank you

Sean

CC: @rijuthav @jithinmony @HengHe @RajK @ydmuley @revathi @Deeksha @MPistone @Ari_Fuller @Arianna_Fuller @JoshKushner @samnelson @avinashbonu @Sunder_Sriram @Rahul_Thakur @Rahul_Singh

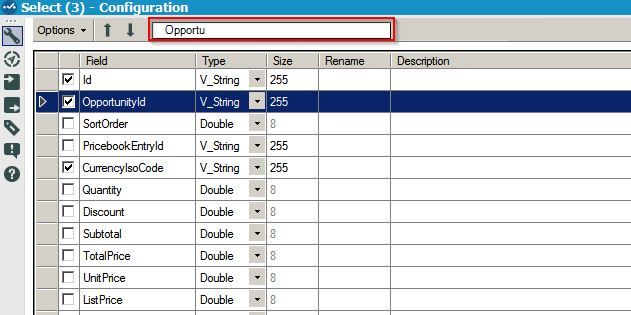

It would be very useful to be able to search the field by typing the name instead of scrolling up and down looking for it among a few hundred fields.

In a controlled environment, there is a need to control promotion of assets to prod with basic controls to ensure that someone has tested, signed off, that it meets certain quality standards (like "no warnings", "no Run Commands", "all reports must have company logo on top left" etc).

However, at the moment there doesn't appear to be a promotion process in Alteryx to control this flow, so assets are copied across by an admin. This is very manual and error prone (many times we've had the wrong assets copied), and it also means that this process is controlled in a workflow outside of Alteryx (e.g. a JIRA queue or similar).

Could we request that Alteryx look into a production promotion process, which allows the admin team to perform any required checks (including automated checks), and then pushes this into prod stamped with the designer's Kerberos rather than the sys admin?
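To give a feel for what an automated check such as "no Run Commands" could look like, here is a minimal Python sketch that scans workflow XML for forbidden tools. It rests on assumptions: that each tool appears as a `<Node>` element with a `ToolID` attribute and a `<GuiSettings Plugin="...">` child, and that the plugin name of the tool to block contains `RunCommand`. The plugin names in the sample are hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumption: a forbidden tool can be recognised by a fragment of its plugin name.
FORBIDDEN_PLUGIN_FRAGMENTS = ("RunCommand",)


def forbidden_tools(workflow_xml, forbidden=FORBIDDEN_PLUGIN_FRAGMENTS):
    """Return (ToolID, plugin) pairs for tools whose plugin name is forbidden."""
    root = ET.fromstring(workflow_xml)
    hits = []
    for node in root.iter("Node"):
        gui = node.find("GuiSettings")
        plugin = gui.get("Plugin", "") if gui is not None else ""
        if any(fragment in plugin for fragment in forbidden):
            hits.append((node.get("ToolID"), plugin))
    return hits


# Hypothetical workflow fragment for illustration.
sample_workflow = """
<AlteryxDocument>
  <Nodes>
    <Node ToolID="1"><GuiSettings Plugin="Example.Select" /></Node>
    <Node ToolID="2"><GuiSettings Plugin="Example.RunCommand" /></Node>
  </Nodes>
</AlteryxDocument>
"""

print(forbidden_tools(sample_workflow))
```

A promotion step could refuse to push a workflow to prod while this list is non-empty.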

Joint idea with @avinashbonu @DamianA @BenBu

Hi all,

The Publish to Tableau Server tool is great, but it requires a username and password. If you are using AD, there is a chance that your users don't have a password. In that case, you probably have a technical user that you share across the team. This is not an ideal situation and you lose the governance around the data.

Fortunately, there is an easy workaround. You can leverage personal token authentication : https://help.tableau.com/v2019.4/server/en-us/security_personal_access_tokens.htm

The advantage of this method is that it logs in with your user and your data source is uploaded under your name. This still uses the Tableau REST API, so the changes needed in the current macro are MINOR.

Changes needed in the current macro:

1 - Add a parameter for the authentication method, with choices: Username/Password; Personal Token

2 - If Personal Token is selected, add two parameters: Token_Name and Token_Value

3 - In the TableauServer.Login supporting macro, improve the formula(13) to change the payload based on user selection. If Username/Password, keep it as is. Else use the syntax here : https://help.tableau.com/current/api/rest_api/en-us/REST/rest_api_concepts_auth.htm#make-a-sign-in-r...
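The payload switch in step 3 can be sketched in Python as follows. The field names `name`, `password`, `personalAccessTokenName`, and `personalAccessTokenSecret` are the ones the Tableau REST API sign-in request uses; the function name `build_signin_payload` and its parameters are assumptions for this example, and the real macro would build the same structure inside its formula rather than in Python.

```python
import json


def build_signin_payload(method, site_content_url="", username=None,
                         password=None, token_name=None, token_value=None):
    """Build the sign-in request body for the chosen authentication method."""
    if method == "Username/Password":
        credentials = {"name": username, "password": password}
    elif method == "Personal Token":
        credentials = {
            "personalAccessTokenName": token_name,
            "personalAccessTokenSecret": token_value,
        }
    else:
        raise ValueError(f"Unknown authentication method: {method}")
    credentials["site"] = {"contentUrl": site_content_url}
    return {"credentials": credentials}


# Placeholder token values for illustration only.
payload = build_signin_payload(
    "Personal Token", site_content_url="mysite",
    token_name="my-token", token_value="secret-value",
)
print(json.dumps(payload, indent=2))
```

Only the `credentials` object changes between the two methods, which is why the change to the macro is small.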

This is quite a straightforward change but could help a lot of companies using Alteryx.

Can you please implement these changes to strengthen this tool?

Thanks a lot,

When using an OUTPUT tool, you can currently only output to one (1) format. My idea is to allow for a checkbox to also create a YXDB file when you output to another format. In many instances a copy of the CSV data in YXDB file format is needed, and creating another output requires another tool with nearly identical information. An example is keeping a backup copy of what I sent to the customer.

Cheers,

Mark

Taking inspiration from how you work with Jupyter notebooks and use the notebook to show your workings, wouldn't it be great if you could document your workflows directly on the canvas in more of a notebook style?

I think this essentially can be summarised down into two features:

1) Markdown functionality in the comment tool

2) Ability to import results from IRG and / or browse tool directly into the canvas.

I have mocked up a version of what this could look like in the screenshot below.

Overall I think it would improve the experience of documenting workflows as you can show your workings in-line while building the workflow. Plus it solves the debate around team vertical vs team horizontal as you build using both!

The more Python and R development I do, the more I want to use the shortcut [CTRL] + [ENTER] to run my workflow.

Is it possible to add this as a second way to run the workflow?

I'm thinking it's going to need a new shortcut anyway with cloud, as [CTRL] + [R] would refresh the page! :D

Asking for a friend :D

Hello all,

A few weeks ago Alteryx announced InDB support for GBQ. This is an awesome idea; however, to make it run you have to use OAuth2 authentication, which means the GBQ API must be enabled. As of now, it is possible to use Simba ODBC to connect to GBQ. My idea is to extend InDB support to the connection/authentication method we have today with Simba ODBC for Google BigQuery. In big organizations, given the number of GBQ projects, it is not easy for IT to enable the API for each application. Enhancing the functionality with ODBC would be an awesome solution.

Thank you for voting

Albert

There are many circumstances when you have to build an iterative macro where it's not just the iterating data set that needs to change on every iteration, but also a second data set.

Think about this like a loop where two different variables are updated on every iteration, not just the control variable of the For loop.
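In ordinary code the pattern looks like the Python sketch below, where both `frontier` and `visited` are rewritten on every pass of the loop. The graph-walking example and the function name `reachable` are illustrative assumptions, not taken from the screenshot in this post.

```python
def reachable(graph, start):
    """Return every node reachable from `start`, updating two sets per pass."""
    frontier = {start}   # first data set: what to process on this iteration
    visited = {start}    # second data set: what has been seen so far
    while frontier:
        next_frontier = set()
        for node in frontier:
            for neighbour in graph.get(node, ()):
                if neighbour not in visited:
                    next_frontier.add(neighbour)
        # Both data sets change before the next iteration starts.
        visited |= next_frontier
        frontier = next_frontier
    return visited


example_graph = {"a": ["b"], "b": ["c"], "c": [], "d": ["a"]}
print(sorted(reachable(example_graph, "a")))
```

An iterative macro with a single macro input/output pair can carry `frontier` from one iteration to the next, but has no direct way to carry `visited` as well.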

The way that users work around this is to use a temporary yxdb file where instead of a macro input you input from the yxdb, and then write back to the same yxdb. This allows you to pretend that you can adjust 2 different data sets on every cycle of the loop. There are 4 downsides to this:

a) User complexity - this breaks the conceptual simplicity of macro inputs since now the users have to understand that in situation X I use macro inputs; and in situation Y I have to use some other type of tool.

b) Speed penalty - writing to disk is between 1000x slower and 1 000 000x slower than working with data in memory (especially if it's in cache) - so by forcing this to go through a yxdb file, you do incur a speed penalty which is just not needed.

c) Blocking penalty - because you can't write to a file that you're still reading from, you need to pepper this with Block Until Done tools - and you need to initialize the macro using a first write to the yxdb file outside the macro - which further hurts speed. Given the nuanced behaviour of Block Until Done, this also introduces user complexity issues.

d) Self-contained - because you have to initialize these files outside the macro - the macro is no longer self-contained and portable (which breaks the principle of Information Hiding, which is a key pillar of good modular decoupled software design).

The other way that users work around this is to serialize their entire second recordset into a field which then gets tacked onto the iterating data set using an iterative macro. This is HIGHLY wasteful because then you have to build a serialize & deserialize process for this second recordset. It fixes the speed and blocking penalties from above, but introduces a computational overhead which generates no value, and makes this even more complex for users - and a further blocker to using macros.

Recommendation:

We could make this simpler by allowing users to create multiple pairs of macro input / macro outputs so that 2 or 3 or n different data sets can be updated with every iteration.

Below is a screenshot demonstrating this, from an Advent of code challenge - the details of the problem are not important - the issue at hand is that there are 2 record sets which both need to be updated on every iteration.

cc: @NicoleJohnson @Samanthaj_hughes @SteveA

Currently, if one wants to compare different Alteryx files or different versions of the same file, one needs to compare the XML files. If you are not very familiar with navigating XML, this poses a risk, as one may not be able to identify all changes.

It would be a great addition to Alteryx to integrate it with Git, Subversion, CVS, Mercurial, and GitHub, as this tool is becoming the go-to tool for data processing for data analysts and even programmers.

This additional functionality would let you compare previous versions (diff), merge Alteryx workflows when two people are working on the same workflow, and easily see what changes have been committed by other developers and when. It would make Alteryx a much more powerful tool and would open doors to other types of users, as essentially you can run anything through Alteryx.
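For context, comparing the raw XML today amounts to something like the Python sketch below, which produces a plain text diff of two workflow versions with the standard library. The file names and XML fragments are placeholders, and a line-based diff like this knows nothing about tools or connections, which is exactly the limitation a built-in comparison would address.

```python
import difflib


def workflow_diff(old_xml, new_xml, old_name="old.yxmd", new_name="new.yxmd"):
    """Return a unified diff (list of lines) between two workflow XML strings."""
    return list(difflib.unified_diff(
        old_xml.splitlines(),
        new_xml.splitlines(),
        fromfile=old_name,
        tofile=new_name,
        lineterm="",
    ))


# Placeholder fragments standing in for two saved versions of a workflow.
old_version = '<Configuration>\n  <Value name="Drop Down (81)">upper</Value>\n</Configuration>'
new_version = '<Configuration>\n  <Value name="Drop Down (81)">lower</Value>\n</Configuration>'

diff_lines = workflow_diff(old_version, new_version)
print("\n".join(diff_lines))
```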

When I import an Excel file into Alteryx I get an error: "shared strings root=x:sst" and Alteryx cannot read the file.

I can work around this by manually opening and saving the excel before importing it into Alteryx but this is not ideal, especially considering the automation implications.

I believe this may be happening because the XLSX generated by the source of the report has a prefix “x:” in all the tags in the Shared String XML embedded in Excel. See: https://learn.microsoft.com/en-us/office/open-xml/working-with-the-shared-string-table

Essentially, it would appear Alteryx is not able to read generated Excel sheets (e.g. from a bot) which have the prefix "x:". The second file, which has been opened and saved in Excel manually, can be read by Alteryx correctly.

Example of file as exported from "BOT":

How the same file looks once it is manually opened and saved:

Ideally Alteryx would read the file as is, i.e. with the "x:sst" tag seen above, as having to manually open and save the Excel file before it can be read is rather clunky.
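The two forms are equivalent XML: the "x:" prefix is only an alias for the SpreadsheetML namespace. The Python sketch below illustrates this with a namespace-aware parser, which returns the same strings from both variants. The sample fragments are made up for illustration, and this says nothing about how Alteryx's own reader is implemented.

```python
import xml.etree.ElementTree as ET

# Namespace URI used by SpreadsheetML parts such as the shared string table.
SPREADSHEETML_NS = "http://schemas.openxmlformats.org/spreadsheetml/2006/main"


def shared_strings(xml_text):
    """Return the text of every <t> element, whatever prefix the file uses."""
    root = ET.fromstring(xml_text)
    return [t.text for t in root.iter(f"{{{SPREADSHEETML_NS}}}t")]


# Made-up shared string tables: one prefixed (as a bot might write it),
# one using the default namespace (as Excel writes it after a manual save).
prefixed = (
    f'<x:sst xmlns:x="{SPREADSHEETML_NS}">'
    "<x:si><x:t>Region</x:t></x:si><x:si><x:t>Sales</x:t></x:si></x:sst>"
)
unprefixed = (
    f'<sst xmlns="{SPREADSHEETML_NS}">'
    "<si><t>Region</t></si><si><t>Sales</t></si></sst>"
)

print(shared_strings(prefixed))
print(shared_strings(unprefixed))
```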

Thanks!

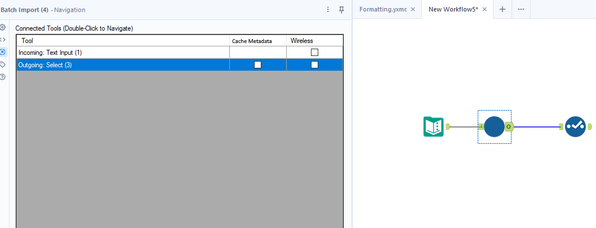

In short:

Add an option to cache the metadata for a particular tool so that it isn't forgotten when using tools that have dynamic metadata, such as batch macros, or tools whose metadata the Alteryx metadata engine can't resolve, such as the Python tool.

Longer explanation:

The Problem:

One of the issues I often encounter when making dynamic workflows or ones that require calling external services is that Alteryx often forgets the metadata of what columns to expect. This causes the workflow to forget configuration of downstream tools when a workflow is first opened or when the metadata engine refreshes. There is currently the option to disable the metadata engine from automatically refreshing but this isn't a good option because you miss out on much of the value it brings.

Some of the common tools where I encounter this issue:

- Json parse

- Batch macros

- Python tool

- Regex parsing to rows

Solution:

Instead, could we add an option to cache the metadata for a particular tool? This would save the metadata from the last time the workflow ran within the workflow's XML so that it persists when the workflow is closed and reopened. Then, when the metadata engine runs and gets to this tool, it uses the saved version in the XML instead of resolving the metadata from the tool. Obviously, when the workflow actually runs it would ignore the cached version, and any errors would still occur.

This could be an option in the navigation pane of each tool. Mockup below:

This would make developing dynamic workflows far easier and resolve issues of configuration being lost when the metadata changes and Alteryx forgets the options.

Hi,

It would be great if users have the option to display the number of records that go in and out of the different tools in your canvas. This allows users to very quickly see how many records are in their datasets, and especially quickly analyze the results of specific actions such as joins, filters etc. without the need to open each individual tool. Especially when performing joins this can be very useful to quickly see how many of your rows have been successfully joined. I think this will give users a feeling that they have more control over their data and a better understanding of what is happening in Alteryx. Also if you quickly want to review a complex workflow (especially when it is not your own) this could be a huge timesaver. Simply run the workflow and follow the numbers to see what is happening and identify tools that might cause issues.

Love to hear what you think!

I think the undo/redo capabilities in Alteryx could be greatly improved. Here is an idea that I think would be beneficial...

I'd like to see which exact tools are affected by my undo/redo actions. An idea was suggested a couple years ago to move your location on the canvas, but that was not added to the roadmap. Instead, is it possible to add the tool ID to the undo menu so that it is obvious which tool each line is detailing?

This is the current debug menu that shows your previous actions:

When a tool is created, the ID can be displayed in this menu, but this is not shown when a change is made to an existing tool. My suggestion is that the menu would say:

4. Change Sort (3) Properties

This same change should be made in the Edit dropdown menu.
