Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
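Until grayscale input is supported, one possible workaround outside the tool is to copy each gray value into three identical channels before feeding the images in. This is only a minimal sketch in plain Python; the function name and the nested-list image format are assumptions for illustration, not part of the Image Recognition Tool.

```python
def grayscale_to_rgb(image):
    """Replicate each grayscale pixel value into identical R, G and B channels."""
    return [[(pixel, pixel, pixel) for pixel in row] for row in image]


# A hypothetical 2x2 grayscale image (values 0-255), standing in for an MNIST digit.
tiny_digit = [
    [0, 128],
    [255, 64],
]

rgb_image = grayscale_to_rgb(tiny_digit)
print(rgb_image[0][1])  # (128, 128, 128)
```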

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi

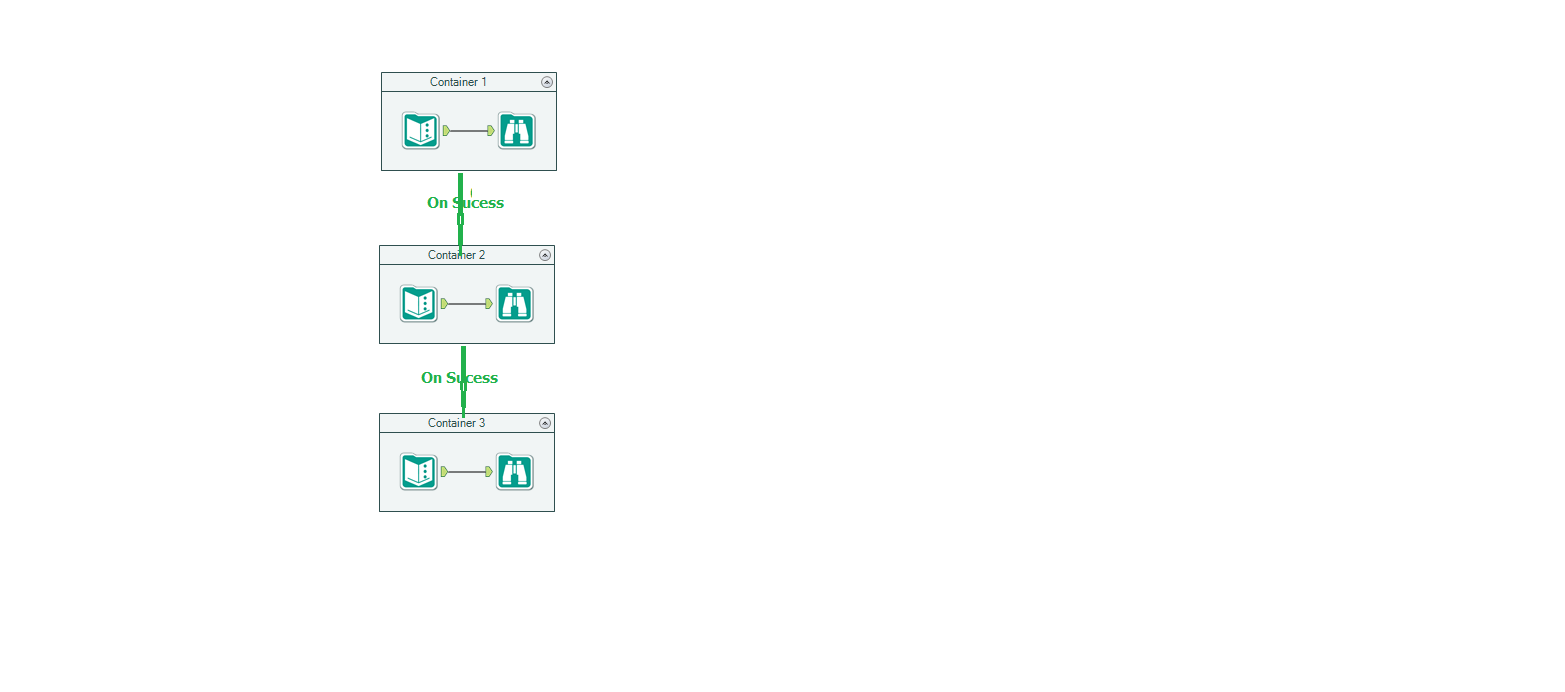

I want to control the order of execution of objects in an Alteryx workflow, but right now we ONLY have Block Until Done, which is not the right choice in many cases.

Can we have a container (say Sequence Container) and put piece of logic in each container and have control by connecting each container?

I hope we can control the execution order this way.

It might look something like the attached image.

Please add a toggle for Dark Mode as Alteryx, after all these years of using it, is burning out my retinas.

The OS and most apps have a Dark Mode theme so flipping back to a bright white canvas is very jarring. I tried to adjust the canvas colors in a more muted way but never can get it to work satisfactorily and still be as easy to read as the retina burning default.

This is a pretty quick suggestion:

I think that there are a lot of formulas that would be easier to write and maintain if a SQL-style BETWEEN operator was available.

Essentially, you could turn this:

ToNumber([Postal Code]) > 1000 AND ToNumber([Postal Code]) < 2500

Into this:

ToNumber([Postal Code]) BETWEEN 1000 AND 2500

That way, if you later had to modify the ToNumber([Postal Code]) expression, you would only have to maintain it once. It's both aesthetically pleasing and more maintainable!
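To illustrate the maintainability point, here is a minimal Python sketch of a between-style helper where the tested expression is written only once. The function name and the `inclusive` flag are assumptions for illustration. Note that SQL's BETWEEN includes both bounds, while the original expression above uses strict `>` and `<`, so the two forms differ at the endpoints.

```python
def between(value, low, high, inclusive=True):
    """Return True when value lies between low and high.

    inclusive=True mirrors SQL BETWEEN (both bounds included);
    inclusive=False mirrors the strict > / < comparison.
    """
    if inclusive:
        return low <= value <= high
    return low < value < high


# The conversion is written (and maintained) in exactly one place.
postal_code = int("1000")

print(between(postal_code, 1000, 2500))                   # True
print(between(postal_code, 1000, 2500, inclusive=False))  # False
```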

Hi everyone! I have been trying to find a way to do this without creating a new idea, but I have decided to make it an official 'Idea' to see if there is anyone else who might appreciate a feature like this (or has found their own way to do it!)

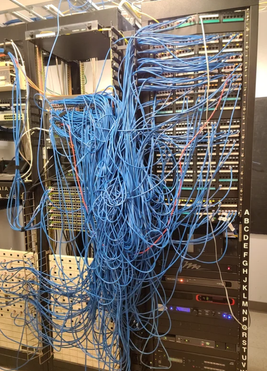

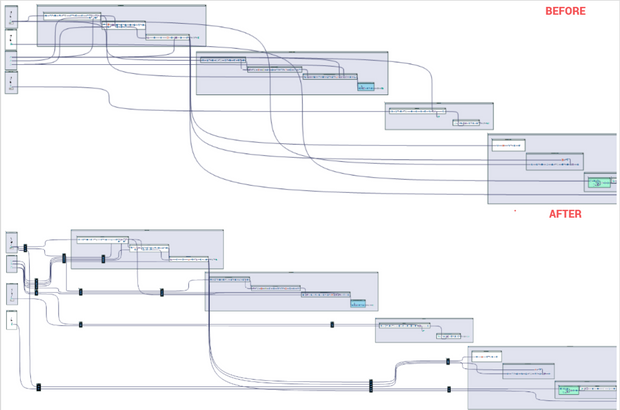

Do your workflows look like this...

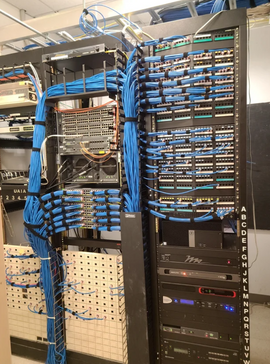

but you wish they could look like this?

Well... they can with your help!

Okay, I might be crazy... but it's worth a shot.

While I understand this is an extremely niche issue, in my experience it can become very difficult to trace the data through unmanaged lines in large workflows. I think it would be great to cable-manage canvas lines so workflows are easier to follow. Heck, while I am at it, I think we should all start calling these canvas lines cables... They don't carry electricity, but they sure do carry data!

Here is an example I created in Alteryx using select tools and containers:

I've seen this question before and have run into it myself. I'd like to see a new tool that would allow a developer (of a workflow) to choose a path of logic based upon criteria known only during the execution of a module.

If LEFT INPUT Count of records < 10,000 THEN Path1 (e.g. use a calgary join)

ELSE Path 2 (e.g. use a standard join)

endif
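The rule above could be sketched in Python roughly like this. The function name and the path labels are hypothetical; the 10,000 threshold comes from the example, and the record count is assumed to be known only at run time.

```python
def choose_join_path(left_record_count, threshold=10_000):
    """Pick a branch from a value that is only known during execution."""
    if left_record_count < threshold:
        return "path1_calgary_join"   # small left input
    return "path2_standard_join"      # everything else


print(choose_join_path(2_500))   # path1_calgary_join
print(choose_join_path(10_000))  # path2_standard_join
```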

Thanks,

Mark

Similar to the setting that you have in many individual tools (join, append, select, et al) where you can go to options and choose to "forget missing fields" it would be nice where you could go to options for the entire flow and "forget missing fields".

This would remove the headache that you have with large flows where you make a change(s) then have to go back through each and every tool to "forget" within that tool. Yes you could still do it individually, but if you chose, you could also do it universally for the entire flow all at once to all the 'missing fields'.

I'd like to see Alteryx allow a second install of your license on a second, personal machine. Tableau allows this and IMO is why there is such a robust online / blog community around that product.

For those of us who work at mid-size to large organizations, there are often strict rules governing internal data and the use of cloud-based data sources. If I discover some new trick I'd like to share with my fellow Alteryx analysts outside of my company, I have no clear way to do that, whereas with Tableau I can do it at home without using my company's data.

Being able to learn new features and test things out on commonly available public data (ever notice that Superstore data set everyone who gets Tableau has?) would accelerate what we're able to do with the community site here and the larger analytics blogging community.

Right now we can create Tableau extract files (.tde), but cannot read them into Alteryx -- this limits the partnership of these two companies.

Please add the functionality to import .tde files.

Best,

Jeremy

I constantly find myself using Pre and Post SQL commands in the Output tool to run SQL when I don't actually have any data to output.

One example is when I load data into S3 and want to load it into Redshift. I have SQL code to run but no data to Output - I end up running a dummy row into a temp table.

So can we have an SQL tool that simply acts like a Pre-SQL command, without the associated data output? Once the command has run, we should be able to continue the workflow, so the tool should have an optional input and output, like the Run Command tool.
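As a rough sketch of the requested behavior, the Python below runs a list of SQL statements that produce no output rows and then lets the caller carry on. SQLite's in-memory database stands in for Redshift here, and the function, table, and column names are all hypothetical.

```python
import sqlite3


def run_sql_only(connection, statements):
    """Execute statements that return no rows; return how many were run."""
    executed = 0
    with connection:  # commit on success, roll back if a statement fails
        for statement in statements:
            connection.execute(statement)
            executed += 1
    return executed


conn = sqlite3.connect(":memory:")  # stand-in for the real database
count = run_sql_only(conn, [
    "CREATE TABLE staging (id INTEGER, name TEXT)",
    "INSERT INTO staging VALUES (1, 'a')",
    "CREATE TABLE target AS SELECT * FROM staging",
])

# The workflow can continue once the commands have finished.
rows_loaded = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]
print(count, rows_loaded)  # 3 1
```

No dummy row or temp table is needed because nothing is written by the caller itself; the statements do all the work.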

The bak file that is automatically created (and re-created if deleted) really clutters up our folders.

Please allow us to either turn it off, or specify a different location to hold our back up files.

Thanks

In our organization, people are moving away from network drives to BOX as a file repository, and they need to connect to BOX from Alteryx as an input and output platform so they can read and write files.

Currently, a few users can use BOX as a repository through the BOX Sync tool (mapping BOX as a network drive), but that does not help when they save a workflow to the Gallery and run or schedule it there. A connector for BOX would be a great help.

Referencing the previous idea: Inputs/Output should have the option to read/write a compressed file (ZIP or GZIP)

This idea has been implemented for inputting .zip files. However, we still need to use the run command workaround for outputs. It's very common for many users to want to output their .csv, .xlsx, .pdf to a .zip. The functionality would also need to extend to Gallery.
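For reference, this is roughly what writing a .csv straight into a .zip looks like with Python's standard library, as an illustration of the requested output behavior. It is only a sketch; the function name, file names, and sample rows are assumptions.

```python
import csv
import io
import os
import tempfile
import zipfile


def write_csv_to_zip(zip_path, member_name, header, rows):
    """Write rows as a CSV file stored inside a new ZIP archive."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(rows)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(member_name, buffer.getvalue())


with tempfile.TemporaryDirectory() as folder:
    zip_path = os.path.join(folder, "output.zip")
    write_csv_to_zip(zip_path, "output.csv", ["id", "name"], [[1, "a"], [2, "b"]])
    # Read the archive back to confirm what was written.
    with zipfile.ZipFile(zip_path) as archive:
        members = archive.namelist()
        content = archive.read("output.csv").decode("utf-8")

print(members)  # ['output.csv']
```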

See the following links for people that are looking for this type of functionality:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Output-files-to-ZIP/td-p/163502

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Zip-files/td-p/151456

Feel free to merge this idea with the previous one for continuity.

We have discussed, on several occasions and in different forums, the importance of providing Alteryx with order-of-execution control, conditional execution, design patterns and even orchestration.

I presented this idea some time ago, but someone asked me if it was posted, and since it was not, I’m putting it here so you can give some feedback on it.

The basic concept behind this idea is to allow us (users) to have:

- Design Patterns

  - Repetitive patterns to be reusable.

    - Select after an Input tool

    - Drop Nulls

    - Get not matching records from join

- Conditional execution

  - Tell Alteryx to execute some logic if something happens.

    - Record count

    - Errors

    - Any other condition

- Order of execution

  - Need to tell Alteryx what to run first, what to run next, and so on…

    - Run this first

    - Execute this portion after previous finished

    - Wait until “X” finishes to execute “Y”

- Orchestration

  - Putting all together

This approach involves some functionalities that are already within the product (like exploiting Filtering logic, loading & saving, caching, blocking among others), exposed within a Tool Container with enhanced attributes, like this example:

The approach is to extend Tool Container’s attributes.

This proposition uses actual functionalities we already have in Designer.

So, basically, the Tool Container gets ‘superpowers’ with the addition of some capabilities, such as accepting input data, saving the contents of the container (to create a design pattern or a very commonly used sequence of tools chained together), outputting data, and running the tools included in the container, plus a configuration screen like:

- Refers to the actual interface of the Tool Container.

- Provides the ability to disable a Container (and all tools within it) once it runs.

  - Idea based on actual behavior: enabling or disabling a Tool Container from an Interface tool.

- Input and output data for the container’s logic: allows a container to pick up and/or save files, to be used in later containers or to persist data as a partial result of the entire workflow’s logic (for example, updating a dimensions table).

  - Based on actual behavior: Input & Output Data, Cache, Run Command tools, and some macros like Prepare Attachment.

- Order of Execution: can be Absolute or Relative. With Absolute, we take the containers in order and execute their contents. With Relative, we can configure which container should run before and after, block until the previous container finishes, or wait until this container finishes before executing the next container in the list.

  - Based on actual behavior: Block Until Done, Cache, Find Replace, some Interface Designer capabilities (for chained apps, for example), macros’ basic behaviors.

- Conditional Execution: to conditionally execute other containers, conditions must be evaluated. In this case, the idea is to evaluate conditions on the data, on Interface tools, or on the occurrence of errors/warnings.

  - Based on actual behavior: Filter tool, some Interface tools, Test tool, Cache, Select.

- Notes: documentation text that will appear automatically inside the container, with options to place it above or below the tools, or to hide it.
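To make the Relative ordering and Conditional Execution ideas more concrete, here is a small Python sketch. It is not how Designer works today: containers are modeled as plain functions, "run after" relations as a dependency map, and conditions as optional callables, all of which are assumptions for illustration. The ordering uses `graphlib.TopologicalSorter` from the standard library (Python 3.9+).

```python
from graphlib import TopologicalSorter


def run_containers(containers, run_after, conditions=None):
    """Run containers in dependency order, skipping those whose condition fails.

    containers: name -> callable with the container's logic
    run_after:  name -> set of container names that must finish first
    conditions: name -> callable returning True when the container should run
    """
    conditions = conditions or {}
    graph = {name: set(run_after.get(name, ())) for name in containers}
    executed = []
    for name in TopologicalSorter(graph).static_order():
        check = conditions.get(name)
        if check is not None and not check():
            continue  # conditional execution: skip this container
        containers[name]()
        executed.append(name)
    return executed


state = {"records": 0}
containers = {
    "report": lambda: None,
    "clean": lambda: None,
    "load": lambda: state.update(records=0),  # pretend the input was empty
}
run_after = {"clean": {"load"}, "report": {"clean"}}
conditions = {"report": lambda: state["records"] > 0}  # record-count condition

order = run_containers(containers, run_after, conditions)
print(order)  # ['load', 'clean']
```

Here "report" is skipped because the record-count condition is false, while "load" and "clean" still run in the configured order.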

This should end a brief introduction to the idea, but taking it a little further, it will allow even to have something like an Orchestration layout, where the users can drag and drop containers or patterns and orchestrate them in a solution, like we can do with the Visual Layout Tool or the Interactive Chart tool:

I'm looking forward to hearing what you think.

Best

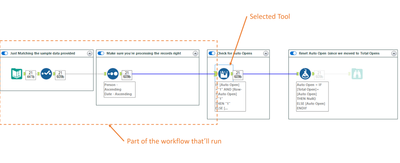

The idea is to have a Run option, where the workflow runs everything up to the selected tool (Like the Cache functionality does).

You select the tool, hit Run Up, and the workflow executes everything "before" the selected tool.

That would make development much easier, especially when dealing with big workflows and constantly changing data.
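One way to think about "Run Up" is as a walk backwards over the workflow's connections, collecting every tool that feeds the selected one. The Python below is a minimal sketch under that assumption; the tool names and the (source, target) connection format are made up for illustration, and the selected tool itself is included in the result.

```python
def tools_to_run(connections, selected):
    """Return the selected tool plus every tool upstream of it.

    connections: iterable of (source, target) pairs describing the canvas lines.
    """
    upstream = {}
    for source, target in connections:
        upstream.setdefault(target, set()).add(source)

    needed, stack = set(), [selected]
    while stack:
        tool = stack.pop()
        if tool in needed:
            continue
        needed.add(tool)
        stack.extend(upstream.get(tool, ()))
    return needed


connections = [
    ("input_a", "join"),
    ("input_b", "join"),
    ("join", "summarize"),
    ("summarize", "output"),
    ("input_c", "browse"),  # unrelated branch, should not run
]

print(sorted(tools_to_run(connections, "summarize")))
# ['input_a', 'input_b', 'join', 'summarize']
```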

Hello,

We use the Pre-SQL statement of the Input tool to set some connection parameters. Sadly, we cannot do that in an In-DB workflow. This would be a total game-changing feature for us.

Best Regards,

Simon

Ever tried to copy a field rename from one Select tool to another, or from one Summarize tool to another?

Have you noticed that it doesn't work?

I think it should. 🙂

i.e., if you click on the rename box ("Total") and enter ctrl-c, when you enter ctrl-v in the other tool, it pastes this:

Field2 Sum Total

not just the name "Total"

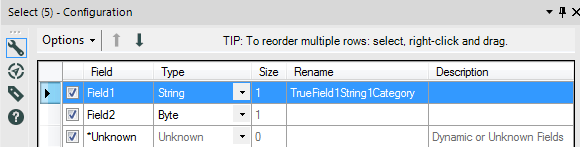

Instead of just the renamed field "Category", the select tool pastes this:

True Field1 String 1 Category

In the tools that embed the "Rename" option (Select, Append Fields, Join, Join Multiple), copying the new name copies the field's entire configuration: tick/untick, original field name, type, size, new name and description.

In my opinion, it should copy only the new name. This would be useful, especially because when you change the name of a field, it isn't automatically changed in subsequent tools, so copying it to replace it in those tools is faster than retyping it every time.

Allow users to pull data from Power BI (e.g., datasets and usage data) so that it can be manipulated in Alteryx.

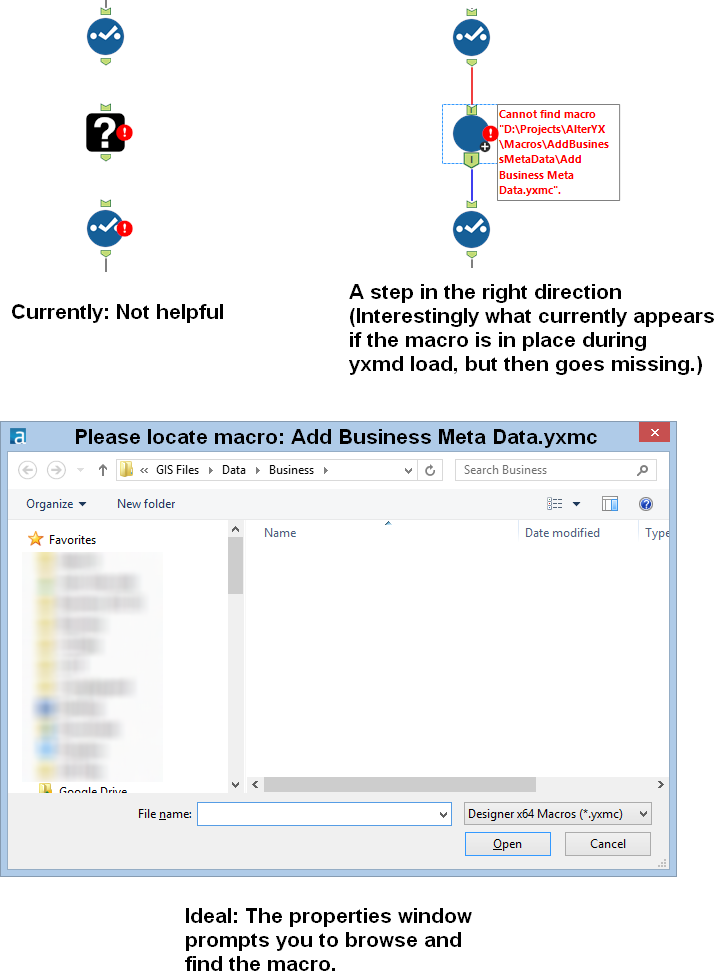

Idea: Prompt the user to find a missing macro instead of the current UX of a question mark icon.

Issue: When a macro referenced in a workflow is missing, then there is no way to a) know what the name of the macro was (assuming you were lazy like me and didn't document with a comment) and b) find the macro so you can get back to business.

When this happens to me now, I have to go to the XML view, search for macros, and cycle through them until I find the one that's missing. Then I have to either copy the macro back into that location or manually edit the workflow XML. Not cool, man.

Solution: When a macro is missing, the image below at the right should be shown. In the properties window, a file browse tool should allow the user to find the macro.
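In the meantime, the manual XML search can be scripted. The sketch below is only an illustration: it assumes macro references appear as a `Macro` attribute on an `EngineSettings` element inside each `Node`, which is how the sample XML here is laid out but may not match every workflow file, and the function names and sample paths are hypothetical.

```python
import xml.etree.ElementTree as ET
from pathlib import Path

# Hypothetical, simplified workflow XML used only for this example.
SAMPLE_WORKFLOW = """<AlteryxDocument>
  <Nodes>
    <Node ToolID="1"><EngineSettings Macro="cleanse.yxmc" /></Node>
    <Node ToolID="2"><EngineSettings EngineDll="AlteryxBasePluginsEngine.dll" /></Node>
  </Nodes>
</AlteryxDocument>"""


def find_macro_references(xml_text):
    """Return (tool_id, macro_path) pairs for every node that references a macro."""
    root = ET.fromstring(xml_text)
    return [
        (node.get("ToolID"), settings.get("Macro"))
        for node in root.iter("Node")
        for settings in node.findall("EngineSettings")
        if settings.get("Macro")
    ]


def missing_macros(xml_text, base_dir):
    """Return the macro references whose files do not exist under base_dir."""
    return [
        (tool_id, macro)
        for tool_id, macro in find_macro_references(xml_text)
        if not (Path(base_dir) / macro).exists()
    ]


print(find_macro_references(SAMPLE_WORKFLOW))  # [('1', 'cleanse.yxmc')]
```

Calling `missing_macros` with the workflow's folder would then list the tool IDs and macro names to go looking for.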

The "Manage Data Connections" tool is fantastic to save credentials alongside the connection without having to worry when you save the workflow that you've embedded a password.

Imagine if there were a similar utility to handle credentials/environment variables:

- I could create an entry, give it a description, a username, and an encrypted password stored in my options, then refer to that for configurations/values throughout my workflows.

- Tableau credentials in the publish to tableau macro

- Sharepoint Credentials in the sharepoint list connector

- When my password changes I only have to change it in one place

- If I handoff the workflow to another user I don't have to worry about scanning the xml to make sure I'm not passing them my password

- When a user opens my workflow that doesn't have a corresponding entry in their credentials manager they would be prompted using my description to add it.

- Entries could be exported and shared as well (with passwords scrubbed)

Example Entry Tableau:

| Field | Value |
|---|---|
| Alias | Tableau Prod |
| Description | Tableau Production Server |
| UserID | JPhillips |
| Password | ********* |

Then when configuring a tool you could put in something like [Tableau Prod].[Password] and it would read in the value.

Or maybe for Sharepoint:

| Field | Value |
|---|---|
| Alias | TeamSP |
| Description | Team sharepoint location |
| UserID | JPhillips |
| Password | ********* |
| URL | http://sharepoint.com/myteam |

Or perhaps for a team file location:

| Field | Value |
|---|---|
| Alias | TeamFiles |
| Description | Root directory for team files |
| Path | \\server.net\myteam\filesgohere |

Any of these values could be referenced in tool configurations, formulas, macro inputs by specifying the Alias and field.
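A rough Python sketch of how such `[Alias].[Field]` references might be resolved is shown here. The entry store is just a dictionary and the function name is hypothetical; a real implementation would keep passwords encrypted rather than in plain text.

```python
import re

# Matches references of the form [Alias].[Field]
REFERENCE = re.compile(r"\[([^\[\]]+)\]\.\[([^\[\]]+)\]")


def resolve_references(text, entries):
    """Replace every [Alias].[Field] in text with the stored value."""
    def replace(match):
        alias, field = match.group(1), match.group(2)
        try:
            return entries[alias][field]
        except KeyError:
            raise KeyError(f"No credential entry for [{alias}].[{field}]") from None
    return REFERENCE.sub(replace, text)


# Hypothetical entries modeled on the examples above (no real secrets).
entries = {
    "TeamSP": {
        "Description": "Team sharepoint location",
        "UserID": "JPhillips",
        "URL": "http://sharepoint.com/myteam",
    },
}

print(resolve_references("[TeamSP].[URL]/Lists", entries))
# http://sharepoint.com/myteam/Lists
```

Raising an error for an unknown alias is where a prompt to add the missing entry could hook in.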
