Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi,

Currently, to label or annotate a tool we need to double-click the tool to bring up the configuration window, then click the annotation icon, then click in the annotation textbox.

My suggestion: when a tool is selected, simply press the Enter/Return key and start typing the annotation right there (inline editing). This would save a couple of clicks.

Thanks.

Hello,

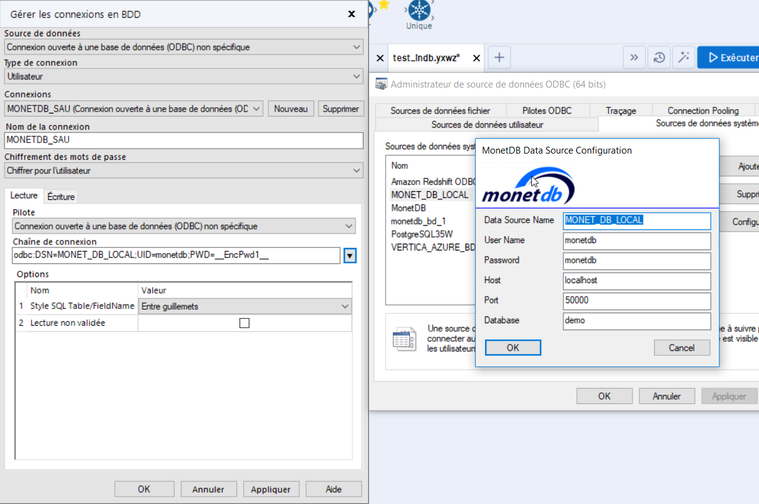

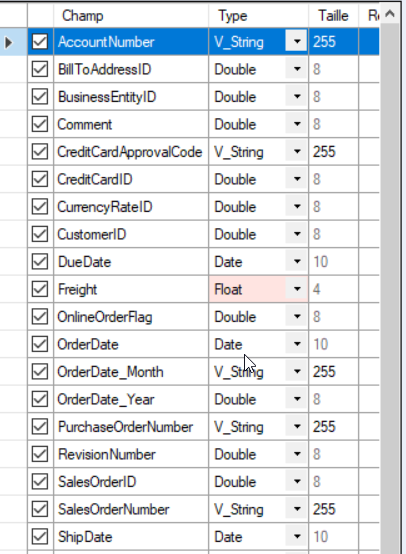

My issue is very easy to solve. I want to use the generic ODBC In-Database connection for a specific database (MonetDB here, but that isn't important).

The connection works just fine. However, I cannot create a table because the data types are changed to types that do not even exist. Here is my data with some Date-type fields:

And here is the error: my Data Stream In gives me this very interesting message:

Error: Data Stream In (2): Error creating table "formation.temp1": [MonetDB][ODBC Driver 11.31.11]Type (datetime) unknown in: "create table "formation"."temp1" ("AccountNumber" varchar(255),"BillToAddressID"

syntax error, unexpected IDENT in: ""Freight""

CREATE TABLE "formation"."temp1" ("AccountNumber" varchar(255),"BillToAddressID" float,"BusinessEntityID" float,"Comment" float,"CreditCardApprovalCode" varchar(255),"CreditCardID" float,"CurrencyRateID" float,"CustomerID" float,"DueDate" datetime,"Freight" real,"OnlineOrderFlag" float,"OrderDate" datetime,"OrderDate_Month" varchar(255),"OrderDate_Year" float,"PurchaseOrderNumber" varchar(255),"RevisionNumber" float,"SalesOrderID" float,"SalesOrderNumber" varchar(255),"ShipDate" datetime,"ShipMethodID" float,"ShipToAddressID" float,"Status" float,"SubTotal" float,"TaxAmt" float,"TotalDue" float)

1/ My field is a Date, so why is it converted to Datetime?

2/ Datetime is not even a usual field type in SQL databases (at least it is not supported by MonetDB, Vertica, PostgreSQL, Oracle, etc.). It should obviously be timestamp.

Currently, this non-specific In-Database ODBC connection cannot be used at all!
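To make the request concrete, here is a minimal sketch of the kind of type mapping being asked for. It is not how Designer generates its DDL; the names `GENERIC_SQL_TYPES` and `build_create_table` and the field-type labels are hypothetical, chosen only to show a Date field mapping to `date` and a DateTime field to `timestamp`.

```python
# Hypothetical mapping from Alteryx-style field types to widely supported SQL types.
# The keys and values are assumptions for illustration, not Designer's real mapping.
GENERIC_SQL_TYPES = {
    "String": "varchar(255)",
    "Double": "float",
    "Float": "real",
    "Date": "date",            # a Date stays a date
    "DateTime": "timestamp",   # instead of the unsupported "datetime"
}


def build_create_table(schema, table, fields):
    """Return a CREATE TABLE statement for a list of (name, field_type) pairs."""
    columns = ",".join(
        f'"{name}" {GENERIC_SQL_TYPES[field_type]}' for name, field_type in fields
    )
    return f'CREATE TABLE "{schema}"."{table}" ({columns})'


ddl = build_create_table(
    "formation",
    "temp1",
    [("AccountNumber", "String"), ("DueDate", "Date"), ("Freight", "Float")],
)
print(ddl)
```

With a mapping like this, the statement sent to the database would no longer contain the `datetime` type that the driver rejects.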

I recently learned that Alteryx doesn't support Denodo data sources. Our company uses Denodo as a data virtualization tool and Alteryx for data blending. The request is for Alteryx to start supporting Denodo as a data source so that our company can reach out to Alteryx for any support-related issues with Denodo.

I reported this to the support team but was told it was by design and to post here.

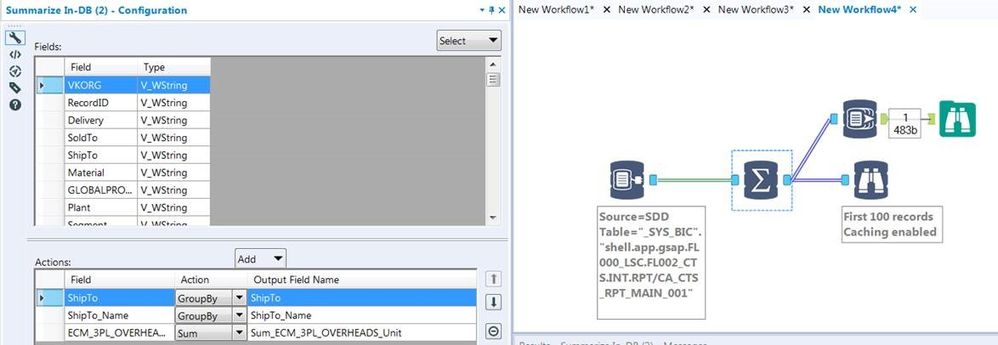

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL code for simple operations. No matter which tools you use, every statement seems to start with a nested 'Select * From'.

Example Simple workflow:

This is a simple Select and Group by but the SQL Generated is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries up to 13x slower than they should be, thereby defeating the point of using In-DB. As you can imagine, in a workflow with multiple Connect In-DB tools this adds up to a really substantial amount of time. The example above is from an SAP HANA DB view with 1.9m rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see the same behaviour for all In-DB tools: each tool creates another nested Select with its particular operator.

MY SUGGESTION:

So my suggestion is that Alteryx should combine the SQL of the first few tools and avoid using SELECT * completely unless no Select tools have been used. So it should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably it should combine/flatten everything up until the first join or union. But Select + Filter are a must!

Note: some DBs seem to cope OK with un-nesting these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to browse 100 records (due to the select *).
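The simplest case of the flattening suggested above can be sketched as a text rewrite. This is only an illustration under stated assumptions: the function name `flatten_select_star` is made up, the pattern handles just a bare `SELECT *` over a plain table or view reference, and a real implementation would work on the query tree rather than on the SQL text.

```python
import re

# Matches: FROM (SELECT * FROM <source>) AS "<alias>"
# where <source> is only a (possibly schema-qualified) table or view name.
# Subqueries with WHERE clauses, joins, etc. do not match and are left alone.
_IDENTIFIER = r'(?:"[^"]+"|\w+)'
_NESTED_SELECT_STAR = re.compile(
    r"FROM\s*\(\s*SELECT\s+\*\s+FROM\s+"
    r"(" + _IDENTIFIER + r"(?:\." + _IDENTIFIER + r")*)"
    r'\s*\)\s*AS\s+("[^"]+")',
    re.IGNORECASE,
)


def flatten_select_star(sql):
    """Replace a trivial nested SELECT * subquery with a direct table reference."""
    return _NESTED_SELECT_STAR.sub(r"FROM \1 AS \2", sql)


generated = (
    'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"\n'
    'FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"\n'
    'GROUP BY "ShipTo", "ShipTo_Name"'
)
flattened = flatten_select_star(generated)
print(flattened)
```

Applied to the generated statement from this post, the sketch yields the hand-written form that ran in about 1.2 seconds.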

As we do more work analyzing the canvases that our folk are producing, it's becoming more and more necessary to have a well-documented definition and schema for the XML that is used for Alteryx canvases.

Please could you publish the full XML definition and schema for Alteryx canvasses - this will allow groups to perform deeper analytics on how people are using Alteryx, automate quality checks; look for learning gaps; scan for dependencies etc?

Note: this relates to an idea from @dataprep here: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Documentation-tool-list-fileformat/idi-p/184...
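As an illustration of the analytics a published schema would enable, here is a rough sketch that counts the tools used on a canvas. Because the schema is not published, the element and attribute names (`Node`, `GuiSettings`, `Plugin`) and the sample fragment are assumptions based on what workflow files appear to contain, and `count_tools` is a made-up helper.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# A tiny hand-written fragment in the shape workflow files are ASSUMED to have.
SAMPLE_WORKFLOW = """
<AlteryxDocument yxmdVer="2019.4">
  <Nodes>
    <Node ToolID="1"><GuiSettings Plugin="AlteryxBasePluginsGui.DbFileInput.DbFileInput"/></Node>
    <Node ToolID="2"><GuiSettings Plugin="AlteryxBasePluginsGui.Filter.Filter"/></Node>
    <Node ToolID="3"><GuiSettings Plugin="AlteryxBasePluginsGui.Filter.Filter"/></Node>
  </Nodes>
</AlteryxDocument>
"""


def count_tools(workflow_xml):
    """Count tools on a canvas by the last segment of each Plugin name."""
    root = ET.fromstring(workflow_xml.strip())
    plugins = (gui.get("Plugin", "unknown") for gui in root.iter("GuiSettings"))
    return Counter(plugin.rsplit(".", 1)[-1] for plugin in plugins)


tool_counts = count_tools(SAMPLE_WORKFLOW)
print(tool_counts)
```

Without an official definition, every such script rests on guesses like these, which is exactly the gap this idea asks Alteryx to close.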

Scenario:

Upstream tools end in a Summarize Tool that outputs a set of records with the following fields: EmailAddress, AttachmentUNCPath. So you get a bunch of recipients with various attachments. Each recipient can have different attachments, and this will change each time it's run. In other words, it's fully dynamic.

If the same recipient has multiple attachments, then it would be nice to group the recipient and just separate the attachments with a semi-colon (or whatever) in the same field. Essentially creating one record per recipient, and therefore one email per recipient, and having the Email Tool attach each file. In other words, mbarone@paychex.com gets one email with 5 attachments. And next week maybe only 3 attachments, and so on.

Currently the only way I see to accomplish this is with a batch macro.

It would be far more convenient if the Email Tool accepted multiple attachments in a single field by default, as long as they are separated by a semi-colon, much as the "to" field already does.
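The grouping step described above can be sketched in a few lines. This is a plain-Python illustration rather than an Alteryx workflow; the function name `group_attachments`, the example addresses, and the UNC paths are all hypothetical.

```python
from collections import defaultdict


def group_attachments(records, separator=";"):
    """Collapse (email, attachment_path) records into one entry per recipient."""
    grouped = defaultdict(list)
    for email, path in records:
        if path not in grouped[email]:  # skip duplicate attachments
            grouped[email].append(path)
    return {email: separator.join(paths) for email, paths in grouped.items()}


# Hypothetical input: one record per (recipient, attachment) pair.
records = [
    ("a@example.com", r"\\fileserver\reports\a.pdf"),
    ("a@example.com", r"\\fileserver\reports\b.pdf"),
    ("b@example.com", r"\\fileserver\reports\c.pdf"),
]
one_row_per_recipient = group_attachments(records)
print(one_row_per_recipient)
```

Each resulting value is the semicolon-separated attachment field the Email Tool would need to accept for this idea to work.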

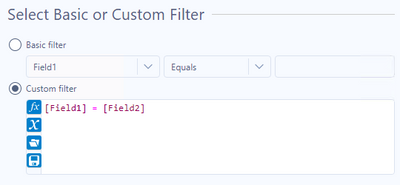

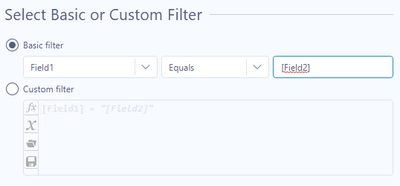

Sometimes I want to set up a filter to compare the values in two fields in my data set. The basic filter option would be much more powerful and configuration would be quicker if this option allowed this.

For example, currently I must use a custom filter to check if Field1 and Field2 are equal:

I would love to have the option to either use a static value in the basic filter (as you can now) or select a field name from a dropdown:

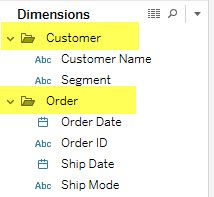

Tableau allows users to do three very useful things to make data more usable for end users, but this functionality is not available with the Publish To Tableau Server tool.

- Foldering of dimensions/measures

- Creating hierarchies out of dimensions

- Adding custom comments to fields that are visible to users when they hover

This functionality allows subject matter experts to create data sources that can be easily understood by everyone within their organizations.

Please "star" this idea if you would like to see functionality in Alteryx that would enable you to create a metadata layer in the "Publish to Tableau Server" tool or in an accompanying tool.

When saving an Alteryx module (yxmd, yxmc, yxwz, yxzp), can we have a simple "SAVE AS" function that allows us to choose the version number? Conversely, could we open a module saved in a newer version with a warning message rather than an error?

In either case there would be the logical CAVEAT that certain functions or features may not be compatible with the save/open function.

Thanks,

Mark

Could you expose a link to the Keyboard Shortcuts (which is here: https://help.alteryx.com/2019.4/HotKeys_Shortcuts.htm?Highlight=keyboard%20shortcuts) on the primary help menu (screenshot below)

This will allow people to get quicker in Alteryx by exposing these shortcuts to more users.

It would be great if you could add an "eval" function. This would be similar to R or Access, where you pass a string to the eval function and it evaluates the string. My made-up use case would be something like this: I have 1 million rows of data with 20 fields. The first 10 are value1, value2 ... value10, and the second 10 are value1_right, value2_right ... value10_right. I would like to replace valuex with valuex_right if valuex is null. With a Multi-Field Formula tool and value1-10 selected, I could write something like this: eval("IIF(ISnull([_CurrentField_]),["+[_CurrentFieldName_]+"_right],[_CurrentField_])"). Thanks!
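For readers unfamiliar with the pattern, the intended result of that expression can be sketched in Python. This only illustrates the desired behaviour, not Alteryx formula syntax; rows are modelled as dictionaries, null as `None`, and `fill_from_right` is a made-up name.

```python
def fill_from_right(row, fields, suffix="_right"):
    """Return a copy of row where each null field takes its <field>_right value."""
    filled = dict(row)
    for name in fields:
        if filled[name] is None:
            # The partner field name is built dynamically, which is what the
            # proposed eval function would allow inside a Multi-Field Formula.
            filled[name] = filled[name + suffix]
    return filled


row = {"value1": None, "value1_right": 10, "value2": 5, "value2_right": 7}
result = fill_from_right(row, ["value1", "value2"])
print(result)
```

The key point is the dynamically constructed field name, which the current formula language cannot reference without something like eval.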

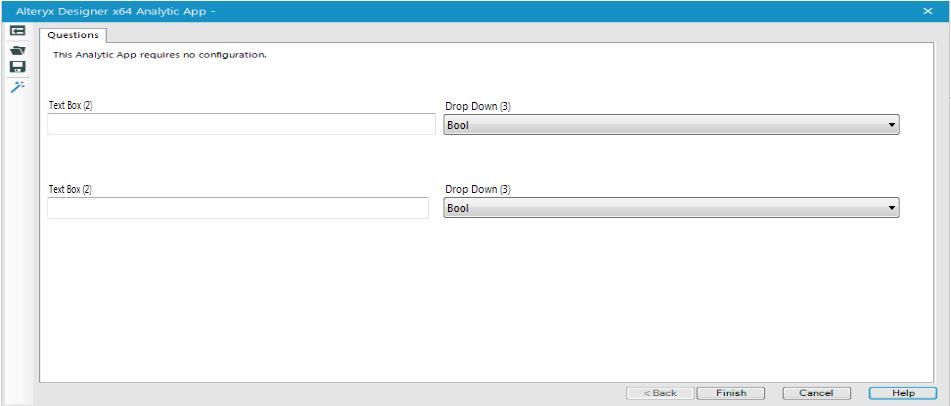

Hi!

This idea is about improving the presentation of the GUI elements (Interface Tools), for example when they are used in an Analytic App.

It would be great if it were possible to position the Interface Tools side by side as well, instead of only one above the other.

Best regards

Mathias

As of Version 10.6, Alteryx supports connecting to ESRI File Geodatabases from the Input tool, but it doesn't support writing to a geodatabase. This is something we would really like to see implemented in a future version of Alteryx. Those of us working with ESRI products and/or any of the ESRI online mapping systems can do our processing in Alteryx and store large files as YXDBs, but ultimately need our outputs for display in ArcOnline to be in shapefile or geodatabase feature class format. Shapefiles have a size limit of 2 GB and limits on field name length. Many of the files we work with are much larger than this and require geodatabases for storage, which are not limited by size (GDB size is unlimited, 1 TB max per feature class) and allow longer field names (160 chars). Right now, we have to write to one (or many) shapefile(s) from Alteryx, then import them into a GDB using ArcMap or ArcPy. This can be an arduous process when working with large amounts of data or multiple files.

The latest ESRI API allows both read and write access to GDBs -- is there a way we can add this to the list of valid output formats in Alteryx?

This idea is an extension of an older idea:

https://community.alteryx.com/t5/Alteryx-Product-Ideas/ESRI-File-Geodatabase/idi-p/1424

From Wikipedia

Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data.[1] The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect the fact that the architecture of the system can shift to solve different types of data problems. Druid is commonly used in business intelligence/OLAP applications to analyze high volumes of real-time and historical data.[2] Druid is used in production by technology companies such as Alibaba,[2] Airbnb,[2] Cisco,[3] eBay,[4] Netflix,[5] Paypal,[2] Yahoo,[6] and Wikimedia Foundation.[7]

More and more companies are going from Hive to Druid for Dataviz needs, maybe it's time to look for Druid Integration with Alteryx?

At the moment if a part of your python code takes more than 30s to run, Jupyter times out and Alteryx cancels the workflow. This makes the Python Tool unusable for anything intensive and the timeout should be removed by default or be configurable per workflow.

I've made this idea as none of the solutions in these threads feel satisfactory:

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is available specifically for Snowflake environments hosted on AWS, but does not provide functionality for environments using Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

I think it would be incredibly helpful for Alteryx to include a "Fuzzy Join" operator, similar to what is described in this article: http://www.decisivedata.net/blog/alteryx-fuzzy-join-workflow/

On virtually every client/project I work on, there is a need to clean up data. Most of the time, that involves standardizing to some existing list of data. However, as we all know, data from different systems or data collected manually will not match perfectly in all cases. This is most often when I tend to use the Fuzzy Match tool.

However, I have to use a lot of weird steps to effectively create a "Fuzzy Join", which is something I've done using database functions in the past. I think it would be great if a new tool were created that would do the following:

- Accept two inputs, one for the "raw" data and another for the "list" of data to match to.

- Perform a fuzzy join based on functionality similar to the Fuzzy Match tool: convert data to metaphone keys and then run Jaro/Levenshtein matches. By default, return only the highest matching result.

- Expand the pre-process functionality to include words to exclude from the analysis (beyond just "and", "the" and "in").

- Match on the whole string. No need to try and do joins based on partial words within a string.

This seems like a very common thing (I've created a macro for this anyway) that could be made to be simpler for everyday use.
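The requested behaviour can be roughly sketched as follows. This is a simplified stand-in and not the Fuzzy Match tool's algorithm: it skips the metaphone step and scores candidates with plain Levenshtein distance only. The function names, the example company names, and the 0.8 threshold are assumptions for illustration.

```python
STOP_WORDS = {"and", "the", "in"}  # the pre-process exclusion list; extend as needed


def normalize(text, stop_words=STOP_WORDS):
    """Lower-case the whole string and drop excluded words."""
    return " ".join(w for w in text.lower().split() if w not in stop_words)


def levenshtein(a, b):
    """Classic dynamic-programming edit distance between two strings."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(
                min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost)
            )
        previous = current
    return previous[-1]


def similarity(a, b):
    """Scale edit distance to a 0..1 score, where 1.0 means identical."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def fuzzy_join(raw_values, reference_list, threshold=0.8):
    """Pair each raw value with its best reference match, or None below threshold."""
    results = []
    for raw in raw_values:
        scored = [(similarity(normalize(raw), normalize(ref)), ref) for ref in reference_list]
        score, best = max(scored, key=lambda pair: pair[0])
        results.append((raw, best if score >= threshold else None, score))
    return results


reference = ["Acme Company", "Globex Corporation"]
matches = fuzzy_join(["The Acme Company", "Globex Corpration", "Zzz"], reference)
for raw, matched, score in matches:
    print(raw, "->", matched, round(score, 3))
```

Only the highest-scoring match is returned for each raw value, and the comparison is made on the whole string, as the bullet points above request.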

Thanks!

I would like Alteryx to create an internship support program that provides a license similar to a trial but for an extended period, say 6 to 8 weeks, and tied to Core certification. You could repackage much of the existing training into a curriculum aimed at educating new users sufficiently on the elements necessary to pass the Core certification within a short time frame.

Our organization just launched an internship program and had our first group of interns start 5 weeks ago. I had to come up with a plan that provided the intern a valuable experience. I decided to make Alteryx Core certification a key objective and put him on a spare license we had for the duration and worked with him to get his core.

I think this could be a great marketing tool for Alteryx. It would get more people entering the workforce educated about your product so that no matter where they end up they might already be a fan and suggest the tool as a solution in a new job that doesn’t currently know about you. Conversely it gives interns a certification that shows they know more than the other applicants for a job where Alteryx is already a tool. I am sure there are tax benefits to Alteryx as well for each license used.

This is kind of how we discovered Alteryx: we had issues with volume of data and technology limitations (Excel), and someone had used Alteryx at a prior company and suggested we try it out. We purchased a couple of licenses, then within a couple of years we had 16 licenses. You can't sell to someone who doesn't know you exist. The internship-type license is a good way to expand the list of people in the workplace who know you exist. Even better, they will have reached a level of knowledge, Core certification, that gives them a basic appreciation of your value.

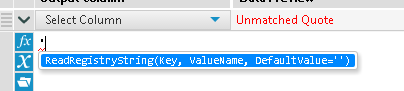

Ok Alteryx, we totally love your product. And I've got a super quick fix for you. Why on earth would you Autocomplete the ubiquitous tick mark as "ReadRegistryString(Key, ValueName, DefaultValue='')"?

I find myself in this situation constantly where, 'dummy' suddenly becomes 'dummyReadRegistryString('HKEY_LOCAL_MACHINE\SOFTWARE\SRC\Alteryx\4.1', 'InstallDir')' the moment I strike the enter key.

Pls help, I don't ask for much.

There is a need when visualizing in-Database workflows to be able to visualize sorted data. This sorting could be done 1 of 2 ways: In a browse tool, or as a stand-alone Sort tool. Either would address the need. Without such a tool being present, the only way to sort the data is to "Data Stream Out" and then visualize the data in Alteryx. However, this process violates the premise of the usefulness of the in-DB toolkit, which is to keep your data in-DB and process using the DB engine. Streaming out big data in order to add a sort is not efficient.

Granted, the in-DB processing doesn't care whether data is sorted or not. However, when attempting to find extreme values after an aggregation, or when trying to identify something as simple as whether null values are present in a field, then a sort becomes extremely useful, and a necessary tool for human consumption of data (regardless of the database's processing needs).

Thanks very much for hearing my idea!
