Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition" tool for a few days, I think it could be improved:

> by listing the image-dimension constraints next to each of the pre-trained models,

> by adding a dedicated tool to split the training data correctly (so that each label has an equivalent number of images),

> and finally, by allowing the tool to accept black-and-white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
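Until the tool offers these options, both the label balancing and the grayscale limitation can be worked around before the images reach the tool. The sketch below is plain Python and assumes the training set is a list of `(image_path, label)` pairs and that a grayscale image is a list of pixel rows; `balanced_split` and `gray_to_rgb` are hypothetical helpers, not Alteryx functions. Downsampling every label to the size of the smallest one is only one balancing strategy (oversampling or class weights are alternatives).

```python
import random
from collections import defaultdict


def balanced_split(samples, test_fraction=0.2, seed=0):
    """Split (image_path, label) pairs into train/test lists with equal counts per label.

    Every label is randomly downsampled to the size of the smallest label,
    then each label is split with the same train/test ratio.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # seeded so the split is reproducible
    per_label = min(len(paths) for paths in by_label.values())
    n_test = int(per_label * test_fraction)

    train, test = [], []
    for label in sorted(by_label):
        paths = list(by_label[label])
        rng.shuffle(paths)
        kept = paths[:per_label]  # drop surplus images of over-represented labels
        test.extend((p, label) for p in kept[:n_test])
        train.extend((p, label) for p in kept[n_test:])
    return train, test


def gray_to_rgb(image):
    """Copy a single-channel image (rows of pixel values) into three identical RGB channels."""
    return [[(v, v, v) for v in row] for row in image]
```

Replicating the single gray channel three times is a common way to feed grayscale data such as MNIST into models that expect RGB input.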

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi Alteryx User and Alteryx Dev team,

I have seen a number of posts from the community asking for a way to calculate NetWorkDays (similar to Excel's NETWORKDAYS, which calculates the number of days between two dates, excluding weekends and holidays).

Although we could build a macro for it, the performance is not ideal, especially when the dataset is huge and/or the dates are far apart, because there is currently no built-in function in Alteryx. Alteryx has to expand the date range day by day and check whether each day is a weekend or a holiday. It would be an excellent idea to build a NetworkDays function to spare everyone around the world this hassle.

We look forward to seeing this idea taken forward.
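The cost described above comes from generating one row per calendar day; counting whole weeks arithmetically avoids that expansion. A minimal Python sketch of NETWORKDAYS-style logic, assuming Monday to Friday working days, an inclusive start and end date, and a caller-supplied holiday list; `networkdays` is a hypothetical name, not an existing Alteryx function.

```python
from datetime import date


def networkdays(start: date, end: date, holidays=()) -> int:
    """Count working days (Mon-Fri) from start to end inclusive, like Excel's NETWORKDAYS.

    Whole weeks are counted arithmetically instead of expanding every date,
    so the work does not grow with the length of the range (apart from holidays).
    """
    if start > end:
        # Excel returns a negative count when the dates are reversed.
        return -networkdays(end, start, holidays)
    total_days = (end - start).days + 1
    full_weeks, remainder = divmod(total_days, 7)
    count = full_weeks * 5  # every full week contributes five weekdays
    # The leftover days start on the same weekday as `start` (Monday == 0).
    first_weekday = start.weekday()
    for offset in range(remainder):
        if (first_weekday + offset) % 7 < 5:
            count += 1
    # Subtract distinct holidays that fall on a weekday inside the range.
    count -= sum(1 for h in set(holidays) if start <= h <= end and h.weekday() < 5)
    return count
```

For example, `networkdays(date(2024, 1, 1), date(2024, 1, 31))` counts the 23 weekdays in January 2024; passing `holidays=[date(2024, 1, 1)]` brings it to 22.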

Thanks

Eric

Please update the Publish to Tableau Server connector tool to support Tableau's Ask Data feature. The data source must be recognized as an extract on Tableau Server for the Ask Data feature to work. Currently, all data sources published using version 2.0 of the connector tool are recognized as live data sources. The workaround is cumbersome and requires multiple copies of data sources to be created and managed.

Microsoft Office provides a facility in all its apps to make the loading of frequently used files a breeze. In the FILE OPEN function the user can "PIN" a previously opened file so that it is always easy to find and load. This would make it easier to manage and retrieve Designer files.

This is what PINNING looks like in Excel

Connecting to Azure SQL Database with Azure AD, including Multi-Factor Authentication, is a crucial feature nowadays. The tool should be configurable through Interface tools so that we can change the database within the same Azure SQL Database server.

There is a workaround using ODBC, but it does not support credential caching, which makes it problematic to use: the credential prompt appears every time we run the workflow. ODBC also requires a separate DSN for every database on the same server.

To make it easy for users, there should be a native connector for this feature. The user experience should be as easy as it is in the Azure Data Lake connector.

Alteryx Server was recently updated to allow TLS-mediated connections to the MongoDB persistence layer. This allowed us to switch from the embedded MongoDB to a highly available MongoDB Atlas cluster. To our surprise, after the switch, when we went to edit the workflows that use the persistence layer's data (Server Usage Report, etc.) to point them at the new Atlas cluster, we found that the MongoDB Input tool does not support TLS connections. This absolutely needs to be changed. Due to organizational constraints, Atlas is our only option for a highly available persistence layer, so we absolutely have to have TLS support in the MongoDB Input tool. There is no other way for us to natively query our Server persistence layer in Designer. Please bring the MongoDB Input tool into alignment with the MongoDB connections that are supported by Alteryx Server.

I think the undo/redo capabilities in Alteryx could be greatly improved. Here is an idea that I think would be beneficial...

I'd like to see exactly which tools are affected by my undo/redo actions. An idea was suggested a couple of years ago to move your location on the canvas, but that was not added to the roadmap. Instead, could the tool ID be added to the undo menu so that it is obvious which tool each line refers to?

This is the current debug menu that shows your previous actions:

When a tool is created, its ID is displayed in this menu, but the ID is not shown when a change is made to an existing tool. My suggestion is that the menu would say:

4. Change Sort (3) Properties

This same change should be made in the Edit dropdown menu.

Requesting a reduced-cost, read-only license so that additional users in our organization can review workflows directly for UAT and control testing. Currently, the only people who can see the details of Alteryx workflows directly are those with a full Designer license or a temporary trial license. In our Alteryx control structure, additional reviewers who confirm the workflow do not have licenses, which requires copious screenshots and/or meetings with our licensed designers to walk through the flows step by step. It would be much more efficient to provide a license that lets people click through the workflows themselves, potentially adding comments and annotations, but without the ability to make changes. This would be much more cost-efficient for our organization and allow for better workflow review and control.

Hello all,

HDFS (Hadoop Distributed File System) connections are widely used to load data efficiently into Hadoop for Hive, Spark, or Impala. However, HDFS is not compatible with the new DCM (Data Connection Manager).

Best regards,

Simon

Hello!

I am making this idea request in response to this question:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Is-it-possible-to-enable-Performance-P...

Currently, one of my favourite settings to enable in Alteryx is performance profiling: I get to see exactly how much time is spent at each step, and it's a quick reminder to double-check the slow tools for optimisation. However, I have to enable it on every new workflow I open, because the setting only applies to the current workflow, and it can be frustrating to execute a large workflow only to realise, after waiting for it to run, that the setting was not enabled.

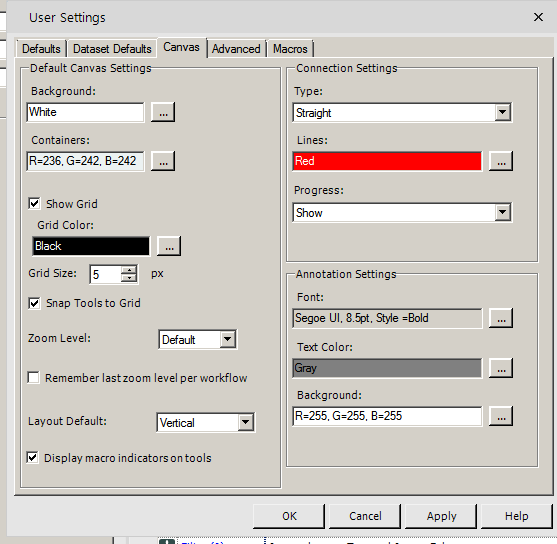

What I'd like to propose is an extra set of settings on the Defaults tab of User Settings, which currently looks like this:

To something like:

This would simply enable these settings by default whenever a new workflow is created.

Let me know what you think! I think a couple of the other settings in there could see use too, especially as the AMP engine develops, and for those who want to see all macro messages, for example.

Cheers,

TheOC

Apologies if this has been suggested already - did a search and didn't see anything similar.

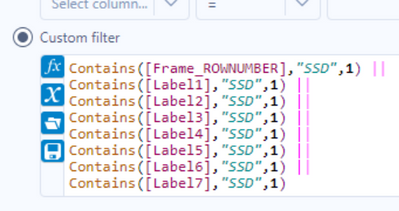

This is a quality-of-life/UX idea. The search functionality in the results pane essentially does a 'contains' search on all of the columns (see the screenshots below for the filter inserted by the 'Apply data manipulations' button). As I build workflows and profile the data, it would be helpful if I could click one or more columns and limit the search bar to just those fields.

Right now, depending on the dataset, the search can return rows only because the search term appears in columns that aren't relevant. As a workaround, I could add Select tools to limit the columns or write more robust filters in a Filter tool, but having this built in would be very helpful.
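The difference between the current behaviour and the proposal is just which columns the 'contains' check runs over. A minimal Python sketch, assuming rows are dictionaries of column name to value and that the search is case-insensitive by default (the pane's actual case handling is an assumption); `search_rows` is a hypothetical helper.

```python
def search_rows(rows, term, columns=None, case_sensitive=False):
    """Return rows where any of the chosen columns contains `term`.

    rows: list of dicts mapping column name -> value.
    columns: column names to search; None searches every column,
    which mirrors a search over all columns.
    """
    needle = term if case_sensitive else term.lower()
    matches = []
    for row in rows:
        fields = row.keys() if columns is None else columns
        for name in fields:
            value = row.get(name)
            if value is None:  # skip Null values
                continue
            text = str(value) if case_sensitive else str(value).lower()
            if needle in text:
                matches.append(row)
                break  # one matching column is enough to keep the row
    return matches
```

Passing `columns=["City"]` would skip a row that only mentions the term in an unrelated notes column.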

Cleanse Macro

Given a choice between the delivered macro and the CReW macro, I’ll choose the CReW macro for both speed and functionality. Wikipedia says, “Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data.” If Alteryx were to convert the macro to a true tool, here is my feature request list:

Performance:

- AMP compatible – Fast!

- Faster than the CReW macro for deleting empty fields/rows

- Reduce the time it takes to load the tool (current macro versions are slow); HTML is faster.

Feature Enhancement:

- Allow selection of fields based on data type

- Include incoming/outgoing SELECT functionality

- Allow for PREFIX functionality (like multi-field formula), but NOT default

- Read incoming metadata to color-code fields and indicate where potential problems exist (e.g. NULL, whitespace); this is currently part of Browse Everywhere

- Allow Nulls to be converted to 0/blank, or 0/blank to be converted to Null

- When removing punctuation, allow exceptions (e.g. the numeric set: minus sign, comma, and period).

- Include HTML tag removal

- Support internationalization (character sets)

Going the extra mile:

- Display, or optionally output, cleanup metrics. How dirty was my data? Potentially, allow an ERROR to stop the workflow if garbage is detected.

- Optional: Detect outliers in numeric data. I’ve got an outlier detection macro that we can review, but while you are passing all of the data for numeric values, explaining or tagging outliers would be useful. Could be a box-whisker on numeric values maybe?

- Make outliers actionable:
  - Identify in data (new field indicator)
  - Remove
  - Modify/Impute

- Test/preview against metadata (pre-run): see what the incoming/outgoing results would be on all of the metadata before I run the workflow.

- camelCase: https://en.wikipedia.org/wiki/Camel_case

- Identify/Replace unknown values (e.g. N/A, Not Applicable, #) with Null() or other?

- Identify/Remove duplicate values within a cell

- See also: https://en.wikipedia.org/wiki/Data_cleansing

- Option to point to a “personal” dictionary for spelling or validation

- Provide “smart” annotation on tool

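Several of the requested options are small, well-defined transformations. The sketch below shows plain-Python versions of a few of them, assuming values arrive as Python strings or numbers and that Alteryx Null maps to `None`; every function name and the default unknown-token list are hypothetical illustrations, not the Cleanse tool's behaviour. The outlier check uses the box-and-whisker (1.5 × IQR) rule with `statistics.quantiles`' default method, so quartiles may differ slightly from other tools.

```python
import re
import string
from statistics import quantiles

UNKNOWN_TOKENS = {"n/a", "not applicable", "#"}  # hypothetical default list


def strip_html(text):
    """Remove HTML tags with a simple regex (a rough approach, not a full HTML parser)."""
    return re.sub(r"<[^>]+>", "", text)


def remove_punctuation(text, keep="-,."):
    """Drop ASCII punctuation except the characters listed in `keep`."""
    drop = "".join(ch for ch in string.punctuation if ch not in keep)
    return text.translate(str.maketrans("", "", drop))


def unknown_to_null(value, tokens=UNKNOWN_TOKENS):
    """Map placeholder strings such as 'N/A' to None (Null)."""
    if isinstance(value, str) and value.strip().lower() in tokens:
        return None
    return value


def null_to_zero(value):
    """Convert Null to 0."""
    return 0 if value is None else value


def zero_or_blank_to_null(value):
    """Convert 0 or an empty string to Null."""
    return None if value in (0, "") else value


def dedupe_cell(text, sep=","):
    """Remove repeated values inside one delimited cell, keeping first occurrences."""
    parts = [p.strip() for p in text.split(sep)]
    return sep.join(dict.fromkeys(parts))


def iqr_outliers(values, k=1.5):
    """Flag values outside the fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]
```

Returning a list of flags rather than filtering keeps the "identify, remove, or impute" choice with the caller.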

Idea:

An Alteryx version for Mac OS X sounds like a nice idea, although there are options such as using Boot Camp with Windows 7/8 or some virtualisation software, as mentioned in a community post here.

Rationale 1 (Competitors do it):

First of all, there is no need to neglect the customer segment that uses Macs.

- Rapidminer Studio comes with a dedicated OS X version,

- Knime has Mac OS X support

- Weka has Mac OS X support as well

- SPSS Modeler is Windows only but SPSS Stats is Mac OS X compatible.

SAS seems to have been compatible in the last decade, but they dropped it. SAS is no longer OS X compatible, but with the "SAS OnDemand" version Mac users can still easily get hands-on experience.

Rationale 2:

The Mac Pro Beast has 7.2 TFlops of computing power with the help of dual ATI graphics cards.

It would be awesome to install Alteryx on one...

Hi,

With multiple workflows open, I'd like to be able to grab one of the workflow tabs and drag it out onto the desktop. This would open a new Alteryx window containing the workflow that was pulled out, just like when you have multiple tabs open in Internet Explorer: if you drag a tab out and drop it on the desktop, another IE window opens with the tab you dragged out.

This would be handy because I often want to copy/paste tools, formulas, etc., and it would be nice to do that without flipping from one tab to another.

I know I can right-click and open another Alteryx window, but when I open several workflows, they all open in the same one.

Thanks,

Brad

When you have a "reminder"/"notification", there needs to be an option to permanently ignore the update.

Some updates only let you ignore or postpone the reminder for as little as 7 days. There should absolutely be options for longer time frames, including a permanent "do not display/remind me about this update again" option.

Another reminder is fine when there is a new update, but don't repeatedly show the notice for the same system/version/dataset update.

There are times companies don't provide updates for a year or more. You shouldn't have to keep dismissing update reminders/notices when you don't intend to update until maybe the next version or a year from now.
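The behaviour requested above amounts to remembering, per version, what the user chose. A minimal sketch in Python, assuming versions can be compared as tuples such as `(2023, 1)`; `ReminderState` and `should_notify` are hypothetical names that only illustrate the proposed rules, not how Designer stores its notification settings.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple

Version = Tuple[int, ...]


@dataclass
class ReminderState:
    """The user's last response to an update notice (hypothetical model)."""
    ignored_version: Optional[Version] = None   # "never remind me about this update"
    snoozed_version: Optional[Version] = None   # version the snooze was set for
    snoozed_until: Optional[date] = None        # snooze end date; any length allowed


def should_notify(latest: Version, state: ReminderState, today: date) -> bool:
    """Return True if an update notice for `latest` should be shown on `today`."""
    # A permanent ignore silences this version and anything older,
    # but a newer release still triggers a fresh notice.
    if state.ignored_version is not None and latest <= state.ignored_version:
        return False
    # A snooze only applies to the version it was set for.
    if (
        state.snoozed_version == latest
        and state.snoozed_until is not None
        and today < state.snoozed_until
    ):
        return False
    return True
```

Tying both the ignore and the snooze to a version is what lets a genuinely new release through while the same update stays quiet.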

Remove the constant update notification.

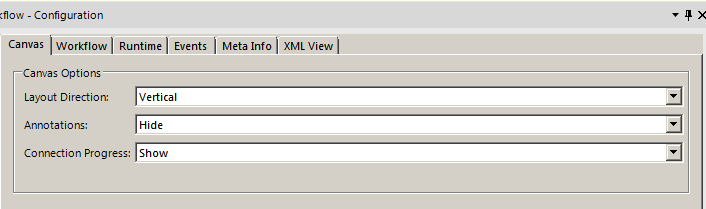

The canvas has 3 options as demonstrated by exhibit A:

The user settings can change 2 of the 3 defaults, as demonstrated in exhibit B: the default layout and the connection progress setting can both be set for all new workflows.

I would therefore propose adding a user setting for the annotation option so that I can set its default to Hide.

It would be extremely useful to quickly find which of my many workflows feed other workflows or reports.

A quick and easy way to do this would be to export the dependencies of a list of workflows in a spreadsheet format. That way users could create their own mapping by linking outputs of one workflow, to inputs of another.

Looking at the simple example below, the Customers workflow would feed the Market workflow.

| Workflow | Dependency | Type |
|---|---|---|
| Customers | SQL Table 1 | Input |
| Customers | SQL Table 2 | Input |
| Customers | Excel File 1 | Input |
| Customers | Excel File 2 | Input |
| Customers | Excel File 3 | Output |
| Market | Excel File 3 | Input |
| Market | SQL Table 3 | Output |
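Once such an export exists, linking outputs to inputs is a simple join on the Dependency column. A minimal Python sketch, assuming the export has exactly the three columns in the table above and that identical dependency names refer to the same file or table; `workflow_feeds` is a hypothetical helper.

```python
from collections import defaultdict


def workflow_feeds(rows):
    """Return sorted (upstream, downstream) pairs where one workflow's Output is another's Input.

    rows: iterable of (workflow, dependency, type) tuples, type being "Input" or "Output".
    """
    producers = defaultdict(set)  # dependency -> workflows that write it
    consumers = defaultdict(set)  # dependency -> workflows that read it
    for workflow, dependency, kind in rows:
        if kind == "Output":
            producers[dependency].add(workflow)
        elif kind == "Input":
            consumers[dependency].add(workflow)

    links = set()
    for dependency, upstream in producers.items():
        for up in upstream:
            for down in consumers.get(dependency, ()):
                if up != down:  # ignore a workflow reading its own output
                    links.add((up, down))
    return sorted(links)
```

Applied to the seven rows in the table, this returns `[("Customers", "Market")]`, because Excel File 3 is written by Customers and read by Market.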

It would be CRAZY AWESOME if we could get a report like this for all scheduled workflows in the scheduler.

To help add tools quickly, it would be useful to have some form of quick keys, or perhaps a combination (enhancement) of the search bar and the right-click menu.

So here's the pic and a 1,000 words

And here's the blurb

The idea is that pressing a key while the mouse is over the canvas would display the search bar at the mouse cursor position. Once you've selected the tool you want, pressing Return [+Shift] would add it to the canvas either:

- In a dragged (mouse down) state to help for final position and automated connections and then a final left-click to add to canvas or,

- Just add it at that position.

This would also speed up adding tools, as you could include things like 'Recent' or 'Favorites'. I grant that you could just incorporate these into the existing search dialog, but this would save a bit of eye movement. Don't get me wrong, the search bar is great, but it's all the dragging and dropping that can slow things down.

I feel like I must be missing something, but I saw a similar suggestion for TDE outputs, so maybe this really doesn't exist yet. We sometimes add descriptions to fields we create, and some inputs come with descriptions, but we can't seem to get them into the final database using the Output tool. Could there be a checkbox to persist the metadata along with the data when writing to a database?

Hello Team,

Currently, in the Select tool, we have to scroll up or down to see the list of fields, so a user who wants to change a data type has to scroll through the list to find the field. For example, I work with mid-size data that sometimes contains 300+ fields; if I need to change a data type, I have to find the field by scrolling up or down.

The idea is to add a search bar under Field. This would be a great help to everyone: anyone looking for a specific field could just type its name in the search bar and make changes quickly. The Select tool is important, and we spend a lot of time in it while building workflows.

Thank you,

Mayank

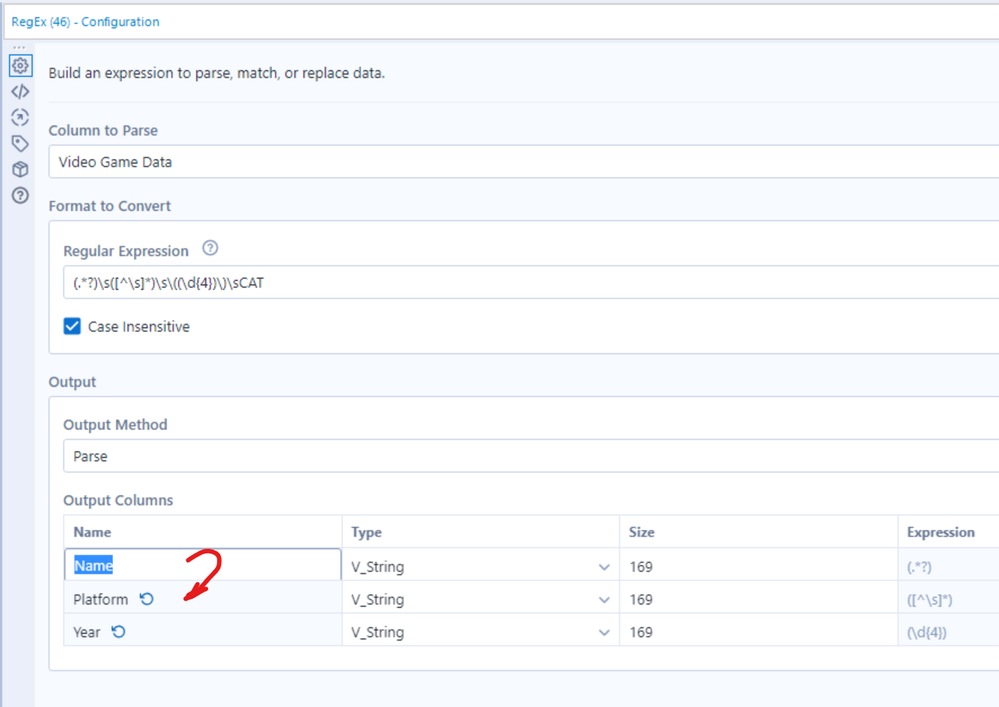

When entering a number of column names in the RegEx parse mode - please can you allow either Enter or down-arrow to move down to the next cell (standard windows convention)?

Currently, Enter just exits edit mode, and down-arrow does nothing.

cc: @Hollingsworth

