Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
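To make the second request concrete, here is a minimal Python sketch of a label-balanced split, outside Alteryx. It assumes the training data is a list of `(path, label)` pairs and that "equivalent number of images" means a fixed count per label in the training set, with everything else going to a holdout set; `balanced_split` and `per_label_train` are hypothetical names, not part of the Image Recognition Tool.

```python
import random
from collections import defaultdict


def balanced_split(samples, per_label_train, seed=0):
    """Split (path, label) pairs so every label gets the same number of training images.

    Hypothetical helper. Labels with fewer than per_label_train images raise
    ValueError instead of silently producing an unbalanced training set.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, holdout = [], []
    for label, paths in sorted(by_label.items()):
        if len(paths) < per_label_train:
            raise ValueError(f"label {label!r} has only {len(paths)} images")
        shuffled = paths[:]
        rng.shuffle(shuffled)
        train += [(p, label) for p in shuffled[:per_label_train]]
        holdout += [(p, label) for p in shuffled[per_label_train:]]
    return train, holdout
```

Raising on under-represented labels is a design choice; a real tool might instead downsample every label to the size of the smallest one.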

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I have reviewed a number of batch macros that work well for mirroring Excel's NETWORKDAYS calculation, but it would be great to add an interval type for "weekdays" to the DateTimeDiff function, i.e.:

DateTimeDiff([Date01], [Date02], "WorkDays"), where any Saturday or Sunday between the dates would be excluded.
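To pin down the semantics being requested, here is a minimal Python sketch of a weekday count. It assumes Excel-style NETWORKDAYS behavior (both endpoints counted, no holiday list, a negative result when the dates are reversed); how an Alteryx "WorkDays" interval would order its two arguments or treat the endpoints is not specified in the request, and `network_days` is a hypothetical name.

```python
from datetime import date, timedelta


def network_days(start: date, end: date) -> int:
    """Count Monday-Friday dates from start to end, both inclusive.

    Hypothetical helper mirroring NETWORKDAYS without holidays. If start is
    after end, the count is returned as a negative number.
    """
    if start > end:
        return -network_days(end, start)
    total_days = (end - start).days + 1
    full_weeks, remainder = divmod(total_days, 7)
    count = full_weeks * 5  # every full 7-day block holds exactly 5 weekdays
    # Check the leftover (< 7) days one by one; weekday() is 0=Mon ... 6=Sun.
    for offset in range(remainder):
        if (start + timedelta(days=full_weeks * 7 + offset)).weekday() < 5:
            count += 1
    return count
```

For example, `network_days(date(2024, 1, 1), date(2024, 1, 12))` covers two Monday-to-Friday weeks and returns 10.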

Hi!

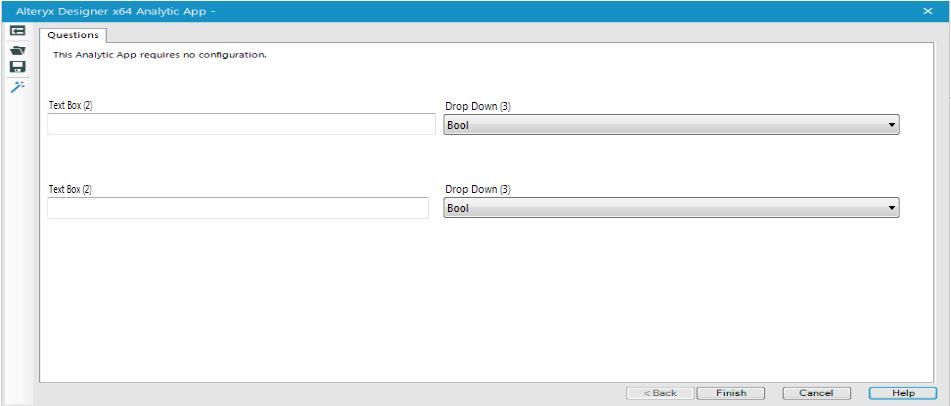

For an improved presentation of the GUI elements (Interface tools), for example in an Analytic App:

It would be great if the Interface tools could also be positioned side by side, instead of only one above the other.

Best regards

Mathias

Hi

While the Download tool does a great job, there are instances where it fails to connect to a server. In these cases, there is no download header information we can use to determine whether the connection failed.

Currently, the tool outputs a failure message to the Results window when such a failure occurs.

Having the 'failed to connect to server' message come into the workflow in real time would allow an iterative macro to retry.

Thanks

Gavin
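The pattern being asked for, where a connection failure arrives as data that a loop can act on, can be sketched in Python outside Alteryx. This is a minimal illustration, assuming a caller-supplied `fetch` callable (in practice it might wrap `urllib.request.urlopen(url, timeout=10)`); `fetch_with_retry` and its parameters are hypothetical and say nothing about how the Download tool works internally.

```python
import time
from typing import Callable, Optional, Tuple


def fetch_with_retry(
    fetch: Callable[[], bytes], attempts: int = 3, delay_s: float = 1.0
) -> Tuple[Optional[bytes], Optional[str]]:
    """Call fetch() up to `attempts` times (hypothetical helper).

    Returns (body, None) on success, or (None, error_message) once every
    attempt has failed, so the failure is a value the caller can route on
    rather than only a log message.
    """
    last_error: Optional[str] = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch(), None
        except OSError as exc:  # URLError, ConnectionError and socket timeouts subclass OSError
            last_error = f"attempt {attempt}: {exc}"
            if attempt < attempts:
                time.sleep(delay_s)
    return None, last_error
```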

It would be nice to have a function similar to IsEmpty() that would also return true if the string contained only spaces. Of course, the simple workaround is to use Trim() inside IsEmpty(). I'm just trying to save a few keystrokes and remove a failure mode if you forget to Trim()...

ken
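The proposed behavior is small enough to state as a Python sketch. `is_blank` is a hypothetical name used only for illustration; note that Python's `str.strip()` removes all whitespace (tabs and newlines included), which may be broader than what a space-only check in Alteryx would do.

```python
from typing import Optional


def is_blank(value: Optional[str]) -> bool:
    """Return True for None, an empty string, or a whitespace-only string (hypothetical helper)."""
    # Same idea as the Trim()-inside-IsEmpty() workaround, folded into one call.
    return value is None or value.strip() == ""
```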

(Not sure I have posted this in the correct spot.)

Hi,

I get a lot of ad hoc requests where the instructions are in the email itself. As I'm working through the request and creating the new workflow, the requester will mention "column C" or "column XY", but I'm viewing the data in Alteryx, where column letters don't show. So I have to open the Excel file, find the column, get its name, and go back to Alteryx to locate the column I need. Then I run the module but get an error or no results because I forgot to close the Excel file (the module is reading the same file I just opened to check which column I needed).

It would be really cool to have an option in the Input tool to show the Excel column letters and row numbers (e.g., columns A, B, C, D going left to right, and rows 1, 2, 3 going top to bottom). This would only be a visual aid, not added to the data (because then you would lose the actual column names). If this can be done, it would be equally useful in the Browse tool view. Have a great day!
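The mapping such an option would display is Excel's bijective base-26 column lettering. A minimal Python sketch of the conversion in both directions, assuming the sheet's first column becomes the first field and no columns are skipped on input; both function names are hypothetical.

```python
def column_letters_to_index(letters: str) -> int:
    """Convert Excel-style column letters ("A", "Z", "AA", "XY") to a 1-based index."""
    if not letters:
        raise ValueError("empty column reference")
    index = 0
    for ch in letters.upper():
        if not "A" <= ch <= "Z":
            raise ValueError(f"not a column letter: {ch!r}")
        # Bijective base 26: A=1 ... Z=26, there is no zero digit.
        index = index * 26 + (ord(ch) - ord("A") + 1)
    return index


def index_to_column_letters(index: int) -> str:
    """Convert a 1-based column index back to Excel-style letters."""
    if index < 1:
        raise ValueError("index must be >= 1")
    letters = []
    while index > 0:
        index, rem = divmod(index - 1, 26)  # shift to 0-based before each digit
        letters.append(chr(ord("A") + rem))
    return "".join(reversed(letters))
```

With this, "column XY" from an email resolves to field number 649 (`column_letters_to_index("XY")`), and `index_to_column_letters(27)` gives "AA".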

As of version 10.6, Alteryx supports reading ESRI File Geodatabases from the Input tool, but it doesn't support writing to a geodatabase. This is something we would really like to see implemented in a future version of Alteryx. Those of us working with ESRI products and/or any of the ESRI online mapping systems can do our processing in Alteryx and store large files as YXDBs, but ultimately need our outputs for display in ArcOnline to be in shapefile or geodatabase feature class format. Shapefiles have a 2 GB size limit and limits on field name length. Many of the files we work with are much larger than this and require geodatabases for storage, which are not limited by size (GDB size is unlimited, 1 TB max per feature class) and allow longer field names (160 chars). Right now, we have to write to one (or many) shapefile(s) from Alteryx, then import them into a GDB using ArcMap or ArcPy. This can be an arduous process when working with large amounts of data or multiple files.

The latest ESRI API allows both read and write access to GDBs -- is there a way we can add this to the list of valid output formats in Alteryx?

This idea is an extension of an older idea:

https://community.alteryx.com/t5/Alteryx-Product-Ideas/ESRI-File-Geodatabase/idi-p/1424

Right now, workflow logging is either on or off, and when it is on, output is directed to a single location.

I think it would be useful to have control over logging in the Runtime settings for a workflow.

Either as an enable/disable checkbox that uses the existing logging directory,

Or as a more fully featured option where the logging output folder could be specified.

When web scraping, we currently use RegEx and Text to Columns to parse raw HTML text into the appropriate format; a tool that could at least parse tables would be hugely beneficial.

This functionality exists within Qlik so it would be nice to have this replicated in Alteryx.

Obviously, we need to retain the ability to scrape raw HTML, but automatically parsing data using the <td>, <th> and <tr> tags would be nice.

On the following page there is a table showing the states and territories of the US:

As this functionality exists elsewhere it would be nice to incorporate this into Alteryx.
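As a rough illustration of what "parse data using the `<td>`, `<th>` and `<tr>` tags" involves, here is a minimal Python sketch built on the standard library's `html.parser`. It is not how Qlik or Alteryx implement table parsing; `TableParser` and `parse_tables` are hypothetical names, and the sketch assumes well-formed markup with explicit closing tags and no nested tables.

```python
from __future__ import annotations

from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect each <table> as a list of rows; each row is a list of cell strings."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so &amp; etc. become text
        self.tables: list[list[list[str]]] = []
        self._row: list[str] | None = None
        self._cell: list[str] | None = None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only text inside a <td>/<th> is kept; everything else is ignored.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def parse_tables(html: str) -> list[list[list[str]]]:
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.tables
```

Because the raw HTML is left untouched and only read, this sits alongside raw scraping rather than replacing it.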

Pet hate: when I re-enable a container, it also expands (opens up) the container...

It would be great if the default were not to open the container on re-enable...

cheers

As Tableau has continued to open more APIs with their product releases, it would be great if these could be exposed via Alteryx tools.

One that I think would make a great tool is the Tableau Document API, which allows for things like:

- Getting connection information from data sources and workbooks (Server Name, Username, Database Name, Authentication Type, Connection Type)

- Updating connection information in workbooks and data sources (Server Name, Username, Database Name)

- Getting field information from data sources and workbooks (Get all fields in a data source, Get all fields in use by certain sheets in a workbook)

For those of us who use Alteryx to automate much of our Tableau work, having an easy tool to read and write this information (instead of writing a Python script) would be beneficial.

It would be great if there were a way for the Text to Columns tool not to drop the last empty value when using Split to Rows.

For example, if I had the data:

| RecordID | String |
| --- | --- |
| 1 | 1,2,3 |
| 2 | 1,2, |
| 3 | 1,, |

Notice that each value has two commas (representing three values per cell). If I configure the tool to split into rows on the comma character, what would you expect the result to be?

Result A:

| RecordID | String |
| --- | --- |
| 1 | 1 |
| 1 | 2 |
| 1 | 3 |
| 2 | 1 |
| 2 | 2 |
| 3 | 1 |
| 3 | |

OR

Result B:

| RecordID | String |
| --- | --- |
| 1 | 1 |
| 1 | 2 |
| 1 | 3 |
| 2 | 1 |
| 2 | 2 |
| 2 | |
| 3 | 1 |
| 3 | |
| 3 | |

OR

Result C:

| RecordID | String |
| --- | --- |
| 1 | 1 |
| 1 | 2 |
| 1 | 3 |
| 2 | 1 |
| 2 | 2 |
| 3 | 1 |

I would expect Result C if I selected "Skip Empty Fields", and that is what happens when I select that option.

But if I do not want to skip empty fields, I would expect Result B; what I get instead is Result A, where the last value/field is dropped.

What would it take to get Result B as the output from the Text to Columns tool?
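For comparison, Python's `str.split` already behaves the way the post expects: it keeps every empty value, including a trailing one. The sketch below is an illustration outside Alteryx (`split_to_rows` is a hypothetical name); with `skip_empty=False` it reproduces Result B for the sample data, and with `skip_empty=True` it reproduces Result C.

```python
def split_to_rows(records, delimiter=",", skip_empty=False):
    """Yield one (record_id, value) pair per delimited value (hypothetical helper).

    str.split keeps empty values, so "1,2," yields "1", "2" and a trailing "".
    """
    for record_id, text in records:
        for value in text.split(delimiter):
            if skip_empty and value == "":
                continue
            yield record_id, value


data = [(1, "1,2,3"), (2, "1,2,"), (3, "1,,")]
result_b = list(split_to_rows(data))                   # 9 rows, empties kept
result_c = list(split_to_rows(data, skip_empty=True))  # 6 rows, empties dropped
```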

The regular Filter tool is great because I get both True and False outputs. When doing ad hoc analytics, it would be super helpful if the Date Filter tool did the same thing.

In the example below, I had to create an "IF" statement that returned a T/F value and then filter on the output of that formula.

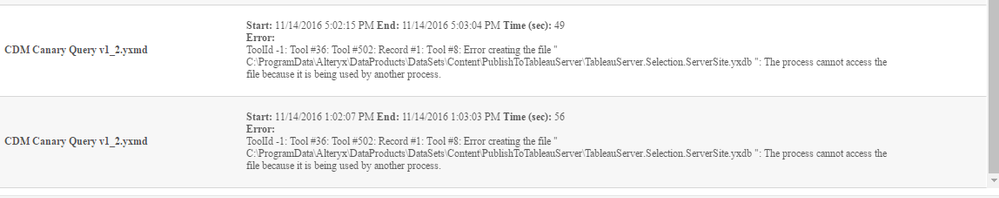
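The requested behavior amounts to partitioning records into two streams on a date range. A minimal Python sketch, assuming inclusive bounds and records stored as dictionaries with a date field; the field name `"date"` and the function `date_filter` are hypothetical.

```python
from datetime import date
from typing import Dict, Iterable, List, Tuple

Row = Dict[str, object]


def date_filter(
    rows: Iterable[Row], start: date, end: date, field: str = "date"
) -> Tuple[List[Row], List[Row]]:
    """Return (true_rows, false_rows) by whether row[field] falls within [start, end]."""
    true_rows: List[Row] = []
    false_rows: List[Row] = []
    for row in rows:
        # Every row lands in exactly one output, like the Filter tool's T and F anchors.
        target = true_rows if start <= row[field] <= end else false_rows
        target.append(row)
    return true_rows, false_rows
```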

We are running into errors on our scheduler when multiple workflows with the Publish to Tableau Server macro run at the same time. The macro writes to a local yxdb file with a fixed naming convention, and the file is locked if another workflow is using it at the same time. We would like to see whether the cached file name TableauServer.Selection.ServerSite.yxdb can somehow be made unique.

Error experience:

According to support, this is a known issue and requires a macro enhancement.
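One common way to avoid such a lock is a per-run cache file name. The Python sketch below only illustrates the naming idea; how it would be wired into the Publish to Tableau Server macro is an assumption, and `unique_cache_path` is a hypothetical helper. It builds a path but does not create the file.

```python
import os
import uuid
from pathlib import Path


def unique_cache_path(
    directory, base_name="TableauServer.Selection.ServerSite", ext=".yxdb"
) -> Path:
    """Build a per-run cache file path so concurrent runs do not share (and lock) one file."""
    # The process ID helps when debugging; the random UUID guarantees uniqueness
    # even for several calls within the same process.
    suffix = f"{os.getpid()}_{uuid.uuid4().hex}"
    return Path(directory) / f"{base_name}_{suffix}{ext}"
```

A per-run name does mean the macro would need to clean up its own cache files after publishing.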

In the previous tools The Information Lab had built for publishing to Tableau Server, an incremental TDE refresh option was available. I would like to see that included in the Publish to Tableau Server macro. We often just want to add the previous day's data to a YTD data extract without running the full data set from our data warehouse. The full set takes a long time, whereas a daily incremental (append-only) load would take a couple of minutes.

There may only be two of us, but there are at least more than one 🙂 I noticed someone else was also having difficulty getting Alteryx to produce a CSV or flat file with the header names excluded. There's a workaround that uses Dynamic Rename to pull the first row of data into the header field, but it would be simpler if there were an option not to export field names.

Thanks!
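As a point of comparison, Python's standard `csv.DictWriter` treats the header row as a separate, opt-in call (`writeheader()`), which is essentially the option being requested. A minimal sketch; `to_csv` and `include_header` are hypothetical names.

```python
import csv
import io


def to_csv(records, fieldnames, include_header=True) -> str:
    """Serialize dict records to CSV text, optionally without the header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    if include_header:
        writer.writeheader()  # the header is only written when explicitly requested
    writer.writerows(records)
    return buffer.getvalue()
```

For example, `to_csv([{"a": 1, "b": 2}], ["a", "b"], include_header=False)` returns only the data line `1,2`.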

Storing macros in a central 'library' and accessing them via the "Macro Search Path" is great for ensuring that everyone is using the same code.

It would be great if the Alteryx installation process prompted for this information. In that way, a new user would automatically have access to the macros.

I would love the ability to double-click an unnamed tab and rename it, for 'temp' workflows.

e.g., "New Workflow*" to "working on macro update"...

Reason:

- When Designer crashes, it is a huge pain to go through autosaves with "New Workflow*" names to find the one you need.

- I work on a lot of projects at once: I pull bits of code out, work on a small subset, then get distracted and have to move over to another project. With multiple windows and tabs open, it gets confusing with 10 'New Workflow' tabs open.

- It allows for better organization of open tabs: tabs could be dragged into groups and ordered, so I know where to pick up from last time.

In the same way that the In-DB tools generate SQL, might it be possible to have In-PowerBI tools that generate DAX?

Working for an education company, it would be hugely valuable for us to have the US school districts available in the spatial suite of apps, so I could take all of the US schools that are customers and map them to their school districts in a polygon map.

Writing SQL to set primary keys in Alteryx before outputting the data is tedious, right?

I propose that you incorporate my macro as a tool (referenced here: http://community.alteryx.com/t5/Data-Sources/Variables-used-in-Input-Path/m-p/38199#M2604).

It sure is a lot faster than going through this process: http://community.alteryx.com/t5/Alteryx-Knowledge-Base/Create-Database-Table-Primary-Key-in-Alteryx/...


