Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
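To illustrate the second point, here is a minimal sketch of what balancing the training data by label could look like. It is not how the Image Recognition tool works internally; the function name `balance_by_label` and the file names are hypothetical, and it simply downsamples every label to the size of the smallest one.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of images.

    `samples` is a list of (image_path, label) pairs (hypothetical layout).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # The smallest class decides how many images each label keeps.
    per_label = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], per_label):
            balanced.append((path, label))
    return balanced


samples = [
    ("cat1.png", "cat"), ("cat2.png", "cat"), ("cat3.png", "cat"),
    ("dog1.png", "dog"), ("dog2.png", "dog"),
]
balanced = balance_by_label(samples)
```

Downsampling is only one option; oversampling the smaller classes would be another, at the cost of repeated images.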

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello All,

During my trial of assisted modelling, I've enjoyed how well guided the process is. However, I've come across one area for improvement that would help users (including myself) overcome a hurdle when getting started.

When I ran my first model, I was presented with an error stating that certain fields had classes in the validation dataset that were not present in the Training dataset.

Upon investigation (and with help from the Alteryx Community!), I discovered that this was due to a step in the One Hot Encoding tool.

Basically, the default setting is for all fields to raise an error in the step that deals with values not present in the training dataset, but there is an option to ignore these values instead.
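For readers unfamiliar with the behaviour, the difference between the two settings can be sketched in plain Python. This is an illustration only, not the One Hot Encoding tool's implementation; the function names and the `handle_unknown` parameter are assumptions chosen for the example.

```python
def fit_categories(training_values):
    """Collect the classes seen in the training dataset."""
    return sorted(set(training_values))


def one_hot(value, categories, handle_unknown="error"):
    """Encode one value against the classes learned from training data."""
    if value not in categories:
        if handle_unknown == "error":
            # Mirrors the "error" setting: stop on an unseen class.
            raise ValueError(f"class {value!r} was not present in the training data")
        # Mirrors the "ignore" setting: encode the unseen class as all zeros.
        return [0] * len(categories)
    return [1 if value == c else 0 for c in categories]


categories = fit_categories(["red", "green", "red"])
encoded_known = one_hot("green", categories)
encoded_unknown = one_hot("blue", categories, handle_unknown="ignore")
```

With "ignore", a validation row containing an unseen class still flows through, but it carries no signal for that field.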

My suggestion:

Add an additional step to Assisted Modelling that gives the user the option to Ignore / Error as they see fit.

If this were to be implemented then it would remove the only barrier I could find in Assisted Modelling.

Hope this is useful and happy to provide further context / details if needed.

- Feature Request

- Machine Learning

- Tool Improvement

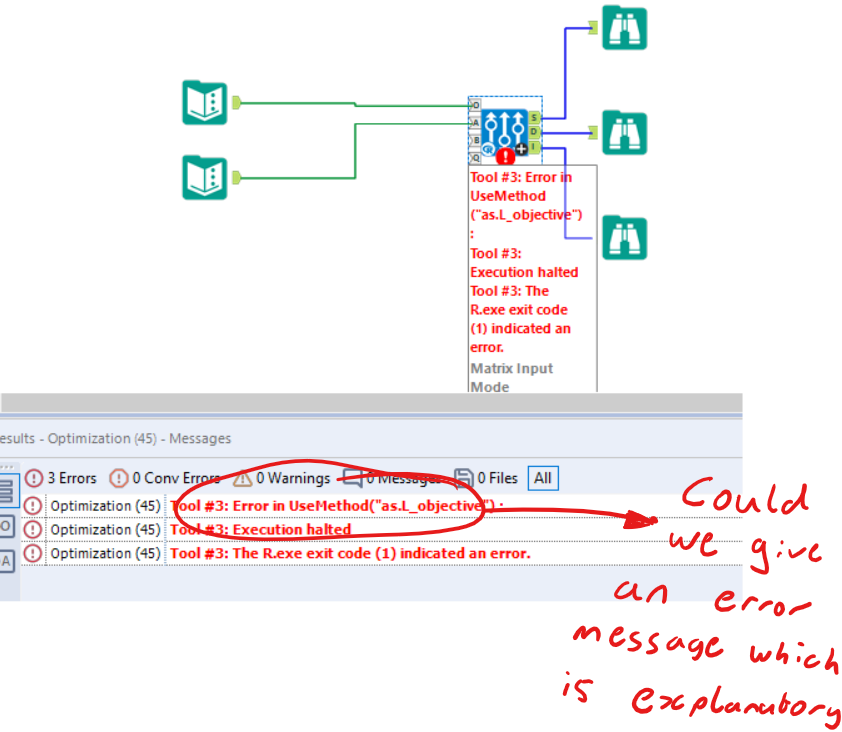

The Optimize tool is a very useful capability - and if we could improve the error messaging then it would be much more approachable for users to learn and make use of.

For example - the error message in the attached image - could we please update this to provide information about what went wrong, and how to fix it?

Many thanks

- Category Prescriptive

- Desktop Experience

- Tool Improvement

- User Experience Design

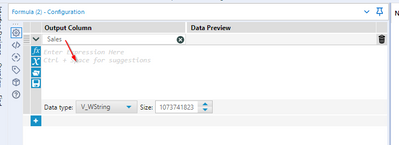

Salesforce Input tool throws a conversion error if labels are longer than 40 characters:

Could the size of the field be increased to whatever the Salesforce maximum is, or at least be made configurable from the tool configuration?

Particularly annoying is that the conversion error cannot be ignored (as seen in the Union tool) but will continue to show the result as yellow in the Scheduler. We like to keep a green board!

- Category Connectors

- Data Connectors

- Tool Improvement

Hello Dev Gurus -

The message tool is nice, but anything you want to learn about what is happening is problematic because the messages you are writing to try to understand your workflow are lost in a sea of other messages. This is especially problematic when you are trying to understand what is happening within a macro and you enable 'show all macro messages' in the runtime options.

That being said, what would really help is for messages created with the message tool to be tagged as user-created messages. Then, at message evaluation time, you get all errors / all conversion warnings / all warnings / all user-defined messages. In this way, when you write an iterative macro and are giving yourself the state of the data on a run-by-run basis, you can just go to a panel that shows you only your messages, and not the entire syslog, which is like drinking out of a fire hose.

Thank you for attending my TED talk regarding Message Tool Improvements.

- API SDK

- Category Developer

- Feature Request

- Tool Improvement

Using the Download Tool, when doing a PUT operation, the tool adds a header "Transfer-Encoding: chunked". The tool adds this silently in the background.

This caused me a huge headache, as the PUT was a file transfer to Azure Blob Storage, which was not chunked. At the time of writing, Azure Blob Storage does not support chunked transfer. Effectively, my file transfer was erroring, even though it appeared that I had configured the request correctly. I only found the problem by downloading Fiddler and sniffing the HTTPS traffic.

Azure can use SharedKey authorization. This is similar to OAuth1, in that the client (Alteryx) has to encrypt the message and the headers sent, so that the Server can perform the same encryption on receipt, and confirm that the message was not tampered with. Alteryx is effectively "tampering with the message" (benignly) by adding headers. To my mind, the Download tool should not add any headers unless it is clear it is doing so.
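The effect of a silently changed header on a signed request can be sketched as follows. This is a deliberately simplified illustration of header signing with HMAC-SHA256, not Azure's actual SharedKey string-to-sign format; the key, the header values, and the `sign_request` helper are all made up for the example.

```python
import hashlib
import hmac


def sign_request(key: bytes, method: str, headers: dict) -> str:
    """Sign the method plus the headers (simplified, hypothetical scheme)."""
    canonical = "\n".join(
        f"{name}:{value}"
        for name, value in sorted((n.lower(), v) for n, v in headers.items())
    )
    string_to_sign = f"{method}\n{canonical}"
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"example-shared-key"  # made-up key for illustration

# What the user configured, and what the client signed.
as_configured = {"Content-Length": "1024", "Content-Type": "text/csv"}
signed_by_client = sign_request(key, "PUT", as_configured)

# What actually goes on the wire if a header is swapped in silently.
as_sent = {"Transfer-Encoding": "chunked", "Content-Type": "text/csv"}
recomputed_by_server = sign_request(key, "PUT", as_sent)

signatures_match = hmac.compare_digest(signed_by_client, recomputed_by_server)
```

Here `signatures_match` is `False`: the server signs what it received, the client signed what was configured, and the two no longer agree.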

If the tool adds any headers automatically, I would suggest that they are declared somewhere. They could either be included in the Headers tab, so that they could be overwritten, or they could have an "auto-headers" tab to themselves. I think showing them in the Headers tab would be preferable from the user's viewpoint, as the user could immediately see them alongside the other headers, and override one by blanking it if they need to.

- API SDK

- Category Developer

- Tool Improvement

Model evaluation (including feature importance) is only available in assisted modelling within the machine learning tools.

It would be great if there was a tool to do this when using the expert mode so that you could see some standard performance metrics for your model(s) and view the feature importance.

- Machine Learning

- Tool Improvement

I want to check out which things downstream are receiving data from the true and false branches of this filter.

I could step through them one by one.

It would be much easier if I could simply select the tool and see directly which tools are connected to which output by colour, or line style.

- Feature Request

- General

- Tool Improvement

- User Experience Design

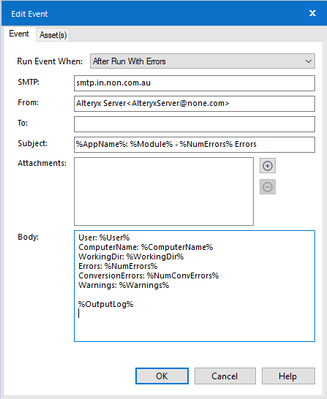

Please enable "Friendly Name" in the e-mail tool.

e.g. None <none@none.com>

When using this configuration, the workflow fails with error:

Error: Email (1): ComposerEmailInterface: Record#1 From Field contains 2 entries

"Friendly Name" does work when sending a Workflow Event e-mail, but not in the e-mail tool.

- Category Reporting

- Desktop Experience

- Tool Improvement
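As a point of comparison for the "Friendly Name" request above, the `None <none@none.com>` form is a single address with a display name, not two entries. A small sketch using Python's standard `email.utils` module shows how such a value splits into one name and one address; this says nothing about how the e-mail tool parses the From field internally.

```python
from email.utils import formataddr, parseaddr

# One From value in "Friendly Name <address>" form.
name, address = parseaddr("None <none@none.com>")

# Rebuilding the header value from its two parts.
sender = formataddr((name, address))
```

After this runs, `name` is `"None"`, `address` is `"none@none.com"`, and `sender` is the original `"None <none@none.com>"` string.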

Problem:

The Dynamic Input tool depends on a template file to correlate the input data before processing it. A mismatch in the schema throws an error, causing delays while troubleshooting.

Solution:

It would be great if this tool were enhanced so that users could input or edit text without any template file (similar to the text editor in the Macro Input tool).

I'm really liking the new assisted modelling capabilities released in 2020.2, but it should not error if the data contains spatial, blob, date, time, or datetime types.

This is essentially telling the user to add an extra step of adding a select before the assisted modelling tool and then a join after the models. I think the tool should be able to read in and through these field types (especially dates) and just not use them in any of the modelling.

An even better enhancement would be to transform date as part of the assisted modelling into something usable for the modelling (season, month, day of week, etc.)
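The kind of date transformation suggested here could look roughly like the sketch below. It is an assumption about what "usable for the modelling" might mean, not a description of assisted modelling; the `date_features` helper is hypothetical, and the season mapping assumes meteorological seasons in the northern hemisphere.

```python
from datetime import date

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]

# Assumption: meteorological seasons, northern hemisphere.
SEASONS = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}


def date_features(d: date) -> dict:
    """Turn a date into categorical features a model can use."""
    return {
        "month": d.month,
        "day_of_week": DAYS[d.weekday()],  # weekday() is 0 for Monday
        "season": SEASONS[d.month],
    }


features = date_features(date(2020, 7, 4))
```

Each derived field is categorical or a small integer, so it can be encoded like any other column rather than rejected as a date type.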

- Category Predictive

- Desktop Experience

- Machine Learning

- Tool Improvement

So I just realized that if I press F2, I am brought to the annotation window in the configuration window.

It would be great if I could press F3 to bring me back to the Configuration window! I often switch back and forth between configuring and annotating a tool.

Thanks,

J

- Setup & Configuration

- Tool Improvement

- User Experience Design

This has probably been mentioned before, but in case it hasn't....

Right now, if the Dynamic Input tool skips a file (which it often does!), it just raises a warning and continues processing. Whilst it is still useful to be able to continue processing, could an 'error if files are skipped' option be added to the tool?

Right now it is either easy to miss this is happening, or in production / on server you may want this process to be stopped.
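The requested option amounts to a strict mode on the schema check. A minimal sketch, using in-memory data instead of real files and a hypothetical `read_files` helper with an `error_if_skipped` flag, might look like this:

```python
def read_files(files, template_columns, error_if_skipped=False):
    """Combine rows from files whose columns match the template.

    `files` maps a file name to (columns, rows); a hypothetical stand-in
    for reading real files from disk.
    """
    rows, skipped = [], []
    for name, (columns, data) in files.items():
        if list(columns) != list(template_columns):
            if error_if_skipped:
                # Strict mode: stop instead of quietly skipping the file.
                raise ValueError(f"{name}: schema does not match the template")
            skipped.append(name)  # current behaviour: warn and carry on
            continue
        rows.extend(data)
    return rows, skipped


files = {
    "jan.csv": (["id", "amount"], [(1, 10.0)]),
    "feb.csv": (["id", "total"], [(2, 20.0)]),
}
rows, skipped = read_files(files, ["id", "amount"])
```

With the default, `feb.csv` ends up in `skipped`; with `error_if_skipped=True`, the same call raises and the run stops.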

Thanks,

Andy

I would like to request that the Python tool metadata either be automatically populated after the code has run once, or a simple line of code added in the tool to output the metadata. Also, the metadata needs to be cached just like all of the other tools.

As it sits now, the Python tool is nearly unusable in a larger workflow. This is because it does not save or pass metadata in a workflow. Most other tools cache temporary metadata and pass it on to the next tool in line. This allows for things like selecting columns and seeing previews before the workflow is run.

Each time an edit is made to the workflow, the workflow must be re-run to update everything downstream of the Python tool. As you can imagine, this can get tedious (unusable) in larger workflows.

Alteryx support has replied with "this is expected behavior" and "It is giving that error because Alteryx is doing a soft push for the metadata but unfortunately it is as designed."

- Category Predictive

- Desktop Experience

- Tool Improvement

The "Text To Columns" tool can both split to rows and split to columns. Its name only mentions columns, but it can also split text to rows. It would be great if it had a name like "Column Splitter", since it can split data horizontally as well as vertically, i.e. into columns or rows!!

It would sound cool !!!

- Tool Improvement

On right-clicking a "Comment", we should be able to convert it to a "Tool Container", or a "Tool Container" to a "Comment". Also, it should map all the common or similar configurations. The mapping could be as follows:

This example is from Comment to Tool Container:

Text --> Caption, up to a length of 200 or so

Shape --> NA

Font --> NA

Text Color --> Text Color

Background Color --> Fill Color and Border Color

Text Alignment --> NA

Background Image --> NA

Transparency and Margin to Default
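The mapping above can be written down as a small conversion function. This is only a sketch of the proposal: the dictionary keys reuse the property names from the list, the 200-character limit is the "or so" figure from the post, and nothing here reflects Designer's actual workflow XML.

```python
def comment_to_container(comment: dict, max_caption: int = 200) -> dict:
    """Map Comment settings onto Tool Container settings (proposed mapping)."""
    background = comment.get("Background Color")
    return {
        "Caption": comment.get("Text", "")[:max_caption],
        "Text Color": comment.get("Text Color"),
        # One Comment colour feeds both container colours.
        "Fill Color": background,
        "Border Color": background,
        # Shape, Font, Text Alignment and Background Image have no
        # equivalent and are dropped; the rest fall back to defaults.
        "Transparency": "default",
        "Margin": "default",
    }


comment = {
    "Text": "x" * 250,
    "Text Color": "#000000",
    "Background Color": "#FFFF99",
    "Font": "Arial",
}
container = comment_to_container(comment)
```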

- Tool Improvement

I personally think it would work better to tab from 'Select Column' to 'Enter Expression Here' and not the 'Functions' List as probably people who are tabbing would immediately like to start typing the formula rather than going through functions, fields, etc.

- General

- Tool Improvement

- User Experience Design

It would be really useful if Microsoft Query (aka MS Query, aka Excel Query) could be incorporated into the Formula tool. This could make workflows smaller and the tool more powerful.

- Tool Improvement

One idea that could help a lot of users who prepare dashboard solutions: the ability to attach artifacts as proof, or as references that helped in developing the dashboards.

- Documentation

- Feature Request

- Tool Improvement

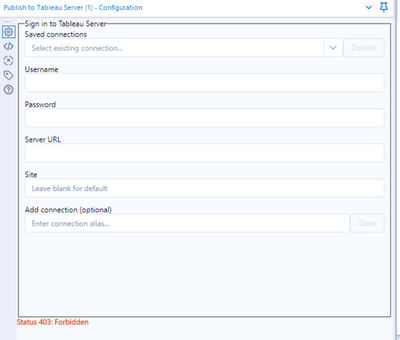

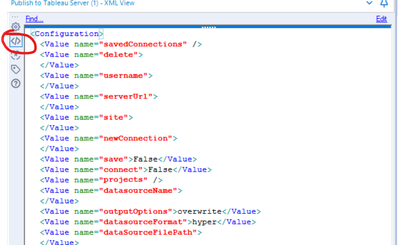

Version 2.0 of the publish to Tableau tool does not work for the initial authentication if Tableau Server has CORS enabled.

This only impacts the UI for the tool that completes the sign in to Tableau Server and provides back the list of projects, data source names, etc.

When CORS is enabled, a 403 error is received with a response of invalid CORS request.

If the XML on the tool is manually edited and the tool is run, it works fine to publish to Tableau, with or without CORS enabled.

Version 1.0.9 also works with no issues, but is not the ideal solution when users are on newer versions of Alteryx Designer.

Additionally, calls to the REST API from a local desktop using Postman or Python work with or without CORS enabled.

Based on conversations with Alteryx support, the tool was not tested with CORS enabled, thus the bundle.js file completing the authentication for the GUI must not account for Tableau Servers with CORS enabled.

For those who build solutions with Tableau Server that utilize the REST API (e.g. like custom portals) CORS must be enabled to function, but it limits the ability to use version 2.0 of the publish to Tableau tool.

- Tool Improvement

When attempting to save from Designer to Gallery the last step of the save is the validation step. The validation step, as I understand it, checks to make sure there's a valid license on Gallery. This counts as one of the processing "threads". If your organization is constrained by the throughput on Gallery, this can cause delays.

Our business only has the 2-thread service level for Alteryx Gallery. Consequently, if someone is running long, drawn out workflows on Gallery, this can create delays in saving the file from Designer to Gallery. It can also cause delays if there's a long "line" of workflows waiting to run. I presume that the save attempt is put in line along with the other jobs on Gallery that have to run. If this is the case, it could take a long time to complete the validation--tens of minutes or longer.

That window being open keeps the user from being able to use Designer at all. Very inefficient. There should be a requirement that the user has both valid licenses on Designer and on Gallery before they can run anything on Gallery. However, the validation of both of those is already accomplished by virtue of the fact that Designer checks for a license whenever the program loads. Also, if Gallery checks for the license anytime the workflow runs, then the second half of this check is already accomplished and, therefore, renders the check when saving to Gallery unnecessary. Please correct me if I'm wrong on this.

Change #1) Please remove the validation of the Gallery license when saving from Designer to Gallery.

Change #2) Please adjust Designer such that you can continue to edit workflows on the same session while other files are being saved to Gallery.

Change #3) If we can't get #1 or #2, please change the queueing process to put attempts to save to Gallery in the front of the line because they should take <1 second to validate the license while other workflows could take many minutes.

- General

- Setup & Configuration

- Tool Improvement

- User Experience Design
