Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Please add the ability to globally, within a module, forget all missing fields.

-

Tool Improvement

Please provide an option to have the caption of a tool container sent to the log/results after all items within it have been processed.

-

Tool Improvement

It would make it a lot easier to create and manage a work stream if you added the standard ability to maximize and minimize the workflow canvas and configuration and results windows.

-

Tool Improvement

In the Formula Tool, there is an Average() function which can be used to take the average/mean of multiple columns or expressions. This function treats null values as zeroes. This was a surprise/disappointment to me, as I am used to other applications and systems where nulls are ignored, for example Excel. It would be useful to have either an AverageIgnoreNulls() function or an optional extra parameter to Average() which specifies that nulls should be ignored rather than treated as zeroes.

When wishing to average a small number of columns and ignore nulls, a formula can be constructed using Iif(IsNull([Column1]),0,[Column1]) for each column to calculate the total, and Iif(IsNull([Column1]),0,1) for calculating the count. This quickly becomes unwieldy for more than 2 or 3 columns.
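To make the difference concrete, here is a minimal Python sketch of the two behaviours described above. It is not Alteryx code: the function names `average_ignore_nulls` and `average_nulls_as_zero` are hypothetical, and `None` stands in for a null value.

```python
from typing import Optional, Sequence


def average_ignore_nulls(values: Sequence[Optional[float]]) -> Optional[float]:
    """Mean of the non-null values; returns None when every value is null."""
    present = [v for v in values if v is not None]
    if not present:
        return None
    return sum(present) / len(present)


def average_nulls_as_zero(values: Sequence[Optional[float]]) -> float:
    """Mean where each null counts as 0, the behaviour the post describes."""
    return sum(0 if v is None else v for v in values) / len(values)


row = [10.0, None, 20.0]
print(average_ignore_nulls(row))   # 15.0
print(average_nulls_as_zero(row))  # 10.0
```

The first function computes the total and the count from the same filtered list, which is what the Iif(IsNull(...)) workaround does by hand for each column.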

-

Tool Improvement

The DateTimeParse function always works when the day and month have leading zeros, but a one-digit day of month or month can be harder to parse.
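As an illustration of the behaviour being asked for, the following Python sketch parses a month/day/year string whether or not the month and day are zero-padded. It says nothing about how DateTimeParse works internally; the helper name `parse_mdy` and the month/day/year layout are assumptions made for the example.

```python
import re
from datetime import date


def parse_mdy(text: str) -> date:
    """Parse month/day/year where month and day may have one or two digits."""
    match = re.fullmatch(r"\s*(\d{1,2})/(\d{1,2})/(\d{4})\s*", text)
    if match is None:
        raise ValueError(f"not a month/day/year date: {text!r}")
    month, day, year = (int(part) for part in match.groups())
    # date() raises ValueError for impossible dates such as 2/30/2021.
    return date(year, month, day)


print(parse_mdy("03/07/2021"))  # 2021-03-07
print(parse_mdy("3/7/2021"))    # 2021-03-07
```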

-

Tool Improvement

I have a 3 year license but am required to activate the license key each year. Is it possible to get the activation period matched to the duration of the purchased license? This would not only remove the need to update each year, but would also clarify the actual duration of the licence too.

-

Tool Improvement

For the purpose of debugging a workflow, I often filter just one customerID or any other ID to analyse the workflow.

With the Browse tool (Ctrl+Shift+B) you can just double-click a cell and copy its value. This is not possible in the result tool; it would be nice if it were.

Thanks,

Hans

-

Tool Improvement

It would be great if you could create default settings for the Tool Containers. As workflows become larger, I use containers a lot. But once I have 10-15 containers, I have to set all of them to have a Transparency of 1 and a margin of None. While the changes don't take long to make, it would be nice if they could be preset.

-

Tool Improvement

Field Summary is a great tool, but it would be nice to have a count and a count of non-null values on it.

-

Tool Improvement

Would save an extra select for parsing text files in correct format for dates and times.

-

Tool Improvement

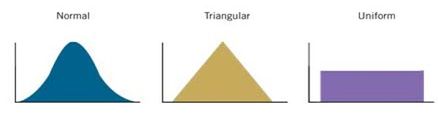

The triangular distribution is a common way to approximate various normal and beta distributions.

Alteryx doesn't have a function for this, and neither does Excel, but tools like

- SAS (randgen(x, "Triangle", c)) and

- Mathematica (TriangularDistribution[{min,max},c]) include one.

Could we add something like randtriangular(min,mode,max)?

I have attached my solution, but a built-in function would simplify the workflow...

Best
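For readers who want to see what such a function would compute, here is a minimal Python sketch of triangular sampling by inverse transform. It is not the attached solution and not an Alteryx function; the name `rand_triangular` and the optional `u` argument (a uniform draw, exposed only so the result can be checked) are assumptions made for the example.

```python
import math
import random
from typing import Optional


def rand_triangular(low: float, mode: float, high: float,
                    u: Optional[float] = None) -> float:
    """Draw from a triangular distribution using the inverse CDF."""
    if not (low <= mode <= high) or low == high:
        raise ValueError("require low <= mode <= high and low < high")
    if u is None:
        u = random.random()  # uniform draw in [0, 1)
    cut = (mode - low) / (high - low)  # CDF value at the mode
    if u < cut:
        # Rising side of the triangle.
        return low + math.sqrt(u * (high - low) * (mode - low))
    # Falling side of the triangle.
    return high - math.sqrt((1.0 - u) * (high - low) * (high - mode))


print(rand_triangular(0, 5, 10, u=0.5))  # 5.0
```

Python's standard library already offers the same distribution as `random.triangular(low, high, mode)`; the explicit formula is shown because it is what a formula-tool implementation would need.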

-

Tool Improvement

I constantly use the rand() function, but I also need:

- a normal distribution function like the one in Excel, and

- a triangular distribution function too...

Idea: can we please add normdist() and triang(min,mode,max) functions...

Best

Edit: for the normal distribution, I attached a discretized example...
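As a rough sketch of the normal-distribution half of this request, the Python below turns a uniform draw into a normal draw with the inverse CDF, and also evaluates the cumulative probability. It assumes the post wants normally distributed random values; the name `rand_normal` and its `u` argument are hypothetical, and nothing here reflects the attached example.

```python
import random
from statistics import NormalDist
from typing import Optional


def rand_normal(mean: float = 0.0, std_dev: float = 1.0,
                u: Optional[float] = None) -> float:
    """Map a uniform draw u in (0, 1) to a normal draw via the inverse CDF."""
    if u is None:
        u = random.random()
        while u == 0.0:  # inv_cdf is undefined at exactly 0
            u = random.random()
    return NormalDist(mean, std_dev).inv_cdf(u)


def norm_cdf(x: float, mean: float = 0.0, std_dev: float = 1.0) -> float:
    """Probability that a normal variable is less than or equal to x."""
    return NormalDist(mean, std_dev).cdf(x)


print(rand_normal(10, 2, u=0.5))  # the median of the distribution, i.e. 10
print(norm_cdf(10, 10, 2))        # probability of being at or below the mean
```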

-

Tool Improvement

Idea:

I know cache-related ideas have already been posted (cache macros; cache tools), but I would like it if cache were simply built into every tool, similar to the way it is on the Input Tool.

Reasoning:

During workflow development, I'll run the workflow repeatedly, and especially if there is sizeable data or an R tool involved, it can get really time consuming.

Implementation ideas:

I can see where managing cache could be tricky: in a large workflow processing a lot of data, nobody would want to maintain dozens of copies of that data. But there may be ways of just monitoring changes to the workflow in order to know whether something needs to be rebuilt: e.g. suppose I cache a Predictive Tool, and then make no changes to any tool preceding it in the workflow... the next time I run, the engine should be able to look at "cache flags" and/or "modified tool flags" to determine where it should start: basically, start at the "furthest along cache" that has no "modified tools" preceding it.

Anyway, just a thought.
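The "cache flags" and "modified tool flags" idea above can be sketched in a few lines of Python. This is only an illustration of the proposed logic, not how the Alteryx engine works: the workflow is modelled as a hypothetical acyclic mapping from each tool to its upstream tools, and all names are invented for the example.

```python
from typing import Dict, Iterable, List, Set


def reusable_caches(upstream: Dict[str, List[str]],
                    cached: Set[str], modified: Set[str]) -> Set[str]:
    """Cached tools where neither the tool nor any ancestor was modified."""
    clean: Dict[str, bool] = {}

    def is_clean(tool: str) -> bool:
        if tool not in clean:
            clean[tool] = tool not in modified and all(
                is_clean(parent) for parent in upstream.get(tool, []))
        return clean[tool]

    return {tool for tool in cached if is_clean(tool)}


def tools_to_run(upstream: Dict[str, List[str]], cached: Set[str],
                 modified: Set[str], targets: Iterable[str]) -> Set[str]:
    """Walk back from the targets, stopping at the furthest-along valid cache."""
    valid = reusable_caches(upstream, cached, modified)
    needed: Set[str] = set()
    stack = list(targets)
    while stack:
        tool = stack.pop()
        if tool in needed or tool in valid:
            continue  # already scheduled, or served from its cache
        needed.add(tool)
        stack.extend(upstream.get(tool, []))
    return needed


workflow = {"input": [], "join": ["input"],
            "predictive": ["join"], "report": ["predictive"]}
caches = {"input", "predictive"}
print(tools_to_run(workflow, caches, {"report"}, ["report"]))
print(sorted(tools_to_run(workflow, caches, {"join"}, ["report"])))
```

In the first call only the report tool reruns, because the Predictive Tool's cache is still valid. In the second call, changing the join invalidates that cache, so everything downstream of the input reruns.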

-

Engine

-

Feature Request

-

Tool Improvement

Would it be possible to change the default setting of writing to a tde output to "overwrite file" rather than the "create new file" setting? Writing to a yxdb automatically overwrites the old file, but for some reason we have to manually make that change for writing to a tde output. Can't tell you how many times I run a module and have it error out at the end because it can't create a new file when it's already been run once before!

Thanks!

-

Category Input Output

-

Data Connectors

-

Tool Improvement

There is MD5 hashing capability, using MD5_ASCII(String) and MD5_UNICODE(String), found under the string functions.

It seems possible to use these to mask sensitive data...

BUT! Using the following site, it's child's play to reverse an MD5 hash --> https://hashkiller.co.uk/md5-decrypter.aspx

I entered "password" and hashed it with MD5, giving me 5f4dcc3b5aa765d61d8327deb882cf99

The site gave me the original value in 131 ms...

It may be wise to also have:

SHA512_ASCII(String) and

SHA512_UNICODE(String)

Best

Altan Atabarut @Atabarezz
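For reference, here is a short Python sketch of what the requested functions might compute, using the standard `hashlib` and `hmac` modules. The function names mirror the post but are hypothetical, and treating the "UNICODE" variant as UTF-16LE bytes is an assumption, not documented Alteryx behaviour.

```python
import hashlib
import hmac


def md5_ascii(text: str) -> str:
    """MD5 digest of the ASCII bytes, shown only to reproduce the post's value."""
    return hashlib.md5(text.encode("ascii")).hexdigest()


def sha512_ascii(text: str) -> str:
    """SHA-512 digest of the ASCII bytes, as 128 hex characters."""
    return hashlib.sha512(text.encode("ascii")).hexdigest()


def sha512_unicode(text: str) -> str:
    """SHA-512 digest of the UTF-16LE bytes (encoding choice is an assumption)."""
    return hashlib.sha512(text.encode("utf-16-le")).hexdigest()


def keyed_sha512(text: str, secret_key: bytes) -> str:
    """HMAC-SHA-512: the digest depends on a secret key as well as the text."""
    return hmac.new(secret_key, text.encode("utf-8"), hashlib.sha512).hexdigest()


print(md5_ascii("password"))  # 5f4dcc3b5aa765d61d8327deb882cf99
print(len(sha512_ascii("password")))  # 128
```

A longer digest alone does not protect guessable inputs such as "password", since lookup tables can be built for any unkeyed hash. The keyed variant is included because mixing in a secret is one common way to make masked values harder to look up.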

-

Tool Improvement

I regularly type true or false into a formula, expecting it to work.

-

Tool Improvement

The Field Summary tool is a very useful addition for quickly creating data dictionaries and analysing data sets. However it ignores Boolean data types and seems to raise a strange Conversion Error about 'DATETIMEDIFF1: "" is not a valid DateTime' - with no indication it doesn't like Boolean field types. (Note I'm guessing this error is about the Boolean data types as there's no other indication of an issue and actual DateTime fields are making it through the tool problem free.)

Using the Field Summary tool will actually give the wrong message about the contents of files with many fields as it just ignores those of a data type it doesn't like.

The only way to get a view on all fields in the table is using the Field Info tool, which is also very useful, however it should be unnecessary to 'left join' (in the SQL sense) between Field Info and Field Summary to get a reliable overview of the file being analysed.

Therefore can the Field Summary tool be altered to at least acknowledge the existence of all data types in the file?

-

Tool Improvement

It would be great to get a random x number of records or x % of records for every grouped field in the sample tool.

Right now, the sample tool is lacking the random % feature and the random % tool is lacking the group by feature.
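The combined behaviour requested here (a random N or a random percentage, applied per group) can be sketched in Python as follows. This is an illustration only, not a description of either tool; the function name `sample_per_group` and its parameters are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Optional


def sample_per_group(records: List[Dict], key: Hashable,
                     n: Optional[int] = None,
                     fraction: Optional[float] = None,
                     seed: Optional[int] = None) -> List[Dict]:
    """Randomly keep n records, or a fraction of records, from every group."""
    if (n is None) == (fraction is None):
        raise ValueError("give exactly one of n or fraction")
    rng = random.Random(seed)  # a seed makes the sample repeatable
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)
    sampled: List[Dict] = []
    for members in groups.values():
        k = n if n is not None else round(len(members) * fraction)
        sampled.extend(rng.sample(members, min(k, len(members))))
    return sampled


rows = [{"region": "A", "id": i} for i in range(10)]
rows += [{"region": "B", "id": i} for i in range(4)]
print(len(sample_per_group(rows, "region", n=2, seed=1)))         # 4
print(len(sample_per_group(rows, "region", fraction=0.5, seed=1)))  # 7
```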

-

Tool Improvement

This is not a terribly important thing, but in the formula tool, the nitty gritty details of the Expression almost never fit into the annotation space. It would be nice if the annotation by default just contained the names of the variables being derived. So, e.g. if I'm deriving "CalcVar1", "CalcVar2", "CalcVar3" and so forth, the default annotation would just be:

This gives a much cleaner (by default) canvas. The same concept applies to the MultiRow and MultiField Formula tools, perhaps others. Just a thought and obviously not very important. Thanks.

-

Tool Improvement

I've seen several posts and questions concerning NULL dates. Is 09/31/2010 a valid date? I know that 02/29/2006 isn't valid and that 02/00/2006 isn't either, but I really don't like finding out about these in conversion warning messages.

I might suggest a function that returns True or False on the date check and let the user configure appropriate rules to rethink the attempted date prior to committing the field to the date data type.

Cheers,

Mark
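The kind of True/False check suggested above might look like the Python sketch below. It is not an existing Alteryx function; the name `is_valid_date` and the month/day/year format are assumptions taken from the examples in the post.

```python
from datetime import datetime


def is_valid_date(text: str, fmt: str = "%m/%d/%Y") -> bool:
    """Return True only when text is a real calendar date in the given format."""
    try:
        datetime.strptime(text, fmt)
    except ValueError:
        return False
    return True


for candidate in ["09/31/2010", "02/29/2006", "02/00/2006", "02/29/2008"]:
    print(candidate, is_valid_date(candidate))
# 09/31/2010 False  (September has 30 days)
# 02/29/2006 False  (2006 is not a leap year)
# 02/00/2006 False
# 02/29/2008 True
```

Because the check returns a plain boolean, a workflow could branch on it and apply its own correction rules before the field is converted to a date type.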

-

Feature Request

-

Tool Improvement
