Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

- by showing the image dimension constraints next to each of the pre-trained models,

- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

- and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it necessarily needs RGB images).

Question: do you plan to let users choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))
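The second and third suggestions can be illustrated with a minimal Python sketch. It assumes, hypothetically, that training images are listed as `(path, label)` pairs and that a grayscale image is a list of rows of pixel values; the function names are illustrative and not part of the Image Recognition tool. Labels are balanced by downsampling each one to the size of the smallest label, and grayscale is turned into RGB by repeating the single channel three times.

```python
import random
from collections import defaultdict


def balanced_split(samples, val_fraction=0.2, seed=0):
    """Split (path, label) pairs into train/validation sets so that every label
    keeps the same number of images (downsampled to the smallest label)."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_val = int(per_label * val_fraction)
    rng = random.Random(seed)  # fixed seed so the split is reproducible

    train, val = [], []
    for label in sorted(by_label):
        paths = by_label[label][:]
        rng.shuffle(paths)
        kept = paths[:per_label]  # drop surplus images of over-represented labels
        val.extend((p, label) for p in kept[:n_val])
        train.extend((p, label) for p in kept[n_val:])
    return train, val


def gray_to_rgb(image):
    """Turn a single-channel image (rows of ints) into RGB by repeating the channel."""
    return [[(v, v, v) for v in row] for row in image]


if __name__ == "__main__":
    data = [(f"cat_{i}.png", "cat") for i in range(10)] + [(f"dog_{i}.png", "dog") for i in range(5)]
    train, val = balanced_split(data)
    print(len(train), len(val))
```

Downsampling is only one way to equalize labels; oversampling the smaller labels is the usual alternative when discarding images is too costly.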


It would be awesome to have an In-Database Cross Tab option, because right now I have to stream out millions of records just to build a cross tab.
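An in-database cross tab is commonly written in SQL with conditional aggregation, so the pivot runs on the database and only the aggregated rows are streamed back. The Python sketch below generates such a query. The table and column names are hypothetical, the header values must be known up front (for example from a preceding `SELECT DISTINCT`), and the double-quote identifier quoting assumes an ANSI-compliant database.

```python
def quote_ident(name):
    """Quote an identifier ANSI-style, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value):
    """Quote a string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def crosstab_sql(table, group_by, header_field, value_field, headers, agg="SUM"):
    """Build a pivot query that turns each value in `headers` into its own column.

    `table` is inserted as-is so that it may be schema-qualified."""
    group_cols = ", ".join(quote_ident(c) for c in group_by)
    pivot_cols = [
        f"{agg}(CASE WHEN {quote_ident(header_field)} = {quote_literal(h)} "
        f"THEN {quote_ident(value_field)} END) AS {quote_ident(h)}"
        for h in headers
    ]
    return (
        f"SELECT {group_cols},\n       "
        + ",\n       ".join(pivot_cols)
        + f"\nFROM {table}\nGROUP BY {group_cols}"
    )


print(crosstab_sql("sales", ["region"], "month", "amount", ["Jan", "Feb"]))
```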

- Category In Database

- Data Connectors

Could you please enhance the Alteryx Download tool to also support SFTP connections with private key authentication? This is not currently supported, and all of our SFTP use cases rely on private keys.

- Category Connectors

- Category In Database

- Data Connectors

I noticed in the ODBC driver log that Alteryx ignores the type of database I specify. It tests every kind of database to find the right one and only THEN runs the queries that fetch the metadata.

Here is an example. I chose a Hive In-DB connection. In the Simba logs, I can find these lines:

Mar 01 11:37:21.318 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER(), APPLICATION_ID() from system.iota

Mar 01 11:37:22.863 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER as USER_NAME from SYSIBM.SYSDUMMY1

Mar 01 11:37:23.454 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from rdb$relations

Mar 01 11:37:23.546 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select first 1 dbinfo('version', 'full') from systables

Mar 01 11:37:23.707 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select #01/01/01# as AccessDate

Mar 01 11:37:23.868 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: exec sp_server_info 1

Mar 01 11:37:24.093 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select top (0) * from INFORMATION_SCHEMA.INDEXES

Mar 01 11:37:24.219 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT SERVERPROPERTY('edition')

Mar 01 11:37:24.423 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select DATABASE() as `database`, VERSION() as `version`

Mar 01 11:37:24.635 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from sys.V_$VERSION at where RowNum<2

Mar 01 11:37:25.230 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t

Mar 01 11:37:25.415 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select NAME from sqlite_master

Mar 01 11:37:25.756 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select xp_msver('CompanyName')

Mar 01 11:37:26.156 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select @@version

Mar 01 11:37:26.376 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from dbc.dbcinfo

Mar 01 11:37:26.522 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT @@VERSION;

I can understand that Alteryx tries everything when it doesn't know the type of database (e.g., the in-memory Visual Query Builder), but here I have selected the Hive database and I lose more than 5 seconds for nothing.
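The behaviour being requested can be sketched in Python: when the user has already chosen a database type, run only that type's probe query, and fall back to trying every probe only when the type is unknown. The dialect keys and the query-to-dialect assignments below are assumptions made for illustration (most queries are copied from the log above, and the Hive entry is a hypothetical choice); this is not Alteryx's actual detection table.

```python
# Hypothetical mapping from database type to a single version/metadata probe.
# Most queries come from the Simba log above; which query belongs to which
# database, and the Hive entry, are assumptions made for illustration.
PROBES = {
    "db2": "select USER as USER_NAME from SYSIBM.SYSDUMMY1",
    "oracle": "select * from sys.V_$VERSION at where RowNum<2",
    "postgresql": "select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t",
    "sqlite": "select NAME from sqlite_master",
    "teradata": "select * from dbc.dbcinfo",
    "sqlserver": "SELECT SERVERPROPERTY('edition')",
    "hive": "select current_database()",
}


def probe_queries(selected_type=None):
    """Return the probe queries to run: only the selected type's probe when it
    is known, otherwise every probe (the trial-and-error seen in the log)."""
    if selected_type in PROBES:
        return [PROBES[selected_type]]
    return list(PROBES.values())
```

With `probe_queries("hive")` only one round trip is made, instead of the sixteen seen in the log.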

- Category In Database

- Data Connectors

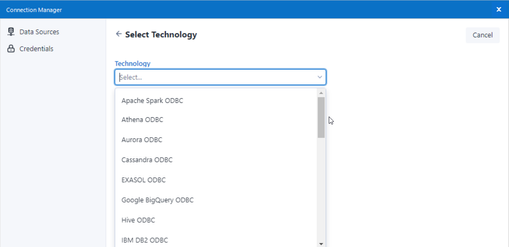

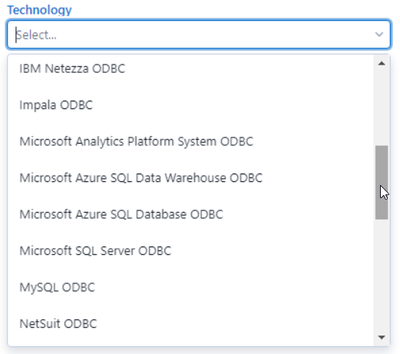

When creating a connection using DCM (for example, ODBC for SQL), the process requires an ODBC Data Source Name (see screenshot 1 below).

However, the Alias Manager (another way to make database connections) does allow DSN-free connections, which are essential for large enterprises (see screenshot 2 below).

NOTE: the connection manager screens do have another option, Quick Connect, which seems to allow DSN-free connections. However, it is not intuitive: you're asked to type in the name of the driver yourself, which seems an obvious failure point (especially since the list of all installed drivers can be read straight from the registry).

Could we please change DCM to use the same interfaces and concepts as the alias screens, so that all DCM connections can easily be created without an ODBC DSN and DSN-free connections are the default mode of operation?

Screenshot 1: DCM connection

Screenshot 2: Alias Manager connection

cc: @wesley-siu @_PavelP @ToddTarney
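For reference, a DSN-free ODBC connection names the driver directly in the connection string instead of pointing at a DSN. The Python sketch below, with hypothetical server and database names, builds such a string. On Windows it also reads the installed driver names from the `HKEY_LOCAL_MACHINE\SOFTWARE\ODBC\ODBCINST.INI\ODBC Drivers` registry key, which is where a driver drop-down could get its list. It illustrates the request and is not how DCM works internally. 32-bit drivers registered under `WOW6432Node` are not covered.

```python
import sys


def odbc_value(value):
    """Brace-quote a value containing characters that are special in ODBC
    connection strings; a closing brace inside braces is escaped by doubling it."""
    if any(ch in value for ch in ";{}="):
        return "{" + value.replace("}", "}}") + "}"
    return value


def dsn_less_connection_string(driver, **attributes):
    """Build a DSN-free ODBC connection string from a driver name and attributes."""
    parts = ["Driver={" + driver.replace("}", "}}") + "}"]
    parts += [f"{key}={odbc_value(str(val))}" for key, val in attributes.items()]
    return ";".join(parts) + ";"


def installed_odbc_drivers():
    """List installed ODBC driver names from the Windows registry (Windows only)."""
    if sys.platform != "win32":
        return []
    import winreg

    names = []
    key_path = r"SOFTWARE\ODBC\ODBCINST.INI\ODBC Drivers"
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path) as key:
        i = 0
        while True:
            try:
                name, _value, _type = winreg.EnumValue(key, i)
            except OSError:  # raised when there are no more values
                break
            names.append(name)
            i += 1
    return names


print(dsn_less_connection_string(
    "ODBC Driver 17 for SQL Server",
    Server="myserver", Database="mydb", Trusted_Connection="yes",
))
```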

- Category Connectors

- Data Connectors

Hi Alteryx community,

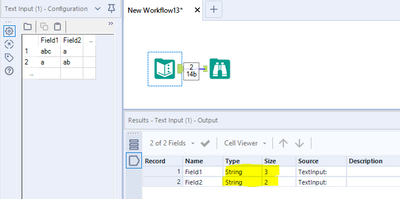

It would be really nice to have V_String/V_WString with the maximum character size as the default for text columns.

I have lost count of how many times an error turned out to be string truncation caused by the string size limit in the Text Input tool.

Thumbs up if you also lost your mind after discovering that was the cause! 😄
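Since the Text Input tool already holds every value it will output, one way to rule out truncation is to size each text column from its longest value. The Python function below is a hypothetical sketch of such a default; it assumes V_String stores Latin-1 text and V_WString stores Unicode, and it does not reflect how the Text Input tool currently chooses types.

```python
def infer_text_field(values):
    """Suggest an Alteryx-style string type and size for a text column.

    Assumption: V_String holds Latin-1 text and V_WString holds Unicode,
    so any character above code point 255 requires the wide type. The size
    is the longest value (at least 1), so nothing is truncated."""
    present = [v for v in values if v is not None]
    max_len = max((len(v) for v in present), default=1)
    needs_wide = any(ord(ch) > 255 for v in present for ch in v)
    return ("V_WString" if needs_wide else "V_String", max(max_len, 1))
```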

- Category Input Output

- Data Connectors

- Tool Improvement

Our organization requires us to change our passwords every few months, which forces a change to my Tableau Server password. How does this relate to Alteryx? Every 90 days I have to update my password in the "Publish to Tableau Server" tool for all of my workflows. This cumbersome process could be eliminated with Active Directory (AD) authentication.

If you dislike manually changing your password in every workflow that uses this tool, "star" this post!

- Category Connectors

- Data Connectors

Hello all,

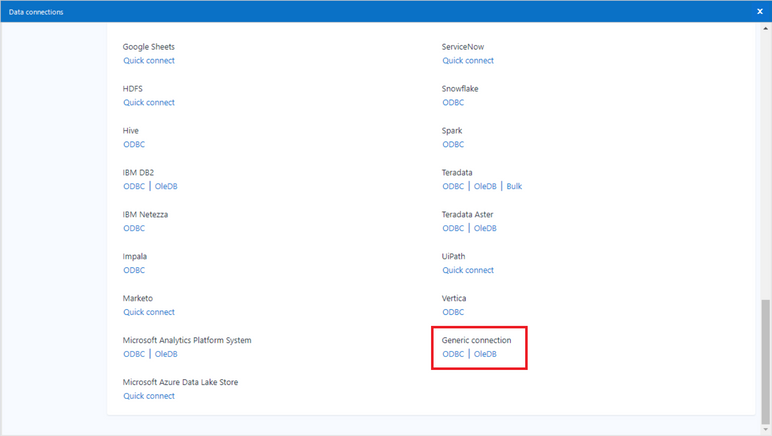

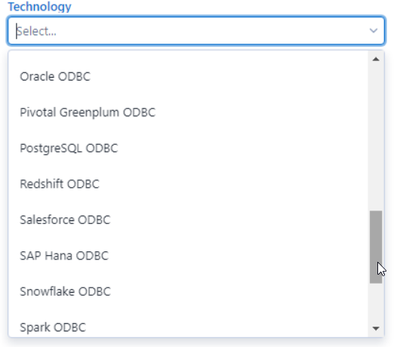

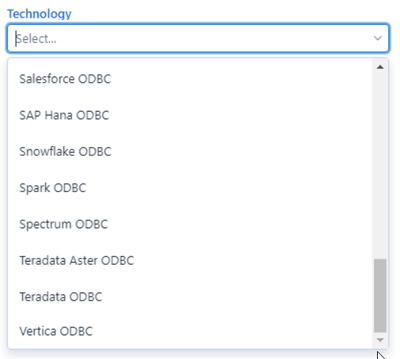

I really love the DCM feature introduced in the last two releases. However, I have noticed that the Generic ODBC connection is missing:

Classic Connection Manager:

Data Connection Manager:

Best regards,

Simon

- Category Input Output

- Data Connectors

To make the connections between Alteryx and Snowflake even more secure, we would like an easier way to connect to Snowflake with OAuth.

OAuth connections to Snowflake are very similar to the connections Alteryx already makes to O365 applications. They require:

- Tenant URL

- Client ID

- Client Secret

- Get the Authorization Code provided by the Snowflake authorization endpoint

- Give access consent (a browser pop-up will appear)

- With the Authorization Code, the Client ID and the Client Secret, make a call to retrieve the Refresh Token and the TTL information for the tokens

- Get a new Access Token every time it expires

With this, an automated workflow using OAuth between Alteryx and Snowflake becomes possible.

You can find a more detailed explanation in the attached document.
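The token handling in the steps above follows the standard OAuth 2.0 authorization code flow. The Python sketch below only builds the requests and makes no network calls. It assumes Snowflake's `/oauth/authorize` and `/oauth/token-request` endpoints under the account URL and HTTP Basic client authentication; the function names are hypothetical, and the required parameters should be checked against Snowflake's OAuth documentation before relying on it.

```python
import base64
import time
from dataclasses import dataclass
from urllib.parse import urlencode


def authorize_url(account_url, client_id, redirect_uri, state):
    """URL opened in a browser for consent; Snowflake redirects back with a code."""
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "state": state,
    })
    return f"{account_url}/oauth/authorize?{query}"


def token_request(account_url, client_id, client_secret, form):
    """Return (url, headers, body) for a POST to the token endpoint.
    The client ID and secret are sent with HTTP Basic authentication."""
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    headers = {
        "Authorization": f"Basic {credentials}",
        "Content-Type": "application/x-www-form-urlencoded",
    }
    return f"{account_url}/oauth/token-request", headers, urlencode(form)


def exchange_code(account_url, client_id, client_secret, code, redirect_uri):
    """Trade the authorization code for an access token and a refresh token."""
    return token_request(account_url, client_id, client_secret, {
        "grant_type": "authorization_code", "code": code, "redirect_uri": redirect_uri,
    })


def refresh(account_url, client_id, client_secret, refresh_token):
    """Get a new access token from the refresh token once the old one expires."""
    return token_request(account_url, client_id, client_secret, {
        "grant_type": "refresh_token", "refresh_token": refresh_token,
    })


@dataclass
class AccessToken:
    value: str
    expires_at: float  # epoch seconds, derived from the response's expires_in

    def is_expired(self, now=None, skew=60):
        """Treat the token as expired `skew` seconds early to avoid races."""
        now = time.time() if now is None else now
        return now >= self.expires_at - skew
```

An unattended workflow would keep the refresh token in secure storage and call `refresh` whenever `is_expired()` returns true.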

- Category Connectors

- Data Connectors

It would be helpful to be able to filter the results window of a Browse tool for all "Not OK" records (records with leading/trailing spaces, embedded newlines, etc.). I can already filter for null and empty values, but this would help with cleaning up data: I want to see the "dirty" data before removing leading/trailing spaces or embedded newlines, to check whether something else in the data needs to be further parsed or modified.
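The requested "Not OK" filter can be sketched as a per-value check. This Python version is an assumption about which conditions would count (leading or trailing whitespace and embedded line breaks, as listed above); the Browse tool's actual data-quality rules may differ, and the function names are hypothetical.

```python
def dirty_reasons(value):
    """Return the reasons a string value would count as 'Not OK' (empty if clean)."""
    if value is None:
        return []  # nulls are already covered by the existing filters
    reasons = []
    if value != value.lstrip():
        reasons.append("leading whitespace")
    if value != value.rstrip():
        reasons.append("trailing whitespace")
    if "\n" in value or "\r" in value:
        reasons.append("embedded newline")
    return reasons


def not_ok_rows(rows, field):
    """Keep only the records whose `field` value has at least one issue."""
    return [row for row in rows if dirty_reasons(row.get(field))]
```

Returning the reasons, rather than a plain true/false, lets the filtered view show why each record was flagged.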

- Category Input Output

- Data Connectors

Hello,

As I mentioned in this previous idea: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Generic-In-database-connection-please-stop-i...

Field mapping in the generic In-DB connection is based on Microsoft SQL Server. Given how specific SQL Server field types are, I would like this changed so that at least another database can be used. Without that, this feature makes little sense.

Best regards,

Simon
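What the request amounts to is a field-type mapping per target database instead of one fixed to SQL Server. A minimal Python sketch, assuming a hypothetical `TYPE_MAP` table that covers only a few Alteryx types; the column types chosen are common choices for each database, not what Alteryx generates.

```python
# Hypothetical per-database type mappings for a generic In-DB connection.
# Only a few Alteryx types are shown; a real mapping would cover all of them.
TYPE_MAP = {
    "sqlserver": {"Bool": "BIT", "Int64": "BIGINT", "Double": "FLOAT",
                  "DateTime": "DATETIME2", "V_WString": "NVARCHAR({size})"},
    "postgresql": {"Bool": "BOOLEAN", "Int64": "BIGINT", "Double": "DOUBLE PRECISION",
                   "DateTime": "TIMESTAMP", "V_WString": "VARCHAR({size})"},
    "oracle": {"Bool": "NUMBER(1)", "Int64": "NUMBER(19)", "Double": "BINARY_DOUBLE",
               "DateTime": "TIMESTAMP", "V_WString": "NVARCHAR2({size})"},
}


def target_type(dialect, alteryx_type, size=None):
    """Translate an Alteryx field type into the target database's column type."""
    template = TYPE_MAP[dialect][alteryx_type]
    return template.format(size=size) if "{size}" in template else template
```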

- Category In Database

- Data Connectors

95% of the time I use the Directory tool, it is only to access the FullPath field, so I immediately add a Select tool to deselect the other fields the tool returns.

Is there any chance of adding a checkbox to retrieve only FullPath?

I couldn't find a previous idea on this, but let me know if it already exists.
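Outside Designer, the same "paths only" result takes a few lines with Python's `pathlib`. This sketch (hypothetical function name) mirrors the requested checkbox by returning only absolute file paths.

```python
from pathlib import Path


def full_paths(directory, pattern="*", recursive=True):
    """Return only the full path of each matching file, like a Directory tool
    that outputs just the FullPath field."""
    root = Path(directory)
    matches = root.rglob(pattern) if recursive else root.glob(pattern)
    return sorted(str(p.resolve()) for p in matches if p.is_file())
```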

- Category Input Output

- Data Connectors

It would be great to have an option in the Output Data tool to write the workflow name to the Info properties of Excel outputs.

Maybe something like this:

That way, whenever you open an Excel file, you always have a way to find the name of the workflow that created it.

This would make things so much easier, as I often share Excel files with colleagues and customers and then need to trace them back to their workflows weeks or months later.
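Until such an option exists, the information can be added after the fact. A minimal Python sketch using the third-party `openpyxl` library, which exposes a workbook's document properties (the ones shown in Excel's Info pane) as `wb.properties`; the function name and the choice of the Description and Keywords fields are assumptions. openpyxl does not read every item in an Excel file, so some content (such as shapes) can be lost when a file is re-saved.

```python
from openpyxl import Workbook, load_workbook


def stamp_workflow(path, workflow_path):
    """Record the producing workflow in the workbook's document properties."""
    wb = load_workbook(path)
    wb.properties.description = f"Created by Alteryx workflow: {workflow_path}"
    wb.properties.keywords = workflow_path
    wb.save(path)
```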

- Category Input Output

- Data Connectors

Alteryx can connect to data sources using fat clients and ODBC, but not JDBC. Adding JDBC support to the product could remove the need to install fat clients.

- Category In Database

- Category Input Output

- Category Reporting

- Data Connectors

Hi GUI Gang

At the moment, I have a nicely formatted XLS with corporate branding, logos, filled cells, borders, etc. The data from the Alteryx output needs to start in cell B6. I have tried outputting to this named range, but Alteryx destroys all the Excel cell formatting in the data block.

As a workaround suggested on the forums, many Alteryx users write to a hidden "Output" tab and then use formulas such as =Output!A1 in the formatted sheet. This looks messy to users, who then go hunting for the hidden tab. Personally, I end up writing the workflow output to a temporary CSV file, opening that in Excel, selecting all, and pasting the values into the pretty Excel file.

This is fine for one file, but I need to split the output report block by a country field and do this hundreds of times at each month end.

Could we please have an output tool that does the same as my workaround: output directly from a workflow to a range in Excel without destroying the workbook's formatting?

Jay
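The workaround can be automated outside Designer while the feature is missing. This Python sketch uses the third-party `openpyxl` library (an assumption, not part of Alteryx) to open a formatted template and assign values cell by cell starting at B6; assigning only `cell.value` leaves the fonts, fills and borders already set on those cells in place. The function name and parameters are hypothetical, and because openpyxl does not read every item in a workbook, logos and other drawing objects should be checked after saving.

```python
from openpyxl import load_workbook


def write_block(template_path, output_path, rows, sheet_name=None, start_row=6, start_col=2):
    """Write a 2-D block of values starting at B6 (row 6, column 2) by default.

    Only cell values are assigned, so the formatting already applied to
    those cells in the template is kept."""
    wb = load_workbook(template_path)
    ws = wb[sheet_name] if sheet_name else wb.active
    for r, row in enumerate(rows):
        for c, value in enumerate(row):
            cell = ws.cell(row=start_row + r, column=start_col + c)
            cell.value = value
    wb.save(output_path)
```

For the month-end split, the function would be called once per country, for example `write_block("template.xlsx", f"report_{country}.xlsx", rows_for_country)`, with hypothetical file names.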

- Category Input Output

- Data Connectors

Extend the MongoDB tool to work with MongoDB Atlas instances.

- Category Input Output

- Data Connectors

It would be great if the Output Data tool had a configuration option to create the output directory if it doesn't already exist. Maybe also an option to append instead of overwrite for all file types?
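Both options map onto ordinary file-system calls. A minimal Python sketch with hypothetical parameter names `create_dirs` and `append`. Appending plain text is straightforward, whereas formats such as yxdb or xlsx would need format-aware appending that this sketch does not attempt.

```python
from pathlib import Path


def write_output(path, text, create_dirs=True, append=False):
    """Write text to `path`, optionally creating missing parent directories
    and appending instead of overwriting."""
    target = Path(path)
    if create_dirs:
        target.parent.mkdir(parents=True, exist_ok=True)
    mode = "a" if append else "w"
    with target.open(mode, encoding="utf-8") as f:
        f.write(text)
    return target
```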

- Category Input Output

- Data Connectors

When using the Output Data tool, it would save me (and my cluttered organizational skills) a lot of effort if the workflow that wrote the file were saved as part of the yxdb metadata.

I've often had to search for the workflow that created a yxdb. I tend to use naming conventions to help me, but it would be easier if the workflow file and/or path were easy to find.

cheers,

mark

- Category Input Output

- Data Connectors

This has probably been mentioned before, but in case it hasn't....

Right now, if the Dynamic Input tool skips a file (which it often does!), it only shows a warning and continues processing. Continuing is still useful, but could the tool offer an option to 'error if files are skipped'?

Right now it is easy to miss that this is happening, and in production / on Server you may want the process to stop.

Thanks,

Andy
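The requested option amounts to turning the skip warning into an error on demand. A Python sketch, assuming (hypothetically) that a file is skipped when its columns do not match an expected schema and that `read_file` is a caller-supplied reader returning `(columns, rows)`; the flag name `error_if_skipped` is illustrative, and the Dynamic Input tool's real skip conditions may be broader.

```python
import warnings


class SkippedFileError(RuntimeError):
    """Raised when a file is skipped and strict mode is on."""


def read_all(paths, read_file, expected_columns, error_if_skipped=False):
    """Read every file and combine the records.

    A file whose columns differ from `expected_columns` is skipped with a
    warning or, when `error_if_skipped` is True, stops the run with an error."""
    records, skipped = [], []
    for path in paths:
        columns, rows = read_file(path)
        if columns != expected_columns:
            message = f"Skipped {path}: columns {columns} do not match {expected_columns}"
            if error_if_skipped:
                raise SkippedFileError(message)
            warnings.warn(message)
            skipped.append(path)
            continue
        records.extend(rows)
    return records, skipped
```

Returning the list of skipped files as well lets a lenient run still report exactly what was left out.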

- API SDK

- Category Developer

- Category Input Output

- Data Connectors

The Directory tool already retrieves a lot of information about a file. I must say I appreciate how easily it gives the size and the last write time.

But why not the owner? I developed a macro with a PowerShell script to do that, but what a nightmare for such a small piece of information.
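For comparison, a Directory-tool-like listing with an Owner column is short on POSIX systems. This Python sketch uses `pathlib.Path.owner()`, which resolves the user name through the POSIX password database; on Windows, where Alteryx runs, that call is not supported and the owner would have to come from the Windows security APIs (the ones behind PowerShell's `Get-Acl`), which this sketch does not cover. The function and field names are hypothetical.

```python
from pathlib import Path


def file_owner(path):
    """Return the file owner's user name, the numeric UID if the name cannot be
    resolved, or None where owner lookup is unsupported (e.g. Windows)."""
    p = Path(path)
    try:
        return p.owner()
    except NotImplementedError:
        return None
    except KeyError:  # UID with no entry in the password database
        return str(p.stat().st_uid)


def describe(directory):
    """List files with a few Directory-tool-style fields plus Owner."""
    rows = []
    for p in sorted(Path(directory).iterdir()):
        if p.is_file():
            st = p.stat()
            rows.append({
                "FullPath": str(p.resolve()),
                "Size": st.st_size,
                "LastWriteTime": st.st_mtime,
                "Owner": file_owner(p),
            })
    return rows
```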

- Category Input Output

- Data Connectors

Currently, the Google BigQuery Output tool only lets you create a table or append data to an existing table. It would be more useful to have a way to replace all the data in the table instead of appending. An option to overwrite an existing table in Google BigQuery would be ideal.
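BigQuery itself already distinguishes these cases through a load job's `writeDisposition` setting, where `WRITE_TRUNCATE` replaces the table's existing data. The Python sketch below shows how the tool's modes could map onto that setting and the matching `createDisposition`; the mode names are hypothetical, and a complete job request also needs the source data and format, which are omitted here.

```python
# Hypothetical mapping of output modes to BigQuery load-job dispositions:
# WRITE_EMPTY only writes to an empty table, WRITE_APPEND adds rows, and
# WRITE_TRUNCATE replaces the existing rows.
WRITE_MODES = {
    "create": ("CREATE_IF_NEEDED", "WRITE_EMPTY"),
    "append": ("CREATE_NEVER", "WRITE_APPEND"),
    "overwrite": ("CREATE_IF_NEEDED", "WRITE_TRUNCATE"),
}


def load_config(project, dataset, table, mode):
    """Build the `configuration.load` part of a BigQuery jobs.insert request body."""
    create, write = WRITE_MODES[mode]
    return {
        "configuration": {
            "load": {
                "destinationTable": {"projectId": project, "datasetId": dataset, "tableId": table},
                "createDisposition": create,
                "writeDisposition": write,
            }
        }
    }
```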

- Category In Database

- Data Connectors

