Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> by at least allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))
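
Until the tool handles these cases itself, the grayscale and label-balance points above can be worked around by preparing the data beforehand. The following is only a minimal sketch and is not part of Alteryx: `gray_to_rgb` and `balance_by_label` are hypothetical helper names, images are assumed to be plain nested lists of pixel values, and downsampling to the smallest class is just one of several ways to balance a dataset.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Copy each grayscale pixel into three identical channels (R, G, B)."""
    return [[(pixel, pixel, pixel) for pixel in row] for row in image]


def balance_by_label(samples, seed=0):
    """Downsample (item, label) pairs so that every label keeps the same count.

    Assumes at least one sample; the smallest class sets the per-label count.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    per_label = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, per_label):
            balanced.append((item, label))
    return balanced
```

Replicating the single channel does not add information; it only gives the image the three-channel shape that an RGB-only model expects.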


Hi -

We are using the new(ish) Anaplan connector tools; in particular, the "Anaplan Output" tool (send data TO Anaplan).

The issue that I'm having is that the Anaplan Output Tool only accepts a CSV file. This means that I must run one workflow to create the CSV file, then another workflow to read the CSV file and feed the Anaplan Output Tool.

If it were possible to have an output anchor on the Output tool that would simply pass the CSV records through to the Anaplan Output tool, the workflows would be drastically simplified.

Thanks,

Mark Chappell

- Category Input Output

- Data Connectors

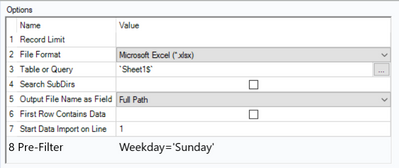

A Pre-Filter option in the Input Tool could reduce the amount of imported data by reading only selected records (i.e. for a specific period or meeting certain conditions).

Cheers,

Pawel

- Category Input Output

- Data Connectors
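
To illustrate the pre-filter idea, here is a small sketch of filtering rows while they are being read, so that rejected rows are never held in memory. It is plain Python, not Alteryx functionality; `read_filtered`, the column names, and the sample data are all made up for the example.

```python
import csv
import io
from datetime import date


def read_filtered(lines, predicate):
    """Yield only the rows that satisfy predicate, one row at a time."""
    for row in csv.DictReader(lines):
        if predicate(row):
            yield row


# Hypothetical sample data standing in for a large input file.
sample = io.StringIO(
    "order_date,amount\n"
    "2023-01-15,10\n"
    "2023-06-30,25\n"
    "2024-02-01,40\n"
)


def in_2023(row):
    """Keep only orders dated in 2023."""
    return date.fromisoformat(row["order_date"]).year == 2023


rows = list(read_filtered(sample, in_2023))
```

For database sources the same effect is usually better achieved by pushing the condition into the query itself, so the filter runs before any data is transferred.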

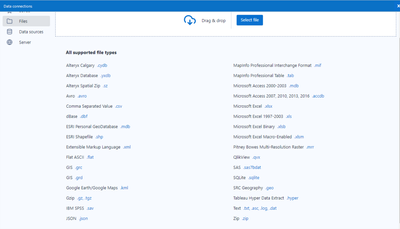

Add the PowerPoint file format (ppt/pptx) as a supported file type for direct connection in the Input Tool.

PS. I know there is a workaround for importing PowerPoint slides into Alteryx, but I'm describing an automated solution :)

Cheers,

Pawel

- Category Input Output

- Data Connectors
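
For context on what a direct reader would involve: a .pptx file is a ZIP archive whose slides are XML parts, so the text can be pulled out with standard-library tools. The sketch below is an assumption-laden illustration, not the workaround mentioned above and not an Alteryx feature. `slide_texts` is a hypothetical name, it relies on slides being stored as `ppt/slides/slideN.xml`, and it does not handle the legacy binary .ppt format.

```python
import io
import re
import zipfile
import xml.etree.ElementTree as ET

# DrawingML namespace used by the <a:t> text-run elements inside slides.
A_NS = "{http://schemas.openxmlformats.org/drawingml/2006/main}"


def slide_texts(pptx_file):
    """Return {slide part name: [text runs]} for a .pptx path or file object."""
    texts = {}
    with zipfile.ZipFile(pptx_file) as archive:
        for name in archive.namelist():
            if re.fullmatch(r"ppt/slides/slide\d+\.xml", name):
                root = ET.fromstring(archive.read(name))
                texts[name] = [t.text or "" for t in root.iter(A_NS + "t")]
    return texts
```

Each text run comes back as a separate string, so a real connector would still need rules for joining runs into paragraphs and for tables or notes.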

Currently the Databricks in-database connector allows for the following when writing to the database

- Append Existing

- Overwrite Table (Drop)

- Create New Table

- Create Temporary Table

This request is to add a 5th option that would execute

- Create or Replace Table

Why is this important?

- Create or Replace is similar to Overwrite Table (Drop) in that it fully replaces the existing table; however, the key differences are:

  - Drop table completely removes the table and its data from Databricks

    - Any users or processes connected to that table live will fail during the writing process

    - No history is maintained on the table, a key feature of the Databricks Delta Lake

  - Create or Replace does not remove the table

    - Any users or processes connected to that table live will not fail as the table is not dropped

    - History is maintained for table versions which is a key feature of Databricks Delta Lake

While this request was specific to testing on Azure Databricks, the documentation for Databricks on both Azure and AWS recommends using "Replace" instead of "Drop" and "Create" for Delta tables.

- Category In Database

- Data Connectors
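
The difference between the two strategies can be sketched as the SQL each one would issue. This is an illustration only: how the Alteryx connector actually builds its statements is not known here, `overwrite_statements` is a hypothetical helper, and the table and query names are placeholders.

```python
def overwrite_statements(table, select_sql, mode):
    """Return the SQL statements for one of two overwrite strategies.

    mode="drop":    remove the table, then create it again (two statements).
    mode="replace": swap the contents in a single statement, keeping the table.
    """
    if mode == "drop":
        return [
            f"DROP TABLE IF EXISTS {table}",
            f"CREATE TABLE {table} AS {select_sql}",
        ]
    if mode == "replace":
        return [f"CREATE OR REPLACE TABLE {table} AS {select_sql}"]
    raise ValueError(f"unknown mode: {mode!r}")


# Placeholder names, for illustration only.
drop_sql = overwrite_statements("sales.daily", "SELECT * FROM staging.daily", "drop")
replace_sql = overwrite_statements("sales.daily", "SELECT * FROM staging.daily", "replace")
```

With the drop strategy there is a window between the two statements in which the table does not exist, which is where connected readers fail; the replace strategy has no such window.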

Hyperion Smartview Connect

- Category Connectors

- Data Connectors

Alteryx really needs to show a results window for the In-DB processes. Without it, we are building blind. The workarounds are too much of a hassle.

- Category In Database

- Data Connectors

We really need a Block Until Done tool for In-DB, so that multiple calculations can be processed in-DB without causing errors. I have heard that a Control Container is potentially on the roadmap. That needs to happen ASAP!

- Category In Database

- Data Connectors

Hi Team,

Microsoft Outlook is used extensively for day-to-day operations. With more teams adopting Alteryx within the firm, we wanted to explore end-to-end automation in which outputs are sent directly to external email domains. However, due to firm restrictions, we cannot send email to external or internal email domains without labelling the email. Is there a way to include email labelling/classification as part of the Email tool?

- Category Input Output

- Data Connectors

Hi all,

Something really interesting that I found, and never knew about, is that there are actually in-DB predictive tools. You can find them by placing a Connect In-DB tool on the canvas and then dragging on one of the many predictive tools.

For instance:

Boosted Model dragged onto an empty canvas:

Boosted Model tool deleted, Connect In-DB tool added to the canvas:

Boosted Model dragged onto the canvas in exactly the same way:

This is awesome! I have no idea how these tools work; I have only just found out they exist. Are we able to unhide them? I actually thought I had stumbled on an Alteryx Designer bug, but it appears to be much more of a feature.

Sadly, these tools are currently not searchable and do not show up under the In-DB section. I believe they need to be more accessible and well documented so that users can find them.

Cheers,

TheOC

- Category In Database

- Data Connectors

Hello Team,

When we use Run and Cache Workflow, the data stays in the cache only while Alteryx Designer or that workflow is open.

Advantage: We don't need to wait for the data to load in the Input Data tool, and the result is validated properly.

Disadvantage: Time and money are lost when Alteryx Designer is closed automatically or by mistake.

Expectation:

Could the cache be given a token expiry of 12 to 24 hours, so that precious time and money are not wasted?

This would be very useful when working with billions of records.

- Category Input Output

- Data Connectors
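
The requested expiry could work along the lines of a simple time-to-live check made when a workflow is reopened. This is only a sketch of the idea, not a description of how Designer stores or validates its cache; `cache_is_fresh` and the 24-hour default are assumptions.

```python
from datetime import datetime, timedelta


def cache_is_fresh(cached_at, now, ttl_hours=24):
    """Return True while the cache is younger than its time-to-live."""
    return now - cached_at < timedelta(hours=ttl_hours)


# Example: data cached at 08:00 is still usable at 07:00 the next day.
cached_at = datetime(2024, 1, 1, 8, 0)
still_fresh = cache_is_fresh(cached_at, datetime(2024, 1, 2, 7, 0))
```

A persisted cache would also need to be invalidated when the upstream tools or the input file change, which a time check alone does not cover.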

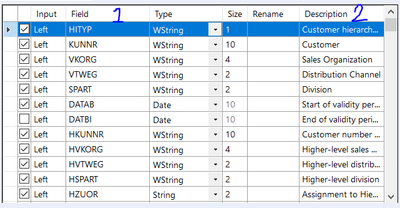

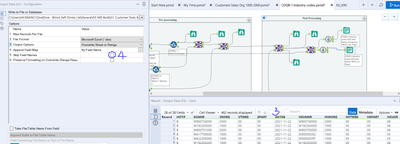

By default, the output always prints the headers as shown in item 1 below; I would like to print item 2 as the header. Item 3 is my output.

I tried item 4 below, but it doesn't work.

Please consider this for a future release. It would save a lot of time, as outputs can contain hundreds of fields, and output files are shared with a user community that understands the field description much better than the field name. For example, the SAP field KUNNR means far less to a user than its description, 'Customer'.

A check box on the Output Tool should be able to toggle the header between the field name and its description.

You might argue that the Rename column can be used, but it would be tedious to type in hundreds of fields manually. As an alternative, you could provide an option to populate Rename automatically from the Description.

- Category Input Output

- Data Connectors
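
The requested toggle amounts to looking up each field name in a name-to-description map when the header row is written. Here is a minimal sketch in plain Python, outside Alteryx; `write_with_descriptions` is a hypothetical helper, and the second field and the sample values are made up for the example.

```python
import csv
import io


def write_with_descriptions(rows, fieldnames, descriptions, out):
    """Write rows as CSV, using each field's description as its header.

    Fields without a description keep their technical name.
    """
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([descriptions.get(name, name) for name in fieldnames])
    for row in rows:
        writer.writerow([row[name] for name in fieldnames])


out = io.StringIO()
write_with_descriptions(
    rows=[{"KUNNR": "1000", "NAME1": "Acme"}],  # sample values, illustration only
    fieldnames=["KUNNR", "NAME1"],
    descriptions={"KUNNR": "Customer"},
    out=out,
)
result = out.getvalue()
```

Falling back to the field name when no description exists keeps the output usable even if only some fields are documented.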

Hello,

My issue is very easy to solve. I want to use the generic ODBC connection in memory for a specific database (MonetDB here, but that isn't important).

I am trying to output a data stream to a MonetDB SQL database. As you can see, I only use very simple field types.

However, I get this error message:

Error: Output Data (3): Error creating table "exemplecomparetable.toto": [MonetDB][ODBC Driver 11.44.0][MONETDB_SAU]Type (datetime) unknown in: "create table "exemplecomparetable"."toto" ("ID" int,"Libellé" char(50),"Date d"

syntax error in: ""Prix""

CREATE TABLE "exemplecomparetable"."toto" ("ID" int,"Libellé" char(50),"Date de Maj" datetime,"Prix" float,"PMP" float)

Reminder: SQL is an ISO standard. The default types should follow it, not the MS SQL configuration. Interoperability is key.

Related idea for in-DB:

https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Generic-In-database-connection-please-stop-i...

Issues observed with MonetDB:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Output-Data-date-is-now-datetime-makin...

Informix: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Alteryx-date-data-type-error-trying-to...

Best regards,

Simon

- Category Input Output

- Data Connectors
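
As an illustration of the type issue, the sketch below rebuilds the CREATE TABLE statement from the error message with `datetime` swapped for the standard SQL `TIMESTAMP` type. It is a hedged example rather than a confirmed fix: `iso_create_table` and the one-entry mapping are assumptions, other types are passed through unchanged, and whether this resolves every error MonetDB reported has not been verified here.

```python
# Non-standard type names mapped to a standard SQL equivalent (assumed mapping).
ISO_TYPES = {"datetime": "TIMESTAMP"}


def iso_create_table(schema, table, columns):
    """Build a CREATE TABLE statement, replacing known non-standard types."""
    parts = []
    for name, type_name in columns:
        sql_type = ISO_TYPES.get(type_name.lower(), type_name)
        parts.append(f'"{name}" {sql_type}')
    return f'CREATE TABLE "{schema}"."{table}" ({", ".join(parts)})'


statement = iso_create_table(
    "exemplecomparetable",
    "toto",
    [
        ("ID", "int"),
        ("Libellé", "char(50)"),
        ("Date de Maj", "datetime"),
        ("Prix", "float"),
        ("PMP", "float"),
    ],
)
```

A generic connector could apply a default mapping like this and let drivers override it, instead of assuming one vendor's type names everywhere.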

We need an option in the Output tool (when the Delete and Append option is selected) so that, if an error occurs while writing to the table, the table automatically goes back to its original record count. Right now, if the workflow fails, we see zero records in the output table.

- Category Input Output

- Data Connectors
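
The behaviour being asked for is what a database transaction provides: the delete and the inserts either all succeed or are all undone. The sketch below demonstrates this with Python's built-in `sqlite3` module. It is an analogy, not the Output tool's implementation; the table `t`, its columns, and `delete_and_append` are made up for the example.

```python
import sqlite3


def delete_and_append(conn, rows):
    """Replace all rows of table t; on any error the original rows are kept."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("DELETE FROM t")
        conn.executemany("INSERT INTO t (id, price) VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, price REAL NOT NULL)")
conn.executemany("INSERT INTO t (id, price) VALUES (?, ?)", [(1, 9.5), (2, 3.0)])
conn.commit()

try:
    # The second row violates NOT NULL, so the whole write fails.
    delete_and_append(conn, [(10, 1.0), (11, None)])
except sqlite3.IntegrityError:
    pass

# The DELETE was rolled back together with the failed insert.
count = conn.execute("SELECT COUNT(*) FROM t").fetchone()[0]
```

Whether this is possible for a given target depends on the database and driver supporting transactions for the statements involved.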

When working with .flat files, the actual Data file selection (Option #5) is a text input field.

Can we have a File Selection control here?

I think it's going to be easier than writing the whole path for a file.

Thanks!

- Category Input Output

- Data Connectors

Hi all,

Currently, only the SharePoint List tool (deprecated) works with DCM. It would be amazing if the SharePoint Files Input/Output tools also worked with DCM.

Thank you,

Fernando Vizcaino

- Category Connectors

- Data Connectors

For in-DB use, please provide a Data Cleansing Tool.

- Category In Database

- Data Connectors

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/BigQuery-Input-Error/td-p/440641

The BigQuery Input Tool utilizes the TableData.List JSON API method to pull data from Google Big Query. Per Google here:

- You cannot use the TableDataList JSON API method to retrieve data from a view. For more information, see Tabledata: list.

This is not currently supported functionality of the tool. You can post this on our product ideas page to see if it can be implemented in a future release. For now, I would recommend pulling the data from the original table itself in BigQuery.

I need to be able to query both tables and views. I am not sure how to use the TableData.List JSON API.

- Category Connectors

- Data Connectors

When loading multiple sheets from an Excel file with either the Input Data tool or the Dynamic Input Tool, I usually want a field to identify which sheet the data came from. Currently I have to import the Full Path and then remove everything except the SheetName.

It would be great if there was an option to output the SheetName as a field.

- Category Input Output

- Data Connectors
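
The current workaround of trimming the Full Path down to the sheet name can be sketched as a small string operation. The path format here is an assumption: the example supposes the sheet follows a `|||` separator and may be wrapped in backticks with a trailing `$`, which may not match every Designer version or driver. `sheet_name` is a hypothetical helper.

```python
def sheet_name(full_path):
    """Extract the sheet name from a path such as 'book.xlsx|||`Sheet1$`'.

    Returns None when the path has no sheet part.
    """
    _, separator, sheet = full_path.partition("|||")
    if not separator:
        return None
    # Drop optional quoting characters, then the optional trailing '$'.
    return sheet.strip("`'").rstrip("$")


example = sheet_name(r"C:\data\book.xlsx|||`Sheet1$`")
```

A built-in SheetName field would remove the need to know these formatting details at all.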

Hello all,

I am trying to use Alteryx with MonetDB, a very cool open-source column-store database.

When I use the Visual Query Builder, I get this:

The field names are completely missing.

The reason is that Alteryx does not use the standard ODBC SQLColumns() function at all, but instead sends a query (here "select * from demo.exemplecomparetable.fruit1 a where 0=1") to get a sample of the data. MonetDB then returns the error "SELECT: only a schema and table name expected" (which is not shown to the user; it fails silently).

I think this should be implemented as follows:

1/ use the SQLColumns() function, which is standard for ODBC and should work most of the time

2/ if SQLColumns() does not work, send the current queries.

This is discussed at length with the MonetDB team here:

https://github.com/MonetDB/MonetDB/issues/7313

Best regards,

Simon

- Category Input Output

- Data Connectors
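
The two-step proposal above (catalog function first, probe query as a fallback) can be sketched as follows. This is a generic illustration, not Alteryx or ODBC driver code: `discover_columns` is a hypothetical name, and the two callables stand in for a catalog call such as SQLColumns() and for the existing "where 0=1" query.

```python
def discover_columns(catalog_lookup, probe_query):
    """Return (columns, source), preferring the driver's catalog function.

    catalog_lookup: callable wrapping the standard metadata call.
    probe_query:    callable wrapping the current zero-row SELECT approach.
    """
    try:
        columns = catalog_lookup()
        if columns:
            return columns, "catalog"
    except Exception:
        # The driver does not support the catalog call; use the probe instead.
        pass
    return probe_query(), "probe"


def unsupported_catalog():
    """Stand-in for a driver whose catalog function fails."""
    raise RuntimeError("catalog function not supported")


from_catalog = discover_columns(lambda: ["id", "name"], lambda: ["unused"])
from_probe = discover_columns(unsupported_catalog, lambda: ["id", "name"])
```

Recording which source produced the columns also makes it easier to surface a warning to the user instead of failing silently.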

When scheduled or manual Alteryx workflows try to overwrite or append to a Tableau hyper extract file, they fail with "File is locked by another process". This happens because a Tableau workbook is using the hyper extract file. We know this behavior is expected: the file is open in another user's session or process, so Alteryx cannot modify it until the file is closed. The Alteryx product team should consider handling this case in a future release, as it would solve many problems.

- Category Input Output

- Data Connectors
