Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

- by adding the input dimension constraints next to each of the pre-trained models;

- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

- at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: do you plan to let users choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))
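The second suggestion, a split that gives every label the same number of images, could look roughly like the Python sketch below. It is not how the Image Recognition tool works internally; the function name, the (item, label) input shape and the down-sample-to-the-smallest-label strategy are assumptions chosen for illustration.

```python
import random
from collections import Counter, defaultdict


def balanced_split(samples, test_fraction=0.2, seed=42):
    """Split (item, label) pairs so every label contributes the same number
    of examples to train and to test.

    Assumption: labels with more examples are randomly down-sampled to the
    size of the smallest label (one simple balancing strategy among several).
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    per_label = min(len(items) for items in by_label.values())
    n_test = round(per_label * test_fraction)

    train, test = [], []
    for label, items in by_label.items():
        chosen = rng.sample(items, per_label)  # down-sample without replacement
        test.extend((item, label) for item in chosen[:n_test])
        train.extend((item, label) for item in chosen[n_test:])
    return train, test


if __name__ == "__main__":
    data = [(f"cat_{i}.png", "cat") for i in range(50)]
    data += [(f"dog_{i}.png", "dog") for i in range(30)]
    train, test = balanced_split(data)
    print(Counter(label for _, label in train))  # 24 of each label
    print(Counter(label for _, label in test))   # 6 of each label
```

Down-sampling discards some images from the larger labels; weighting or augmenting the smaller labels are alternatives with different trade-offs.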


There is a great question in the Designer space right now asking about saving logs to a database: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Save-workflow-messages-log-in-database...

This got me to think a little more about localized logging options in Alteryx.

At a high level, there are ways to accomplish this in Designer at a User or System level by enabling a Logging directory and then parsing those logs with a separate Alteryx job. However, this would involve logging ALL Designer executions, which seems like it may be overkill for this need. A user can also manually save a log after each execution, although this requires manual intervention.

I think adding an option in the Runtime settings for Workflow Configuration to Enable Logging and (optionally) specify a Logging directory would be a great feature addition for Designer. In my opinion, this should not apply once a workflow runs on Server (Server logging should be handled in a fully standardized way), but it should apply to Designer "UI" execution. Having the ability to add a log naming convention (perhaps including the workflow name and run date in the log name) would be icing on the cake.

This would allow for a piecemeal logging solution that logs specific flows or processes of high visibility or high importance, while avoiding saving hundreds or thousands of logs daily for less important processes and dev testing. It would also reduce or eliminate the manual process of saving these logs individually.
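A naming convention that combines the workflow name and run date could be as simple as the Python sketch below. The function name and the exact `<workflow>_<YYYYMMDD_HHMMSS>.log` pattern are hypothetical, one possible convention rather than anything Designer provides.

```python
import re
from datetime import datetime
from pathlib import Path


def log_file_path(log_dir, workflow_name, run_time=None):
    """Build '<workflow>_<YYYYMMDD_HHMMSS>.log' inside log_dir.

    Hypothetical convention: the workflow file extension is dropped and any
    run of characters outside letters, digits, '_' and '-' becomes '_'.
    """
    run_time = run_time or datetime.now()
    stem = Path(workflow_name).stem
    safe_stem = re.sub(r"[^A-Za-z0-9_-]+", "_", stem)
    return Path(log_dir) / f"{safe_stem}_{run_time:%Y%m%d_%H%M%S}.log"


print(log_file_path("logs", "Sales Load.yxmd", datetime(2024, 3, 1, 6, 30)))
# logs/Sales_Load_20240301_063000.log (backslash separator on Windows)
```

Putting the timestamp in the name keeps successive runs of the same workflow from overwriting each other and makes the logs sort chronologically.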


Is it possible to have Sum(Column) or Total(Column) functions in the Formula tool? It would make calculations much easier, as we wouldn't need to use "Sum tool + Append tool + Formula tool + Select tool" or "a couple of Formula tools and then a Select tool".
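To make the request concrete, here is what a column-level Sum would compute, written as a Python sketch over a list of row dictionaries: the column total attached to every row, plus each row's share of it. The function and the Total/Share field names are illustrative only.

```python
def append_column_total(rows, column, total_field="Total", share_field="Share"):
    """Attach the column total (and each row's share of it) to every row.

    Mirrors the Sum tool + Append tool + Formula tool pattern described
    above; the output field names are hypothetical.
    """
    total = sum(row[column] for row in rows)
    return [
        {**row, total_field: total,
         share_field: row[column] / total if total else None}
        for row in rows
    ]


sales = [{"Region": "East", "Sales": 30}, {"Region": "West", "Sales": 70}]
for row in append_column_total(sales, "Sales"):
    print(row)
```

A built-in Sum(Column) would collapse this two-pass computation (aggregate, then append) into a single expression.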


I need support for outbound data streams to be gzip compressed. Ideally, this would be done by a new tool that can be inserted into a workflow (maybe similar to the Base 64 Encoding tool). Just including it in the Output Tool will not address my needs as I will be sending gzip payloads to a cloud API. There are two main reasons why this is necessary (and without it, quite possibly a roadblock for our enterprise's use of Alteryx):

- Some APIs require gzip encoding, so Alteryx cannot currently be used to interact with such APIs

- When transmitting large volumes of data across the Internet, gzip compression will significantly decrease transmission times
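On the sending side, gzip-encoding a request body takes little code. The Python sketch below (standard library only) shows the two pieces such a tool would need: compressing the payload and declaring `Content-Encoding: gzip`. The endpoint URL is a placeholder, and whether a given API accepts gzip request bodies depends on that API.

```python
import gzip
import json
import urllib.request


def build_gzip_request(url, payload):
    """Serialize payload as JSON, gzip it, and wrap it in a POST request
    that declares the encoding via the Content-Encoding header."""
    raw = json.dumps(payload).encode("utf-8")
    body = gzip.compress(raw)
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "Content-Encoding": "gzip"},
        method="POST",
    )


payload = {"rows": [{"id": i, "status": "shipped"} for i in range(1000)]}
req = build_gzip_request("https://api.example.com/ingest", payload)
print(len(json.dumps(payload)), "->", len(req.data), "bytes")
# urllib.request.urlopen(req) would send it; it is not called here.
```

Repetitive tabular data like this compresses well, which is where the transmission-time savings mentioned above come from.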


This suggestion is particularly relevant for macros and custom tools created with the Python SDK, but I think it can apply to other tools as well.

When searching for tools in Alteryx, I can find the tools I want fairly quickly. However, I often don't know which tool category a tool is in, which can sometimes slow me down (it is sometimes faster or easier for me to go to the tool category than to search for the tool I want).

As a quick example, I just installed the Word Cloud tool that @NeilR shared here: https://community.alteryx.com/t5/Dev-Space/Python-Tool-Challenge-BUILD-a-Python-tool-and-win-a-prize... . I was able to find the tool really easily using search once it was installed, but in order to find the tool category, I either had to unzip the .yxi file and find out where it was, or click around through the tool categories until I found it (it was in the Reporting tools, which makes a lot of sense).

Could we add something either to the search window or to the description/config of tools which calls out where a given tool is in the Tool Palette?


A lot of popular machine learning systems use a computer's GPU to speed up some of the math to a huge degree. The header image of this article on Medium shows a 15x difference between a high-end CPU and a high-end GPU. GPU support could also improve the spatial tools. Perhaps Alteryx should add this functionality to speed up these tools, which I imagine are currently some of the slowest.

Create a standardized Mailbox application that could bolt onto Alteryx Server to handle incoming attachments from sources such as a service desk (ServiceNow, for example) and other applications.

Essentially, for anything that regularly exports data as emailed attachments, Alteryx could use a series of predefined user rules and a designated email address to put those attachments into various directories, ready for processing by automated Alteryx workflows.

This would save a huge amount of time, as people currently have to drag and drop files manually. So far, the Alteryx designers on board here haven't been able to come up with a solution. It would also avoid messy programming around systems like Outlook and any bending of the security rules within those systems. Many, many other applications have this simple feature built into their products, especially service desks. I believe there would be a huge benefit to this very simple bolt-on.
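The rule-based routing step could be sketched in Python with the standard `email` library. This is not an existing Alteryx Server feature; the rule format (sender substring, filename pattern, target folder), the folder names and the `unsorted` fallback are all assumptions. Fetching messages from the designated mailbox (for example over IMAP) is left out.

```python
import fnmatch
import tempfile
from email.message import EmailMessage
from pathlib import Path

# Hypothetical routing rules: (text the sender address must contain,
# attachment filename pattern, target folder under the inbox root).
RULES = [
    ("servicenow", "*.csv", "incidents"),
    ("finance", "*.xlsx", "finance"),
]


def route_attachments(msg, inbox_root, rules=RULES):
    """Save each attachment of an email into the folder of the first rule
    matching its sender and filename; unmatched files go to 'unsorted'.
    Returns the list of written paths."""
    sender = (msg.get("From") or "").lower()
    saved = []
    for part in msg.iter_attachments():
        filename = part.get_filename()
        if not filename:
            continue
        name = Path(filename).name  # keep only the base name
        folder = "unsorted"
        for sender_key, pattern, target in rules:
            if sender_key in sender and fnmatch.fnmatch(name.lower(), pattern):
                folder = target
                break
        dest_dir = Path(inbox_root) / folder
        dest_dir.mkdir(parents=True, exist_ok=True)
        path = dest_dir / name
        path.write_bytes(part.get_payload(decode=True))
        saved.append(path)
    return saved


if __name__ == "__main__":
    msg = EmailMessage()
    msg["From"] = "alerts@servicenow.example.com"
    msg["Subject"] = "Daily incident export"
    msg.set_content("Export attached.")
    msg.add_attachment(b"id,status\n1,open\n", maintype="application",
                       subtype="octet-stream", filename="incidents.csv")
    with tempfile.TemporaryDirectory() as root:
        for path in route_attachments(msg, root):
            print(path.relative_to(root))
```

Keeping the rules as data rather than code is what would let users maintain them without programming, as the idea asks.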

Implement a process to have looping in the workflow without resorting to Macros. Although macros do, generally, solve the issue, I find them confusing and non-intuitive.

I would suggest looping through the use of two new tools: A StartLoop and EndLoop tool.

The StartLoop would have two (or more) input anchors: one for the initial input and the other(s) for the iterative inputs. The StartLoop would hold all iterative inputs until the original inputs have passed the gate, and then resubmit them in the order they were returned to the StartLoop.

The EndLoop would have three output anchors. One anchor would be for data exiting the loop upon reaching the exit condition. Another anchor would be for the iterative (return) data; note that transformations can be performed on this data BEFORE it re-enters the loop. The third would be an "overloop" exit anchor, for any data that failed to meet the exit condition within the (configurable) maximum iteration expression. The data from the overloop anchor could then be handled as the business rules require for unsatisfied data after it leaves the EndLoop tool.

The primary configurations would be on the EndLoop tool, where you would indicate the exit condition and the maximum iteration expression. The tool would also create an iteration counter field. As part of the configuration you could have a check box to "retain iteration count field on exit". If checked, the field would be maintained. If not checked, the field would be dropped for the data as it exits the loop.

This would make looping a bit more intuitive, and it would be graphically self-documenting as well. Worth a mention at least.
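The proposed StartLoop/EndLoop behavior can be simulated in a few lines of Python, which makes the three EndLoop outputs easy to see. The tool names come from the idea above; the function, its parameters and the default counter name `Iteration` are hypothetical.

```python
def run_loop(records, body, exit_condition, max_iterations,
             keep_iteration_field=True, counter="Iteration"):
    """Simulate the proposed StartLoop/EndLoop pair.

    body:           transformation applied on each pass (the tools placed
                    between StartLoop and EndLoop)
    exit_condition: predicate deciding whether a record leaves the loop
    Returns (exit_records, overloop_records).
    """
    exited, overloop = [], []
    pending = [dict(r, **{counter: 0}) for r in records]
    while pending:
        returned = []  # records sent back to StartLoop, order preserved
        for record in pending:
            record = body(dict(record))
            record[counter] += 1
            if exit_condition(record):
                exited.append(record)
            elif record[counter] >= max_iterations:
                overloop.append(record)
            else:
                returned.append(record)
        pending = returned

    if not keep_iteration_field:
        exited = [{k: v for k, v in r.items() if k != counter} for r in exited]
        overloop = [{k: v for k, v in r.items() if k != counter} for r in overloop]
    return exited, overloop


done, stuck = run_loop(
    [{"value": 1}, {"value": 40}],
    body=lambda r: {**r, "value": r["value"] * 2},
    exit_condition=lambda r: r["value"] >= 100,
    max_iterations=5,
)
print(done)   # [{'value': 160, 'Iteration': 2}]
print(stuck)  # [{'value': 32, 'Iteration': 5}]
```

The exit condition is checked before the iteration limit, so a record that satisfies the condition on its final allowed pass still leaves through the normal exit rather than the overloop anchor.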


Please add support for Windows authentication to the Download tool. I know there's a workaround, but it involves using curl and the Run Command tool. The Run Command tool is awful and should be avoided at all costs, so please improve the Download tool so I can use internal APIs.


I've run into an issue where I'm using an Input (or Dynamic Input) tool inside a macro (attached) that is updated via a File Browse tool. I work at a large company with several data sources, so we use a lot of Shared (Gallery) Connections. The issue is that whenever I try to enter any sort of aliased connection (Gallery or otherwise), it reverts to the default connection I have in the Input or Dynamic Input tool. It does not behave this way if I use a manually typed connection string.

Initially, I thought this was a bug, so I brought it to Support's attention. They told me this is the tool's default behavior. So I'm suggesting that the default behavior of the Input and Dynamic Input tools be changed so that they can be overridden by aliased connections via File Browse and Action tools. The simplest way to implement this would probably be to translate the alias before pushing it to the macro.
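The suggested fix, translating the alias before it reaches the macro, amounts to a lookup step like the Python sketch below. The `aka:` prefix, the alias registry and the connection string are placeholders; how Designer and Gallery actually store aliases is not modeled here.

```python
# Hypothetical alias registry; in practice this role is played by the
# user's or Gallery's saved connections.
ALIASES = {
    "Sales_DW": "DSN=SalesDW;Trusted_Connection=yes",
}

ALIAS_PREFIX = "aka:"  # assumption: how an aliased connection is written


def resolve_connection(value, aliases=ALIASES, prefix=ALIAS_PREFIX):
    """Return the full connection string for an aliased value, or the
    value unchanged if it is already a plain connection string."""
    if not value.startswith(prefix):
        return value
    name = value[len(prefix):]
    try:
        return aliases[name]
    except KeyError:
        raise KeyError(f"unknown connection alias: {name}") from None


print(resolve_connection("aka:Sales_DW"))
print(resolve_connection("DSN=Other;Trusted_Connection=yes"))
```

Resolving up front means the macro only ever sees a plain connection string, which, per the post above, is the case that already works.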

Idea: I need a function that, given two dates, will return the number of business days between them. I need to know the number of business days between when a sales order is placed and when it ships to the customer. I'm in the US, so I would want to exclude Saturdays, Sundays, and US holidays, but I can foresee others wanting the option to switch to other calendars or ignore holidays.

There are a couple of posts on this in the community, but everything I've found so far is too laborious to implement or not robust.

Cheers,

Mark
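A business-day count with a configurable weekend and a caller-supplied holiday list can be sketched in Python as follows. It uses the half-open range [start, end), the same convention as `numpy.busday_count`, which also accepts a weekmask and holidays. The function name is hypothetical, and no US holiday calendar is built in; the holiday set in the example is an illustrative subset.

```python
from datetime import date, timedelta


def business_days_between(start, end, holidays=(), weekend=(5, 6)):
    """Count business days in the half-open range [start, end).

    weekend uses date.weekday() numbering (Monday=0 ... Sunday=6), so other
    calendars can pass e.g. (4, 5) for a Friday-Saturday weekend. holidays
    is any collection of dates. Returns a negative count if end < start.
    """
    if end < start:
        return -business_days_between(end, start, holidays, weekend)
    holidays = set(holidays)
    count = 0
    day = start
    while day < end:
        if day.weekday() not in weekend and day not in holidays:
            count += 1
        day += timedelta(days=1)
    return count


us_holidays_2024 = {date(2024, 7, 4)}  # illustrative subset, not a full calendar
print(business_days_between(date(2024, 7, 3), date(2024, 7, 8), us_holidays_2024))  # 2
```

In the example the order is placed on Wednesday, July 3, and ships on Monday, July 8: Wednesday and Friday count, Thursday is the July 4 holiday, and the weekend is skipped.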


Hi there,

The Download tool is currently very cryptic and difficult for most users to grasp. This is due, at least in part, to the fact that it tries to be generic and serve all needs instead of being broken into smaller tools that each fit a specific need.

Could we please break the download tool into:

- Input: FTP tool. This would allow you to download from FTP or SFTP sites, working in a wizard fashion to get you to the file(s) you want and taking you through FTP authentication.

- Input: Web API call. This would be much easier with a wizard where you could enter the API you wanted to call and then add the parameters.

- Input: Web download. This would allow you to download frames or pages from the web. This would be a good place to do what so many users have asked for and which Excel does natively, i.e. let you see the site in a browser within the wizard and pick the elements you want to download. It must allow for authentication and walk you through it with the wizard.

- Output: FTP put. As above, splitting this out makes it more sensible.

There are probably other variants, and we can keep the Download tool for super-complex or bespoke uses, but if we break this down into smaller tools with simpler capabilities, we'll get higher usage.

Thank you

Sean


Hi there,

The Download tool currently does not work if the user is behind a corporate proxy, and the only way to download web content is with curl.

This is a significant impediment because it prevents almost all corporate Alteryx users from accessing this capability.

Could you please look into using the proxy settings that the workstation uses to access the internet in a corporate environment?

Thank you

Sean
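For reference, HTTP clients can often pick up the workstation's proxy configuration instead of requiring it to be hard-coded. The Python standard-library sketch below reads those settings with `urllib.request.getproxies()` and builds an opener that uses them. It only illustrates the requested behavior; it says nothing about how the Download tool is implemented, and proxies that demand Windows (NTLM/Kerberos) authentication need more than this.

```python
import urllib.request


def system_proxies():
    """Proxy settings as the OS exposes them: <scheme>_proxy environment
    variables everywhere, falling back to the registry on Windows and to
    System Configuration on macOS."""
    return urllib.request.getproxies()


def build_proxy_aware_opener():
    """An opener that routes requests through the workstation's proxies."""
    return urllib.request.build_opener(urllib.request.ProxyHandler(system_proxies()))


if __name__ == "__main__":
    print(system_proxies())
```

Environment variables take precedence over the OS-level settings here, so a per-user override remains possible without touching system configuration.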


This may be too much of an edge case...

I would like to be able to feed a dynamic input component with an input file and a format template file, to make the input component completely dynamic. This is because I have Excel spreadsheets that I want to download, read and process hourly throughout the day. Every 4 hours another 4 columns are added to the spreadsheet, changing its format. This then causes the dynamic input component to error because the input file does not match the static format template. I would be happy to store the 6 static templates, but feed them into the dynamic input along with the matching input file, making the component entirely dynamic. Does this make sense?

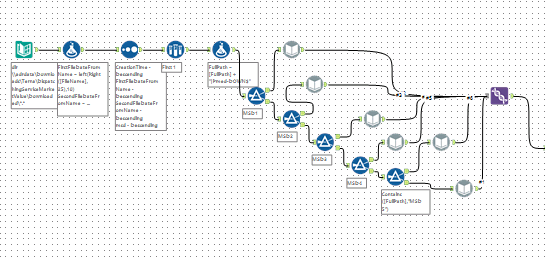

BTW, my workaround was to define six dynamic inputs, filter on the file type, then union the results:
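The pairing step this idea asks for, matching each incoming file to the template that describes it, can be sketched in Python as a header lookup. The template names, the column names and the six-layout setup are assumptions based on the description above; reading the header row from the Excel file itself is left out.

```python
# Hypothetical templates: the sheet gains four hourly columns every 4 hours,
# giving six possible layouts. Column names are illustrative.
BASE = ["Site", "Metric"]
TEMPLATES = {
    f"template_{n}": BASE + [f"H{h:02d}" for h in range(4 * n)]
    for n in range(1, 7)
}


def pick_template(header, templates=TEMPLATES):
    """Return the name of the template whose columns exactly match header."""
    for name, columns in templates.items():
        if columns == list(header):
            return name
    raise ValueError(f"no template matches a {len(header)}-column header")


header = BASE + [f"H{h:02d}" for h in range(8)]
print(pick_template(header))  # template_2
```

Matching on the full column list rather than just the column count guards against a file whose shape happens to match but whose columns differ.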

Hi,

I am currently using Oracle Discoverer, which has an option to drag a results column of a table or crosstab onto page items so that I can filter the results further. This helps users filter large result sets without even downloading the output. I have attached a screenshot of the current tool with page items.

Regards,

Sunil


In many of our tools, before processing any file we create a backup and move it to a backup location with a datetime stamp.

Can we have an option such as "CreateBackup" with a timestamp in the Input and Output tools?
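What a "CreateBackup" option would do is roughly the following Python sketch: copy the file into a backup folder under a timestamped name before it is processed. The option name comes from the request; the function, the `<stem>_<YYYYMMDD_HHMMSS><suffix>` naming pattern and the choice to copy rather than move are assumptions.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_with_timestamp(source, backup_dir, now=None):
    """Copy source into backup_dir as '<stem>_<YYYYMMDD_HHMMSS><suffix>'
    and return the new path. The original file is left in place so it can
    still be processed."""
    source = Path(source)
    now = now or datetime.now()
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / f"{source.stem}_{now:%Y%m%d_%H%M%S}{source.suffix}"
    shutil.copy2(source, target)  # copy2 also preserves file timestamps
    return target
```

For example, backing up `orders.csv` at 03:04:05 on 2 January 2024 would produce `backup/orders_20240102_030405.csv`.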

Can we have a string function that extracts the part of a string between two indices?

Since FindString can find a particular string occurrence, we could then easily extract the required part of the string from that index up to the required end index.

Or, if we want the entire remaining string, we could use a function like:

Substring(String,StartIndex,EndIndex) Where endIndex can be : Length(String)-1
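The proposed semantics, with both indices 0-based and the end index inclusive (which is what EndIndex = Length(String)-1 implies for "the rest of the string"), can be pinned down in a Python sketch. Python's `find` stands in for FindString here, and the function name is hypothetical. Where a function takes a start and a length instead, the equivalent length is EndIndex - StartIndex + 1.

```python
def substring_between(text, start_index, end_index):
    """Return the characters from start_index through end_index, both
    0-based and inclusive, as in the proposed
    Substring(String, StartIndex, EndIndex)."""
    return text[start_index:end_index + 1]


record = "ID:12345;Name:Bob"
start = record.find(":") + 1   # first character after 'ID:'
end = record.find(";") - 1     # last character before ';'
print(substring_between(record, start, end))  # 12345

greeting = "Hello World"
print(substring_between(greeting, 6, len(greeting) - 1))  # World
```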

In the Test tool, the default is for the "don't report errors if there are other errors in the workflow" box to be checked. I think the default should be for it to be unchecked - it is very aggravating to think that you have found the problem with the workflow only for another to pop up.


Currently - in workflows which make active use of dynamic queries - if the record set is empty then we end up with errors such as "a record was created with no fields". This creates issues when a dynamic query pumps rows out to a macro output, or where dynamic queries go into a join.

Could we change the result of the dynamic query so that if it returns an empty record set it still has columns but no rows and therefore doesn't cause errors in workflows?

Let me know if you need a worked example.

Thank you

Sean
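The requested behavior, an empty result that still knows its columns, can be illustrated with a Python sketch. `ResultSet` and `inner_join` are hypothetical stand-ins for a dynamic query output and a Join tool; the point is that downstream steps can still build their schema when zero rows arrive.

```python
from dataclasses import dataclass, field


@dataclass
class ResultSet:
    """A query result that carries its column list even when it has no rows."""
    columns: list
    rows: list = field(default_factory=list)


def inner_join(left, right, key):
    """Join two result sets on key; an empty side yields an empty result
    with the combined columns instead of an error."""
    right_by_key = {}
    for row in right.rows:
        right_by_key.setdefault(row[key], []).append(row)
    columns = left.columns + [c for c in right.columns if c not in left.columns]
    rows = [
        {**left_row, **right_row}
        for left_row in left.rows
        for right_row in right_by_key.get(left_row[key], [])
    ]
    return ResultSet(columns, rows)


orders = ResultSet(["OrderID", "Amount"], [{"OrderID": 1, "Amount": 10.0}])
shipments = ResultSet(["OrderID", "ShipDate"])  # dynamic query returned no rows
joined = inner_join(orders, shipments, "OrderID")
print(joined.columns, joined.rows)  # ['OrderID', 'Amount', 'ShipDate'] []
```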


Currently the R predictive tools are single-threaded, which means that to use multi-threading we need to separately download a third-party R distribution such as Microsoft R Client.

Given that this is a better option, shouldn't it be installed as the default upon installation?


