Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
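Until the tool offers these options, two of the points above can be worked around before the images reach it. This is only a minimal sketch in plain Python, not something the Image Recognition Tool provides: `balanced_sample` and `gray_to_rgb` are hypothetical helper names, and the file names are made up for illustration.

```python
import random
from collections import defaultdict

def balanced_sample(items, seed=0):
    """Downsample so that every label keeps the same number of images.

    `items` is a list of (image_path, label) pairs.
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    # The rarest label sets the quota for all labels.
    quota = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label, paths in sorted(by_label.items()):
        for path in rng.sample(paths, quota):
            balanced.append((path, label))
    return balanced

def gray_to_rgb(rows):
    """Turn a grayscale image (rows of 0-255 ints) into RGB triples
    by copying each intensity into the three channels."""
    return [[(v, v, v) for v in row] for row in rows]

# Hypothetical data: 5 "cat" images and 2 "dog" images.
images = [(f"cat_{i}.png", "cat") for i in range(5)]
images += [(f"dog_{i}.png", "dog") for i in range(2)]
balanced = balanced_sample(images)

rgb = gray_to_rgb([[0, 128], [255, 64]])
```

Downsampling to the rarest label is the simplest way to equalise counts; it throws data away, so oversampling may be preferable for small datasets.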

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be great to have the below functionality in Alteryx.

Once a workflow is built in Alteryx, a button click could generate SQL code that can be run on a specific database platform, such as SQL Server, from external editors such as SQL Server Management Studio. Thanks.

Hi team,

There are some things that we would like to do with the download tool that currently are not possible:

1. Use client-side certificates to sign requests. This is a requirement for us in a project where we are interacting with the API of our customer's financial system. They provide us with a certificate, and it is used to sign our requests along with other authentication. Currently we have to use the external command tool to execute a PowerShell script using Invoke-RestMethod to do this interaction. I would much prefer not to have more tools in the chain.

Client certificates are described in the TLS 1.0 specification: https://tools.ietf.org/html/rfc2246 (page 43), and I believe them to be supported by cURL.

2. Multipart form-data. We have a number of workflows where we need to send multipart form data as part of a POST request. As this is not supported by the Download Tool, we have again used the external command tool to execute Invoke-RestMethod or Invoke-WebRequest in PowerShell. I don't know whether modifying the Download Tool would be the best thing here, versus having either a dedicated tool for multipart form data or an HTTP POST specific tool that is able to handle multipart form data. What I envisage is something like the Formula tool, with the ability to add an arbitrary number of cells, where we could use a formula to directly output a value from a column, synthesise a new value, or enter a static value. The tool would then compose these parts with boundaries between them and calculate the Content-Length to add to the HTTP request.
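To make the second request concrete, here is a minimal sketch of what such a tool would have to do: join the parts with a boundary and compute the Content-Length. It uses only the Python standard library and handles simple text fields; `encode_multipart` and the field names are hypothetical, not part of any Alteryx tool.

```python
import uuid

def encode_multipart(fields, boundary=None):
    """Build a multipart/form-data body from a dict of name -> text value.

    Returns (body_bytes, headers). File parts are not handled in this sketch.
    """
    boundary = boundary or uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines.append(f"--{boundary}".encode())
        lines.append(f'Content-Disposition: form-data; name="{name}"'.encode())
        lines.append(b"")  # blank line separates part headers from the value
        lines.append(str(value).encode("utf-8"))
    lines.append(f"--{boundary}--".encode())  # closing boundary
    lines.append(b"")
    body = b"\r\n".join(lines)
    headers = {
        "Content-Type": f"multipart/form-data; boundary={boundary}",
        "Content-Length": str(len(body)),
    }
    return body, headers

# A fixed boundary is used here only to make the result easy to inspect.
body, headers = encode_multipart(
    {"invoice_id": "42", "note": "static value"}, boundary="XYZ"
)
```

The body and headers can then be passed to any HTTP client; the boundary should normally be random so it cannot collide with the field contents.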


Problem:

The Dynamic Input tool depends on a template file to correlate the input data before processing it. A mismatch in the schema throws an error, causing a delay while troubleshooting.

Solution:

It would be great if this tool were enhanced so that users could input or edit text without any template file (similar to the Text Input editor in the Macro Input tool).

This has probably been mentioned before, but in case it hasn't....

Right now, if the dynamic input tool skips a file (which it often does!) it just appears as a warning and continues processing. Whilst it is still useful to continue processing, could an option be built into the tool to select an 'error if files are skipped' behaviour?

Right now it is easy to miss that this is happening, and in production or on Server you may want the process to be stopped.
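The requested behaviour can be sketched outside Alteryx as follows. This is only an illustration in plain Python: `read_matching`, `SkippedFilesError` and the `error_if_skipped` flag are hypothetical names, and the CSV text stands in for files on disk.

```python
import csv
import io

class SkippedFilesError(Exception):
    """Raised when strict mode is on and at least one file was skipped."""

def read_matching(sources, expected_fields, error_if_skipped=False):
    """Read CSV sources whose header matches `expected_fields`.

    `sources` maps a file name to its CSV text.
    Returns (rows, skipped_names).
    """
    rows, skipped = [], []
    for name, text in sources.items():
        reader = csv.DictReader(io.StringIO(text))
        if reader.fieldnames != expected_fields:
            skipped.append(name)  # this is the case that is only a warning today
            continue
        rows.extend(reader)
    if skipped and error_if_skipped:
        raise SkippedFilesError("Skipped files: " + ", ".join(skipped))
    return rows, skipped

sources = {
    "a.csv": "id,amount\n1,10\n",
    "b.csv": "id,total\n2,20\n",  # schema differs, so it is skipped
}
rows, skipped = read_matching(sources, ["id", "amount"])
```

With `error_if_skipped=True` the same call raises instead of returning, which is the option a production run would want.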

Thanks,

Andy

This has probably been mentioned before, but in case it hasn't....

The dynamic input tool is useful for bringing in multiple files / tabs, but quickly stops being fit for purpose if schemas / fields differ even slightly. The common solution is to then use a dynamic input tool inside a batch macro and set this macro to 'Auto Configure by Name', so that it waits for all files to be run and then can output knowing what it has received.

It's a pain to create these batch macros for relatively straightforward and regular processes - would it be possible to have this 'Auto Configure by Name' as an option directly in the dynamic input tool, relieving the need for a batch macro?
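For readers unfamiliar with the idea, 'Auto Configure by Name' amounts to stacking the inputs and aligning columns by field name once all schemas are known. A minimal sketch in plain Python, assuming CSV text as input (`union_by_name` is a hypothetical name, not an Alteryx function):

```python
import csv
import io

def union_by_name(sources):
    """Stack CSV sources, aligning columns by field name.

    Fields missing from a source are filled with None.
    Returns (field_names, rows).
    """
    all_rows, fields = [], []
    for text in sources.values():
        reader = csv.DictReader(io.StringIO(text))
        for name in reader.fieldnames or []:
            if name not in fields:
                fields.append(name)  # keep first-seen order
        all_rows.extend(reader)
    # The full field list is only known after every source has been read.
    return fields, [{f: row.get(f) for f in fields} for row in all_rows]

sources = {
    "jan.csv": "id,amount\n1,10\n",
    "feb.csv": "id,amount,region\n2,20,EU\n",
}
fields, rows = union_by_name(sources)
```

Note that the output schema cannot be emitted until the last file is read, which is why the batch macro has to wait for all files before it outputs anything.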

Thanks,

Andy

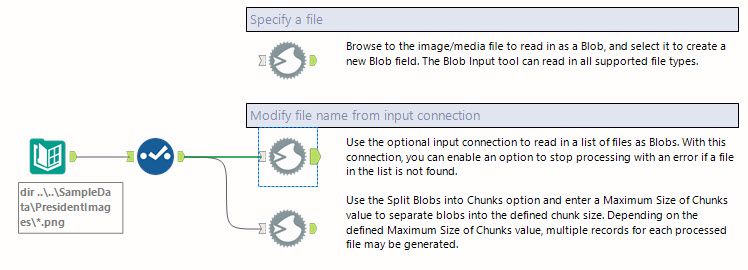

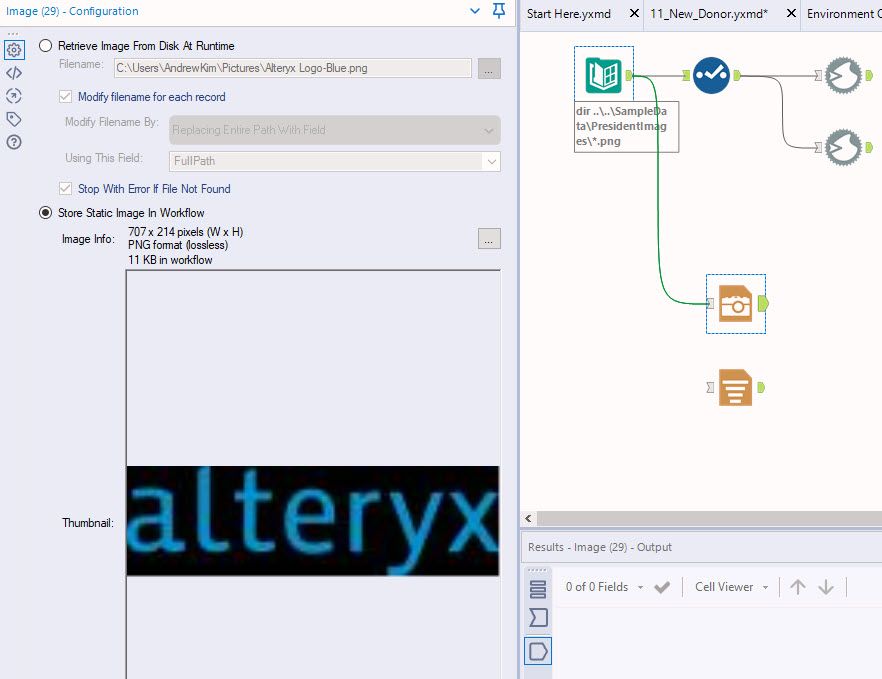

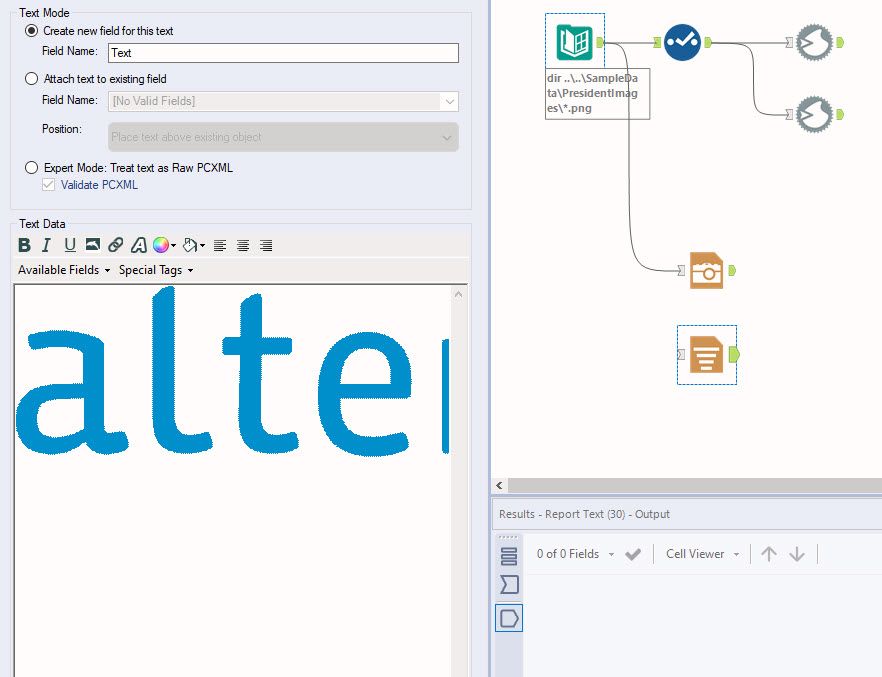

With the new Intelligence Suite there is much higher use of blob files, and we would like to be able to input them as a regular input instead of having to use non-standard tools like Image, Report Text, or a combination of Directory/Blob or Input/Download to pull in images, etc. I would like to see the standard Input tool capable of bringing in blob files as well.

The R tool has AlteryxProgress() and AlteryxMessage() functions for generating notifications in the Results window https://help.alteryx.com/current/designer/r-tool, however the Python tool does not. Since I'm writing more Python code than R code I'd like to have similar functionality available in the Python tool, e.g. an Alteryx.Progress() function and an Alteryx.Message() function.

Jonathan


Please upgrade the "curl.exe" that is packaged with Designer from 7.15 to 7.55 or greater to allow for the -k flag. Also, please allow the -k functionality in the Alteryx Download tool.

-k, --insecure

(TLS) By default, every SSL connection curl makes is verified to be secure. This option allows curl to proceed and operate even for server connections otherwise considered insecure.

The server connection is verified by making sure the server's certificate contains the right name and verifies successfully using the cert store.
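For comparison, this is roughly what the -k behaviour corresponds to in Python's standard library. It is a sketch only (`make_context` is a hypothetical helper), and disabling verification should be limited to cases where the risk is understood.

```python
import ssl

def make_context(insecure=False):
    """Return an SSL context; with insecure=True it skips certificate
    and host name verification, similar in spirit to curl -k."""
    ctx = ssl.create_default_context()
    if insecure:
        # check_hostname must be turned off before verify_mode can be CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx

strict_ctx = make_context()
insecure_ctx = make_context(insecure=True)
```

The resulting context can be passed to `urllib.request.urlopen(url, context=insecure_ctx)`.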

Regards,

John Colgan


Figuring out who is using custom macros and/or governing the macroverse is not an easy task currently.

I have started shipping Alteryx logs to Splunk to see what could be learned. One thing that I would love to be able to do is understand which workflows are using a particular macro, or any custom macros for that matter. As it stands right now, I do not believe there is a simple way to do this by parsing the log entries. If, instead of just saying 'Tool Id 420', it said 'Tool Id 420 [Macro Name]' that would be very helpful. And it would be even *better* if the logging could flag out of the box macros vs custom macros. You could have a system level setting to include/exclude macro names.

Thanks for listening.

brian

When we try to call an external website from the Alteryx Designer Download tool, our company proxy server fails the authentication because Alteryx uses basic login/password authentication. This has happened with multiple applications that need to interact with external partners. We would like to request an enhancement to enable Alteryx to authenticate using Kerberos or NTLM.

Because Alteryx Designer uses basic authentication (not our standards, Kerberos and NTLM), it fails our proxy authentication. Our network security support decided that we would use the user agent to identify requests coming from Alteryx Designer and let them through. Then we realized that Alteryx Designer also does not set the user agent for HTTPS requests. We are requesting that Alteryx set the user agent value for the Download tool's HTTPS requests.
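As an illustration of the second request, setting an identifiable User-Agent on a request is a one-line change in most HTTP clients. A minimal sketch with Python's standard library follows; the URL and the user agent string are made-up placeholders, not values Alteryx uses.

```python
import urllib.request

def build_request(url, user_agent):
    """Create a request that identifies the calling application to the proxy."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})

# Hypothetical values for illustration only.
req = build_request("https://example.com/api", "AlteryxDesigner-Download/hypothetical")

# urllib stores header names capitalized, hence "User-agent" when reading back.
agent = req.get_header("User-agent")
```

A proxy rule can then match on that string, which is the workaround the network security team proposed.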


Please enhance the Dynamic Select tool to allow data types to be changed dynamically too. The change could be driven by a formula or updated by an action in a macro. If you've ever wanted to mass change or take precision action in a macro, you're forced to use a Multi-Field Formula. It would be rather helpful and appreciated.

Cheers,

Mark


While Alteryx allows for a proxy username and password in the settings, these are not passed properly to an NTLM proxy. Support for NTLM authentication would be incredibly useful for a number of corporations who utilize this firewall setup.

We currently have to either download via Python or cURL through batch commands called by Alteryx. Since Alteryx uses a cURL back-end, this should be a fairly simple addition to the existing download tool by allowing a selection of proxy server, port, and authentication method in addition to the proxy username and password. This could be done either in the tool itself or in User Settings.

The Download tool is great and easy to use. However, if there is a connection problem with a request, the workflow errors out. Having an option not to error out, the ability to skip failed records, and retries for records that failed would be A LIFE CHANGER. Currently I use a Python tool to create multi-threaded requests, which has proven to be time consuming.
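The behaviour asked for here (retry, then skip and report failures instead of stopping) can be sketched in a few lines. This is a single-threaded illustration in plain Python; `fetch_all` and the `flaky` stand-in are hypothetical, and a real version would call an HTTP client inside `fetch`.

```python
import time

def fetch_all(records, fetch, retries=3, delay=0.0):
    """Call fetch(record) for each record, retrying on connection errors.

    Returns (results, failed) rather than raising on the first error.
    """
    results, failed = [], []
    for record in records:
        for attempt in range(1, retries + 1):
            try:
                results.append((record, fetch(record)))
                break
            except OSError as exc:  # connection errors and timeouts derive from OSError
                if attempt == retries:
                    failed.append((record, str(exc)))  # give up and skip this record
                else:
                    time.sleep(delay * attempt)  # simple linear back-off

    return results, failed

# Stand-in for a real download: "slow" fails once, "bad" always fails.
calls = {}
def flaky(url):
    calls[url] = calls.get(url, 0) + 1
    if url == "bad" or (url == "slow" and calls[url] < 2):
        raise ConnectionError("connection problem")
    return f"ok:{url}"

results, failed = fetch_all(["good", "slow", "bad"], flaky)
```

The failed records come back as a separate list, so they can be routed to a second output or retried later instead of failing the whole run.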


Passing Access and Secret Keys to connect to AWS S3 poses a security risk. It would be great if the Amazon Redshift Bulk Connection tool was enhanced to include an authentication option to use a Native IM group instead of keys.


The Alteryx.Flexnetoperations.com license management site needs major work.

On the View Licenses page it shows all licenses going back several years. A basic need is to show only licenses which haven't expired, but that is not an option. You cannot even sort on the expiration column, while you can sort on most other columns.

The simplest need is to see a list of my current active license users, but I don't see a way to do that.

I tried an "Advanced Search" and chose expiration date after 2019-10-29 and none of my licenses which expire in 2020 appear - I get a blank list.

Similarly on the administer machines page you cannot filter to hide expired licenses or even on the licenses column (which doesn't sort either).

The help link on the page doesn't bring you to help specific to that page but the general activation help front page. After several clicks I found this page:

But the help is incomplete (doesn't list Machine types or the difference between Active and Inactive)

Also, there is no export capability - copy and pasting into Excel is a formatting headache as it brings in check-boxes.

Lots of room for improvement here.

Cheers,

Bob

P.S. I understand that work is being done on this, but an ETA would be greatly appreciated.


I still see companies expecting BI professionals to write SQL queries manually, despite Alteryx offering a complete solution. The idea is to spread the value-add of Alteryx in the Indian market and among global companies.


Can we have some monitoring information added to the summary of each tool during/after a workflow's run, so we can determine how much memory is being used per component and per workflow run, not just the default minimum? This would help us identify where in our workflow we can improve and/or help us adjust the default memory usage for sort/join tools on a per-workflow basis.


If we have similar workflows saved in the Gallery and we need to make slight changes to all of them, it would be helpful if we could open all of those workflows at once, either by multiselect or by using a checkbox next to each workflow we wish to open.


When the Python Tool operates, it seems to always ingest all the data before processing any of it (i.e. no batch processing). Python can handle this type of functionality with generators. Can we update the tool so that it may do some preprocessing (like imports and data prep) and allow a defined generator function to be called repeatedly from a separate input handle, providing batch data frames on output for more parallel-like processing of data?

The Python Tool could be updated as such:

- Multi-Input - Same functionality as now, and also allow this data to be used for preprocessing and setting up the Python functions and a single batch function.

- Data Input - Ingests data in batches (as most other tools operate) where each batch passes in a dataframe (in this case, a subset of processed entries) into an existing Python function (with a name that is in globals()), and returns another dataframe with that desired output. This can give the option of adding/removing rows as necessary to a subset of the data.

- Data Output - Partial set of data after data processing to allow tools further in the chain to process in parallel.

- "On Complete" Multi-Outputs - Same functionality as now, to pass process-complete data to the next tool once all data ingested has been processed. Perhaps give the option to pass the complete set from Data Output.

A simple use-case, if a user wanted to use only the Python Tool:

Let's say a user wants to get all URLs from every post in a thread (containing millions of posts) that are in blacklisted domains.

- Data prep that sends the list of blacklisted domains into the Python Tool's Multi-Input handle, and that data is transformed and stored in a set within the Python tool once.

- A series of posts (strings) are sent in batches (let's say ~10000) to the Data Input of the Python Tool. The tool calls a defined Python function that extracts all the URLs, and filters those in the blacklist.

- That data is then transformed into a DataFrame which is then sent to the Data Output of the Python Tool, and only contains results corresponding to the small batch of posts that were ingested. Alteryx can also use this to track progress during execution.

- Once all posts have been processed, one of the Python Tool's Multi-Outputs can return a total count of URLs found that were NOT in the blacklist (sure this can be a part of the Data Output, but just for the sake of this example). Could also be used to trigger "on-complete events."

I know I used the term "generators" above, and the design could probably be simplified to instead call an Alteryx Python function that yields from a function to await input from the next batch to use actual Python generators. However, I feel my initial approach could be thought of as a simpler process since generators are more of an intermediate functionality.
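The use case above can be sketched with an ordinary Python generator. This is plain Python rather than anything the Python Tool exposes today: `batches`, `blacklisted_urls` and the example domains are hypothetical, lists stand in for DataFrames, and the URL pattern is deliberately simple.

```python
import re
from urllib.parse import urlparse

# Simple pattern for illustration; real-world URL extraction is messier.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")

def batches(posts, size):
    """Yield successive lists of at most `size` posts."""
    batch = []
    for post in posts:
        batch.append(post)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller, batch

def blacklisted_urls(batch, blacklist):
    """Return the URLs in this batch whose host is in `blacklist`."""
    found = []
    for post in batch:
        for url in URL_PATTERN.findall(post):
            if urlparse(url).hostname in blacklist:
                found.append(url)
    return found

# Prepared once (the "Multi-Input" step), then reused for every batch.
blacklist = {"bad.example"}
posts = [
    "see http://bad.example/a",
    "fine https://ok.example/b",
    "also https://bad.example/c and text",
]
# Each element corresponds to one partial "Data Output" result.
per_batch = [blacklisted_urls(b, blacklist) for b in batches(posts, 2)]
```

Because `batches` is lazy, only one batch is held in memory at a time, and each partial result could be handed downstream before the next batch is read.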

I hope this makes sense and is elaborate enough to pursue. Thanks for the consideration!
