Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

I would like to be able to limit the data being read from the source based on volume, such as 10 GB or 5 GB. This would help with POCs, where we can process a portion of the dataset rather than the entire dataset. It would have many other use cases as well.

It would be a huge time saver if the Select tool had an option to invert the selection: deselect the fields currently selected and select the fields not currently selected.

Yes, I know, it's weird to have a situation where a decision tree decides that no branches should be created, but it happened, and caused great confusion, panic, and delay among my students.

v1.1 of the Decision Tree tool does a hard stop and outputs nothing when this happens, not even the successfully created model object, while v1.0 of the tool still creates the model ("O") and the report ("R") ... just not the "I" (interactive report). Using the v1.0 version of the tool, I traced the problem down to this call:

dt = renderTree(the.model, tooltipParams = tooltipParams)

Where `renderTree` is part of the `AlteryxRviz` library.

I dug deeper and printed a traceback.

    9: stop("dim(X) must have a positive length")
    8: apply(prob, 1, max) at <tmp>#5
    7: getConfidence(frame)
    6: eval(expr, envir, enclos)
    5: eval(substitute(list(...)), `_data`, parent.frame())
    4: transform.data.frame(vertices, predicted = attr(fit, "ylevels")[frame$yval],
           support = frame$yval2[, "nodeprob"], confidence = getConfidence(frame),
           probs = getProb(frame), counts = getCount(frame))
    3: transform(vertices, predicted = attr(fit, "ylevels")[frame$yval],
           support = frame$yval2[, "nodeprob"], confidence = getConfidence(frame),
           probs = getProb(frame), counts = getCount(frame))
    2: getVertices(fit, colpal)
    1: renderTree(the.model)

The problem is that `getConfidence` pulls `prob` from the `frame` given to it, and in the case of a model with no branches, `prob` is a list. `dim(<a list>)` returns NULL, so `apply` stops. Ergo explosion.
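The failure mode described above can be reproduced outside Alteryx in a few lines of R. This is a minimal sketch and not the AlteryxRviz source: `get_confidence_safe` is a hypothetical stand-in for `getConfidence`, the sample probabilities are made up, and the guard shown is only one possible fix.

```r
# Hypothetical stand-in for the confidence helper; NOT the AlteryxRviz code.
get_confidence_safe <- function(prob) {
  # A root-only tree can hand over `prob` without dimensions (dim() is NULL),
  # so coerce it to a one-row matrix before calling apply().
  if (is.null(dim(prob))) {
    prob <- matrix(unlist(prob), nrow = 1)
  }
  apply(prob, 1, max)
}

# Multi-node case: one row of class probabilities per node (made-up values).
multi <- matrix(c(0.7, 0.3,
                  0.2, 0.8), nrow = 2, byrow = TRUE)
get_confidence_safe(multi)

# Root-only case: a bare list. Calling apply(single, 1, max) directly stops
# with "dim(X) must have a positive length"; the guarded version does not.
single <- list(0.62, 0.38)
get_confidence_safe(single)
```

With a guard of this kind the interactive report could still be rendered for a single-node tree instead of aborting the whole tool.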

A toy dataset that triggers the error: a sample from the Titanic Kaggle competition (in which my students are competing). Predict "Survived" by "Pclass".

Dear Team

When a heavy workflow is in the development phase, say we are working on its last section, every run starts again from the Input tool. Instead, we could have a checkpoint tool: the data flow would be fixed up to the checkpoint, and running the workflow would start from that checkpoint as its input.

This would reduce my development time a lot. Please advise.

Thanks in advance.

Regards,

Gowtham Raja S

+91 9787585961

The error message is:

Error: Cross Validation (58): Tool #4: Error in tab + laplace : non-numeric argument to binary operator

This is odd, because I see that there is special code that handles Naive Bayes models. It seems that the `model$laplace` parameter is _not_ null by the time it hits `update`. I'm not sure yet which line is triggering the error.

The CrossValidation tool in Alteryx requires that if a union of models is passed in, then all models to be compared must be induced on the same set of predictors. Why is that necessary -- isn't it only comparing prediction performance for the plots, but doing predictions separately? The tool runs fine when I remove that requirement. Theoretically, model performance can be compared using nested cross-validation to choose a set of predictors in a deeper level, and then to assess the model in an upper level. So I don't immediately see an argument for enforcing this requirement.

This is the code in question:

    if (!areIdentical(mvars1, mvars2)){
      errorMsg <- paste("Models", modelNames[i] , "and", modelNames[i + 1],
                        "were created using different predictor variables.")
      stopMsg <- "Please ensure all models were created using the same predictors."
    }
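One way the check above could be relaxed is to warn rather than stop. The following is only a sketch under assumptions: `check_predictors` and its `strict` flag are hypothetical names, and base R's `setequal` is used in place of the tool's own `areIdentical`, whose exact semantics are not shown in the excerpt.

```r
# Hypothetical relaxed version of the predictor check; not the tool's code.
# `mvars1` / `mvars2` mirror the variable names in the excerpt above.
check_predictors <- function(mvars1, mvars2, name1, name2, strict = FALSE) {
  # setequal() ignores ordering; this is an assumption about what
  # "identical predictors" should mean here.
  same <- setequal(mvars1, mvars2)
  if (!same) {
    msg <- paste("Models", name1, "and", name2,
                 "were created using different predictor variables.")
    if (strict) stop(msg, call. = FALSE)  # original hard-stop behaviour
    warning(msg, call. = FALSE)           # relaxed: report and carry on
  }
  invisible(same)
}

# Different predictor sets: emits a warning and returns FALSE invisibly.
check_predictors(c("Pclass", "Sex"), c("Pclass"), "Tree", "Logit")
```

Keeping the `strict` switch would let anyone who relies on the current behaviour retain it.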

As an aside, why does the CV tool still require Logistic Regression v1.0 instead of v1.1?

And please please please can we get the Model Comparison tool built in to Alteryx, and upgraded to accept v1.1 logistic regression and other things that don't pass `the.formula`. Essential for teaching predictive analytics using Alteryx.

We are big fans of the In-Database Tools and use them A LOT to speed up workflows that are dealing with large record counts, joins etc.

This is all fine, within the constraints of the database language, but an annoyance is that the workflow is harder to read, and looks messy and complicated.

A potential solution would be to keep the bottom half of the icon blue as it is, but have the top half show the original palette color for that tool,

i.e. Connect In-DB - Green/Dark Blue

Filter In-DB - Light Blue/Dark Blue

Join In-DB - Purple/Dark Blue

etc.

In-DB workflows would then look as cool as they are!

Thanks

dan

This would allow for a couple of things:

Set fiscal year for datasource to a new default.

Allow for specific filters on the .tde (We use this for row level security with our datasources).

Thanks

The Multi-Field Binning tool, when set to equal records, assigns any NULL values to an additional bin.

For example, if 10 tiles are set, a bin numbered 11 is created for the NULL values.

However, the NULLs are not removed before the records are distributed equally across the remaining bins (1-10). Assuming the NULLs should be ignored (when the rest of the values are numeric), the binning of the remaining items is wrong.

The suggestion is to add a checkbox in the tool to choose whether NULL values are binned (the current setup) or ignored (removed completely before bin allocations are made).
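The "ignored" behaviour requested above can be sketched in R, with `NA` standing in for NULL. This is an illustration of the idea only, not how the Multi-Field Binning tool is implemented; `tile_ignoring_na` is a hypothetical helper and the sample values are made up.

```r
# Hypothetical helper: equal-records tiles computed over non-missing values only.
tile_ignoring_na <- function(x, n_tiles) {
  tiles <- rep(NA_integer_, length(x))   # missing values stay unbinned
  ok <- !is.na(x)
  n_ok <- sum(ok)
  if (n_ok > 0) {
    # Rank only the non-missing values, then cut the ranks into equal groups.
    r <- rank(x[ok], ties.method = "first")
    tiles[ok] <- as.integer(ceiling(r * n_tiles / n_ok))
  }
  tiles
}

x <- c(10, NA, 30, 20, NA, 40)
tile_ignoring_na(x, 2)
```

Because the tile boundaries are computed from the count of non-missing records, the four numeric values split evenly into two tiles and the two missing values are left without a bin.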

I've run into an issue where I'm using an Input (or Dynamic Input) tool inside a macro (attached) which is being updated via a File Browse tool. I work at a large company with several data sources, so we use a lot of Shared (Gallery) Connections. The issue is that whenever I try to enter any sort of aliased connection (Gallery or otherwise), it reverts to the default connection I have in the Input or Dynamic Input tool. It does not act this way if I use a manually typed connection string.

Initially, I thought this was a bug, so I brought it to Support's attention. They told me that this was the default action of the tool. So I'm suggesting that the default action of the Input and Dynamic Input tools be changed so that they can be overridden by aliased connections supplied through File Browse and Action tools. The simplest way to implement this would probably be to translate the alias before pushing it to the macro.

The chart tool is really nice to create quick graphics efficiently, especially when using a batch macro, but the biggest problem I have with it is the inability to replace the legend icon (the squiggle line) with just a square or circle to represent the color of the line. The squiggly line is confusing and I think the legend would look crisper with a solid square, or circle, or even a customized icon!

Thank You!!!!

Hey Alteryx!

Here's one. I don't care for the tabs at the top showing each workflow. They are not in order by open date, and it's hard to tell whether the tab all the way on the right is the final workflow or not... They are hard to X out (especially the last one on the right, which seems to have a semi-hidden X). Depending on your monitor layout/resolution, you can only see about 10 workflows at a time in the GUI. If you want to see more, you have to go into the workflow dropdown caret, which AFAIK also doesn't seem to be in any particular order, especially when you open up several flows with the same name. On a side note - what happened, Alteryx? They USED to be in order by open date going across at the top AND in the workflow dropdown... That was useful.

So let's get rid of these tabs. It's so Windows 95.

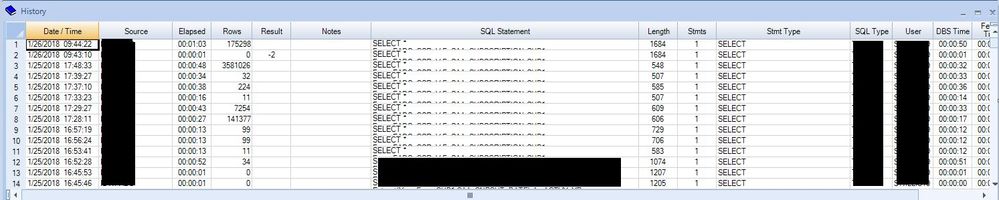

Instead create an interactive workflow history table (window) similar to what exists in TOAD, sql assist and other database tools (see image below). It's a table in the view but each row would reflect a workflow (formerly a tab). Top row is most recent workflow and moving down the table shows history of workflows.

You could hover over a row to see details pop up for that workflow, OR you could click a row (double click to run?) and the workflow becomes visible in the active canvas, with the overview and results windows updating accordingly. Maybe we could even have more than one active window per session (split vert/horz)?

Additional benefits/functionality:

1. Slightly more screen real estate which we know you are always looking for and asked about 🙂

2. This could also be an autosave feature. When Alteryx crashes - and it does - when you reopen designer all your history will be there - no loss of productivity! Also high on your list.

3. Have an additional search function where we can search for tool names, comments, annotations, workflow/app/macro names, ODBC connections, specific formulas or keywords, and then see which history rows match our search criteria (rather than searching a directory with the Notepad++ 'Find in Files' feature)

4. It's not that much dev time since workflow history is already available in some buried log so why not show some of this metadata to the user in the gui?

Thanks,

Simon

Some of the predictive tools put out a "Score" field when output is run through the scoring tool, and some put out a "Score_1" and/or "Score_0". Since I frequently reuse the same workflow template for different predictive model types, it would be nice if they were consistent so that I wouldn't have to crash the workflow the first time through to get the input field names correct for downstream tools (e.g., Sort). Thank you

The current SharePoint connector works fine until you encounter SharePoint lists hosted in MS Groups, which appear to use Azure Active Directory and work quite differently from the original SharePoint domain. An easy way to tell is if your SharePoint lists have a domain like https://groups.companyname.com instead of https://companyname.sharepoint.com.

Currently the workaround is to go through the MS Groups API, which is complicated and requires extra IT support for access and other credentials.

Hello --

I have a process where I send an email to users before updating a spreadsheet that is now produced by an Alteryx workflow. Currently, I do this outside of Alteryx because if I choose to use Events -- it will send an email and immediately continue on with the rest of the workflow.

What would be ideal is to have an option to Wait for 10 minutes (or 600 seconds) before continuing on with the rest of the workflow -- assuming the email is sent before the workflow runs.

Thanks,

Seth

I have long and large workflows, IMO, that are getting difficult to follow. I'd like the ability to highlight the joins and set specific colors, or at the very least highlight them and toggle the highlights on/off. I'd also like to be able to move my joins so they are not curving all over the canvas.

I've used tools like BRIO/Hyperion with a wide range of control over the output to MS Excel. The Jooleobject allowed me to control MS Outlook, Excel, Word, etc. The goal is to run a scheduler within Alteryx every week to blend data, insert the data into pre-formatted and renamed Excel templates, copy those files to a network shared drive and finally send a formatted email to my users. If I can get past copy/paste routines, my job would be much more efficient. Thx

Some of my workflows require about 2 hours to run. I would like a stopwatch feature on the workflow UI or application once a run has started. I would also like to get an email when it completes. Thx

Hi,

I think it would be great if the run time of a workflow could be displayed by tool or container. This would make refining the workflow at completion a lot easier and also help with thinking of better solutions. Even cooler would be some kind of speed heat map.

Thanks

Alteryx has a 3-hour demo session on CloudShare, which is very useful for quick demos...

How about having the most up-to-date version of Alteryx in the demo as a starter?

- Unfortunately it's 11.5 right now... Not 11.7!!!

- I'm demoing the older, slower version to clients.
