Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?
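To illustrate the second point, here is a minimal sketch of a balanced split in plain Python. It is not how the Image Recognition tool works internally; the function name `balanced_split`, the `(path, label)` pair format, and the 80/20 default are assumptions made for the example. Every label is downsampled to the size of the rarest label before splitting, so each label contributes the same number of images.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same number of images.

    Hypothetical helper for illustration only.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)
    # Size of the rarest label: every label is downsampled to this count.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, test = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)
        train += [(p, label) for p in chosen[:n_train]]
        test += [(p, label) for p in chosen[n_train:]]
    return train, test
```

With 10 "cat" images and 5 "dog" images, this keeps 5 of each and puts 4 per label in training and 1 per label in test.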

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

It would be really nice if we could save our own custom color palette when coloring tool containers and comments.

I use colors to define the purpose of my tool containers and it would be much easier if I could select a labeled, reusable color.

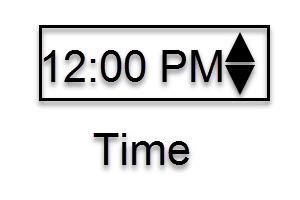

I would like to see a time interface tool similar to the Date and Numeric Up Down tools. I am working on some macros where the user can select the time they would like to use a filter for the data.

Example: I want all data loaded after 5:00 PM because it's late and needs to be removed.

Example 2: I want to create an app where the user can select what time range they would like to see records for (business hours, during their shift, etc)

Currently this requires 2-3 Numeric Up Downs, or a Text Box with directions for the user on how to format the field plus Error tools to prevent bad entries. It could even be UTC time.
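As a rough sketch of the validation and filtering a dedicated time tool would take care of, here is the logic in Python. The function names `parse_time` and `in_time_range` and the 24-hour "HH:MM" input format are assumptions for the example, not part of any Alteryx tool.

```python
from datetime import datetime, time


def parse_time(text):
    """Return a datetime.time for 'HH:MM' (24-hour) input, or None if it is invalid."""
    try:
        return datetime.strptime(text.strip(), "%H:%M").time()
    except ValueError:
        return None


def in_time_range(value, start, end):
    """True if value lies between start and end, inclusive.

    Also handles ranges that wrap past midnight (for example a night shift).
    """
    if start <= end:
        return start <= value <= end
    return value >= start or value <= end


# Example 1 above: flag records loaded after 5:00 PM.
cutoff = parse_time("17:00")
is_late = in_time_range(time(18, 30), cutoff, time(23, 59))
```

A bad entry such as "25:00" comes back as `None`, which is the case the Error tools currently have to catch.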

I've seen several posts and questions concerning NULL dates. Is 09/31/2010 a valid date? I know that 02/29/2006 isn't valid and that 02/00/2006 isn't either, but I really don't like finding out about these in conversion warning messages.

I might suggest a function that returns True or False on the date check and let the user configure appropriate rules to rethink the attempted date prior to committing the field to the date data type.
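For illustration, the kind of True/False check I have in mind looks like this in Python. The name `is_valid_date` and the default MM/DD/YYYY format are assumptions for the sketch; it is not an existing Alteryx function.

```python
from datetime import datetime


def is_valid_date(text, fmt="%m/%d/%Y"):
    """Return True if text is a real calendar date in the given format, else False."""
    try:
        datetime.strptime(text, fmt)
        return True
    except ValueError:
        # Raised for impossible dates (Sept 31, Feb 29 in a non-leap year, day 00)
        # as well as for text that does not match the format at all.
        return False
```

The user could then branch on the result and apply their own rule (null, first of the month, last valid day, and so on) before converting the field to a date type.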

Cheers,

Mark

Currently, when a Unique tool is used and a field is removed upstream, the workflow fails to move forward. If you have one or two unique fields being used then it is no big deal, but in a very complex workflow you have to click into each one of those tools in order to update it. This can be very problematic and costs a lot of time following all the branches that are connected after the first Unique tool. My suggestion is to make this a warning instead of a failure, or to have an option to select fail or warning, the way the Union tool is set up. This way people can decide how they want this tool to react when fields are removed.

The idea behind encrypting or locking a workflow is good for users to maintain the workflow as designed.

However, when a user reaches a level of maturity equal to or greater than that of the builder, or when changes are required, the current practice is to keep both a locked and an unlocked version of the workflow so that it can be changed in the future.

It would be much simpler if we could lock and unlock workflows with a password. Users could then maintain and keep the passwords so that they can continue working with the workflow.

Not everybody is on Server yet so this feature is very helpful for control before Server migration. Otherwise it’s just password protecting a folder containing the workflow package, then re-locking a new save file each time a change is made or when someone new takes over on prem.

I love that Alteryx lends itself to good workflow documentation, but I'd really like to be able to add a bit of basic formatting within my comment boxes. I tend to have one large (read: verbose) box at the top/beginning of the workflow describing the purpose of the workflow and quirks of the datasource to watch out for, and it would be easier to read these if I had some simple options like Bold, Italic, Underline, numbered list, bullet list. You know, the sorts of things you can do in basic HTML email? Those. I want them!

When making any type of macro, it's important to test the functionality of the macro via a debug. This is accomplished successfully with normal tools, however there's a bug that will not allow the user to debug In-DB macros that use either of the following standard Alteryx tools:

- Macro Input In-DB

- Macro Output In-DB

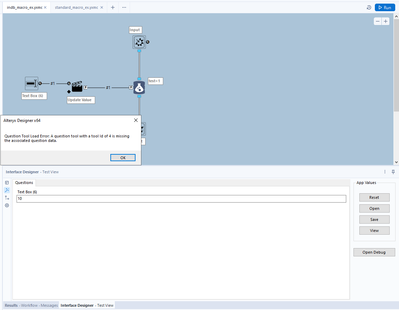

If either of these tools is included in the macro you are building, an error message appears and you cannot open a debug.

Error message: Question Tool Load Error: A question tool with a tool id of XXX is missing the associated question data.

Of course, Macro Input and Output tools do not require any specific action/question tool associated with them. This is a bug. A user pointed out the XML issue almost 3 years ago here:

In summary: "It appears that the tool itself inserts a hidden Question attribute into the XML which can also be seen in Workflow Configuration"

Source:

Examples....

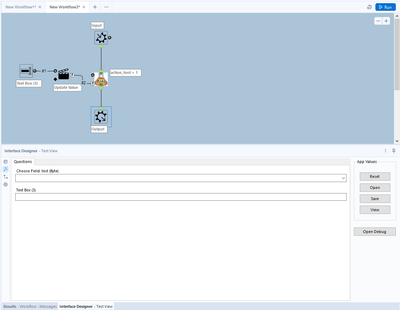

A normal macro, using standard tools:

After debugging a standard macro, the Macro Input/Output tools correctly change to a Text Input and a Browse tool. This allows the macro author to test the macro.

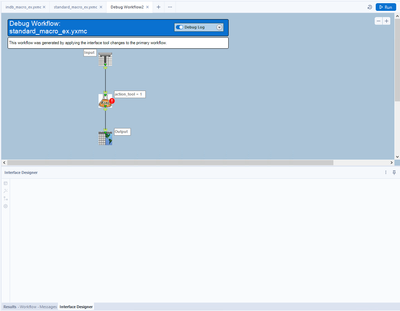

However, when trying the same thing with In-DB tools in a macro, an error message appears:

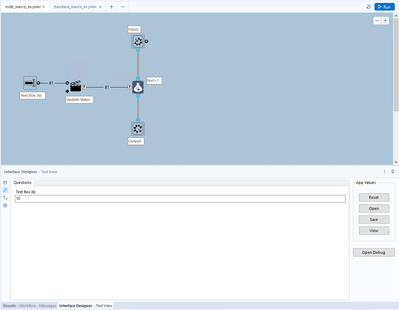

In-DB macro 1:

In-DB Macro error message (after clicking "Open Debug"):

Checkbox ability to ‘not’ output the original column on a text to columns tool

Please add support for Databricks' Unity Catalog

Currently, when selecting a Databricks-connection in the “Connect In-DB”-tool, and opening the “Query Builder”, only tables in the catalog named “hive_metastore” are listed. That is, Alteryx submits the following SQL query to Databricks:

Listing tables 'catalog : hive_metastore, schemaPattern : %, tableTypes : null, tableName : %'

However, with Unity Catalog in Databricks the namespace is three-tier and there may be multiple catalogs (and not just the "hive_metastore" catalog), see https://docs.microsoft.com/en-gb/azure/databricks/lakehouse/data-objects#--what-is-a-catalog
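To make the three-tier namespace concrete, here is a small Python sketch that builds a fully qualified `catalog.schema.table` name. The helper names and the example names `main`, `sales`, and `orders` are hypothetical, and the backtick quoting reflects my understanding of Databricks SQL identifiers rather than anything Alteryx generates today.

```python
def quote_identifier(name):
    """Wrap an identifier in backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def qualified_table_name(catalog, schema, table):
    """Build a three-level name: catalog.schema.table."""
    return ".".join(quote_identifier(part) for part in (catalog, schema, table))


# Hypothetical names, for illustration only.
legacy = qualified_table_name("hive_metastore", "default", "orders")
unity = qualified_table_name("main", "sales", "orders")
print(f"SELECT * FROM {unity}")
```

The point is that the catalog part has to be selectable rather than fixed to "hive_metastore".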

I reached out to Alteryx support, which replied that you currently have a feature request for implementing this change (ID TDCB-4056) and they furthermore suggested that I post here.

Thanks in advance.

Hello!

I'm submitting this idea to add other products to the Alteryx student program. I think that we (students) should have access to study these products (not only the Intelligence Suite, but Server as well).

95% of the time that I find myself using the Directory tool, it is only to access the FullPath content, so I immediately add a Select tool to deselect the other attributes the tool returns.

Is there any chance to add a checkbox to only retrieve FullPath?

I couldn't find a previous idea on this, but let me know if it already exists.

We see canvasses every day where dozens of fields are brought into a canvas or a macro but never used, and this just creates slowness for no good benefit.

Given that one of the selling features of Alteryx is the speed of processing - could we look at three improvements to the Alteryx engine & designer:

- easiest: Keep track of every field brought in / created - and if they are not used in an output, then throw a warning at the end of the execution process

- For example - you bring in fields a,b,c - you create field d and e during the flow in formula tools

- Field d is never used as an input to any filters or formulae - and it doesn't appear on any output - so it's just waste

- Field a and b are part of the output, so they are fine

- Field c is never used at all - so that's just waste.

- Field e is used to filter the records before output - so this one is fine.

- So we've immediately found 2 fields that we can eliminate and make this canvas faster

- Medium: Ignore the unused fields in the execution engine

- Hardest: Tell the users that their field is unused in Alteryx Designer by doing a lineage analysis of the tools, just like software environments like Visual Studio do. This may require a change to the engine & to designer 'cause we would need to make each tool capture the full detail of the fields that they know in their configuration in order to do this trace.
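The a/b/c/d/e example above can be sketched as a backward lineage pass in Python. This is only an illustration of the idea, not how the Alteryx engine works: the function name `find_unused_fields` and the simplified tool description (a dict with `kind`, `reads`, and `creates`) are assumptions made for the example.

```python
def find_unused_fields(input_fields, tools, output_fields):
    """Return the fields that never influence the output.

    tools is an ordered list of dicts with these (hypothetical) keys:
      'kind'    - 'formula' or 'filter'
      'reads'   - fields referenced by the tool's expression
      'creates' - fields a formula adds (empty for filters)
    """
    needed = set(output_fields)
    # Walk the workflow backwards, tracking which fields are still needed.
    for tool in reversed(tools):
        if tool["kind"] == "filter":
            # A filter changes which records reach the output, so its inputs matter.
            needed |= set(tool["reads"])
        elif needed & set(tool["creates"]):
            # A formula only matters if something downstream needs what it creates.
            needed |= set(tool["reads"])

    all_fields = set(input_fields)
    for tool in tools:
        all_fields |= set(tool["creates"])
    return sorted(all_fields - needed)


tools = [
    {"kind": "formula", "reads": ["a"], "creates": ["d"]},
    {"kind": "formula", "reads": ["b"], "creates": ["e"]},
    {"kind": "filter", "reads": ["e"], "creates": []},
]
unused = find_unused_fields(["a", "b", "c"], tools, output_fields=["a", "b"])
print(unused)  # ['c', 'd']
```

Fields c and d are reported as waste, matching the example; e is kept because the filter reads it.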

We've been looking into the phoneHome information that collects usage of Designer in the enterprise, and it looks like this data set (in the UsageReports collection, I believe).

Please can you add the CanvasFilename that was run to this data - we need to be able to surveil the use of Alteryx in our enterprise which is not being done within the server environment, and without the canvas name this becomes tremendously difficult.

Reference:

We frequently have issues where users report slowness from an Alteryx installation on a particular machine; or where a specific tool or package fails to install correctly.

For our admin teams, this becomes a debugging exercise to go through different permutations to understand the cause, and if this is escalated to Alteryx Support, it becomes even tougher.

Could we think about including a basic "Self Diagnostic" in to Alteryx which runs through the basic functionalities of Alteryx with some basic timings; checks that Python is working correctly; checks the memory allocation and temporary disk space - and then either persists this to disk and/or sends to a central environment for analysis?
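As a very rough sketch of what such a self-diagnostic could collect, here is a standard-library-only Python example. The function name `run_self_diagnostic`, the report keys, and the CPU probe are all assumptions for illustration; a real check would also exercise Designer itself and report memory allocation, which the standard library cannot do portably.

```python
import json
import platform
import shutil
import sys
import tempfile
import time


def run_self_diagnostic():
    """Collect a few basic health indicators into a dict (illustrative only)."""
    temp_dir = tempfile.gettempdir()
    usage = shutil.disk_usage(temp_dir)

    # Tiny CPU timing probe: time a fixed amount of arithmetic.
    start = time.perf_counter()
    sum(i * i for i in range(1_000_000))
    elapsed = time.perf_counter() - start

    return {
        "python_version": platform.python_version(),
        "python_executable": sys.executable,
        "temp_dir": temp_dir,
        "temp_free_gb": round(usage.free / 1024**3, 2),
        "cpu_probe_seconds": round(elapsed, 4),
    }


report = run_self_diagnostic()
# Persist to disk or send to a central environment; here it is just printed.
print(json.dumps(report, indent=2))
```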

Given a large deployed environment like ours (over 10 000 seats deployed), self-checkout telemetry like this would give the central team a massive increase in their ability to manage the deployed base, and at the same time significantly reduce the time to resolve support issues.

There needs to be a way to step into a macro that is a component of a parent workflow for debugging.

Currently the only way to debug these is to capture the inputs to the macro from the parent workflow, and then run the macro with those amended inputs. For iterative / batch macros, there is no option to debug at all. This can be tedious, especially if there are a number of inputs, large amounts of data, or you have nested macros.

There should be an option on the tool representing the macro in the parent workflow to trigger a Debug when running the workflow, this would result in the same behavior when choosing 'Debug' from the interface panel in the macro itself: a new 'debug' workflow is created with the inputs received from the parent workflow.

On iterative / batch macros, the iteration / control parameter value on which the debug is triggered should be required. So if a macro returns an error on the 3rd iteration, the user ticks 'Debug' and sets Iteration = 3. If it doesn't reach the 3rd iteration, then no debug workflow is created.

Need a way to highlight lines whether that means right-clicking and selecting a color or what-not, but just having the lines become black & BOLD doesn't cut it. It's not easy on the eyes. If I could click this line/connector and make it bright green that would be ideal and then I can see where it connects better when zooming out.

Hello all,

From https://www.sqltutorial.org/sql-triggers/

Introduction to SQL Triggers

A trigger is a piece of code executed automatically in response to a specific event occurred on a table in the database.

A trigger is always associated with a particular table. If the table is deleted, all the associated triggers are also deleted automatically.

A trigger is invoked either before or after the following event:

- INSERT – when a new row is inserted

- UPDATE – when an existing row is updated

- DELETE – when a row is deleted.

When you issue an INSERT, UPDATE, or DELETE statement, the relational database management system (RDBMS) fires the corresponding trigger.

In some RDMBS, a trigger is also invoked in the result of executing a statement that calls the INSERT, UPDATE, or DELETE statement. For example, MySQL has the LOAD DATA INFILE, which reads rows from a text file and inserts into a table at a very high speed, invokes the BEFORE INSERT and AFTER INSERT triggers.

On the other hand, a statement may delete rows in a table but does not invoke the associated triggers. For example, TRUNCATE TABLE statement removes all rows in the table but does not invoke the BEFORE DELETE and AFTER DELETE triggers.
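To show what a trigger looks like in practice, here is a minimal, self-contained sketch using Python's built-in `sqlite3` module. SQLite is used only because it runs anywhere; the table names `orders` and `audit_log` and the trigger name are made up for the example, and trigger syntax differs somewhat between database systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL);
    CREATE TABLE audit_log (order_id INTEGER, action TEXT);

    -- Fires automatically after every row inserted into orders.
    CREATE TRIGGER orders_after_insert
    AFTER INSERT ON orders
    BEGIN
        INSERT INTO audit_log (order_id, action) VALUES (NEW.id, 'INSERT');
    END;
""")

# A plain INSERT; the audit row is written by the trigger, not by this code.
conn.execute("INSERT INTO orders (amount) VALUES (19.99)")
rows = conn.execute("SELECT order_id, action FROM audit_log").fetchall()
print(rows)  # [(1, 'INSERT')]
```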

So basically, I would like to create some triggers from in db tools in Alteryx.

Best regards,

Simon

Speed up canvas edits - The Create/Remove Space Tool

Usually day two of working with a canvas I realize that I have been a fool, and I come up with a significantly more elegant or simple solution. Moving all of the containers or tools to fit my slick new container is cumbersome and slow. I've created a GIF of a feature several tools have which allows the user to easily move and arrange items on the canvas.

Open source tool used in demo: bpmnJs

Introducing: The Azure Machine Learning Training and Scoring Tools

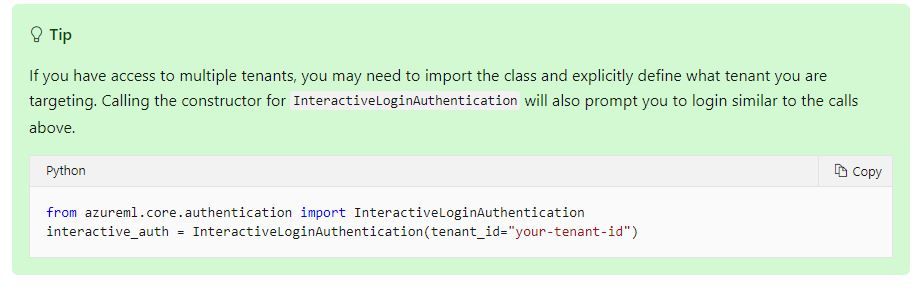

We tried to use this tool but can't log in to Azure ML correctly. We have several tenant IDs, and the tool logs in to a different tenant (the one for Office 365) instead of the one for Azure ML.

====================== <Error Message> ==========================================================

Message: You are currently logged-in to 55f0a...-.............................................. tenant. You don't have access to d846a...-............................................. subscription, please check if it is in this tenant. All the subscriptions that you have access to in this tenant are =

[SubscriptionInfo(subscription_name='Microsoft Azure Enterprise', subscription_id='754c5...-...........................')].

Please refer to aka.ms/aml-notebook-auth for different authentication mechanisms in azureml-sdk.

InnerException None

ErrorResponse

=======================================================================================================

Microsoft states that tenant needs to be specified if we have access to multiple tenants.

Set up authentication for Azure Machine Learning resources and workflows

Could you add Tenant ID into Azure credentials so that we can use this tool?
