Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will users be able to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

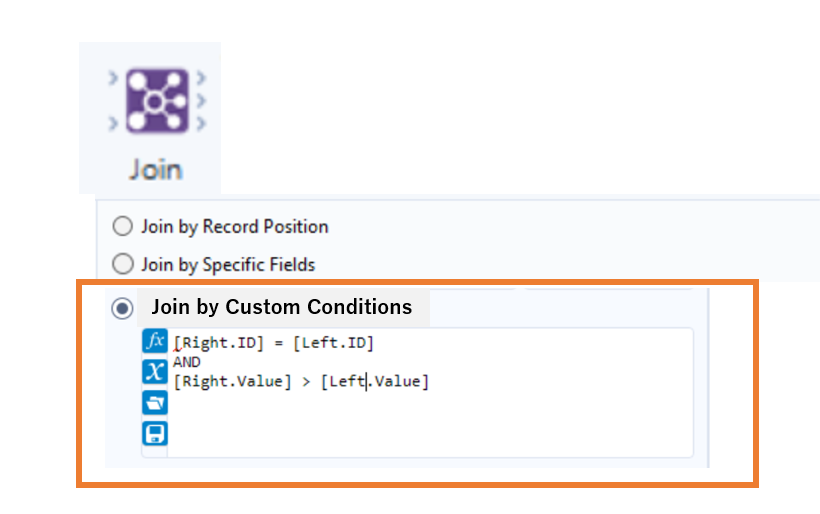

I would like a feature that enables joins on custom conditions. Currently, the Join tool only allows equality conditions, either on specific fields or on record position. In SQL, however, we can join data on much more flexible conditions, such as:

SELECT TableA.id FROM TableA INNER JOIN TableB ON TableA.id=TableB.id and TableA.value > TableB.value

Of course, my idea can easily be realized today by combining the Append Fields and Filter tools. My point is that Append Fields is quite an expensive operation in terms of computation cost, and it generates many unnecessary records, which is a problem when handling a huge dataset.
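To make the cost concern concrete, here is a minimal Python sketch. It is not Alteryx functionality, and the sample rows and function names are hypothetical. It compares the Append Fields + Filter approach, which builds every pair of rows before filtering, with a join that matches on `id` first and only then applies the `value` comparison from the SQL example.

```python
from collections import defaultdict

# Hypothetical sample data standing in for TableA and TableB.
table_a = [{"id": 1, "value": 10}, {"id": 2, "value": 5}, {"id": 3, "value": 7}]
table_b = [{"id": 1, "value": 4}, {"id": 2, "value": 9}, {"id": 3, "value": 7}]


def append_then_filter(left, right):
    """Build every pair of rows, then filter (Append Fields + Filter)."""
    pairs_built = 0
    matched_ids = []
    for a in left:
        for b in right:
            pairs_built += 1  # every combination is materialized
            if a["id"] == b["id"] and a["value"] > b["value"]:
                matched_ids.append(a["id"])
    return matched_ids, pairs_built


def conditional_join(left, right):
    """Match on id first, then apply the extra condition to key matches only."""
    index = defaultdict(list)
    for b in right:
        index[b["id"]].append(b)
    pairs_built = 0
    matched_ids = []
    for a in left:
        for b in index.get(a["id"], []):
            pairs_built += 1  # only rows sharing the key are compared
            if a["value"] > b["value"]:
                matched_ids.append(a["id"])
    return matched_ids, pairs_built


cross_ids, cross_pairs = append_then_filter(table_a, table_b)
join_ids, join_pairs = conditional_join(table_a, table_b)
print(cross_ids, cross_pairs)  # [1] 9
print(join_ids, join_pairs)    # [1] 3
```

Both approaches return the same result, but the first builds a number of pairs equal to the product of the two row counts, while the second only builds pairs that share a key.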

I suppose this kind of flexible condition could be specified with the expression editor, so the configuration window for this feature would look like the attached image: one more radio button option, plus an expression editor similar to the one used in the Filter tool.

Any positive/negative feedback on my idea would be appreciated. Thank you for your attention!

Alteryx Support recreated the same issue on Designer 2024.1.1.93 and SharePoint Tool 2.6.3 for Designer 2024.

- Has anyone experienced the same error?

- If yes, is there any workaround to connect with M365?

I’ve been using the RegEx tool more and more lately. I have a use case where text is parsed only if it matches a certain pattern. Sometimes the tool returns no results, and that is by design.

Having the warnings pop up so many times is not helpful when the miss is genuine and perfectly acceptable.

Just as the Union and Dynamic Rename tools have the ability to ignore warnings, can we have an ignore option for all Parse tools?

That’s the idea in a nutshell.

For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows so that they can schedule a workflow on Alteryx Server and avoid building batch macros.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC paths and cannot be leveraged for OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools do not have a dynamic input or output component.

- Users have to build workarounds and custom macros for a common problem.

- Users have to map the OneDrive folders to their machine (and server if published to the Gallery)

- This option generates a lot of maintenance, especially on Server, to free up space consumed by the local version when outputting the data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read a single folder, with the option to include or exclude its subfolders within OneDrive

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read Author

- Ability to read last modified by

- Ability to generate the specific web path for the files

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the output from the workflow to a specific directory, as individual files in the same location

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

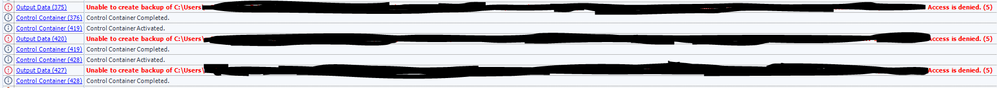

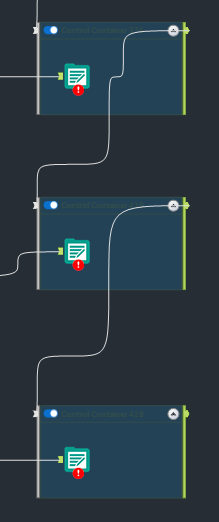

Allow users to add a delay on the connection between Control Container tools. I frequently have to rerun workflows that use Control Containers because the workflow has not registered that a file was properly closed between one Output tool and the next. The network drives have not caught up and still show the file as open while the workflow has already moved on to the next Control Container. Users should have an option in the Configuration window to add a delay before the signal is sent for the next container to run.
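As an illustration of the behaviour being requested, here is a minimal Python sketch of a wait step that polls until a file can be opened again, instead of relying on a fixed delay. It is not an Alteryx feature; the function name, timeout, and interval are hypothetical. Whether opening a file fails while another process holds it depends on the operating system and on the kind of lock, so treat this as a sketch under that assumption.

```python
import time


def wait_until_released(path, timeout=30.0, interval=1.0):
    """Poll until the file can be opened for update, or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Assumption: opening for update raises OSError while the file
            # is still locked or not yet visible on the drive.
            with open(path, "r+b"):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example call (hypothetical path), run before the next step starts:
# wait_until_released(r"\\server\share\output.xlsx", timeout=60, interval=2)
```

A fixed delay, as proposed in this idea, is simpler to configure. Polling only avoids waiting longer than necessary.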

In the past, I was able to use a CReW tool (Wait a Second) together with the Block Until Done tool to add the delay manually. I have since converted all of my workflows to Control Containers, and since then I have encountered the following errors on about half of my workflow runs.

Hello,

I think I have never written an easier idea: the tooltip for the Run Workflow button should indicate the keyboard shortcut (Ctrl+R). So simple, so intuitive.

Best regards,

Simon

Hello all,

As of now, there are two very distinct kinds of connection alias:

-in-memory alias

-in-database alias

Every single time I use an in-database alias, I have to create the same alias for in-memory, since some operations (such as pre-SQL or Interface tools) cannot be done in-database.

What that means for us:

-more complex settings, operations, training, and tests

-inefficient workflows that have to deal with two kinds of alias.

What I propose:

-a single "connection alias" that can be used for either in-database or in-memory,

-one place to configure it

-in-database or in-memory mode depending on the tools you use

Best regards,

Simon

Hello all,

As of today, when you want to retrieve or create a file on Apache Spark for Databricks, you have only two choices: CSV and Avro.

However, the Parquet file type is clearly missing:

-it's faster

-it's better for storage

-it's standard and already supported as an Alteryx input/output and for HDFS, so it doesn't seem hard to add here.

Best regards,

Simon

Hello all,

Apache Doris ( https://doris.apache.org/ ) is a modern data warehouse with a lot of ambition. It's probably the next big thing.

You can read the full documentation at https://doris.apache.org/docs/get-starting/what-is-apache-doris but, to sum up, it aims to be THE reference solution for OLAP, claiming even better performance than ClickHouse, DuckDB, or MonetDB. Even benchmarks from the ClickHouse team seem to agree.

Best regards,

Simon

Whenever I overwrite an Excel sheet with data of the same format just different values (e.g. Q2 data versus Q1 data) all of my Pivot Tables break and I have to manually recreate them even though the schema didn't change. Somehow the Table is being deleted/removed and replaced with a completely different Table which is what causes the Pivot Tables to break. The only way to avoid this is to manually set the Cell Range, but who has time for that? The only solution I have found is to manually copy all values and paste them over the existing data which is very inefficient the more sheets you are working with.

Hi everyone,

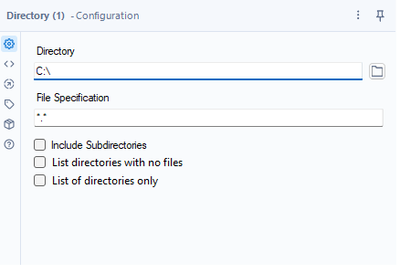

Add two additional features to the Directory tool, something like this:

Use cases:

1. Since it is not possible to use a folder browse on the Gallery, this could help a basic user create a list of possible folders to select from with the help of a drop-down

2. Directory analysis for cleaning purposes - currently, if you want to get a list of the folders with Alteryx, it takes forever for big file servers since Alteryx is mapping all the files

Both are achievable today through regex or a bat script.
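For reference, the script-based workaround mentioned above could look like the following minimal Python sketch, which returns folders only. The function name and parameters are hypothetical, and this is not how the Directory tool works internally. Note that the operating system still enumerates file entries while scanning; the sketch simply does not collect or return them.

```python
import os


def list_folders(root, include_subfolders=True):
    """Return the folder paths under root, without returning any files."""
    folders = []
    stack = [root]
    while stack:
        current = stack.pop()
        try:
            with os.scandir(current) as entries:
                for entry in entries:
                    # Keep real folders only; files and symlinks are skipped.
                    if entry.is_dir(follow_symlinks=False):
                        folders.append(entry.path)
                        if include_subfolders:
                            stack.append(entry.path)
        except PermissionError:
            # Folders that cannot be read are skipped instead of failing.
            continue
    return sorted(folders)
```

The resulting list could feed a drop-down of selectable folders (use case 1) or a folder-level analysis (use case 2).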

Thank you,

Fernando Vizcaino

Hello all,

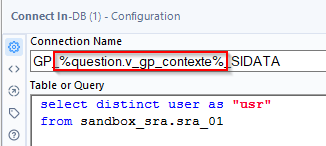

As of today, we use good old in-memory aliases to connect to our data sources in-memory. We have several environments, so we use constants to change the name of the in-memory alias at execution time.

To illustrate:

Depending on the environment, the constant « v_gp_contexte » takes a different value:

- GP_DS08_SIDATA for dev.

- GP_EE_SIDATA for prod.

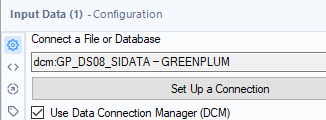

Sounds nice, right? But now we would like to use DCM, and the nightmare begins:

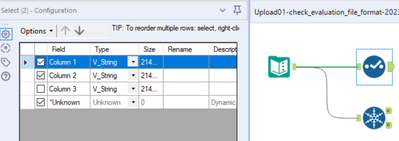

We can't manually change the name and set the question :

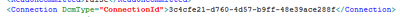

If we look at the XML of the workflow, we only find an ID, so editing it is useless:

(For information, DCM connections are stored in a SQLite database in C:\Users\{yourname}\AppData\Local\Alteryx.)

So, I would like to be able to use DCM inside the in-memory alias (the in-memory alias is stored and can be edited), just like for the in-database connection alias.

Best regards,

Simon

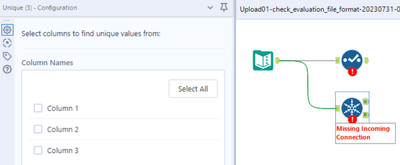

In some cases, the information about a tool's incoming columns is (temporarily) forgotten, e.g. if Autoconfig is switched off, if the incoming connection is temporarily missing, or if column names are generated dynamically and the workflow has not yet been executed.

Many tools deal with that situation well, e.g. Select, Formula, or Summarize. In these cases, the tools tell the user that they cannot find the incoming columns, but they preserve the configuration, so the user can still (at least partially) work on these tools and no important configuration information is lost:

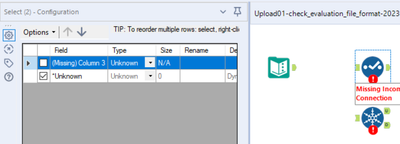

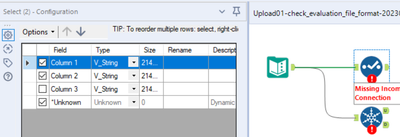

Example Select Tool

- First step: Connections present, configuration typed in:

- Second step: Connection cut, configuration opened. The configuration looks scrambled but implicitly contains all settings:

- Third step: Connection re-connected. The configuration is as before:

Other tools behave the opposite way, for example Unique or Macro Input (and surely many other tools). If the incoming columns are currently unknown to Designer and you click once on the tool's symbol, the entire configuration of the tool is lost. You might try to get the configuration back by pressing undo, but in most cases this does not work. Or, even worse, you only find out what happened later, when it is too late to undo. In that case, you either look up the configuration in an old version of the workflow or you have to redevelop it. Either way, this is unnecessary and time-consuming software behaviour.

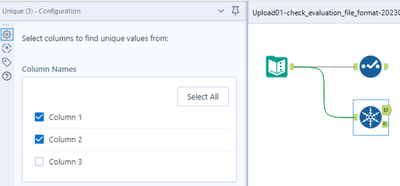

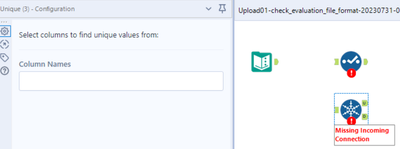

Example Unique Tool

- Step 1: Connections present, configuration typed in:

- Step 2: Connection cut, configuration opened. The configuration is empty:

- Step 3: Connection re-connected: The entire configuration is permanently lost:

I wasn't sure whether I should report this as a bug or a feature enhancement. It is somehow in between. Two aspects tell me that this should be changed:

- Inconsistent behaviour of different tools for no reason,

- Easy loss of programming work, resulting in time-consuming bug fixing.

Please make sure that all tools preserve their configuration also if information on incoming columns is temporarily lost.

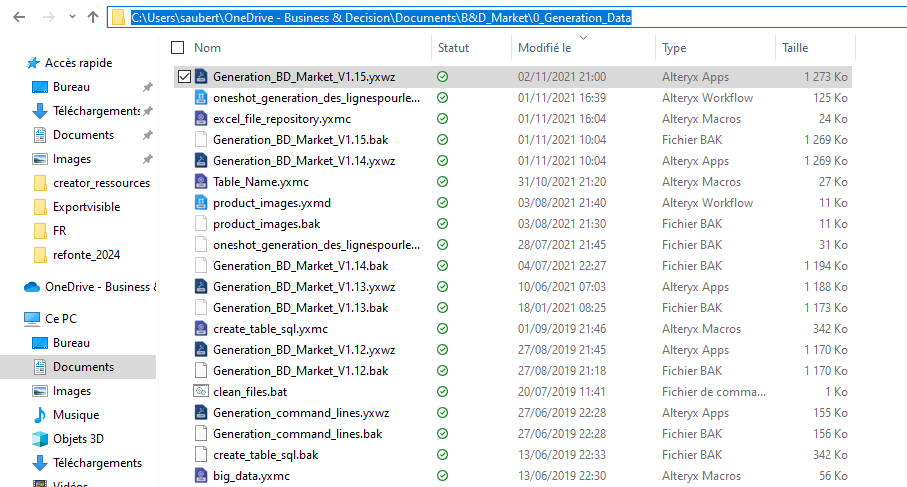

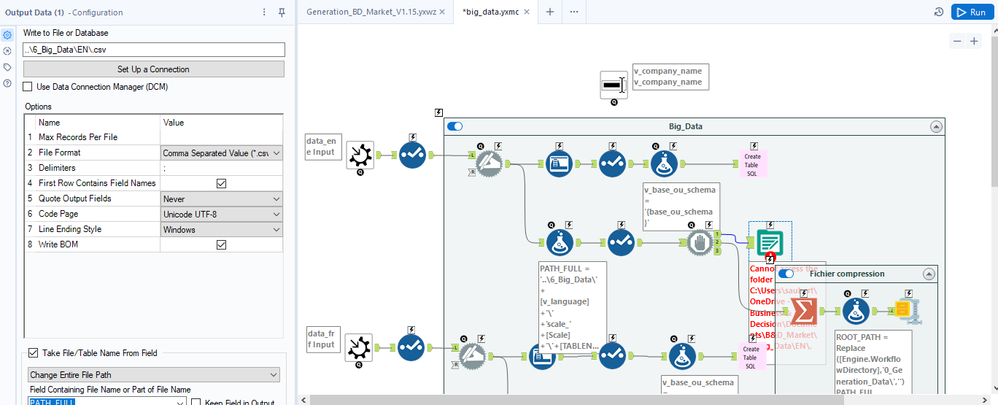

Hello all,

Here is the issue: I have a workflow in my OneDrive folder.

In that workflow, I use a macro that writes a file with a relative path (..\6_Big_Data\EN\.csv ) :

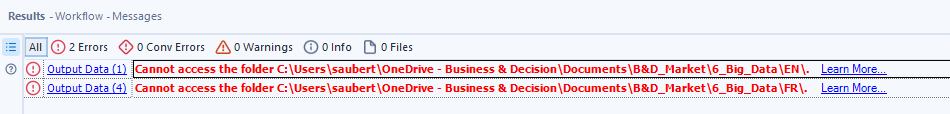

Strangely, it doesn't work, and the error message seems to refer to a folder that doesn't exist (and that is also not the one I set):

ErrorLink: Output Data (1): https://community.alteryx.com/t5/*/*/ta-p/724327?utm_source=designer&utm_medium=resultsgrid|Cannot access the folder C:\Users\saubert\OneDrive - Business & Decision\Documents\B&D_Market\6_Big_Data\EN\.

I really would like that to work :)

Best regards,

Simon

Hello all,

Over the last few years, we have all become familiar with the now-famous hide/unhide password feature.

Here are a few examples of it.

I would like this exact principle everywhere a password is entered in Alteryx.

Best regards,

Simon

Hello all,

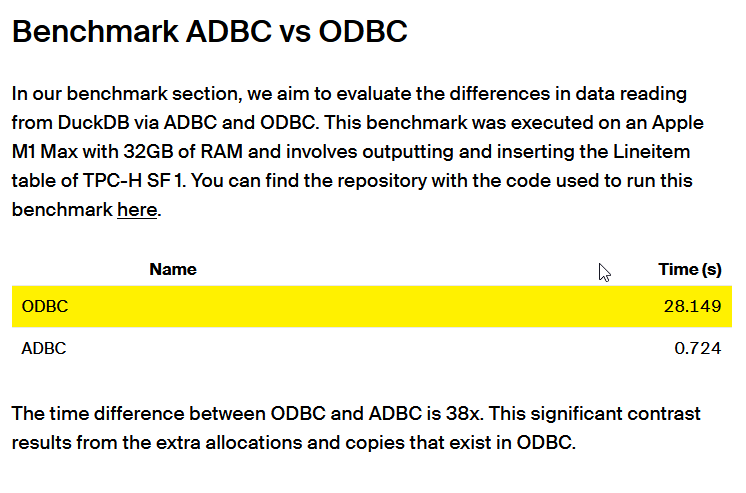

ADBC is a database connection standard (like ODBC or JDBC) but specifically designed for columnar storage (so databases like DuckDB, ClickHouse, MonetDB, Vertica...). This is typically the kind of thing that can make Alteryx much faster.

More info at https://arrow.apache.org/blog/2023/01/05/introducing-arrow-adbc/

Here is a benchmark made by the DuckDB team, showing a 38x improvement:

https://duckdb.org/2023/08/04/adbc.html

Best regards,

Simon

Right now, the List Box interface tool allows end users to select multiple fields for selection, filtering, and formatting or formulas.

However, it does not work as well when a use case has more than 1,000 columns/fields. This is made even more complicated when the columns/fields follow similar naming conventions, which causes confusion.

Having a search function, as is available in the Select tool, the Join tool, and other tools that have filtering capability, would be most helpful for developers to give maximum flexibility to end users.

Today, there is a checkbox to "Disable All Tools that Write Output" within the Runtime settings for a workflow. Setting this option requires at least 3 clicks:

- Click on the canvas

- Click the "Runtime" tab in the Configuration pane

- Click the checkbox

Could a keyboard shortcut be added for this? I've spoken to several users who leverage this feature and, while it is already a time saver, it seems helpful enough where a keyboard shortcut is warranted.

Hello

A Cartesian product is a common issue when joining datasets on a bad key. What I suggest is an option on the Join tool to check whether a Cartesian product will occur.

-there is a label "Cartesian product (non join key uniqueness) detection"

-under it a drop down menu with three choices

-do nothing

-fail

-warning

Algorithm:

If "do nothing": well... do nothing beyond the current behaviour.

If "fail" or "warning": compare the distinct count of the join key with the row count on each side of the join. If neither side is unique, display a warning or an error message.
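The check described above can be sketched in a few lines of Python. This is a minimal illustration of the proposed option, not an existing Join tool setting; the function names, the `mode` values, and the sample rows are assumptions made for the example.

```python
import warnings


def key_is_unique(rows, key_fields):
    """True when the distinct count of the join key equals the row count."""
    keys = [tuple(row[field] for field in key_fields) for row in rows]
    return len(set(keys)) == len(keys)


def check_cartesian(left, right, key_fields, mode="do nothing"):
    """Apply the proposed option: 'do nothing', 'warning', or 'fail'."""
    if mode not in ("do nothing", "warning", "fail"):
        raise ValueError(f"unknown mode: {mode}")
    if mode == "do nothing":
        return False  # current behaviour: no check at all
    # Rows multiply only when the key is unique on neither side.
    risky = (not key_is_unique(left, key_fields)
             and not key_is_unique(right, key_fields))
    if risky:
        message = f"Join key {key_fields} is unique on neither side of the join."
        if mode == "fail":
            raise ValueError(message)
        warnings.warn(message)
    return risky


# Hypothetical inputs: the key "k" is duplicated on both sides.
left_rows = [{"k": 1}, {"k": 1}, {"k": 2}]
right_rows = [{"k": 1}, {"k": 1}]
print(check_cartesian(left_rows, right_rows, ["k"], mode="warning"))  # True
```

With a key that is unique on at least one side, the function returns `False` and stays silent in every mode.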

Best regards,

Simon

Parquet is a very fast, efficient, and widely used data format. Currently, only the Parquet compression algorithms listed below are supported, so we cannot use Alteryx to read Parquet files generated by other processes with a different compression. This limits our usage of Alteryx.

- Read support: Snappy and Gzip compression algorithms

- Write support: Snappy only

It would be great for Alteryx to support all types of Parquet format so that we can maximize the use of Alteryx in data analysis.
