Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that there is an equivalent number of images for each label),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
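To illustrate the balanced split requested in the second point, here is a minimal Python sketch. It is not part of the Image Recognition tool; it assumes the training data is available as a list of `(image_path, label)` pairs, and the function name `balanced_split` and its parameters are hypothetical. It undersamples every label to the size of the rarest one and then holds out the same fraction of each label for testing. Undersampling is only one option; oversampling or class weights are common alternatives.

```python
import random
from collections import defaultdict


def balanced_split(samples, test_fraction=0.2, seed=42):
    """Split (image_path, label) pairs into train/test lists in which every
    label has the same number of images.

    Each label is undersampled to the size of the rarest label, then the
    same fraction of every label is held out for testing.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return [], []

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    per_label = min(len(paths) for paths in by_label.values())
    n_test = int(per_label * test_fraction)

    train, test = [], []
    for label in sorted(by_label):  # sorted for a deterministic order
        chosen = rng.sample(by_label[label], per_label)  # random undersample
        test.extend((path, label) for path in chosen[:n_test])
        train.extend((path, label) for path in chosen[n_test:])
    return train, test


# Hypothetical example: 10 cat images and 25 dog images.
samples = [(f"cat_{i}.png", "cat") for i in range(10)] + \
          [(f"dog_{i}.png", "dog") for i in range(25)]
train, test = balanced_split(samples)
# Each label ends up with 8 training images and 2 test images.
```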

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Not sure I'd call this a user setting, but I couldn't figure out the right heading this belongs to.

When opening files, there are often a couple of files that aren't run on any kind of schedule or set time frame, but that you come back to when you need to run them.

There should be a way to mark a handful of files as "FAVORITES": files that you find yourself referring to repeatedly, but that are too far back to appear on the 'Recents' list because you open so many other files.

Hello,

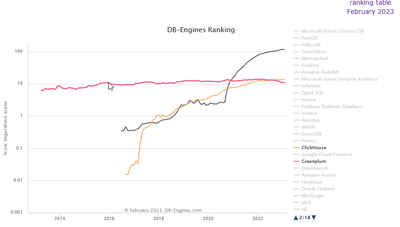

Just like MonetDB or Vertica, ClickHouse is a column-store database, and it claims to be the fastest in the world. It's available in the cloud (like Snowflake) and on Linux and macOS (where it's free and open source). It's also very well ranked among analytics databases (https://db-engines.com/en/system/ClickHouse), and supporting it would be a good differentiator from competitors.

https://clickhouse.com/

It has become more popular than Greenplum, which is already supported (chart: black = Snowflake, red = Greenplum, orange = ClickHouse).

Best regards,

Simon

Currently the only way to implement IF / FOR / WHILE loop logic is either in the Formula tool or via an iterative/batch macro.

Instead, it would be hugely useful and a lot more intuitive to be able to build FOR / WHILE logic embedded in a container (similar to the LabVIEW interface https://www.ni.com/en-sg/support/documentation/supplemental/08/labview-for-loops-and-while-loops-exp...).

Advantages include:

- Increased readability. (not having to go into a macro!)

- Increased agility. (More power and features can be added or modified on the go, for something that needs more than a Formula tool but not as much interface as a Macro App.)

- More intuitive

Dawn.

In a similar vein to the forthcoming enhancement that lets you disable a specific Output tool, my idea is the inverse: globally disable all outputs and then enable only specific ones. This would reduce the number of clicks required, avoid workarounds that use containers to obtain this functionality, and let users specify exactly which outputs run and which don't.

Here's a sample of what you get if no records are read into a Python tool:

```
Error: CReW SHA256 (4): Tool #1: Traceback (most recent call last):
  File "D:\Engine_10804_3513901e8d4d4ab48a13c314a18fd1f9_\2f1b1eb4701e445775092128efe77f76\workbook.py", line 7, in <module>
    df = Alteryx.read('#1')
  File "C:\Program Files\Alteryx\bin\Miniconda3\envs\DesignerBaseTools_venv\lib\site-packages\ayx\export.py", line 35, in read
    return _CachedData_(debug=debug).read(incoming_connection_name, **kwargs)
  File "C:\Program Files\Alteryx\bin\Miniconda3\envs\DesignerBaseTools_venv\lib\site-packages\ayx\CachedData.py", line 306, in read
    data = db.getData()
  File "C:\Program Files\Alteryx\bin\Miniconda3\envs\DesignerBaseTools_venv\lib\site-packages\ayx\Datafiles.py", line 500, in getData
    data = self.connection.read_nparrays()
RuntimeError: DataWrap2WrigleyDb::GoRecord: Attempt to seek past the end of the file
```

I've worked around this in my macro by forcing a DUMMY record into the Python tool (and deleting it on the back end). It would be much nicer to have error handling that prevents the issue. Even a configuration option for what to do when there is no input would simplify things.

This error condition potentially affects every Python tool created.
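A minimal Python sketch of the kind of guard described above. It assumes, based on the traceback, that an empty input surfaces as a `RuntimeError` whose message contains "Attempt to seek past the end of the file"; the helper `read_or_default` is hypothetical and has not been tested inside Designer.

```python
EMPTY_INPUT_MARKER = "Attempt to seek past the end of the file"


def read_or_default(read, default_factory, marker=EMPTY_INPUT_MARKER):
    """Return read(), or default_factory() if read() fails with the
    RuntimeError that (judging by the traceback above) signals an empty input.

    Any other exception, including RuntimeErrors with a different message,
    is re-raised unchanged.
    """
    try:
        return read()
    except RuntimeError as exc:
        if marker in str(exc):
            return default_factory()
        raise
```

Inside the Python tool this might be called as `df = read_or_default(lambda: Alteryx.read('#1'), pandas.DataFrame)`, assuming `pandas` is imported. Matching on the message rather than catching every `RuntimeError` keeps unrelated failures visible, at the cost of depending on the exact error wording. An empty `DataFrame` also has no columns, so downstream code that expects specific fields may still need a schema.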

Cheers,

Mark

Hello!

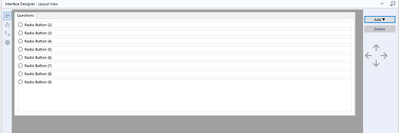

As many of you know, I'm a big fan of Alteryx Apps. However, one of the most painful parts of building Alteryx Apps is moving elements around in the Interface Designer. Currently, when you have many elements in your Interface Designer:

And add a new element from the dropdown, or through a new tool:

It is added to the bottom of the interface. Moving it to the top is currently done with the arrows; however, this is very slow, especially when you have many interface elements:

Currently (with 9 radio buttons) it takes 18 clicks to move it to the top, each taking a couple of seconds because of the delay between movements, since it moves only one step at a time:

It would be fantastic if we could drag and drop the elements of the interface to where we like, for speed of development and ease of use.

Thanks,

TheOC

Visio is our organization's most common method of communicating business processes and workflows. Being able to export an Alteryx workflow to Visio would help us communicate the tool's functionality to process owners.

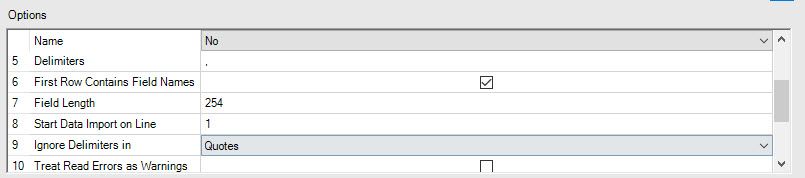

The default (option 7 below) often causes problems with imports and is difficult for new users to find, and I see no reason for the default to be so low. Could it be set higher to aid parsing of larger text fields, e.g. parsing HTML files as text?

Hello,

In data science, the Levenshtein and Jaro-Winkler distances are used to quantify the similarity between two strings.

Here are the Wikipedia pages:

https://en.wikipedia.org/wiki/Levenshtein_distance

https://en.wikipedia.org/wiki/Jaro%E2%80%93Winkler_distance

Note 1: the Levenshtein and Jaro distances are already used in the Fuzzy Match tool, so it shouldn't be a huge amount of work to include them in the Formula tool.

Note 2: there is a useful macro on the Gallery: https://gallery.alteryx.com/#!app/LevenshteinDistance/5c54701f826fd30988f02779

Note 3: some products already implement them, such as Apache Hive or Qlik Sense.
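To show what such formula functions would compute, here is a plain-Python sketch of both measures as described on the Wikipedia pages above. The function names are hypothetical, this is not how the Fuzzy Match tool implements them, and `jaro_winkler` returns a similarity (1.0 means identical) rather than a distance; the distance is usually taken as `1 - similarity`. The prefix scale `p = 0.1` and the four-character prefix cap are the conventional defaults.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn a into b (two-row dynamic programming)."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def jaro(s1: str, s2: str) -> float:
    """Jaro similarity in [0, 1]."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)
    used1 = [False] * len1
    used2 = [False] * len2
    matches = 0
    for i, c in enumerate(s1):
        for j in range(max(0, i - window), min(len2, i + window + 1)):
            if not used2[j] and s2[j] == c:
                used1[i] = used2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order.
    out_of_order = 0
    k = 0
    for i in range(len1):
        if used1[i]:
            while not used2[k]:
                k += 1
            if s1[i] != s2[k]:
                out_of_order += 1
            k += 1
    transpositions = out_of_order / 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1: str, s2: str, p: float = 0.1, max_prefix: int = 4) -> float:
    """Jaro similarity boosted by the length of the common prefix."""
    j = jaro(s1, s2)
    prefix = 0
    for c1, c2 in zip(s1[:max_prefix], s2[:max_prefix]):
        if c1 != c2:
            break
        prefix += 1
    return j + prefix * p * (1 - j)
```

For example, `levenshtein("kitten", "sitting")` returns 3, and `jaro_winkler("MARTHA", "MARHTA")` is about 0.961.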

Best regards,

Simon

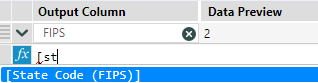

I use the field name auto-complete feature whenever I can. One issue with it, however, arises when there are parentheses in a field name. After auto-completing the field name, Alteryx highlights the portion of the field name after the first parenthesis. This is not ideal, as I expect the cursor to be at the end of the field name so I can continue typing. In this scenario, unfortunately, I would begin to type over my field name and the expression gets messed up.

For example, as shown below, I begin to type "st" and then press the Tab key to complete the field name in my expression.

In this case, however, because my field name contains parentheses, part of the field name remains highlighted and the cursor does not move to the end of the field name, after the right bracket, as one would expect.

If I were to continue typing at this point, the highlighted portion of the expression would get erased and replaced.

Field names that do not contain parentheses continue to function correctly as shown below.

Similar to the thoughts in this idea, it would be great if parenthesis-matching functionality could be added to the Formula tool as well.

Instead of adding a Tool Container to the canvas and then moving my Input tool into it, it would be nice if I could just check a 'Disable' box in the Input tool properties. This would speed things up if I'm trying to test inputs one at a time, or need to disable just one specific output while I test another data stream in my workflow.
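As a rough illustration of parenthesis matching that is not confused by parentheses inside field names, the Python sketch below pairs each `(` with its `)` while treating everything inside `[...]` field references and quoted strings as literal text. It assumes field names never contain `]` and strings contain no escaped quotes; the function `match_parens` is hypothetical and not Alteryx's implementation.

```python
def match_parens(expr):
    """Map the index of each '(' to the index of its matching ')' in an
    Alteryx-style expression, ignoring characters inside [Field Names]
    and quoted strings. Raises ValueError on unbalanced parentheses."""
    pairs = {}
    stack = []        # indices of '(' still waiting for a ')'
    in_field = False  # inside [ ... ]
    quote = None      # the quote character of the current string, if any
    for i, ch in enumerate(expr):
        if quote:
            if ch == quote:
                quote = None
        elif in_field:
            if ch == "]":
                in_field = False
        elif ch in ("'", '"'):
            quote = ch
        elif ch == "[":
            in_field = True
        elif ch == "(":
            stack.append(i)
        elif ch == ")":
            if not stack:
                raise ValueError(f"unmatched ')' at position {i}")
            pairs[stack.pop()] = i
    if stack:
        raise ValueError(f"unmatched '(' at position {stack[-1]}")
    return pairs
```

For example, `match_parens("IsNull([Sales (USD)])")` returns `{6: 20}`: the parentheses inside `[Sales (USD)]` are skipped, so the outer pair is matched correctly.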

It would be great to have the below functionality in Alteryx.

A workflow is built in Alteryx, and a button click in Alteryx generates SQL code that can be run on a specific database platform, such as SQL Server, from external editors such as SQL Server Management Studio. Thanks.

Our company is implementing an Azure Data Lake and we have no way of connecting to it efficiently with Alteryx. We would like to push data into the Azure Data Lake Store and also pull it out with a connector. Currently there is no out-of-the-box solution in Alteryx, and pushing data to Azure requires a lot of effort.

Alteryx 2019.4 introduced support for Tableau's .hyper extract format, however it only supports single table extracts. .hyper files have supported multiple tables since mid-2018, so I'd like Alteryx to support that as well.

Here are a couple of current use cases (as of February 2020) and one future one.

- We have malaria incidence data that is joined to multiple sets of spatial data. Doing all of the joins in the extract creation process to build a single table extract is not possible due to processing time & memory constraints, so we use a multiple-table extract.

- There are multiple ways to do row level security in Tableau. A common way is to have separate tables for the data & the entitlements and then use calculations at run-time to filter the data, and for that having a multiple table extract is ideal.

- In 2020 Tableau will be introducing new data modeling capabilities (first demoed at the 2018 Tableau Conference, with sessions on them at the 2019 Tableau Conference), where one goal is vastly improved performance for large fact-table-to-fact-table joins that previously required much more data preparation. This is another case where multiple table extracts would be useful.

I've attached a sample Hyper file with two tables in the extract (it's zipped because the Community site doesn't accept .hyper files).

Supporting alternative schema and table names in Hyper extracts https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Input-tool-Support-more-than-Extract-Extract... is a prerequisite for this because by definition multiple table extracts have multiple table names.

A related idea is supporting multiple table extracts for the Output tool: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Support-multiple-table-extracts-in-the-Table...

Jonathan

Alteryx 2019.4 added support in the Input tool for Tableau .hyper extract files. The tables stored in the .hyper files have a schema and a table name. Tableau's old .tde files and Hyper files created by Alteryx & Tableau Desktop use "Extract.Extract" as the schema.tablename. However when using Tableau's Hyper API the default schema is "public" and the table name is arbitrarily specified by the user or application.

This has two impacts:

1) Without this support Alteryx can't open many .hyper files created by other applications. By way of example I've attached a sample .hyper file (in a .zip because the community software doesn't allow .hyper files) that has the schema.tablename "public.table1".

2) Also, support for names beyond Extract.Extract is required in order to support multiple table extracts (submitted as a separate Idea).

Please update the Input tool so the user can select the particular schema and table name from the .hyper file.

Jonathan

Similar to previous ideas from @patrick_mcauliffe and @shailesh_patel - would like to request 2 things:

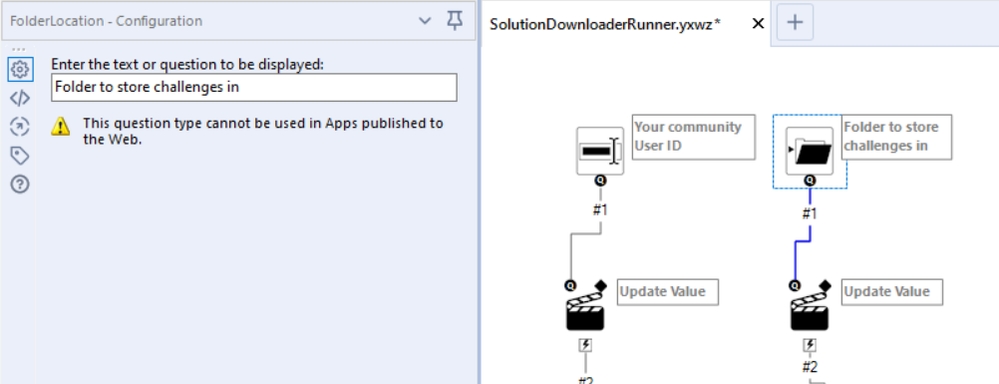

Default on Folder Picker Interface tool

The folder picker tool does not currently allow a default value - this unnecessarily adds work if users have the same value 90% of the time.

Please add a field for the default value that will show when the interface starts up

Similar ideas:

- Default on Date interface: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Default-Date-for-Interface-Tool/idi-p/35770

- Default on File Selector: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Default-file-location-in-file-broswer-Interf...

We have a large SAS programming team that keeps most of their data sets in a Unix environment. A more robust ODBC connection to this data would greatly enhance our use of Alteryx. The current SAS ODBC driver tends to lock up Alteryx, and editing the connection also tends to lock up Alteryx to an unrecoverable point.

As Tableau has continued to open more APIs with their product releases, it would be great if these could be exposed via Alteryx tools.

One that I think would make a great tool is the Tableau Document API, which allows for things like:

- Getting connection information from data sources and workbooks (Server Name, Username, Database Name, Authentication Type, Connection Type)

- Updating connection information in workbooks and data sources (Server Name, Username, Database Name)

- Getting Field information from data sources and workbooks (Get all fields in a data source, Get all fields in use by certain sheets in a workbook)

For those of us who use Alteryx to automate much of our Tableau work, having an easy tool to read and write this information (instead of writing Python scripts) would be beneficial.

It would be useful if the SharePoint Input tool could be enhanced to support SSO. In my organisation we host a lot of collaborative work on SharePoint sites protected by ADFS authentication, and pulling data directly from them is not supported by the SharePoint Input tool; it is blocked. Adding support for recognising these logins would be very useful.
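For a sense of what such a tool would do under the hood, here is a minimal Python sketch that reads and updates connection details in a Tableau workbook's XML using only the standard library. It does not use the Tableau Document API itself. The inline `SAMPLE_TWB` is a simplified, hypothetical fragment: the `datasource`/`connection` element names and the `server`, `dbname`, `username` and `authentication` attributes reflect typical .twb files but should be treated as assumptions, and the helper names are hypothetical. Editing workbook XML directly is not a Tableau-supported workflow, so keep backups.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical .twb fragment. Real workbooks contain many more
# elements, and database connections are often nested inside a 'federated'
# wrapper connection, as shown here.
SAMPLE_TWB = """<workbook>
  <datasources>
    <datasource name='federated.0abc' caption='Sales'>
      <connection class='federated'>
        <named-connections>
          <named-connection name='sqlserver.1xyz'>
            <connection class='sqlserver' server='db01' dbname='SalesDW'
                        username='etl_user' authentication='sqlserver' />
          </named-connection>
        </named-connections>
      </connection>
    </datasource>
  </datasources>
</workbook>"""

FIELDS = ("class", "server", "dbname", "username", "authentication")


def list_connections(twb_xml):
    """Return one dict per <connection> element that names a server."""
    root = ET.fromstring(twb_xml)
    results = []
    for ds in root.iter("datasource"):
        for conn in ds.iter("connection"):
            if conn.get("server") is None:
                continue  # skip wrapper connections such as 'federated'
            info = {key: conn.get(key) for key in FIELDS}
            info["datasource"] = ds.get("caption") or ds.get("name")
            results.append(info)
    return results


def replace_server(twb_xml, old, new):
    """Return the workbook XML with every connection to `old` pointed at `new`."""
    root = ET.fromstring(twb_xml)
    for conn in root.iter("connection"):
        if conn.get("server") == old:
            conn.set("server", new)
    return ET.tostring(root, encoding="unicode")
```

Running `list_connections(SAMPLE_TWB)` yields a single entry for the `sqlserver` connection on `db01`; `replace_server(SAMPLE_TWB, "db01", "db02")` returns XML in which that connection points at `db02`.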




