Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: in the future, will you allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


All input tools, such as Input Data and Dynamic Input, should have a new "Skip on fail" flag. With it, a tool would process all, none, or only part of the requested input data, return whatever data could be read, and not raise an error in the workflow.

If the 'Skip on fail' flag is false, the system should act as it does now.

If the 'Skip on fail' flag is true, the system should return only the data it accepted or managed to read on the default output. It could also have a second output connection for the error log, so that we can parse it and do something with it, but the workflow should still run.
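The requested behaviour can be sketched outside Alteryx in a few lines of Python. This is only an illustration of the idea, not how any input tool is implemented: the function name `read_inputs`, the use of CSV files, and the shape of the error log are all assumptions made for the example.

```python
import csv
from pathlib import Path


def read_inputs(paths, skip_on_fail=False):
    """Read several CSV files and return (rows, errors).

    skip_on_fail=False: the first failure raises, so the whole run stops.
    skip_on_fail=True: failures are logged in `errors` and reading continues.
    """
    rows, errors = [], []
    for path in paths:
        try:
            with Path(path).open(newline="", encoding="utf-8") as handle:
                rows.extend(csv.DictReader(handle))
        except (OSError, UnicodeDecodeError, csv.Error) as exc:
            if not skip_on_fail:
                raise
            # Second "output": one error record per source that failed.
            errors.append({"source": str(path), "error": str(exc)})
    return rows, errors
```

The two return values play the role of the default output and the proposed error-log output, so downstream logic can decide what to do with sources that could not be read.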

Some of the predictive tools put out a "Score" field when output is run through the scoring tool, and some put out a "Score_1" and/or "Score_0". Since I frequently reuse the same workflow template for different predictive model types, it would be nice if they were consistent so that I wouldn't have to crash the workflow the first time through to get the input field names correct for downstream tools (e.g., Sort). Thank you

The default variable size is a V_WSTRING of size 1073741823. If no one catches this, it uses up the memory on the server. Could the default be smaller?
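For a sense of scale, the default size quoted above is 2^30 - 1 characters. The snippet below works out a rough per-value ceiling, assuming two bytes per character for a wide string. That byte width is an assumption for illustration and is not stated in the post, and real memory use depends on the data actually stored.

```python
DEFAULT_V_WSTRING_SIZE = 1_073_741_823  # default size quoted in the post

# Assumption: a wide string uses 2 bytes per character.
BYTES_PER_CHAR = 2

max_bytes = DEFAULT_V_WSTRING_SIZE * BYTES_PER_CHAR

print(DEFAULT_V_WSTRING_SIZE == 2**30 - 1)
print(f"Ceiling per value: {max_bytes / 2**30:.2f} GiB")
```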

Hi,

Today I pressed F1 in the Output Tool to find out what the setting Transaction Size does. It turned out that this is not documented.

It would be a great idea to provide documentation that covers all the options of a tool.

Regards,

Frank

It would be very helpful to have find and replace work in a duplicated formula, especially if it is long.

Hi,

I was thinking that this might be a nice addition: when joining two inputs, there is always the option at the bottom to include Unknown columns. Maybe we could specify whether to include Unknown columns only from the Right input or only from the Left input. I know I would use this in my workflows.

Thanks

Alicja
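The selection rule described in this idea could look roughly like the sketch below. The function name `output_columns`, the `unknown_from` parameter, and the dictionary of configured columns are hypothetical and only model the proposal. They do not describe how the Join tool works internally.

```python
def output_columns(left, right, configured, unknown_from="both"):
    """Return the (side, column) pairs a join would pass downstream.

    configured: maps (side, column) -> True/False for columns the tool
        already knows about (True means the column is selected).
    unknown_from: "both", "left", "right" or "none"; decides which side's
        previously unseen ("Unknown") columns are passed through.
    """
    if unknown_from not in {"both", "left", "right", "none"}:
        raise ValueError(f"unsupported unknown_from: {unknown_from!r}")

    selected = []
    for side, columns in (("left", left), ("right", right)):
        for column in columns:
            key = (side, column)
            if key in configured:
                keep = configured[key]  # known column: respect the checkbox
            else:
                keep = unknown_from in ("both", side)  # unknown column
            if keep:
                selected.append(key)
    return selected
```

With `unknown_from="left"`, a new column arriving on the left input flows through, while a new column on the right input is dropped.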

Wouldn't it be great to be able to pick results from a drop-down based on the upstream tools in the workflow? I have had this situation many times, where I had to create a complex, chained app just because the tools connected to the interface can't run before the interface tools are displayed to the end user.

For example, imagine an app that, based on the columns it sees, lets you drop one just by picking it from a drop-down. It would open up many development opportunities and decrease the number of chained apps we need to build.

It would be very good to be able to connect two or more tools by right-clicking and choosing join, similar to Cut and Connect Around.

I think double-clicking a tool to automatically place it on the canvas is a good idea, so a shortcut for it would be nice to have.

In our environment, we often have to replace a table. It would be nice if there was a tool that could pull out all of the input or output tools that reference a given dataset. Someone wrote an XML file that we parsed, but it doesn't seem like all workflows are included.
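Until such a tool exists, a search of this kind can be approximated by scanning workflow XML. The sketch below assumes each tool is a `Node` element whose source sits in `Properties/Configuration/File`, as in the Input Data example elsewhere on this page. Other tool types may store their connection differently, and the function name `tools_referencing` is made up for illustration.

```python
import xml.etree.ElementTree as ET


def tools_referencing(workflow_xml, dataset):
    """Return (ToolID, plugin, source) for tools whose File text mentions dataset."""
    root = ET.fromstring(workflow_xml)
    needle = dataset.lower()
    matches = []
    for node in root.iter("Node"):
        gui = node.find("GuiSettings")
        plugin = gui.get("Plugin", "") if gui is not None else ""
        # Assumption: the data source is the text of Configuration/File.
        for file_element in node.iterfind("Properties/Configuration/File"):
            source = file_element.text or ""
            if needle in source.lower():
                matches.append((node.get("ToolID"), plugin, source))
    return matches
```

Running this over the text of every workflow file in a folder and collecting the non-empty results would give a list of the tools to update when a table is replaced.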

The current SharePoint connector works fine until you encounter SharePoint lists hosted in MS Groups, which appears to use Azure Active Directory in a way that is very different from how the original SharePoint domain works. An easy way to tell is that your SharePoint lists have a domain like https://groups.companyname.com instead of https://companyname.sharepoint.com.

Currently, the workaround is to go through the MS Groups API, which is complicated and requires extra IT support for access and other credentials.

Can you devise a way to bring the dynamic network visualisation into PowerPoint? Right now, we can only see a static image in a browser.

As mentioned in detail here, I think that adding a "run as metadata" feature could be very helpful for making analytic apps more dynamic. It would also enable the dynamic configuration of tools in analytic apps that are chained together in a single workflow using Control Containers, mostly eliminating the need to chain multiple YXWZ files together just to use the previous analytic app's output. (This of course doesn't cover the cases where a complex WF/App has to be built by the previous app in the chain in order to switch to it, but Runner helps solve this to a certain extent, provided you don't have to pass any parameters/values to the generated WF/App.)

The addition of this feature would be somewhat similar to running an app with its outputs disabled, without having to run the entire app itself, but rather only certain parts specified by the user in a limited manner. Clicking the Refresh Metadata button (which will be active only if there is at least one metadata tool in the workflow) will update the data seen in the app interface (such as Drop Down lists, List Boxes etc.), provided the user selected the up-to-date input file(s) (or the data in a database is up-to-date) where the data will be obtained from.

To explain in detail with a use case, suppose you have two flows added to separate Control Containers where the second CC uses the field info of a file used in the first CC to enable the user to select a field from a drop down list to apply a formula (such as parsing a text using RegEx) for example. After specifying the necessary branch in the first CC where the field info is obtained, the user could select these tools and then Right Click => Convert to Metadata Tool to select which tools will run when the user clicks on the Refresh Metadata button. The metadata tools could of course be specified across the entirety of the workflow (multiple Control Containers) to update the metadata for all Control Containers and therefore all tabs of a "concatenated app", where multiple apps are contained in a single workflow.

With this feature, all tools that are configured as metadata tools (excluding tools that have no configuration) could be set up as either a "metadata only" tool or a "hybrid" tool. A hybrid tool could be configured separately for each of its two behaviours: all of a tool's configurations could be changed without restriction in each mode, and MetaInfo would update dynamically while refreshing metadata. The metadata-only configuration of a tool could be left the same as the workflow-only configuration if desired.

For example an Input File marked as a hybrid tool could be configured to read all records for its workflow tool mode and only 1 record for its metadata tool mode. This could be made possible with the addition of a new tab named Metadata Tool Configuration in addition to the already existing Configuration tab, and a MDToolConfig XML tree could be added to reflect these configurations to the XML of the tool in question, separate from the Configuration XML tree, and either one of those XML trees or both of them would be present depending on the nature of the tool chosen by the user (workflow tool, metadata tool or hybrid).

This would also mean that all the metadata tool configurations of a tool could optionally be updated using Interface tools. You could for example either update the input file to be read for both the workflow tool mode and metadata mode of an input tool at once, or specify separate input files using different interface tools. As another example, the amount of records to be read by a Sample tool could be specified by a Numeric Up/Down tool but the metadata tool configuration could be left as First 1 rows, without being able to change it from the App Interface.

Hybrid tool (note how Configuration and MDToolConfig have different RecordLimit settings):

<Node ToolID="1">
  <GuiSettings Plugin="AlteryxBasePluginsGui.DbFileInput.DbFileInput">
    <Position x="102" y="258" />
  </GuiSettings>
  <Properties>
    <Configuration>
      <Passwords />
      <File OutputFileName="" RecordLimit="" SearchSubDirs="False" FileFormat="25">C:\Users\PC\Desktop\SampleFile.xlsx|||`Sheet1$`</File>
      <FormatSpecificOptions>
        <FirstRowData>False</FirstRowData>
        <ImportLine>1</ImportLine>
      </FormatSpecificOptions>
    </Configuration>
    <MDToolConfig>
      <Passwords />
      <File OutputFileName="" RecordLimit="1" SearchSubDirs="False" FileFormat="25">C:\Users\PC\Desktop\SampleFile.xlsx|||`Sheet1$`</File>
      <FormatSpecificOptions>
        <FirstRowData>False</FirstRowData>
        <ImportLine>1</ImportLine>
      </FormatSpecificOptions>
    </MDToolConfig>
    <Annotation DisplayMode="0">
      <Name />
      <DefaultAnnotationText>SampleFile.xlsx
Query=`Sheet1$`</DefaultAnnotationText>
      <Left value="False" />
    </Annotation>
    <Dependencies>
      <Implicit />
    </Dependencies>
    <MetaInfo connection="Output">
      <RecordInfo />
    </MetaInfo>
  </Properties>
  <EngineSettings EngineDll="AlteryxBasePluginsEngine.dll" EngineDllEntryPoint="AlteryxDbFileInput" />
</Node>
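To make the two-tree layout concrete, here is a minimal Python sketch of how a consumer of that XML might pick the active configuration per mode. `MDToolConfig` is the element proposed in this idea and does not exist in the product today. The helper names and the trimmed sample node are assumptions for illustration only.

```python
import xml.etree.ElementTree as ET

# Trimmed-down version of the hybrid tool XML proposed in this post.
HYBRID_NODE = """
<Node ToolID="1">
  <Properties>
    <Configuration>
      <File RecordLimit="">SampleFile.xlsx</File>
    </Configuration>
    <MDToolConfig>
      <File RecordLimit="1">SampleFile.xlsx</File>
    </MDToolConfig>
  </Properties>
</Node>
"""


def active_config(node_xml, metadata_mode):
    """Return the config element for the requested mode, or None if absent."""
    node = ET.fromstring(node_xml)
    tag = "MDToolConfig" if metadata_mode else "Configuration"
    return node.find(f"Properties/{tag}")


def record_limit(node_xml, metadata_mode):
    """Return the record limit as an int, or None when no limit is set."""
    config = active_config(node_xml, metadata_mode)
    if config is None:
        return None
    file_element = config.find("File")
    if file_element is None:
        return None
    limit = file_element.get("RecordLimit", "")
    return int(limit) if limit else None


print(record_limit(HYBRID_NODE, metadata_mode=False))  # workflow run: no limit
print(record_limit(HYBRID_NODE, metadata_mode=True))   # metadata refresh: limited
```

A workflow-only tool would simply lack the `MDToolConfig` tree, so `active_config` returns `None` for it in metadata mode and the tool could be skipped during a metadata refresh.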

Please also note that this idea differs from another idea I posted (link above), named Dynamic Tool Configuration Change While the Workflow is Running. In that idea, the configuration is updated while the WF/App is actually running: for example, the Text to Columns tool in the second CC is dynamically changed using the output of a tool in the first CC. Here, by contrast, the user selects an input file and clicks Refresh Metadata from the App Interface before the workflow is run.

Attached is a screenshot and an analytic app to better demonstrate the idea.

Thanks for reading.

Hello, I believe this feature will be useful for many people.

The idea is to select multiple instances of the same tool so that the configuration we set is applied to all the selected tools. Furthermore, it would be useful to have an easy way, such as a shortcut, to select all instances of the same tool across a workflow in order to edit them more easily.

Not sure if API/SDK is the appropriate portion of the product that this enhancement would pertain to, but I thought that it was the most fitting option available.

I understand this is a long shot and would probably never happen, but I think it would be super cool if Alteryx had tools for blockchain development and web3 interaction.

The blockchain space is a complicated space, and every blockchain is a little bit different, so I am not exactly sure what this would even look like. But I imagine tools like connect wallet, query events, write transactions, or other common blockchain actions.

I think as the blockchain space continues to grow, there is going to be a continued increase in interest in developing blockchain applications, including from companies that want to use blockchain.

With the state the blockchain industry is in at the moment, I am sure most people reading this would think it's crazy, but if the blockchain industry does prevail in the long term, which I think it will, this could definitely be something to keep on the radar.

Think big!

Hello --

I have a process where I send an email to users before updating a spreadsheet that is now produced by an Alteryx workflow. Currently, I do this outside of Alteryx because if I choose to use Events -- it will send an email and immediately continue on with the rest of the workflow.

What would be ideal is to have an option to Wait for 10 minutes (or 600 seconds) before continuing on with the rest of the workflow -- assuming the email is sent before the workflow runs.

Thanks,

Seth
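The requested ordering (notify, pause, then continue) is simple to express in code. The sketch below is plain Python rather than anything Alteryx provides: `send_email` and `continue_workflow` are hypothetical callables standing in for the email event and the rest of the workflow.

```python
import time


def send_then_wait(send_email, continue_workflow, wait_seconds=600, sleep=time.sleep):
    """Send the notification, pause, then run the remaining steps.

    `sleep` is injectable so the pause can be replaced in tests.
    """
    send_email()
    sleep(wait_seconds)  # e.g. 600 seconds = 10 minutes
    return continue_workflow()
```

Making the wait duration a parameter mirrors the "Wait for 10 minutes (or 600 seconds)" option described above.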

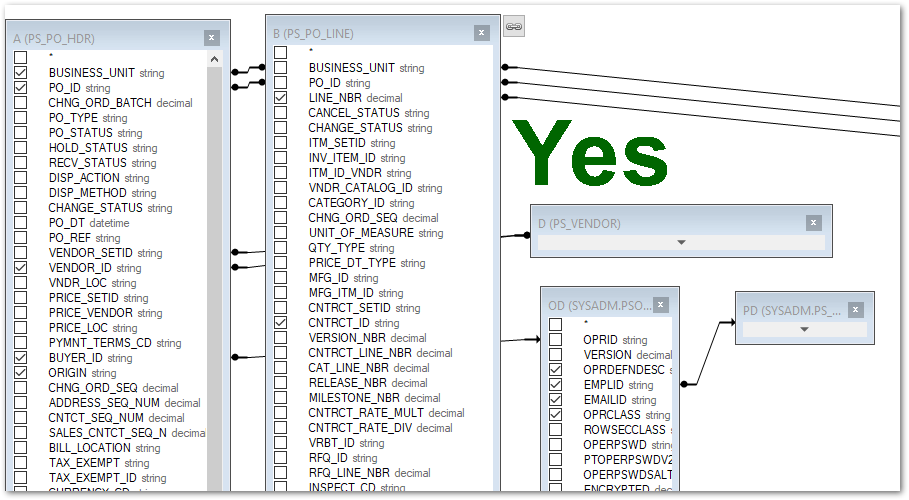

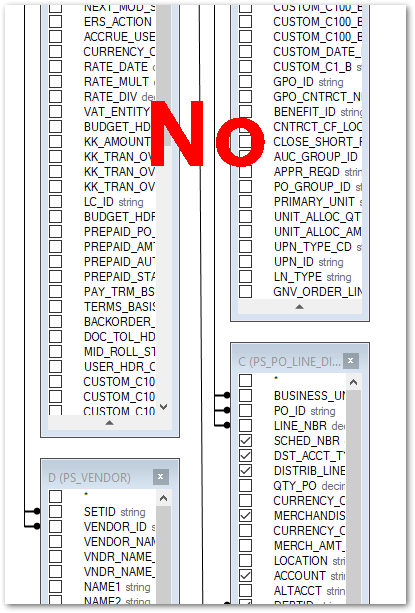

After closing the Table or Query option on an Input Data tool, the table layout in the Visual Query Builder view gets reset to stacking the tables/views on top of each other. It would be great if the layout stayed the way I left it the last time I closed it.

Currently, using AMP Engine will cause any workflow that depends on Proxies to fail. This includes any API workflow or any workflow with Download tool, etc.

They will all fail with DNS Lookup failures.

Many newer features in Alteryx Designer are now dependent on using AMP Engine, making those features (such as Control Containers) totally useless when running inside a corporate network that uses proxies to the outside world.

Please re-examine the difference between how a regular non-AMP workflow processes such traffic vs how AMP does it, because AMP is broken!

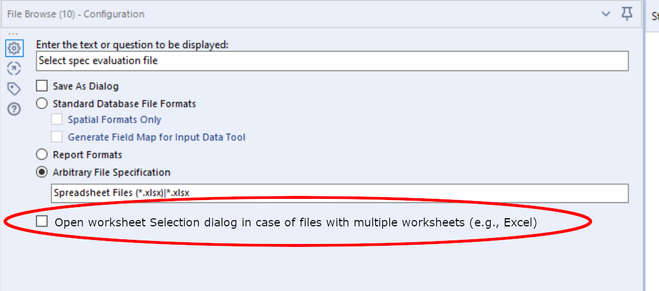

First of all, using File Browse on Excel files is inconsistent between running the Analytic App in Designer and in the Gallery:

- In Designer, the user is not asked which Excel worksheet shall be selected.

- In the Gallery, the user is always asked which Excel worksheet shall be selected.

Depending on the use case, both behaviours can be the right one:

- To load a specific Excel file worksheet, the dialog for worksheet selection is appropriate.

- When working with the entire Excel file (copying, getting the list of worksheets, etc.), the dialog is not helpful.

Thus, my idea is as follows:

- Add a checkbox to the File Browse tool that determines, when an Excel file is selected, whether the worksheet selection dialog is opened (and the output will be <filename>.<ext>|<worksheet>) or not (and the output will be <filename>.<ext>).

- Make behaviour consistent in Alteryx Designer and Gallery.
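The two output shapes from the proposal can be summarised in a short sketch. The function name `file_browse_output`, the `ask_for_worksheet` flag, and the list of Excel extensions are assumptions for illustration. The single `|` separator follows the notation used in this post.

```python
from typing import Optional

EXCEL_EXTENSIONS = (".xlsx", ".xlsm", ".xls")  # assumed list, for illustration
SEPARATOR = "|"  # notation used in this post


def file_browse_output(path: str, ask_for_worksheet: bool,
                       worksheet: Optional[str] = None) -> str:
    """Return the value File Browse would emit under the proposed checkbox."""
    is_excel = path.lower().endswith(EXCEL_EXTENSIONS)
    if is_excel and ask_for_worksheet:
        if not worksheet:
            raise ValueError("a worksheet must be chosen when the dialog is enabled")
        return f"{path}{SEPARATOR}{worksheet}"
    # Checkbox off, or not an Excel file: emit the plain file path.
    return path
```

Because the same function would apply in both Designer and the Gallery, the behaviour stays consistent regardless of where the app runs.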

This is a general request for uniform methods of connecting to data sources. The management of data connections is currently varied, and configurations/updates are completely different across connections.
