Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

- by adding the dimensional constraints next to each of the pre-trained models,

- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

- and, at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Allow the Input Data tool to accept a variable length (i.e., a variable number of fields) per record. I have a file with waypoints of auto trips; each record has a variable number of points, e.g., lat1, lon1, lat2, lon2, etc. Right now I have to use another product to pad every record out to the maximum number of fields in order to bring the file into Alteryx.
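For illustration only, the padding step described above (done today in another product) can be sketched in Python with the standard `csv` module: read the ragged rows, find the widest one, and pad shorter rows with empty strings. The function name `pad_ragged_rows` and the sample trip data are hypothetical; this is not how the Input Data tool works internally.

```python
import csv
import io


def pad_ragged_rows(text: str, fill: str = "") -> list[list[str]]:
    """Parse comma-separated text whose rows have different field counts
    and pad every row to the width of the widest row."""
    rows = list(csv.reader(io.StringIO(text)))
    width = max((len(row) for row in rows), default=0)
    # Append the fill value until each row reaches the maximum width.
    return [row + [fill] * (width - len(row)) for row in rows]


# Hypothetical waypoint file: trip id followed by lat/lon pairs.
sample = "trip1,40.1,-75.2,40.2,-75.3\ntrip2,41.0,-74.0\n"
for row in pad_ragged_rows(sample):
    print(row)
```

Padding to the maximum width is what makes the file rectangular, which is the shape a fixed-schema reader expects.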

The DateTime tool is a great way to convert various string arrangements into a Date/Time field type. However, this tool has two simple but annoying shortcomings:

- Convert Multiple Fields: Each DateTime tool only lets you convert one field. Many Alteryx tools (MultiField, Auto Field, etc.) allow you to choose what field(s) are affected by the tool. If I have a database with a large number of string fields all with the same format (such as MM/DD/YYYY), I should be able to use one DateTime tool to convert them all!

- Overwrite Existing Field: The DateTime tool always creates a new field that contains your converted date/time. I ALWAYS have to delete the original string field that was converted and rename the newly created date/time field to match the original string field's name. A simple checkbox (like the "output imputed values as a separate field" checkbox in the Imputation tool) could give the flexibility of choosing to have a separate field (like how it is now) or overwrite the string field with the converted date/time field (keeping the name the same).
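As a rough sketch of the two requested behaviours together (several fields, one format, converted values written back under the original field names), here is a plain-Python version. The function `convert_date_fields` and the sample record are hypothetical, and `"%m/%d/%Y"` is Python's `strptime` spelling of the MM/DD/YYYY pattern, not Alteryx's format syntax.

```python
from datetime import datetime


def convert_date_fields(records, fields, fmt="%m/%d/%Y"):
    """Parse several string fields with one format and overwrite each
    field in place, so the field names stay the same."""
    converted = []
    for record in records:
        new_record = dict(record)  # copy so the input records are not mutated
        for name in fields:
            value = new_record.get(name)
            if value:  # leave empty or missing values untouched
                new_record[name] = datetime.strptime(value, fmt).date()
        converted.append(new_record)
    return converted


rows = [{"id": "1", "order_date": "03/15/2016", "ship_date": "03/18/2016"}]
print(convert_date_fields(rows, ["order_date", "ship_date"]))
```

Overwriting in place is what removes the "delete the original, rename the new one" step; a separate-field option would simply write to `name + "_converted"` (or similar) instead.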

Alteryx is overall an amazing data blending software. I recognize that both of these shortcomings can be worked around with combinations of other Alteryx tools (or LOTS of DateTime tools), but the simplicity of these missing features demonstrates to me that this data blending tool is not sufficiently developed. These enhancements can greatly improve the efficiency of date handling in Alteryx.

STAR this post if you dislike the inflexibility of the DateTime tool! Thank you!

I work with large denormalized tables as input, and each time I need to scroll through approximately 700+ fields to get a complete view of the fields that are selected (even if I have selected only 10 of the 700 fields).

It would be helpful if, in addition to sorting on field name and field type, I could also sort on selected/deselected status. It would also help to sort by more than one option, i.e., sort within an already sorted list: for example, selected fields first and, within those, by field name.
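The multi-level sort requested here is a compound sort key: first by selection status, then by name. A minimal Python sketch, assuming a hypothetical `Field` record (the type names are just sample labels):

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    data_type: str
    selected: bool


def sort_fields(fields):
    """Selected fields first; within each group, sort by field name."""
    # False sorts before True, so `not f.selected` puts selected fields first.
    return sorted(fields, key=lambda f: (not f.selected, f.name.lower()))


fields = [
    Field("zip", "V_String", False),
    Field("customer_id", "Int64", True),
    Field("amount", "Double", True),
    Field("city", "V_String", False),
]
for f in sort_fields(fields):
    print(f.selected, f.name)
```

A tuple key gives "sort within an already sorted list" in one pass; adding a third element (for example `f.data_type`) would extend it to another level.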

I can get an idea of the selected fields from any tool further down the line (after the source transformation), but I would like a complete view of both selected and unselected fields so that I can pick/remove fields as the business requires.

"Enable Performance Profiling" is a great feature for investigating which tools within a workflow take up most of the time. This is fine to use during development.

It would be ideal to have this feature extended for the following use cases as well:

- Workflows scheduled via the scheduler on the server

- Performance profiling of macros and apps when executed both from the workstation and from the scheduler/Gallery

Regards,

Sandeep.
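The idea behind per-tool profiling can be sketched as timing each step of a pipeline and reporting each step's share of the total run time. This is a hypothetical Python illustration (`profile_steps` and the toy steps are made up), not a description of how Designer's profiler is implemented.

```python
import time


def profile_steps(steps, data):
    """Run named steps in order and record how long each one takes."""
    timings = []
    for name, func in steps:
        start = time.perf_counter()
        data = func(data)
        timings.append((name, time.perf_counter() - start))
    total = sum(seconds for _, seconds in timings) or 1.0
    # Report the slowest steps first, with their share of total run time.
    for name, seconds in sorted(timings, key=lambda t: t[1], reverse=True):
        print(f"{name:<10} {seconds:8.4f}s {100 * seconds / total:5.1f}%")
    return data, timings


steps = [
    ("input", lambda _: list(range(200_000))),
    ("formula", lambda rows: [r * 2 for r in rows]),
    ("filter", lambda rows: [r for r in rows if r % 3 == 0]),
]
result, timings = profile_steps(steps, None)
```

Because the timings are returned as data rather than only displayed, the same report could in principle be written to a log when a workflow runs unattended on a scheduler.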

We discovered that the 'Select' transformation does not throw warning messages when data truncation happens, whereas the 'Formula' transformation does report the relevant warning. I think it would be good to have consistent logging of warnings/errors across all transformations (at least across those serving the same use cases; for example, when using Alteryx as an ETL tool, 'Select' and 'Formula' are both commonplace).

Without this, it is difficult to rely completely on Alteryx to highlight truncation-related errors/warnings consistently in a workflow that moves or populates data from source to target. This can add the overhead of building custom logic to capture such data issues, and that logic differs from transformation to transformation: for example, when data passes through the 'Formula' tool there is no need for custom error/warning logging for truncation, but when the same data passes through a 'Select' transformation, truncation has to be captured with custom logic.
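The custom capture described above amounts to applying a field size and logging a warning whenever a value loses characters. A minimal Python sketch, assuming a hypothetical `apply_string_size` helper and plain dict records; it does not reproduce Alteryx's actual warning text.

```python
def apply_string_size(records, field, size, warnings):
    """Truncate `field` to `size` characters, logging a warning for every
    value that actually loses data."""
    out = []
    for i, record in enumerate(records):
        value = record[field]
        if value is not None and len(value) > size:
            warnings.append(
                f"record {i + 1}: {field} truncated from {len(value)} to {size} chars"
            )
            value = value[:size]
        out.append({**record, field: value})
    return out


warnings = []
rows = apply_string_size([{"name": "Alteryx"}, {"name": "ETL"}], "name", 5, warnings)
print(rows)
print(warnings)
```

Routing every size change through one function like this is what makes the warnings consistent, regardless of which step shrinks the field.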

I do a lot of ETL with data cleanup. I'd really like to be able to output the log file of any processes run on my desktop Alteryx. This would also allow adding Info tools to capture changes. The log file could be parsed and recorded as processing metadata.

Is there a reason why Alteryx does not include hierarchical clustering?

Admittedly, it is slow, especially with huge data sets, since the computational effort grows cubically. But when you need to do two-step clustering,

"creating more than enough k-means clusters and joining the cluster centers with hierarchical clustering", it seems to be a must...

P.S. KNIME, SPSS Modeler, SAS, and RapidMiner already have it...
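The two-step approach quoted above can be sketched in plain Python: run k-means with more clusters than needed, then merge the (few) centers hierarchically, which keeps the cubic cost small because it applies only to the centers. Single linkage is an assumption here; the post does not name a linkage method, and `kmeans`/`agglomerate` are illustrative helpers, not an existing Alteryx API.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Plain Lloyd's k-means; returns the cluster centers."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centers[c]))
            groups[nearest].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [
            tuple(sum(coord) / len(g) for coord in zip(*g)) if g else centers[c]
            for c, g in enumerate(groups)
        ]
    return centers


def agglomerate(centers, n_clusters):
    """Single-linkage hierarchical clustering of the k-means centers."""
    clusters = [[c] for c in centers]
    while len(clusters) > n_clusters:
        # Find the pair of clusters whose closest members are nearest.
        i, j = min(
            ((a, b) for a in range(len(clusters)) for b in range(a + 1, len(clusters))),
            key=lambda ab: min(
                math.dist(p, q) for p in clusters[ab[0]] for q in clusters[ab[1]]
            ),
        )
        clusters[i].extend(clusters.pop(j))  # j > i, so index i is unaffected
    return clusters


# Two well-separated synthetic blobs; over-cluster with k=8, then merge to 2.
rng = random.Random(1)
points = [(rng.gauss(0, 0.3), rng.gauss(0, 0.3)) for _ in range(50)]
points += [(rng.gauss(5, 0.3), rng.gauss(5, 0.3)) for _ in range(50)]
groups = agglomerate(kmeans(points, k=8), n_clusters=2)
print([len(g) for g in groups])
```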

It seems that version 10.6 (still in beta) will have an easy-to-use linear programming tool... We'll be able to allocate assets optimally, optimize our marketing decisions by feeding in the predictions we obtained with the predictive tools, etc.

But what about non-linear models? The idea is to add an evolutionary optimization capability to Alteryx Designer as well...

I've used a similar tool in Excel called Evolver, which was very useful: http://www.palisade.com/evolver/ It would be awesome to see that in coming versions...

Note that no single optimization method rules them all, and evolutionary algorithms are probably the slowest,

but I believe it would enable us to optimize the hyperparameters of our models and get much better results...
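To make "evolutionary optimization" concrete, here is a minimal (mu + lambda) evolution strategy in plain Python: each parent produces a mutated child, and the best individuals from parents plus children survive. This is a generic textbook scheme, not Evolver's algorithm, and `evolve` with its parameter defaults is a hypothetical illustration.

```python
import random


def clip(x, low, high):
    """Keep a value inside its allowed range."""
    return max(low, min(high, x))


def evolve(fitness, bounds, pop_size=30, generations=150, sigma=0.05, seed=0):
    """Minimize `fitness` with a simple (mu + lambda) evolution strategy.

    bounds: one (low, high) pair per parameter.
    sigma:  mutation step as a fraction of each parameter's range.
    """
    rng = random.Random(seed)
    population = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        # Each parent produces one child by Gaussian mutation of every parameter.
        children = [
            [clip(x + rng.gauss(0, sigma * (hi - lo)), lo, hi)
             for x, (lo, hi) in zip(parent, bounds)]
            for parent in population
        ]
        # Survivor selection: keep the best pop_size of parents + children.
        population = sorted(population + children, key=fitness)[:pop_size]
    best = population[0]
    return best, fitness(best)


# Toy objective with its minimum at (1, -2); for hyperparameter tuning this
# would instead be a model's validation error as a function of its settings.
best, score = evolve(lambda p: (p[0] - 1) ** 2 + (p[1] + 2) ** 2,
                     bounds=[(-5, 5), (-5, 5)])
print(best, score)
```

The objective is treated as a black box (no gradients, no linearity), which is why this family of methods fits non-linear problems, and also why it tends to be slow: every generation costs `2 * pop_size` fitness evaluations in the sort.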

There is no way to search the S3 object list for a specific object, which can make it impossible to find an object in a list of >1000. It would be great if there were some way of searching the object names, similar to the Salesforce Input Connector (10.5), which lets a user start typing the name of a table to find it.
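Amazon S3's ListObjectsV2 API returns at most 1,000 keys per request and can only filter server-side by key prefix, so a type-ahead "contains" search would have to scan every page on the client. A minimal sketch, where `pages` is a hypothetical stand-in for the paginated listing and `search_keys` is an illustrative helper:

```python
def search_keys(pages, query, limit=20):
    """Case-insensitive substring search over paginated object listings.

    `pages` is any iterable of key lists (for example, one list per
    1,000-key listing page), so matches beyond the first page are found.
    """
    needle = query.lower()
    matches = []
    for page in pages:
        for key in page:
            if needle in key.lower():
                matches.append(key)
                if len(matches) >= limit:
                    return matches  # stop early, as a type-ahead box would
    return matches


pages = [
    [f"logs/2016/day{i:04d}.csv" for i in range(1000)],
    ["reports/sales_q1.xlsx", "reports/sales_q2.xlsx"],
]
print(search_keys(pages, "SALES"))
```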

It would be great if the "fields from connected tool" option pulled fresh data at runtime when used in the gallery and pulling data from non-interface tools. The external source option doesn't have many settings (i.e. I can just point to one file), whereas the possibilities would be endless if I could use the full suite of tools to create a data set, at runtime, to pass to the list box/dropdown.

Could the workflow name be retained when browsing for a YXZP save location instead of blanking it out as soon as you change folders?

After upgrading to version 10.5, my workflows became unreadable for community members on earlier versions. It would be nice if either earlier versions could open the workflow (with a warning) or I could readily export/downgrade the version header. I understand that this would be problematic if the workflow contains elements unique to the new version, but it would be helpful to have.

There is a NOTEPAD solution that I use where I edit the XML,

Thanks for consideration.

Mark

It would help to have an option to test the outcome of a formula while building it, rather than creating dummy workflows with dummy data to test it.

For instance, there could be a dynamic window that generates input fields based on the fields used in the actual formula; one could enter test values there and click a 'Test' button to check the output within the tool itself.

This would also be very handy when writing big or complex formulas involving regular expressions, so that a user can test her formula without having to switch to third-party on-the-fly testing tools, run the entire original workflow, or create test workflows.
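The requested "Test" button is essentially a table of sample inputs and expected outputs checked against the formula. A minimal Python sketch of that idea, using a hypothetical ZIP-code extraction as the formula under test; note that Python's `re` syntax is not identical to the regex engine Alteryx uses, so patterns may need adjusting.

```python
import re


def try_formula(formula, cases):
    """Evaluate `formula` on each test input and return any mismatches
    as (input, expected, actual) tuples."""
    failures = []
    for value, expected in cases:
        actual = formula(value)
        if actual != expected:
            failures.append((value, expected, actual))
    return failures


# Hypothetical formula under test: pull a 5-digit US ZIP code out of an address.
ZIP = re.compile(r"\b(\d{5})(?:-\d{4})?\b")


def extract_zip(address):
    match = ZIP.search(address)
    return match.group(1) if match else None


cases = [
    ("123 Main St, Irvine, CA 92618", "92618"),
    ("PO Box 9, Boulder, CO 80302-1234", "80302"),
    ("No zip here", None),
]
print(try_formula(extract_zip, cases))  # an empty list means every case passed
```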

It would be good to have an option whereby clicking a particular data profiling output (at the cell level) shows the underlying records.

Maybe the configurator/designer could be given this option, selecting her choice of technical/business keys, so that when an end user of the Data Profiling report output clicks a profiling result, he is redirected to the records for the keys selected earlier.

One option might be to generate the data profiling output as a zip folder containing the data profiling results along with the key fields (hyperlinked files, etc.).

Since the data itself would then also be stored, it would be good to encrypt or password-protect the zip file according to relevant industry standards.

This could be provided as an optional feature, under something like advanced properties for the tool, following industry best practices for report formatting and rendering.

It should be optional because detailed linking back to source-level records is not always needed.

For example, if the need is only to present the Data Profiling outcome at a high level to a Data Analyst, this might not be useful.

On the other hand, if a Data Steward needs to actually go and correct the data based on the profiling results, linking the profiling results back to the source data might come in handy.
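The drill-down idea can be sketched as a profile that keeps, for each result cell, the keys of the records behind it. This minimal Python version only profiles null vs. non-null counts; `profile_with_keys` and the sample records are hypothetical.

```python
from collections import defaultdict


def profile_with_keys(records, key_field, columns):
    """Per column, count null and non-null values and remember which record
    keys fall into each bucket, so a result can be traced back to its rows."""
    profile = {}
    for col in columns:
        buckets = defaultdict(list)
        for rec in records:
            value = rec.get(col)
            bucket = "null" if value in (None, "") else "non-null"
            buckets[bucket].append(rec[key_field])
        profile[col] = {
            name: {"count": len(keys), "keys": keys} for name, keys in buckets.items()
        }
    return profile


records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": None},
]
print(profile_with_keys(records, "id", ["email"]))
```

Keeping only keys (rather than full rows) in the profile output is one way to make the linking optional and to limit how much data is stored alongside the report.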

I'd like to see a tool that you can drop into a workflow and it will stop running at that tool and/or start running after that tool. I know about the cache dataset macro, but I think it could be simplified and incorporated into the standard set of tools.

It would be super cool to run a regular workflow in "test mode" or some other such way of running it just one tool at a time, so you can check tool outputs along the way and fix issues as they occur, especially for big workflows. Another advantage would be that if, for whatever reason, a working module stops working (maybe someone changed an underlying file - that NEVER happens to me lol), rather than running the whole thing, fixing something, running the whole thing again, you could just fix what's broken and run it that far before continuing.

Actually, that gives me an even better idea... a stop/start tool. Drop it in the workflow and the module will run up to that point and stop or start from that point. Hmm... time to submit a second idea!
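A stop/start tool boils down to caching each step's output so a run can stop after one step and a later run can resume from the next without recomputing earlier steps. A minimal Python sketch with an in-memory dict as a stand-in for an on-disk cache; `run_pipeline` is hypothetical and does not describe how the Cache Dataset macro works.

```python
def run_pipeline(steps, cache, start_at=None, stop_after=None):
    """Run named steps in order, caching each step's output.

    start_at:   resume from this step, reusing the cached output of the step
                before it (raises KeyError if that output was never cached).
    stop_after: stop once this step has run.
    """
    names = [name for name, _ in steps]
    begin = names.index(start_at) if start_at else 0
    data = cache[names[begin - 1]] if begin > 0 else None
    for name, func in steps[begin:]:
        data = func(data)
        cache[name] = data
        if name == stop_after:
            break
    return data


calls = []


def step(name, func):
    """Wrap a step so we can see which steps actually ran."""
    def wrapped(data):
        calls.append(name)
        return func(data)
    return name, wrapped


steps = [
    step("input", lambda _: [3, 1, 2]),
    step("sort", sorted),
    step("double", lambda xs: [x * 2 for x in xs]),
]
cache = {}
print(run_pipeline(steps, cache, stop_after="sort"))  # runs input and sort only
calls.clear()
print(run_pipeline(steps, cache, start_at="double"))  # reuses the cached sort output
```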

Hello. While working at my company, it has come up a few times that an Interface tool that acts like a standard "grid" would be extremely helpful.

This tool would allow users to update data whose values are logically linked to each other, like a standard "row". Below are a few very simple examples where this would be extremely helpful.

#1) An application is used as a file-system ETL, with an interface that lets users select which data to pull from a flat file and store in a database. Users pick which 'columns' to keep by specifying the column name, type, and final database location of each column.

In this case, a user needs to enter 3 key pieces of field information that have to relate to each other.

#2) There is a table in a database that holds state-level thresholds for a specific law (for example: all loans in Florida must have a loan balance < 300k). However, these laws can be updated, and the end users want the ability to #1: see the data, and then #2: update it if the laws have changed.

A grid would be perfect in this case, as they could query the data to see the 50 states and their specified limits, and then change the data in the "limits" column to update the database.

The previous 2 examples are pretty simple, but I have run into this request a few times in my personal experience, so I figured I would see what everyone thought!

Give the option in the tag properties to place the tag to the right of the tool.

Make this the default setting for Browse and Output tools, or others that would normally be found at the end of a workflow. This keeps the detail while giving a cleaner view of the module.

It would be great to connect the Email tool to MS Exchange without SMTP, because my company's policy is not to use SMTP at all (it is therefore blocked).

For this reason, I am not able to use the Email tool.

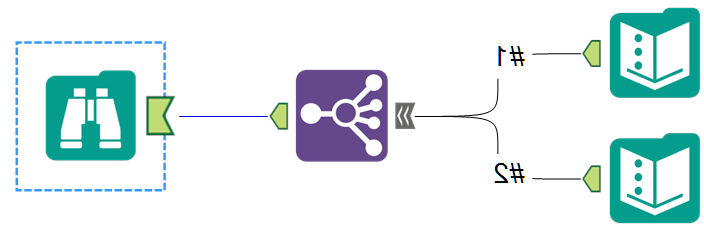

I'd like to see a tool that can take an input and then send it in different directions (similar to the Formula tool), but with many options, based on filters and/or formulas and/or fields.

Sometimes I need to perform actions on parts of my data, or perform different actions depending on whether the data matches certain criteria, and then union it back together later.

Right now, the Filter tool only allows true or false. If we could customize further, we could optimize our workflows rather than stringing Filter tools together as if they were nested if/then statements.

So either the Filter tool could have more options than true/false, with an unlimited number of outputs, or the Join Multiple tool could be flipped, as shown below.

I envision something that says:

Split workflow:

- By Field: Field Name (perhaps with summarize functions such as min/max, etc.)

- By Formula (same configuration as current)

- By Filter

  - Field

  - Operator

  - Variable
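The proposed split tool behaves like an if/elseif chain with one output per branch, plus a per-value split for the "By Field" option. A minimal Python sketch; `split_records`, `split_by_field`, and the sample sales data are hypothetical.

```python
def split_records(records, routes, default="other"):
    """Send each record to the first route whose condition it matches.

    routes: ordered list of (output_name, predicate) pairs, like a chain of
    if/elseif branches; unmatched records go to `default`.
    """
    outputs = {name: [] for name, _ in routes}
    outputs[default] = []
    for record in records:
        for name, predicate in routes:
            if predicate(record):
                outputs[name].append(record)
                break
        else:  # no route matched
            outputs[default].append(record)
    return outputs


def split_by_field(records, field):
    """One output per distinct value of `field` (the "By Field" option)."""
    outputs = {}
    for record in records:
        outputs.setdefault(record[field], []).append(record)
    return outputs


records = [
    {"id": 1, "region": "West", "sales": 500},
    {"id": 2, "region": "East", "sales": 2500},
    {"id": 3, "region": "West", "sales": 3000},
    {"id": 4, "region": "East", "sales": 100},
]
routes = [
    ("west", lambda r: r["region"] == "West"),
    ("big_sales", lambda r: r["sales"] > 1000),
]
out = split_records(records, routes)
print({name: [r["id"] for r in rows] for name, rows in out.items()})
```

Re-unioning afterwards is just concatenating the output lists, since every record lands in exactly one output.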
