Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
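Until the tool covers the last two points, both could be approximated upstream of it. The following is only a minimal sketch in plain Python, not anything the Image Recognition Tool provides: the function names `gray_to_rgb` and `balance_by_label` are hypothetical, images are assumed to be already loaded as rows of 0-255 integers, and balancing is done by simple undersampling to the size of the smallest label.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Expand a grayscale image (rows of ints) to RGB by repeating each value."""
    return [[(px, px, px) for px in row] for row in image]


def balance_by_label(samples, seed=0):
    """Undersample (item, label) pairs so every label keeps the same count."""
    groups = defaultdict(list)
    for item, label in samples:
        groups[label].append(item)
    # Every label is cut down to the size of the rarest one.
    smallest = min(len(items) for items in groups.values())
    rng = random.Random(seed)
    balanced = []
    for label, items in groups.items():
        for item in rng.sample(items, smallest):
            balanced.append((item, label))
    return balanced


gray = [[0, 128], [255, 64]]
rgb = gray_to_rgb(gray)

samples = [("a", 0), ("b", 0), ("c", 0), ("d", 1), ("e", 1)]
balanced = balance_by_label(samples)
```

Undersampling throws data away, so for small datasets an oversampling or augmentation step might be a better fit.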

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Please add a configuration option to the Redshift bulk load to use EITHER access keys OR an IAM EC2 role for access.

We should not have to specify access keys when we are in an IAM enabled environment.

Thanks

In order to debug a call to a REST API, it is often necessary to take the web call and pop it into a web browser. Can you add a second output to a REST API tool (a derivative of the Download tool) that provides the full web call that was made, including the full parameterised URL? This would make it MUCH easier to debug REST API calls.

cc: @TashaA

Similar to this idea https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Download-tool-Request-and-Response-details/i...

except my preference would be to pull Rest API calls into a more specific tool and give a second output for the responses
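To illustrate what the "full parameterised URL" on such a second output could look like, here is a minimal sketch using only Python's standard library. The function name `build_full_url`, the example endpoint, and the parameters are all hypothetical; this is not how the Download tool assembles its requests.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit


def build_full_url(base_url, params):
    """Return base_url with params appended as an encoded query string."""
    scheme, netloc, path, query, fragment = urlsplit(base_url)
    extra = urlencode(params)
    # Keep any query string already present on the base URL.
    if query and extra:
        query = f"{query}&{extra}"
    else:
        query = query or extra
    return urlunsplit((scheme, netloc, path, query, fragment))


url = build_full_url(
    "https://api.example.com/v1/items",  # hypothetical endpoint
    {"q": "blue widgets", "page": 2},
)
print(url)
```

The resulting string can be pasted straight into a browser, which is the debugging step described above.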

See the discussion on this page:

Roughly, in all versions of Alteryx Designer, you can use the Annotations tab to rename a tool. This is awesome for execution in Designer, because you can then easily search for certain tool names, better document your workflow, and see the custom tool name in the Workflow Results.

However, when log files are generated, either via email, the AlteryxGallery settings, or an AlteryxEngineCMD command, each tool is recorded using only its default name of "ToolId Toolnumber", which is not particularly descriptive and makes these log files harder to parse in the case of an error.

Having the custom names show in these log files would go a long way towards improving log readability for enterprise systems, and would be an amazing feature add/fix. For users who prefer that the default format be shown, this could be considered as a request to ADD renames in addition to the existing format. EG "Input Data 1" that I have renamed to "Load business Excel File" could be shown in the log as:

00:00:0.003 - ToolId 1 - Load business Excel File: 1 record was read from File Finished in 00:00:0.004
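The proposed format can be sketched as a small formatting function. This is only an illustration of the request: `format_log_line` is a hypothetical name, and the assumed default layout (`<elapsed> - ToolId <n>: <message>`) is inferred from the example above rather than taken from the engine's logging code.

```python
def format_log_line(elapsed, tool_id, message, custom_name=None):
    """Build a log line, adding the tool's custom name when one is set."""
    prefix = f"{elapsed} - ToolId {tool_id}"
    if custom_name:
        # The rename is ADDED to the existing identifier, not substituted for it.
        prefix += f" - {custom_name}"
    return f"{prefix}: {message}"


renamed = format_log_line(
    "00:00:0.003", 1, "1 record was read from File",
    custom_name="Load business Excel File",
)
default = format_log_line("00:00:0.003", 1, "1 record was read from File")
print(renamed)
print(default)
```

Because the `ToolId <n>` part is unchanged, existing scripts that parse the logs by tool ID would keep working.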

Hello all,

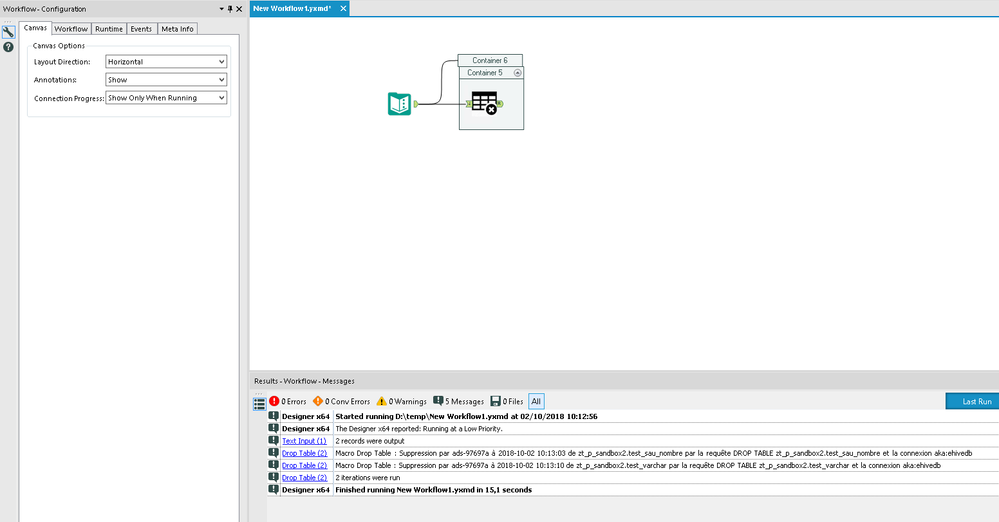

Big picture : on Hadoop, a table can be

-internal (it's managed by Hive or Impala, and acts like any other database)

-external (it's managed by Hadoop, can be shared among the different Hadoop databases such as Hive and Impala, and by default it is not deleted when you drop the table)

For info, about deletion of external tables:

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/using-hiveql/content/hive_drop_external_table_...

Alteryx only creates internal tables while it would be nice to have the ability to create external tables that we can query with several tools (Hive, Impala, etc).

It should be configurable:

-by default for connection

-by tool if we want to override the default

Best regards,

Simon

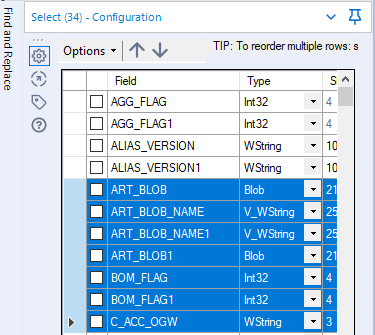

If the tables in the config window have lots of rows, it is quite complicated to find those of interest.

Please add a filter or search option (e.g. by the field name) to display only the relevant rows.

It would also be helpful to select or deselect multiple selected rows with one click.

Find an example from the "Select-Tool":

Where it stands now, only a file input tool can be used to pull data from Google BigQuery tables. The issue here is that the data is streamed and processed locally, meaning the power of BigQuery processing isn't actually being leveraged.

Adding BigQuery In-Database as a connection option would appeal to a wide audience. BigQuery's standard SQL is also compliant with the SQL 2011 standard, so this may make for an even easier integration.

I wasn't able to find an existing idea for this, so here goes...

We often put URLs in Comment boxes in order to direct workflow users to different resources, but users are then required to go into the Comment and copy the string (and then paste in their browser). It would be quite handy if the Comment tool simply turned text strings with a url format into clickable links (as seen in Word, Slack, etc).

It would be even more handy if it also had basic text formatting tools (bold, italic, underline, coloring, highlighting of specified characters/words) -- or if it could just render html (like this text tool I'm typing into right now) 😎
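As a rough illustration of the first request, the URL detection itself is not complicated. The sketch below is an assumption-laden example in Python, not a description of how the Comment tool works: `linkify` and `URL_PATTERN` are hypothetical names, and the pattern only recognises plain `http://` and `https://` URLs.

```python
import html
import re

# Deliberately simple: an http(s) scheme followed by non-space characters.
# Trailing punctuation (e.g. a final ".") would be captured as part of the URL.
URL_PATTERN = re.compile(r"https?://[^\s<>\"]+")


def linkify(text):
    """Return HTML in which every URL found in text becomes a clickable link."""
    parts = []
    last = 0
    for match in URL_PATTERN.finditer(text):
        # Escape the plain text between URLs so it cannot inject markup.
        parts.append(html.escape(text[last:match.start()]))
        url = html.escape(match.group(0))
        parts.append(f'<a href="{url}">{url}</a>')
        last = match.end()
    parts.append(html.escape(text[last:]))
    return "".join(parts)


print(linkify("See https://duckdb.org/ for details"))
```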

When working in a large workflow wireless connections help to make it easier to work with. However sometimes you want to be able to see all your connections (when debugging).

I'd like to see a toggle (a button on the toolbar) which would display all the connections, including wireless ones. Ideally the wireless connections would be a different color. You could then click the button again to make the wireless connections invisible.

Reason:

The existing options to display are limited as you have to click on individual tools to see the connections.

All too often, we build an Alteryx flow just to realise that step 8 out of 10 was wrong, so it's back to the beginning to rerun the entire thing. This is often tedious if your work requires a big data set.

There is a workaround using the Cache Macro, which can be downloaded, but it requires quite a bit of fiddling with containers, disabling items, setting flags, etc. It would be good to allow the user to "restart from here", like you can with a PowerPoint slide deck. I appreciate that this may be tricky, since Alteryx may be flushing data out of memory as it goes along and so cannot restart from any arbitrary point. But if we could put the workflow into a "testing cached mode" that caches data at each step, or allow users to set particular controls as breakpoints and cache at those points, that would help immensely.
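The "cache at each step, then restart from here" idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the engine's behaviour: a workflow is modelled as a plain list of functions, `run_pipeline` is a hypothetical name, and the cache is an in-memory dict rather than anything written to disk.

```python
def run_pipeline(steps, data, cache, start_from=0):
    """Run steps in order, caching each output so a later run can resume.

    steps: list of one-argument functions
    cache: dict mapping step index -> output of that step
    start_from: index of the first step to (re)run
    """
    if start_from > 0:
        # Resume from the cached output of the step just before the restart point.
        data = cache[start_from - 1]
    for index in range(start_from, len(steps)):
        data = steps[index](data)
        cache[index] = data
    return data


calls = []

def load(x):
    calls.append("load")
    return x + 1

def transform(x):
    calls.append("transform")
    return x * 2

def wrong_step(x):
    calls.append("wrong_step")
    return x - 3

def fixed_step(x):
    calls.append("fixed_step")
    return x - 4


cache = {}
first = run_pipeline([load, transform, wrong_step], 5, cache)

# After correcting the last step, rerun only from that point.
second = run_pipeline([load, transform, fixed_step], None, cache, start_from=2)
```

In the second run, `load` and `transform` are not called again; their cached outputs are reused.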

Thank you

Sean

Hello,

As of today, the In-DB connection window is divided into:

-write tab

-read tab

However, writing means two different things: inserting and in-db writing. Alteryx already has 2 different tools (Data Stream In and Write Data).

So what I propose is to divide the window into:

-read

-write

-insert

Best regards,

Simon

Today, the behaviour of a batch macro can be strange.

If I refer to https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Batch-Macro-not-looping-after-running-...

we can have big differences in behaviour between:

-workflow and app

-Designer and Scheduler

Example here with a batch macro running for all lines in Designer and for only one line in Scheduler

I know the workaround (just use a message box), but it's not natural and I think:

-at the very least, the same behaviour is needed in every use case

-if you want to do some optimization, OK, but make it an option!

The Transpose In-DB tool has been in the "Laboratory" category for years now. I understand Alteryx invested some time and money to develop it, but sadly we still can't use that tool for sensitive workflows. Did you find some bugs in it? Can you please fix them and make this tool an "official" tool?

Thanks

Hello Alteryx Devs -

When I go to write some scripting in the Formula tool, my data stream's fields should be the first to be suggested once I start typing a letter, not the last.

uppercase(Ad -> gives me:

DateTimeAdd

FileAddPaths

PadLeft

PadRight

ReadRegistryString

[Address]

I think we would need a dedicated R macro to ascertain the chances that anyone is going to need [ReadRegistryString] before they need a column of their own data that starts with [Ad...]
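The ordering being asked for can be sketched as follows. This is a hedged Python illustration, not the Formula tool's actual autocomplete logic: `rank_suggestions` is a hypothetical name, and the case-insensitive substring match is an assumption inferred from the suggestion list above.

```python
def rank_suggestions(typed, fields, functions):
    """Return suggestions with matching data fields ahead of built-in functions."""
    needle = typed.lower()

    def matches(names):
        hits = [name for name in names if needle in name.lower()]
        # Within a group, names that START with the typed text come first.
        return sorted(
            hits,
            key=lambda name: (not name.lower().startswith(needle), name.lower()),
        )

    return [f"[{name}]" for name in matches(fields)] + matches(functions)


suggestions = rank_suggestions(
    "Ad",
    fields=["Address", "City"],
    functions=["DateTimeAdd", "FileAddPaths", "PadLeft", "PadRight", "ReadRegistryString"],
)
print(suggestions)
```

With this ordering, `[Address]` is the first suggestion instead of the last.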

Easy fix. Makes a big difference.

Thanks.

Currently it's possible to use the Output tool to output to a sheet, a place in a sheet, or a named range in Excel, but it is not possible to output to a preformatted Excel table. It would be really good if the Output tool had an option to output to [Table1] in an Excel workbook, for example. This enhancement would be incredibly helpful for reporting purposes.

Hello all,

DuckDB is a new project of embeddable database by the team behind MonetDB. From what I understand, it's like a SQLite database but for analytics (columnar-vectorized query execution engine on a single file). And of course it's open-source and free.

More info on their website : https://duckdb.org/

Best regards,

Simon

Hi there,

Adam ( @AdamR_AYX ), Mark ( @MarqueeCrew) and many others have done a great job in putting together super helpful add-in macros in the CREW pack - and James ( @jdunkerley79 ) has really done an incredible job of filling in some gaps in a very useful way in the formula tools.

Would it be possible to include a subset of these in the core product as part of the next release?

I'm thinking of (but others will chime in here to vote for their favourite):

- Unique only tool (CReW)

- Field Sort (CReW)

- Wildcard XLSX input (CReW) - this would eliminate a whole category of user queries on the discussion boards

- Runner (CReW), although this may have issues with licensing since many people don't have command line permission. Alteryx really does need the ability to do chained dependency flows in a smoother way.

- Date Utils (JDunkerley) - all of James's Date utils - again, these would immediately solve many of the support questions asked on the discussion forum

I think that these would really add richness & functionality to the core product, and at the same time get ahead of many of the more common queries raised by users. I guess the only question is whether the authors would have any objection?

Thank you

Sean

Please enhance the input tool to have a feature you could select to test if the file is there and another to allow the workflow to pause for a definable period if the input file is locked by another user, then retry opening. The pause time-frame would be definable for N seconds and the number of iterations it would cycle through should be definable so you can limit how many attempts to open a file it would try.

File presence should be something we could use to control workflow processing.

A use case would be a process that runs periodically and looks to see if a file is there and if so opens and processes it. But if the file is not there then goes to sleep for a definable period before trying again or simply ends processing of the workflow without attempting to work any downstream tools that might otherwise result in "errors" trying to process a null stream.

An extension of this idea and the use case would be to have a separate tool that could evaluate a condition like a null stream or field content or file not found condition and terminate the process without causing an error indicator, or perhaps be configurable so you could cause an error to occur or choose not to cause an error to occur.

Using this latter idea, we would have an enhanced input tool that can pass a value downstream or generate a null data stream to the next tool. That next tool, like a Filter tool, can then evaluate a condition (a null stream, a file-not-found indicator, or some other condition) and terminate processing per its configuration, either with or without a failure indicated, according to the wishes of the user. I have had times when a file was not there and I just wanted the workflow to stop without throwing errors; other times I may want it to error out so that I investigate. In other scenarios, my data goes through a filter or two and no data passes the last filter, yet downstream tools still run and generally fail because they have no data to act on. I don't want that: it may be perfectly valid that on a Sunday or holiday no data passes the filters.

Having meandered through this, I sum up: the ideal is to enhance the input tool so it can test file presence and pass that information on to another tool that evaluates it and controls the workflow run accordingly. As a separate tool, it could be applied to a wider variety of scenarios and test a broader scope of conditions to decide whether to proceed or terminate the workflow.
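The check-pause-retry behaviour described for the input tool could look roughly like the sketch below. It is written in Python purely for illustration and makes several assumptions: `wait_for_file` is a hypothetical name, "locked" is approximated by the file failing to open for reading, and the caller decides what to do when the file never becomes available.

```python
import os
import time


def wait_for_file(path, attempts=3, pause_seconds=5.0):
    """Return True once path exists and can be opened, else False.

    attempts: maximum number of tries (the "number of iterations")
    pause_seconds: how long to sleep between tries (the "N seconds")
    """
    for attempt in range(attempts):
        if os.path.exists(path):
            try:
                with open(path, "rb"):
                    return True
            except OSError:
                pass  # present but locked or unreadable: retry after the pause
        if attempt < attempts - 1:
            time.sleep(pause_seconds)
    return False


# The caller chooses between a quiet stop and an error.
if wait_for_file("input_that_may_not_exist.csv", attempts=2, pause_seconds=0.1):
    print("file ready: continue with downstream tools")
else:
    print("file not available: end quietly without running downstream tools")
```

Returning a flag rather than raising an exception matches the request that a missing file should be able to end the run without an error, while still letting the user choose to fail instead.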

This functionality would allow the user to select (through a highlight box, or ctrl+click), only the tools in a workflow they would want to run, and the tools that are not selected would be skipped. The idea is similar to the new "add selected tools to a new tool container", but it would run them instead.

I know the conventional wisdom is to either put everything you don't want run into a tool container and disable it, or to just copy/paste the tools you want run into a blank workflow. However, for very large workflows, it is very time consuming to disable a dozen or more containers, only to re-enable them shortly afterwards, especially if those containers have to be created to isolate the tools that need to be run. Overall, this would be a quality of life improvement that could save the user some time, especially with large or cumbersome workflows.
