Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has the same number of images);

> and, at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
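The second suggestion, splitting the training data so that every label gets the same number of images, can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not how the Image Recognition tool works: the function name `balanced_split`, the `(path, label)` input format and the 80/20 default split are all hypothetical. It undersamples every label to the size of the rarest one, then splits each label's images into training and holdout sets.

```python
import random
from collections import defaultdict


def balanced_split(items, train_fraction=0.8, seed=0):
    """Hypothetical helper: split (path, label) pairs so that every label
    contributes the same number of images to train and to holdout."""
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)

    # Undersample every label to the size of the rarest label.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, holdout = [], []
    for label in sorted(by_label):
        paths = by_label[label][:]
        rng.shuffle(paths)
        kept = paths[:per_label]
        train += [(p, label) for p in kept[:n_train]]
        holdout += [(p, label) for p in kept[n_train:]]
    return train, holdout


# Usage: 10 "cat" and 5 "dog" images -> 4 of each in train, 1 of each in holdout.
items = [(f"cat{i}.png", "cat") for i in range(10)] + [(f"dog{i}.png", "dog") for i in range(5)]
train, holdout = balanced_split(items)
```

Undersampling throws away surplus images from the larger labels; when data is scarce, oversampling the smaller labels or weighting the classes are common alternatives.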

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


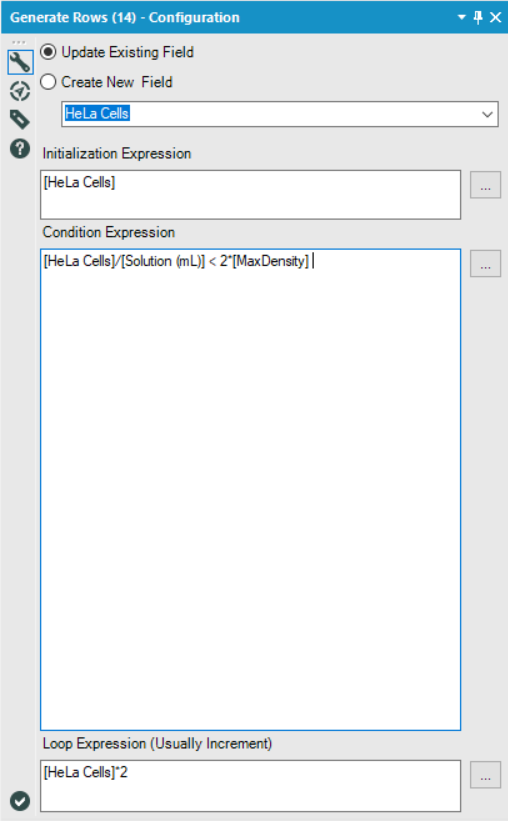

While working on Weekly Challenge #108, I was trying to design a non-macro solution.

I settled on the Generate Rows tool and tried to generate rows until I had reached or exceeded the maximum density. However, I always ended up with one row too few, because the final row I was looking for was the one that broke the condition I had specified.

In order to get around this, I came up with the following solution:

Essentially, I set my condition to twice the true threshold I was looking for. This worked because my Loop Expression always doubled the current value, so any value that broke the 'actual' condition ([MaxDensity]) would necessarily also break the doubled condition once it was doubled again.

However, for many other loop expressions, this sort of solution would not work.

My idea is to include a checkbox which, when selected, would also generate the final row which broke the specified condition.

Adding such a checkbox would let users keep using the Generate Rows tool as they already do, while reducing the amount of condition engineering needed to get that one extra row, as well as the number of potentially unseen errors in their workflows.
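The requested behaviour can be modelled with a small Python sketch. The Generate Rows tool is configured with an Initialization Expression, a Condition Expression and a Loop Expression; the function below imitates that loop, and the `include_breaking_row` flag is a hypothetical stand-in for the proposed checkbox. It is an illustration of the idea, not the tool's actual implementation.

```python
def generate_rows(init, condition, loop, include_breaking_row=False):
    """Imitates a Generate Rows loop: emit values while condition() holds.

    include_breaking_row is a hypothetical stand-in for the proposed
    checkbox: it also emits the first value that fails the condition.
    """
    rows = []
    value = init
    while condition(value):
        rows.append(value)
        value = loop(value)
    if include_breaking_row:
        rows.append(value)  # the row that broke the condition
    return rows


max_density = 100

# Today: stops one row short of the first value >= max_density.
print(generate_rows(1, lambda v: v < max_density, lambda v: v * 2))
# [1, 2, 4, 8, 16, 32, 64]

# The doubled-threshold workaround only works because the loop doubles.
print(generate_rows(1, lambda v: v < 2 * max_density, lambda v: v * 2))
# [1, 2, 4, 8, 16, 32, 64, 128]

# With the proposed checkbox, the true threshold can be used directly.
print(generate_rows(1, lambda v: v < max_density, lambda v: v * 2, include_breaking_row=True))
# [1, 2, 4, 8, 16, 32, 64, 128]
```

With a loop such as `v + 15`, doubling the threshold would add many extra rows, which is why a checkbox generalizes better than adjusting the condition. This sketch also emits the initial value when it fails the condition immediately; how a real checkbox should treat that case is an open design question.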

- Category Preparation

- Desktop Experience

- Feature Request

- Tool Improvement

The CrossValidation tool in Alteryx requires that, if a union of models is passed in, all the models being compared were induced on the same set of predictors. Why is that necessary? Isn't the tool only comparing prediction performance for the plots, while making the predictions separately for each model? The tool runs fine when I remove that requirement. In theory, model performance can be compared using nested cross-validation: a set of predictors is chosen at the inner level, and the model is assessed at the outer level. So I don't immediately see an argument for enforcing this requirement.

This is the code in question:

```r
# Flag consecutive models in the union whose predictor variables differ
if (!areIdentical(mvars1, mvars2)) {
  errorMsg <- paste("Models", modelNames[i], "and", modelNames[i + 1],
                    "were created using different predictor variables.")
  stopMsg <- "Please ensure all models were created using the same predictors."
}
```
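To illustrate the argument that a performance comparison only needs predictions on the same held-out rows, here is a minimal Python sketch of plain k-fold cross-validation for two one-variable linear models built on different predictors (`x1` and `x2`). The toy data, fold scheme and helper names are invented for the example; this is not the CrossValidation tool's code.

```python
def kfold_indices(n, k):
    """Assign row i to fold i % k (a simple, deterministic fold scheme)."""
    return [list(range(start, n, k)) for start in range(k)]


def fit_simple_ols(rows, predictor):
    """Least-squares line y = a + b * x for a single named predictor."""
    xs = [r[predictor] for r in rows]
    ys = [r["y"] for r in rows]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    intercept = mean_y - slope * mean_x
    return lambda r: intercept + slope * r[predictor]


def cv_mse(rows, fit, k=5):
    """Out-of-fold mean squared error; the model is refit on every training fold."""
    squared_errors = []
    for test_idx in kfold_indices(len(rows), k):
        held_out = set(test_idx)
        train = [r for i, r in enumerate(rows) if i not in held_out]
        model = fit(train)
        squared_errors += [(model(rows[i]) - rows[i]["y"]) ** 2 for i in test_idx]
    return sum(squared_errors) / len(squared_errors)


# Toy data: y depends exactly on x1; x2 is only loosely related to y.
rows = [{"x1": i, "x2": (i * 7) % 11, "y": 3 * i + 1} for i in range(20)]

mse_x1 = cv_mse(rows, lambda train: fit_simple_ols(train, "x1"))
mse_x2 = cv_mse(rows, lambda train: fit_simple_ols(train, "x2"))
print(f"model on x1: {mse_x1:.3f}, model on x2: {mse_x2:.3f}")
```

In this sketch both scores are computed on exactly the same held-out rows, so they are directly comparable even though the two models use different predictors.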

As an aside, why does the CV tool still require Logistic Regression v1.0 instead of v1.1?

And please, please, please, can we get the Model Comparison tool built into Alteryx and upgraded to accept v1.1 Logistic Regression and other models that don't pass `the.formula`? It is essential for teaching predictive analytics using Alteryx.

- Category Predictive

- Desktop Experience

- Tool Improvement

1. The Union tool

When I switch to the Manual method and then add fields upstream, the result is only a "Field was not found" warning. I don't look for warnings; this should create a red error. Having fields fall off the workflow is a pain.

2. Unique tool

Changing fields upstream causes the tool to error out when the workflow runs, but no issues are shown before the run.

3. Having all containers open up when I reopen a workflow is a nightmare when there are 20+ overlapping containers.

- Category Join

- Desktop Experience

- Tool Improvement

Please provide an option to send a tool container's caption to the log/Results window once all the tools inside it have been processed.

- Tool Improvement

Can you devise a way to bring the dynamic network visualisation into PowerPoint? Right now, we can only see a static image in a browser.

- Tool Improvement

The "Field Summary" tool and several others have a configuration option that provides a list of fields to select or deselect. Selection works on one item at a time, meaning you can only apply the action to one field in a list of many. As the number of fields we work with grows significantly, this becomes a time-consuming and tedious task.

This should be enhanced to allow highlighting multiple fields to select or deselect at once, as we can in tools like the "Select" tool.

- Tool Improvement

The field summary tool is an excellent resource to get an overview of the data and spot targets for analysis or data cleansing.

Unfortunately, it has a limit on either the number of fields included or some combination of the number of fields and one or more of their attributes. I found nothing in the documentation that makes users aware of this. When more than N fields are selected, the system just hangs: it indicates that it is running, but no connection progress is shown and nothing seems to happen, even if you limit the input to 1 record.

Through trial and error, I found an approximate limit on the number of fields I can include and still have the tool work.

I request that Alteryx update the tool's help information and enhance the tool to balance its load dynamically, so that it can scale to the number of fields requested, or at least warn when the limit is reached or approached. Such a warning could be similar to the red-font warnings in the Formula tool when you have a malformed expression. However, a load-balancing version is most desired.

As it stands, users waste a lot of time trying to make the tool work as expected and then report it to support as an apparent bug, which can be argued either way.

I realize there are limits in the real world, but in this real world we are seeing the number of fields to analyze increase significantly, especially when you license third-party data and integrate it with your own native data, adding a hundred or even hundreds of fields.

- Category Data Investigation

- Desktop Experience

- Tool Improvement

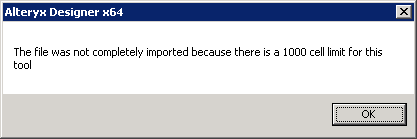

Hi Alteryx,

I tried importing a file into my Text Input tool and ran into this error.

Why is there a limit? Can we get rid of it, since we're not living in DOS anymore?

- Tool Improvement

During execution, the user cannot scroll around. Large workflows have to be zoomed out to very small icons to follow the progress. Please either add an option to automatically center on the active tool or allow scrolling during execution.

- Tool Improvement

It would be helpful to have one of the following methods to disable output modules to prevent overwriting output files each time a workflow is run:

- A global 'Disable All Output Modules' option, which would effectively mute the workflow without removing any connections

- A module-specific 'disable/enable this module' option, to the same end

- Feature Request

- Tool Improvement




