Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it by:

- adding the dimensional constraints next to each of the pre-trained models,

- adding a proper tool to divide the training data correctly (so that each label has an equivalent number of images),

- at the very least, allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
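Until the tool covers these points, two of them can be approximated before the images reach it. The sketch below is only an illustration under stated assumptions: `balanced_split` and `gray_to_rgb` are hypothetical helper names, images are represented as plain nested lists, and nothing here reflects how the Image Recognition tool works internally.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs so every label contributes the same count.

    Each label is downsampled to the size of the rarest label, then the
    kept items are divided into a training part and a test part.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)

    train, test = [], []
    for label, items in by_label.items():
        rng.shuffle(items)
        kept = items[:per_label]
        train += [(item, label) for item in kept[:n_train]]
        test += [(item, label) for item in kept[n_train:]]
    return train, test


def gray_to_rgb(image):
    """Repeat each gray value three times so a 2-D grid becomes RGB triples."""
    return [[(value, value, value) for value in row] for row in image]


# Hypothetical file names: 10 images of one label, 4 of another.
samples = [(f"cat_{i}.png", "cat") for i in range(10)]
samples += [(f"dog_{i}.png", "dog") for i in range(4)]
train, test = balanced_split(samples, train_fraction=0.75)
```

Downsampling to the rarest label is the simplest way to balance classes; oversampling the rare label would be an alternative when data is scarce.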

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


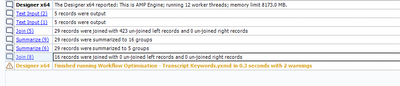

There are three places that provide log information:

1) Regular results window:

Pro: Shown in process sequence, so the user can understand the order of the process.

Con: Doesn't have info on how long each tool takes to process.

2) Workflow -> Runtime -> Enable Performance Profiling

Pro: Processes are sorted by processing duration in descending order, which helps identify the ones that took longest to run.

Con: Doesn't show the process sequence.

3) Actual Alteryx log file:

Pro: There are timestamps for each process, so the duration can be calculated.

Con: Not readily accessible and not user friendly to view from the interface. Not clickable to see more details in the workflow.

I think it will be SUPER HELPFUL to integrate all three, showing the tools in process order along with their running time.
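As a rough illustration of the combined view, the sketch below derives per-tool durations from timestamps while keeping process order, and can also re-sort them slowest first. The timestamp layout, the idea that each entry marks when a tool finished, and the function names are all assumptions made for the example; the real Alteryx log format is not reproduced here.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%d %H:%M:%S"  # assumed timestamp layout, not the real log format


def tool_durations(run_start, finished):
    """Return (tool, seconds) pairs in process order.

    `finished` is a list of (timestamp, tool_name) entries, each assumed to
    mark the moment a tool completed, listed in the order they occurred.
    """
    previous = datetime.strptime(run_start, TIME_FORMAT)
    rows = []
    for stamp, tool in finished:
        current = datetime.strptime(stamp, TIME_FORMAT)
        rows.append((tool, (current - previous).total_seconds()))
        previous = current
    return rows


def slowest_first(rows):
    """Re-sort the same rows by duration, like the profiling view."""
    return sorted(rows, key=lambda row: row[1], reverse=True)


rows = tool_durations(
    "2024-01-01 10:00:00",
    [
        ("2024-01-01 10:00:05", "Input Data"),
        ("2024-01-01 10:01:05", "Join"),
        ("2024-01-01 10:01:07", "Output Data"),
    ],
)
```

Treating each gap between timestamps as one tool's duration is a simplification, since tools may overlap while data streams through them.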

Labels: Engine, Enhancement

It's often challenging to estimate the run time of various workflows, and a run time of over 3 hours can often be indicative of errors in the workflow. Could we have an estimated runtime calculator? This would also help with timing when pushing against deadlines.
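One very simple way such a calculator could work is to scale from past runs. The sketch below is an assumption-laden illustration, not a description of any Alteryx feature: it supposes a history of (rows processed, seconds) pairs is available and that run time grows roughly linearly with row count.

```python
from statistics import median


def estimate_runtime(history, rows):
    """Estimate seconds for `rows` records from past (rows, seconds) runs.

    Uses the median seconds-per-row so one unusual run does not skew the
    estimate. Assumes run time scales linearly with the number of rows.
    """
    rate = median(seconds / count for count, seconds in history if count > 0)
    return rate * rows


def looks_suspicious(seconds, limit_hours=3):
    """Flag estimates above the limit (3 hours, as mentioned in the post)."""
    return seconds > limit_hours * 3600


# Hypothetical history of three earlier runs of the same workflow.
history = [(1000, 10), (2000, 20), (4000, 44)]
estimate = estimate_runtime(history, 1_000_000)
```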

Fingers crossed and thanks!

Labels: Engine, Runtime

Hi All,

This is a fairly straightforward request. I'd like to be able to pass interface tool values through to the workflow events the same way I would pass them through to a tool in the workflow (%Question.<tool name>%). One use case for this is that we are calling a workflow and passing in an ID, and if this workflow fails, I'd like to trigger an event that will call back to the application and say this specific workflow for this ID failed.

The temporary solution is to have the workflow write to a temp file and have the event reference that temp file, but this is clunky and risky if there are parallel runs occurring.

Best,

devKev

In the Overview pane - can you please show which tools have completed the current run, when viewing this pane during a canvas run? That would allow for a progress check at a glance.

Labels: Engine, Runtime

Additional Dynamic Select Mode for All Native (Non-Macro) Tools with Select Functionality (with or without Data Type Selection)

This is the updated version of an idea I posted a while ago (which only included Multi-Field Formula), and after the release of Alteryx Designer 2025.1, which I found to be very successful from a new tool and functionality perspective, I decided to post about it.

My proposal is to add the Dynamic Select functionality* (at least the Select via a Formula mode) to all native (non-macro) tools, in all tool categories, that include a Select functionality. For tools such as Join and Append, this would be an alternative mode where the user is OK with not being able to also change the field types of the selected fields. The opposite would apply to Multi-Field Formula, where the user would be able to dynamically select which fields the Multi-Field Formula is applied to, in addition to changing the data type. The tools include, but are not limited to, the following (to account for any new tool with a Select functionality that might be added in the future):

Preparation Category

- Auto Field

- Data Cleanse Pro (added in 2025.1)

- Multi-Field Formula

- Multi-Row Formula (for Group By option)

- Rank (for Group By option)

- Record ID (for Group By option)

- Sample (for Group By option)

- Tile (for Group By option)

- Unique

Join Category

- Append Fields

- Find Replace

- Join

- Join Multiple

Transform Category

- Arrange

- Cross Tab

- Make Columns (for Grouping Fields (Optional) option)

- Running Total (for both Group By (Optional) and Create Running Total options)

- Transpose (for both Key Columns and Data Columns options; the tool would generate an error if the Dynamic Select formulas written for the two options select the same field(s), as the Transpose tool is not supposed to allow it)

- Weighted Average (for Grouping Fields (Optional) option)

In-Database Category

- Select In-DB

Reporting Category

- Layout (for Group By and Per Column Configuration options)

- Table (for Group By and Per Column Configuration options)

Machine Learning Category

- Transformation (for Select Features mode only, as the other two modes with Select functionality (Clean Up Missing Values and One Hot Encoding) require Method and Missing Category Action specification)

Developer Category

- Download (for And values from these fields option present in Headers and Payload tabs)

- Dynamic Rename (for the Select functionality present in Formula mode)

Spatial Category

- Find Nearest

- Spatial Info

- Spatial Match

Data Investigation Category

- Pearson Correlation

Skipping Address and Demographic Analysis categories as they have tools that seem to be using a static input, therefore not requiring a Dynamic Select functionality.

Laboratory Category

- JSON Build (for Grouping Fields (Optional) option)

- Transpose In-DB (with a similar logic to the regular Transpose tool found in Transform category)

*The Dynamic Select functionality added to tools that have more than one input anchor (such as Join and Join Multiple) could expose additional fields the users can utilize, such as:

- [Origin] (can have the values "L" or "R" for Join and Append tools)

- [Connection_ID] (can have the values 1, 2, 3 etc. for Join Multiple tool)

- [Unknown] (can have the values "True" or "False" for the Data Columns option of the Transpose tool, or any other tools such as Join that would have the Dynamic or Unknown Columns option as a part of their Select functionality)
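To make the proposal concrete, the sketch below models "Select via a Formula" as a predicate evaluated against each field's metadata, including the proposed [Origin] and [Unknown] values. It is only an illustration: the `Field` class, the function names, and the sample fields are hypothetical, and the predicate stands in for an Alteryx formula expression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    name: str
    type: str
    origin: str = "L"      # stands in for the proposed [Origin]: "L" or "R"
    unknown: bool = False  # stands in for the proposed [Unknown] flag


def dynamic_select(fields, predicate):
    """Return the names of the fields for which the predicate is true."""
    return [f.name for f in fields if predicate(f)]


def check_no_overlap(key_fields, data_fields):
    """Mimic the proposed Transpose error when both selections share a field."""
    overlap = set(key_fields) & set(data_fields)
    if overlap:
        raise ValueError(f"Fields selected twice: {sorted(overlap)}")


# Hypothetical fields arriving at a Join tool from its two inputs.
fields = [
    Field("CustomerID", "Int64", "L"),
    Field("Sales_2024", "Double", "L"),
    Field("Sales_2025", "Double", "R"),
    Field("Region", "V_String", "R"),
]

# Keep only sales columns coming from the right input.
right_sales = dynamic_select(
    fields, lambda f: f.origin == "R" and f.name.startswith("Sales_")
)
```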

Labels: Desktop Experience, Engine, Enhancement, UX

I would love to see an option to run only one container without having to disable all others (and tools not in containers).

I've got workflows with MANY different queries/tools, each in their own containers, and some tools outside of containers. Occasionally I need to run or re-run just one of the containers (usually several times when the datastream contains Crosstab or Transpose tools where some fields/options will not populate until the workflow has previously run). Normally I'd either have to disable all other containers and/or select EVERYTHING that I do not wish to run and add it all to another container that I could then disable. An option to disable everything outside of a specific container would be most welcome and save a lot of time!

Labels: Engine, Enhancement

Similar to being able to change the parameters of a tool using the interface tools, it could be very useful if Alteryx Designer had an option where the configuration of a tool can be modified by another tool's output while the workflow is running, therefore reducing the need to chain multiple apps. That output can only consist of one row and one column (it may include line breaks or tab characters, and only the first row is used if there are multiple rows).

This feature could be made possible as the "Control Containers" feature is now implemented, and it could work like below:

Suppose you need to write to a database and may need to specify a Pre-SQL statement or Query that needs to be dynamically changed by the result of a previous tool in the workflow.

In this case, as the configuration of a tool in the next container needs to be changed by the result of a previous formula, there would need to be an additional icon below the tools, indicating that the tool's result can be used for configuration change.

This icon, which will appear below the tools, will only be visible once at least one Control Container and an Action tool are added to the workflow, and will automatically be removed if all the Control Containers are removed from the workflow. The user can change the configuration of the destination tool using an Action tool. The Action tool must be connected to a tool in a container that runs after the container holding the source tool has finished, because the tool (or tools) in the next Control Container needs to be modified before that container is activated.

If a formula tool containing multiple formula fields is added to the action tool, the user will see all the formula outputs similar to connections (i.e. [#1], [#2]...) that can be used as a parameter.

The screenshot below demonstrates the idea, but please note that this is a change where adding an action tool may not mean that this workflow will need to become either a macro or an analytic app, so a new workflow type may or may not have to be defined, such as "Dynamic Configuration Workflow (YXDW)". Analytic Apps and Macros which utilize this feature could still be built without having to define a new workflow type.

Labels: Category Apps, Engine, New Request, UX

I just noticed in a workflow I'm looking at, that I derived a column but after a bit of developing, forgot about it, so there it sat, unused. It doesn't hurt anything, but it would be useful if that sort of thing would automatically generate a soft warning on the tool in question: e.g. any item not referenced downstream automatically generates an "Unused variable" warning.
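The check behind such a warning could be as simple as the sketch below. It assumes a linear stream where each tool declares the fields it creates and the fields it references; that representation, and the tool and field names, are hypothetical and only serve the example.

```python
def unused_fields(tools):
    """Return (tool, field) pairs for fields never referenced downstream.

    `tools` is a list of dicts in stream order, each with a "name", the
    fields it "creates", and the fields it "uses".
    """
    warnings = []
    for index, tool in enumerate(tools):
        used_later = set()
        for later in tools[index + 1:]:
            used_later.update(later["uses"])
        for field in tool["creates"]:
            if field not in used_later:
                warnings.append((tool["name"], field))
    return warnings


# Hypothetical stream: "Discount" is derived but never used afterwards.
stream = [
    {"name": "Formula", "creates": ["Margin", "Discount"], "uses": ["Sales", "Cost"]},
    {"name": "Filter", "creates": [], "uses": ["Margin"]},
    {"name": "Output Data", "creates": [], "uses": ["Region", "Margin"]},
]
warnings = unused_fields(stream)
```

A real implementation would also have to decide whether a field that simply passes through to an output counts as "used".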

Labels: Engine

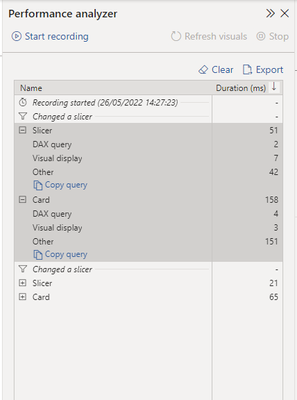

Alteryx is very quick already, but it would be useful to know the computational cost of different approaches to building a workflow that uses a lot of data. This would make it easier to know whether your optimization of the workflow is working as expected, and also which tools in particular do the work best. Other software such as Power BI has a performance analysis section which breaks down how each action impacted performance.

It would be great to get a similar breakdown of how long each tool is taking to run in the results window.

Labels: AMP Engine, Engine

With the introduction of Workflow Comparisons in V2021.3, it only seems natural to me that the next step would be a method of handling those changes. Maybe have some clickable dropdowns on the changed tools that have a few options as to what you'd like to do about them. I think the options to start off with would be "Apply this change to that workflow" and "Apply the other workflow's change to this one", along with "Apply all of this workflow's changes to that one" and "Apply all of that workflow's changes to this one" somewhere in the header.

I know that I will occasionally get a request to change a workflow while I'm already in the middle of making a change to it or am waiting on approval for a change I've made and am hoping to implement. The current version control system on Server does not make it easy to implement multiple changes that may need to be implemented in an order other than the one in which they were started. The current process seems to be to merge them later by going through the whole process of selectively copying the changed tools and pasting/replacing them or otherwise manually modifying the tools to make them match.

Likewise, implementing the version merging proposed here will allow versioning strategies more akin to branches in git. One could more-or-less maintain two streams of changes until they were both complete and merge or productionize them as they're complete and ready.
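The git-style merging described here is usually done as a three-way merge against a common ancestor. The sketch below illustrates that idea on tool configurations keyed by tool ID; it is a generic technique shown under assumptions (configurations as plain values, a known common base version), not something Alteryx provides today.

```python
def merge_workflows(base, mine, theirs):
    """Three-way merge of {tool_id: configuration} mappings.

    A change made on only one side is taken automatically. If both sides
    changed the same tool differently, the tool is reported as a conflict
    and "mine" is kept as a placeholder until the user decides.
    """
    merged, conflicts = {}, []
    for tool_id in sorted(set(base) | set(mine) | set(theirs)):
        b, m, t = base.get(tool_id), mine.get(tool_id), theirs.get(tool_id)
        if m == t:        # both sides agree (including both deleted)
            result = m
        elif m == b:      # only "theirs" changed this tool
            result = t
        elif t == b:      # only "mine" changed this tool
            result = m
        else:             # both changed it in different ways
            conflicts.append(tool_id)
            result = m
        if result is not None:
            merged[tool_id] = result
    return merged, conflicts


# Hypothetical configurations, shown as short strings for readability.
base = {1: "filter: A > 1", 2: "sort: asc", 3: "formula: X"}
mine = {1: "filter: A > 5", 2: "sort: asc", 3: "formula: X + 1"}
theirs = {1: "filter: A > 1", 2: "sort: desc", 3: "formula: X * 2"}
merged, conflicts = merge_workflows(base, mine, theirs)
```

The "apply this change" dropdowns from the post would then only be needed for the tools listed in `conflicts`.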

Labels: Engine, XML

See this community link for context:

tl;dr:

An option to clear the In-DB File History is not available in the Designer's GUI. If this feature is required, it's recommended to open an Idea on the Alteryx Community to submit an enhancement request.

Please implement this as an idea; I need to clear some In-DB connections that are no longer valid and in a managed environment, accessing the registry is laughable.

Thank you!

Labels: Engine, New Request

The Excel driver (.xlsx) converts #N/A values to 0. If you use the legacy Excel driver (.xlsx), it brings in the #N/A values. This issue was reported in the community, and I am forwarding it as a New Idea on behalf of @JohnDoe, as a problem that needs to be addressed.

Labels: Engine

In case of a system crash or upgrade, it should be possible to transfer an Alteryx license from one system to another, or from one user to another. Users should be able to surrender, borrow, or transfer a license from one machine to another. This would allow more flexible use of the product.

Labels: Engine

To measure the computational complexity of an Alteryx workflow, you need a unit of measure. Because execution time depends on hardware performance, it is not suitable for comparison across different PCs. I have provisionally named this unit the Alteryx Calculation Score (ACS).

ACS is useful for:

1. Troubleshooting: I want to compare my workflow's ACS and execution time between my PC and another PC. If the workflow overflows a PC's memory, the ACS stays the same but the execution time gets worse.

2. Comparison: I would like to compare the ACS of my Weekly Challenge workflow with other people's workflows.

3. Tool selection: when you want to choose a suitable Alteryx tool for your purpose, ACS will be a good guide.

ACS is roughly proportional to execution time excluding disk and network I/O. An Alteryx tool does not have a single fixed ACS value, because its computational cost depends on the data and settings.

I believe ACS will improve the performance of Alteryx and its workflows.
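To show what a hardware-independent score might look like, here is a deliberately naive sketch. The per-record weights are invented for the example and do not come from Alteryx; the only point is that the score depends on the tools and the amount of data, not on the machine.

```python
# Hypothetical cost per record for a few tools (made-up numbers).
TOOL_WEIGHTS = {"Select": 1, "Filter": 2, "Sort": 8, "Join": 10}


def acs(steps):
    """Sum weight * records over (tool, records_processed) steps."""
    return sum(TOOL_WEIGHTS[tool] * records for tool, records in steps)


# Two ways of building the same result: filter before or after the join.
filter_first = acs([("Filter", 1_000_000), ("Join", 100_000)])
join_first = acs([("Join", 1_000_000), ("Filter", 1_000_000)])
```

Under these assumed weights, filtering first scores lower, which is the kind of comparison between approaches the post has in mind.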

Labels: Engine, Feature Request

It would be handy if it were possible to order (i.e. right-click to drag, as in the Select Tool) ALL constants created by the user, including Question constants etc.

Labels: Engine, General

Problem:

Currently, scheduling via the Designer on the controller is independent of the Gallery. So, even after a canvas is deleted, the scheduler continues to execute the cached version of the canvas for as long as the schedule exists.

Note, this issue does not occur when the canvas is directly scheduled in the gallery, and only occurs when you schedule via the Designer on the controller directly.

Steps to replicate issue:

1) Publish a canvas into gallery

2) Schedule the canvas to run daily via the Designer --> Options --> View Schedules --> Select Controller --> Create new workflow and schedule

3) Delete the canvas from gallery

4) You will notice that the canvas is still getting run on the defined schedule, even though you have deleted the canvas

Observed in Alteryx 11.5.1

Idea Recommendation:

The golden copy of a canvas should be the version existing in the Gallery. Once the Gallery instance of the canvas is deleted or replaced with a new version:

- All artifacts related to the old version should be marked as "Deleted"

- All existing schedules should be stopped from executing

- All metadata attributes and execution history related to the old version should be retained (not wiped out) but clearly marked as archived/deleted
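The recommended behaviour can be summarised as a small state change, sketched below. The `WorkflowRecord` and `Schedule` classes and their fields are hypothetical and do not mirror the actual Gallery or scheduler data model.

```python
from dataclasses import dataclass, field


@dataclass
class Schedule:
    workflow_id: str
    enabled: bool = True


@dataclass
class WorkflowRecord:
    workflow_id: str
    status: str = "Active"
    history: list = field(default_factory=list)  # past execution results


def delete_from_gallery(record, schedules):
    """Apply the three recommendations when a canvas is deleted."""
    record.status = "Deleted"              # mark related artifacts as deleted
    for schedule in schedules:
        if schedule.workflow_id == record.workflow_id:
            schedule.enabled = False       # stop existing schedules
    # record.history is intentionally left untouched: it is archived, not wiped
    return record


# Hypothetical data: one canvas with a daily schedule, plus an unrelated one.
record = WorkflowRecord("wf-1", history=["2017-10-01 OK", "2017-10-02 OK"])
schedules = [Schedule("wf-1"), Schedule("wf-2")]
delete_from_gallery(record, schedules)
```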

Labels: Engine, Runtime

The constant [Engine.GuiInteraction] can be used to determine whether a workflow was run in the Designer or Gallery. Currently, there's no method to also find out whether a workflow was initiated by a schedule or run manually in the Gallery. The information is available in the Gallery but not forwarded to inside the workflow.

Please introduce a new variable [Engine.ScheduledRun] (or similar) which determines whether the workflow was initiated by a schedule (value "true" if boolean or "schedule" if string type) or manually (value "false" or "manual").

Labels: Engine, New Request, Scheduler

When using certain tools, particularly Marketplace tools like the SharePoint Input/Output, it would be helpful to have a quick way to find out which version is being used in a workflow. Something along the lines of an option when you right-click the tool that displays the current version would be ideal.

This would be helpful in several cases, but primarily when handing over workflows. There are cases when I have multiple versions of the same tool installed so that I don't have any issues inheriting workflows. This does, however, make things confusing when handing workflows back. Tool Version Labelling would solve this problem.

Regards - Pilsner

Labels: Engine, Enhancement

Vanilla Alteryx Chained Apps can only progress linearly, which means developers cannot let users skip a few applications, jump to the last app in the chain, or select which specific app to trigger based on the requirement.

This can be bypassed by using a Render tool with PCXML output and an HTML link to the application you are trying to divert to, which does not affect the existing workflow in any way.

By using the set of tools below on any workflow/chained app, you can either branch the flow of apps or skip a few apps in the chain.

- An Excel or CSV file which holds the links of the apps. The reason for keeping the hyperlinks in an external file is so that the link can be updated if the server link changes - refer Image 1

- A Filter tool to specify which application to move to (can be changed using a radio button/drop down to app 2/3/4/5 etc.)

- A Text tool (this is where the magic is) - configure it to pick the server link from the incoming data from the Filter tool as a hyperlink and generate an output preview - refer Image 2

- A Render tool as output, writing to any PCXML file, e.g. "File.pcxml" - refer Image 3
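The logic of these four steps (look up the link, filter to the chosen app, build a hyperlink) can be sketched outside Alteryx as follows. The CSV column names, the first URL, and the function names are assumptions made for the illustration; in the workflow itself the same steps are done with the Input, Filter, Text and Render tools.

```python
import csv
import html
import io

# Stand-in for the external links file; the gallery.example.com URL is a placeholder.
LINKS_CSV = """app,url
App 2,https://gallery.example.com/apps/2
App 3,https://www.alteryx.com
"""


def load_links(text):
    """Read the app-to-link table, as the input file step would."""
    return {row["app"]: row["url"] for row in csv.DictReader(io.StringIO(text))}


def hyperlink_for(choice, links):
    """Filter to the chosen app and build the hyperlink shown to the user."""
    url = links[choice]
    return f'<a href="{html.escape(url, quote=True)}">{html.escape(choice)}</a>'


links = load_links(LINKS_CSV)
link_html = hyperlink_for("App 3", links)
```

Keeping the links in a separate table means only that table changes when the server address does, which is the reason the post gives for the external file.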

Image 1 - Input Configuration with the flow that can be part of any existing application

Image 2 - Text Tool Configuration

Image 3 - Render tool Configuration

POC in action

- Let's assume our 3rd application is located at www.alteryx.com and the user selects the 3rd app in the radio button

- This would generate an Output Preview like below

Now, if I click on App 1, it diverts me to www.Alteryx.com

Keywords : Chained Applications, Chained Apps, Application Sequence, Skip Application Sequence, Branch Application Sequence, Application Order, Controlled Order, Trigger Next Application

Regards,

Maithreyan S

Labels: Category Apps, Engine, Enhancement

Maybe this is pointless, but my guess is that memory usage could be as important as processing time, and it is probably a simple addition to the performance profiling feature.

Labels: Engine, Enhancement
