Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images)
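Until single-channel input is supported, one possible workaround for the MNIST case above is to copy each gray value into all three channels before handing the images over. A minimal Python sketch, assuming the images are already loaded as rows of 0-255 integers; the names `gray_to_rgb` and `digit` are hypothetical and nothing here is part of the Image Recognition tool itself:

```python
from typing import List, Tuple

GrayImage = List[List[int]]                  # rows of 0-255 intensities
RgbImage = List[List[Tuple[int, int, int]]]  # rows of (R, G, B) pixels


def gray_to_rgb(image: GrayImage) -> RgbImage:
    """Copy each grayscale intensity into the R, G and B channels."""
    return [[(value, value, value) for value in row] for row in image]


# A tiny 2x3 stand-in for a 28x28 MNIST digit (hypothetical data).
digit = [[0, 128, 255],
         [64, 32, 16]]
rgb_digit = gray_to_rgb(digit)
print(rgb_digit[0][1])  # (128, 128, 128)
```

The result looks identical to the original image but has the three-channel shape that an RGB-only pipeline expects.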

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


We are big fans of the In-Database Tools and use them A LOT to speed up workflows that are dealing with large record counts, joins etc.

This is all fine, within the constraints of the database language, but an annoyance is that the workflow is harder to read, and looks messy and complicated.

A potential solution would be to keep the bottom half of the icon all blue as it is now, but have the top half show the original palette for that tool.

i.e. Connect In-DB - Green/Dark Blue

Filter In-DB - Light Blue/Dark Blue

Join In-DB - Purple/Dark Blue

etc.

In-DB workflows would then look as cool as they are!

Thanks

dan

- Category In Database

- Data Connectors

I've run into an issue where I'm using an Input (or Dynamic Input) tool inside a macro (attached) which is being updated via a File Browse tool. I work at a large company with several data sources, so we use a lot of Shared (Gallery) Connections. The issue is that whenever I try to enter any sort of aliased connection (Gallery or otherwise), it reverts to the default connection I have in the Input or Dynamic Input tool. It does not act this way if I use a manually typed connection string.

Initially, I thought this was a bug, so I brought it to Support's attention. They told me that this was the default action of the tool. So I'm suggesting that the default action of the Input and Dynamic Input tools be changed so that it can be overridden by aliased connections with File Browse and Action tools. The simplest way to implement this would probably be to translate the alias before pushing it to the macro.

The current SharePoint connector works fine until you encounter SharePoint lists hosted in MS Groups, which appear to use Azure Active Directory and work quite differently from the original SharePoint domain. An easy way to tell is whether your SharePoint lists have a domain of https://groups.companyname.com instead of https://companyname.sharepoint.com.

Currently the workaround is to go through the MS Groups API, which is complicated and requires extra IT support for access and other credentials.

- Category Connectors

- Data Connectors

I've used tools like BRIO/Hyperion with a wide range of control over the output to MS Excel. The Jooleobject allowed me to control MS Outlook, Excel, Word, etc. The goal is to run a scheduler within Alteryx every week to blend data, insert the data into pre-formatted and renamed Excel templates, copy those files to a network shared drive and finally send a formatted email to my users. If I can get past copy/paste routines, my job would be much more efficient. Thx

- Category Input Output

- Data Connectors

Hi,

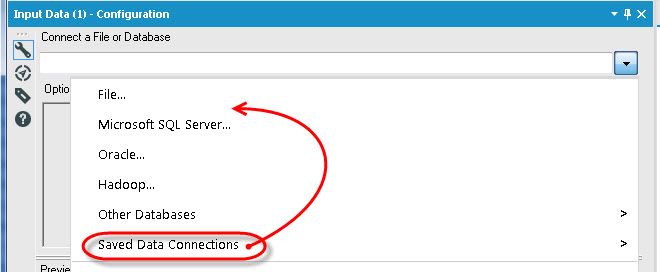

In the Input tool, it would be useful to have the Saved Database Connections option higher in the menu, not last. Most users I know use this drop-down frequently, and I find myself always grabbing the Other Databases option instead, as it expands before my mouse gets down to the next one. I would vote to have it directly after File..., so that the top two options cover either desktop data or "your" server data. To me, all the other options are one-offs used on a case-by-case basis and don't need to be above things that are used far more frequently. Just two cents from a long-time user...love the product either way!

Thanks!!

Eli Brooks

- Category Input Output

- Category Interface

- Category Preparation

- Data Connectors

Lately, Alteryx cannot read many TAB files (I am finding this with files created in MapInfo 16). I have posted about this in other forums but wanted to bring it up in the product ideas section as well.

- Category Input Output

- Category Spatial

- Data Connectors

- Location Intelligence

When using an SQLite database file as an input, please provide the option to Cache Data, as is available when opening an ODBC database. Currently, every time the Input tool is selected, it takes an inordinate amount of time for Alteryx to become responsive, especially if the query is complex. SQLite is not a multi-user database and is locked when accessed, so there is no need to continuously poll it for up-to-date values. Pulling data when the workflow is run or when F5/Refresh is pressed would be sufficient.

The issue may be more primitive than what is solved by having a Cache Data option. I am more concerned that the embedded query seems to be "checked" or "run" every time the tool is selected. I think this issue was resolved with other file formats but SQLite was overlooked.
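The requested behavior (query once, then reuse the rows until an explicit refresh) can be sketched outside Alteryx with Python's built-in `sqlite3` module. This is only an illustration of the caching idea, not how the Input tool works internally; the class `CachedQuery` and the `sales` table are hypothetical:

```python
import sqlite3
from typing import Any, List, Optional, Tuple

Row = Tuple[Any, ...]


class CachedQuery:
    """Run a query once and reuse the rows until refresh() is called."""

    def __init__(self, connection: sqlite3.Connection, sql: str) -> None:
        self.connection = connection
        self.sql = sql
        self.executions = 0               # how often the database was hit
        self._rows: Optional[List[Row]] = None

    def rows(self) -> List[Row]:
        if self._rows is None:            # first access: query the database
            self._rows = self.connection.execute(self.sql).fetchall()
            self.executions += 1
        return self._rows                 # later accesses: cached copy

    def refresh(self) -> List[Row]:
        self._rows = None                 # explicit F5-style invalidation
        return self.rows()


# Hypothetical in-memory database standing in for the SQLite file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("north", 10), ("south", 20)])

query = CachedQuery(
    conn, "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
)
query.rows()
query.rows()             # served from the cache, no second execution
print(query.executions)  # 1
query.refresh()
print(query.executions)  # 2
```

Selecting the tool would correspond to `rows()`, while running the workflow or pressing F5 would correspond to `refresh()`.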

- Category Input Output

- Data Connectors

One of my favorite features of Alteryx is the in-line browse in the results window, as well as the descriptive log, highlighting record counts into and out of tools. As I develop bigger and longer-running workflows, I would love to be able to save off these results to provide my QA analyst with a "cached" version of a run without them having to run it themselves. Providing them not only with a well-documented workflow, but with a complete data flow would be tremendously helpful in getting work checked. Our current process is to pass workflows off, and encourage the QA analyst to run them with the "Disable all Tools that write Output" option checked. While this is not an issue for smaller workflows, it is inefficient for larger ones, and also can cause some difficulties with access logistics like missing or inconsistent connection aliases.

I have seen some requests asking for saving of browse results (https://community.alteryx.com/t5/Alteryx-Product-Ideas/Save-Browse-Results-Until-Next-Run/idi-p/1827), but these primarily seem to be geared at data caching for further workflow development. My aim is to save the browse and results statuses of a completed workflow for the purpose of QA.

Note: I posed this as a question in the Data Preparation and Blending forum, but didn't receive an answer so thought I would propose it as an idea.

- Category Input Output

- Data Connectors

It would be great if deselecting fields in a Select tool updated the output window (before the next run) as a "review", to make sure you are removing what you expect and/or to see other items left behind that should be removed. This would also be useful for seeing field names update as you organize and rename.

Often I join tables without pre-selecting the exact fields I want to pass, and so I clean up at the end of the join. I know this is not the best way, but a lot of times I need something downstream and have to basically walk through the whole process to move the data along.

- Category Input Output

- Category Join

- Data Connectors

- Desktop Experience

Submitting this as a suggested future enhancement, as recommended by HenrietteH at Alteryx.

I have Oracle 11 and Oracle 12 clients on my machine. With Toad, I can toggle between either client's current home, but it appears that is not a feature in Alteryx. So now I am unable to execute ETL jobs off my machine or server because Alteryx gets confused. Currently, the only remedy I can think of is uninstalling the 11 client, which can cause dependency issues if I need it in the future.

- Category Input Output

- Data Connectors

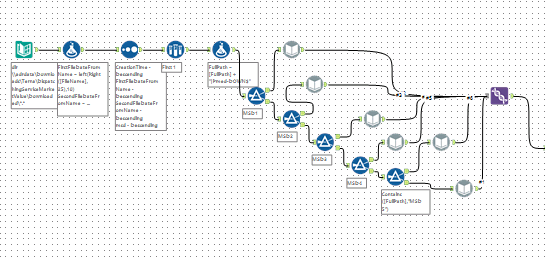

This may be too much of an edge case...

I would like to be able to feed a Dynamic Input component with an input file and a format template file, so as to make the input component completely dynamic. This is because I have Excel spreadsheets that I want to download, read and process hourly throughout the day. Every 4 hours another 4 columns are added to the spreadsheet, thereby changing the format of the spreadsheet. This then causes the Dynamic Input component to error because the input file does not match the static format template. I would be happy to store the 6 static templates, but feed these into the Dynamic Input with the matching input file, thus making the component entirely dynamic. Does this make sense???

BTW, my workaround was to define six dynamic inputs, filter on the file type, then union the results.
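The idea of pairing each incoming file with the stored template that fits it can be sketched in Python as a lookup on the header row. This is a hedged illustration of the request, not an existing Dynamic Input feature; the template names and column names below are made up:

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical templates: name -> expected column headers.
TEMPLATES: Dict[str, List[str]] = {
    "four_hours": ["id", "h1", "h2", "h3", "h4"],
    "eight_hours": ["id", "h1", "h2", "h3", "h4", "h5", "h6", "h7", "h8"],
}


def pick_template(header: Sequence[str]) -> Optional[str]:
    """Return the name of the template whose columns equal the file header."""
    for name, columns in TEMPLATES.items():
        if list(header) == columns:
            return name
    return None  # no stored template fits this layout


print(pick_template(["id", "h1", "h2", "h3", "h4"]))  # four_hours
print(pick_template(["id", "unexpected"]))            # None
```

With six stored layouts, the dictionary would simply hold six entries, and a `None` result would flag a spreadsheet whose format is not yet covered.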

- API SDK

- Category Developer

- Category Input Output

- Data Connectors

Hi All,

I had posted in the Tool Master | Unique thread about how when I use this tool it does not preserve my sort.

This seems odd to me. I understand that it's "a blocking tool and that records are then sorted prior to executing the tool process" as @TaraM points out. However, in a program where data is explicitly manipulated to a condition, there is an expectation on my side (maybe mistakenly?) that if I sort something that it would not be changed unless stated or indicated in some fashion.

For my use case I was sorting to eliminate the duplicates, then joining that data to eliminate other duplicates and outputting the result to my users; in the future state it will be fed automatically into a system. For each stage it matters that the sort order is kept intact.

I know it's a simple complaint / fix, but it's unexpected behavior on my end, and fixing it could mean less confusion and fewer tools on the board, which is always a good thing in my book.
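For reference, the behavior expected here (keep the first record per key and leave the incoming order untouched) looks like this as a small Python sketch. It illustrates the expectation only and says nothing about how the Unique tool is implemented; the function name and sample rows are hypothetical:

```python
from typing import Callable, Hashable, Iterable, List, TypeVar

T = TypeVar("T")


def unique_keep_order(records: Iterable[T], key: Callable[[T], Hashable]) -> List[T]:
    """Keep the first record for each key without reordering the input."""
    seen = set()
    kept = []
    for record in records:
        k = key(record)
        if k not in seen:
            seen.add(k)
            kept.append(record)
    return kept


# Hypothetical rows, already sorted by date descending.
rows = [("B", "2024-03-01"), ("A", "2024-02-01"), ("B", "2024-01-15"), ("C", "2024-01-01")]
print(unique_keep_order(rows, key=lambda r: r[0]))
# [('B', '2024-03-01'), ('A', '2024-02-01'), ('C', '2024-01-01')]
```

Because the records are visited in their incoming order, the most recent row per key survives and the date-descending sort is still intact afterwards.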

- Category Input Output

- Data Connectors

Wonder if it would be possible to have a set of SQL tools in Alteryx which to the user would look like you are dragging in a tool to manipulate the data (such as filtering data) but in the background it is updating the SQL (such as adding a WHERE clause) meaning the module runs faster. This would be easier than having to manually update the SQL code and would run quicker than bringing all data through and filtering afterwards.

I know there is currently the Visual Query Builder, but this is quite fiddly to use so would be good to have something more user-friendly.
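The mechanism described above, where each visual step adds to the SQL instead of filtering data after it has been pulled, can be sketched as a small query builder. This is a minimal Python illustration under stated assumptions: the `QueryBuilder` class is hypothetical, table and column names are assumed to be trusted, and only filter steps are covered:

```python
from typing import Any, List, Tuple


class QueryBuilder:
    """Collect filter steps and fold them into one parameterized SELECT."""

    def __init__(self, table: str) -> None:
        self.table = table                       # assumed to be a trusted name
        self.conditions: List[str] = []
        self.params: List[Any] = []

    def filter(self, column: str, operator: str, value: Any) -> "QueryBuilder":
        if operator not in {"=", "<>", "<", "<=", ">", ">="}:
            raise ValueError(f"unsupported operator: {operator}")
        self.conditions.append(f"{column} {operator} ?")
        self.params.append(value)                # value is bound, not inlined
        return self

    def build(self) -> Tuple[str, List[Any]]:
        sql = f"SELECT * FROM {self.table}"
        if self.conditions:
            sql += " WHERE " + " AND ".join(self.conditions)
        return sql, self.params


sql, params = (
    QueryBuilder("orders").filter("region", "=", "EMEA").filter("amount", ">", 100).build()
)
print(sql)     # SELECT * FROM orders WHERE region = ? AND amount > ?
print(params)  # ['EMEA', 100]
```

Each dragged-in filter corresponds to one `filter()` call, so the database only returns the rows that pass every condition.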

- Category In Database

- Data Connectors

I would like to see the pencil (that means writing to a record) go away when the Apply/Check button is clicked.

- Category Input Output

- Data Connectors

Add input of ArcGrid file format.

- Category Input Output

- Data Connectors

Slightly off the track, but definitely needed...

I'd like to propose a novel way of browsing.

With this new feature you may no longer require the traditional Browse Tool, to the extent that it may be decommissioned later. Here's how new Browse would work.

So far Browse Tool is helpful for mid-stream data sanity check...

But a complex workflow will need so many Browse Tools, thereby wasting a lot of canvas space and unnecessarily complicating / slowing the workflow further.

Expected Browse:

Clicking on any tool should automatically populate its results in the Results window without the need of Browse Tool.

1) Tools with a single output: Clicking on the tool or its output plug should reveal its data (ex: Summarize Tool)

2) Tools with more than one output: Clicking on each output plug should reveal its data (ex: Join)

BONUS: Clicking on the input plug of a tool should reveal its input data

- Category Input Output

- Data Connectors

Imagine we have the following output fields: Name, RegNo, Mark1, Mark2, Total.

Here Total can be computed and brought in as a derived field using the Formula tool.

However, Name and RegNo will be the same in the output too.

- Instead of manually mapping Output_Name to Input_Name, a smart mapping feature could be introduced that maps automatically based on the column names.

- Once smart mapping is done, the developer can review and make changes if needed.

- This will reduce the manual intervention of selecting from existing fields.

- Will be helpful when we process 100s of Input data into Output report.
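The name-based mapping proposed in the list above could work roughly as follows. This is a minimal Python sketch, assuming that names should match case-insensitively and ignoring spaces and underscores; the functions `normalize` and `smart_map` are hypothetical, not an existing Alteryx feature:

```python
from typing import Dict, List, Optional


def normalize(name: str) -> str:
    """Compare names case-insensitively, ignoring spaces and underscores."""
    return name.replace("_", "").replace(" ", "").lower()


def smart_map(input_fields: List[str], output_fields: List[str]) -> Dict[str, Optional[str]]:
    """Map each output field to the input field with the same normalized name."""
    by_name = {normalize(field): field for field in input_fields}
    return {field: by_name.get(normalize(field)) for field in output_fields}


mapping = smart_map(
    input_fields=["name", "Reg_No", "Mark1", "Mark2"],
    output_fields=["Name", "RegNo", "Mark1", "Mark2", "Total"],
)
print(mapping)
# {'Name': 'name', 'RegNo': 'Reg_No', 'Mark1': 'Mark1', 'Mark2': 'Mark2', 'Total': None}
```

Fields left as `None`, such as the derived Total, are the ones the developer would still review and fill in by hand.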

Thanks,

Krishna

- Category Input Output

- Data Connectors

I would love to have the ability to easily populate an entire schema of tables into my work space without having to perform repetitive connections to individual tables.

Ideally, this would include the ability to drag a schema onto the workflow and have all of the connection tools auto-populate.

I often have a need to investigate data in many tables to verify its presence / validate the table contents. This would be a very helpful feature for completing this easily and completely.
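Outside of Designer, the same "check every table in one go" task can be sketched by reading the table list from the database catalog. The example below uses Python's built-in `sqlite3` module purely as a stand-in, since the catalog query differs per database; the function name and sample tables are hypothetical:

```python
import sqlite3
from typing import Dict


def table_row_counts(connection: sqlite3.Connection) -> Dict[str, int]:
    """Return the row count of every user table in the database."""
    names = [row[0] for row in connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' "
        "AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    counts = {}
    for name in names:
        # Table names come from the catalog itself; quote them defensively.
        quoted = '"' + name.replace('"', '""') + '"'
        counts[name] = connection.execute(f"SELECT COUNT(*) FROM {quoted}").fetchone()[0]
    return counts


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER)")
conn.execute("CREATE TABLE orders (id INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?)", [(1,), (2,)])
print(table_row_counts(conn))  # {'customers': 0, 'orders': 2}
```

An empty table shows up with a count of 0, which covers the "verify its presence" check without opening a connection per table by hand.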

Thank you!

- Category In Database

- Data Connectors

Cisco, which has been building enterprise networks, and is literally close to the Internet of Things, is creating some data products, perhaps a platform, perhaps a suite, to handle big data. Part of their offering is DNA Data Virtualization (http://www.cisco.com/c/en/us/solutions/enterprise-networks/dna-virtualization/index.html). Compare Cisco at 75,000 employees to SAS at 15,000.

- Category Connectors

- Data Connectors

In the same way In-DB generates SQL, might it be possible to have an In-PowerBI and generate DAX?

- Category In Database

- Data Connectors
