Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the input dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and, at least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
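The second suggestion (an equal number of images per label) is essentially class-balanced splitting. Below is a minimal Python sketch, assuming the training data is a list of (image path, label) pairs: it undersamples every label to the size of the rarest label, then splits each label with the same validation fraction. `balanced_split` and its parameters are hypothetical and do not describe the Image Recognition tool's internals.

```python
import random
from collections import defaultdict


def balanced_split(samples, val_fraction=0.2, seed=0):
    """Split (item, label) pairs so every label contributes the same number of items.

    Each label is undersampled to the size of the rarest label, then split
    into training and validation sets using the same fraction per label.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    per_label = min(len(items) for items in by_label.values())
    n_val = int(per_label * val_fraction)
    rng = random.Random(seed)  # fixed seed makes the split reproducible

    train, val = [], []
    for label, items in sorted(by_label.items()):
        chosen = rng.sample(items, per_label)  # undersample without replacement
        val.extend((item, label) for item in chosen[:n_val])
        train.extend((item, label) for item in chosen[n_val:])
    return train, val
```

Undersampling discards images from the larger labels; oversampling or class weights are common alternatives when data is scarce.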

Question: do you plan to let users choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


In the current Output Data tool, when you choose a bulk loader option (for example, for Teradata), the tool automatically makes the first column the primary index. That is absolutely the wrong default, especially on Teradata, where rows are distributed across AMPs by hashing the primary index: if the first column has few distinct values, the data piles up on a few AMPs. My Teradata management team tells me that the resulting table, whether in temp space or not, becomes very lopsided and is not distributed evenly across the AMPs.

They recommend specifying NO PRIMARY INDEX instead, but that is not an option in the Output tool.

The Output tool does not allow any database-specific tweaks that might actually make loading more efficient.

Additionally, when using the bulk loader, if the POST SQL uses the table created by the bulk load, I get an error message saying the data load is not yet complete.

It would be very useful if the POST SQL ran only after the bulk data is actually loaded and committed, not while it is still cached by Teradata (or any other database engine) waiting to be committed.

Furthermore, if I want the POST SQL (or some similar mechanism) to return data, a status, or other output, I cannot do so in the current Output tool.

It would be very helpful if there was a way to allow that.
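For reference, this is roughly the DDL difference the requested option would control. Below is a minimal Python sketch that assembles a Teradata CREATE TABLE statement either with an explicit PRIMARY INDEX or with NO PRIMARY INDEX. `build_teradata_ddl` is a hypothetical helper, not something the Output tool exposes, and the statement the bulk loader actually generates may differ.

```python
def build_teradata_ddl(table, columns, primary_index=None):
    """Build a Teradata CREATE TABLE statement for a bulk-load target.

    columns: list of (name, sql_type) pairs.
    primary_index: list of column names, or None to request NO PRIMARY INDEX.
    """
    column_defs = ",\n    ".join(f"{name} {sql_type}" for name, sql_type in columns)
    if primary_index:
        # Rows are hashed to AMPs on these columns; low cardinality causes skew.
        index_clause = f"PRIMARY INDEX ({', '.join(primary_index)})"
    else:
        # NoPI tables are MULTISET tables whose rows are not hashed on a key column.
        index_clause = "NO PRIMARY INDEX"
    return f"CREATE MULTISET TABLE {table} (\n    {column_defs}\n) {index_clause};"
```

For example, `build_teradata_ddl("stage.sales", [("cust_id", "INTEGER"), ("region", "VARCHAR(20)")])` produces a statement ending in `) NO PRIMARY INDEX;`, which is the option the management team recommended.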

- Category Input Output

- Data Connectors

It would be great if the In-DB Summarize tool supported Snowflake window functions.
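The gap between what Summarize does today and what a window function adds can be shown in a few lines. In SQL, `GROUP BY region` collapses rows, whereas `SUM(amount) OVER (PARTITION BY region)` keeps every row and attaches its group's total. The Python sketch below mimics both on made-up data; it illustrates the semantics only and says nothing about how the In-DB tools would generate Snowflake SQL.

```python
from collections import defaultdict


def group_by_sum(rows):
    """Like GROUP BY region: one output row per region."""
    totals = defaultdict(int)
    for region, amount in rows:
        totals[region] += amount
    return dict(totals)


def window_sum(rows):
    """Like SUM(amount) OVER (PARTITION BY region): every input row is kept."""
    totals = group_by_sum(rows)
    return [(region, amount, totals[region]) for region, amount in rows]


rows = [("East", 10), ("East", 5), ("West", 7)]
print(group_by_sum(rows))  # {'East': 15, 'West': 7}
print(window_sum(rows))    # [('East', 10, 15), ('East', 5, 15), ('West', 7, 7)]
```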

- Category In Database

- Data Connectors

The current Azure Data Lake tool appears unable to connect when fine-grained access is configured in ADLS using access control lists (ACLs).

It does work when role-based access control (RBAC) is used, but ACLs provide more fine-grained control over the environment.

For example, with any of the current authentication types (End-User Basic, End-User (Advanced), or Service-to-Service), the connector works if the user has RBAC access to ADLS.

In that scenario, though, the user is granted access to an entire container, which isn't ideal:

- azureStorageAccount/Container/Directory

- Example: azureStorageAccount/Bronze/selfService1 or azureStorageAccount/Bronze/selfService2

- With RBAC, the user is granted access at the container level and everything below it, so you cannot set different permissions on selfService1 and selfService2, which may have different use cases.

Ideally, access would be granted at the directory level, which gives the best control and enables self-service data analytics teams to use Alteryx.

- In this access pattern, the user is granted access only at the directory level (e.g. selfService1 or selfService2 above).

The existing tool appears to be limited: if you have access only at the directory level and not at the container level, the tool cannot complete the authentication request. Supporting this would require the tool's file system name (aka container) drop-down to accept an entry that includes both the container and the directory.
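The scoping problem can be illustrated with a simplified model of grants as path prefixes. This Python sketch is an assumption-laden illustration, not how ADLS or the Alteryx connector evaluates permissions: it ignores, for instance, that ADLS Gen2 ACL checks also require execute permission on each parent directory. It shows why a connector that probes the container root fails for a directory-scoped grant, while probing container plus directory (as proposed above) succeeds.

```python
from pathlib import PurePosixPath


def grant_covers(grant_scope, requested_path):
    """True if requested_path is the granted scope itself or lies beneath it."""
    grant = PurePosixPath(grant_scope)
    path = PurePosixPath(requested_path)
    return path == grant or grant in path.parents


rbac_grant = "Bronze"               # container-level role assignment
acl_grant = "Bronze/selfService1"   # directory-level ACL

print(grant_covers(rbac_grant, "Bronze"))                       # True
print(grant_covers(acl_grant, "Bronze"))                        # False: container probe fails
print(grant_covers(acl_grant, "Bronze/selfService1"))           # True: container + directory
print(grant_covers(acl_grant, "Bronze/selfService2/data.csv"))  # False: sibling directory
```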

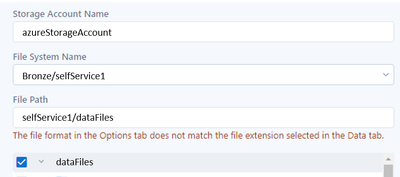

- Screenshot example A below shows how the file system name would need to be input

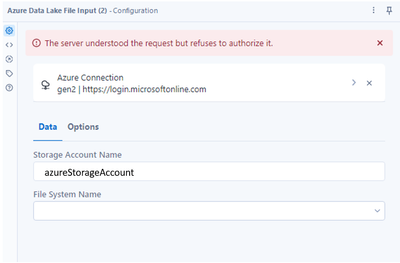

- Screenshot example B below shows what happens if you have ACL access to ADLS at the directory level and not at the container level

Access control model for Azure Data Lake Storage Gen2 | Microsoft Docs

Example A

Example B

- Category Connectors

- Data Connectors

The tool currently does not write a complete XLSX file per the spec. Excel files created by Alteryx cannot be searched by content in Windows File Explorer. The sharedStrings.xml part of the XLSX package is empty or omitted; that part holds every unique string in the file and thereby makes content search fast.

This should be achievable for a non-Microsoft vendor, since other third-party tools (e.g. Tableau Prep, Google Sheets) save XLSX files in this format.
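An XLSX file is a ZIP package, so whether a given output has a populated shared-strings part is easy to check. A minimal Python sketch, assuming the part sits at its conventional path `xl/sharedStrings.xml` and that each unique string is one `<si>` element in the SpreadsheetML main namespace; `shared_string_count` is a hypothetical helper name.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

MAIN_NS = "{http://schemas.openxmlformats.org/spreadsheetml/2006/main}"


def shared_string_count(xlsx):
    """Return the number of <si> entries in xl/sharedStrings.xml, or None if the part is missing.

    xlsx may be a file path or a binary file-like object.
    """
    with zipfile.ZipFile(xlsx) as zf:
        if "xl/sharedStrings.xml" not in zf.namelist():
            return None
        root = ET.fromstring(zf.read("xl/sharedStrings.xml"))
    return len(root.findall(f"{MAIN_NS}si"))
```

Running it on an Alteryx-written file and on the same data saved from another tool shows whether the part is missing (`None`) or empty (`0`).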

- Category Input Output

- Data Connectors

Love the new SharePoint Files Input tool. It's a significant improvement over previous options!

A suggestion that could improve the impact of the tool:

File Download Only

Current state: The tool performs two actions simultaneously: 1) it downloads the file (csv/xlsx/yxdb) AND 2) it reads the file into the data stream. When processing the supported file types, this is very convenient! However, the beauty of Alteryx is being able to read raw data from almost any file type (pdf/zip/doc/xml/txt/json/etc.) and process it myself. Being limited to officially supported file types greatly diminishes the impact of the tool.

Suggestion: Allow the user to 1) specify a filepath, 2) download the selected file(s) to that destination, and 3) optionally use a dynamic input tool to process the file.

- Category Connectors

- Data Connectors

Allow the Macro Input tool to answer questions for other interface tools. For example, if I want to open and run a container, I cannot automate this; it can only be done by a person using an interface tool. Interface tools cannot be updated dynamically, which hinders full automation with Alteryx Server. Yes, there are workarounds, but they lead to a big ol' mess, and deliberately creating errors means true errors get missed. This option would remove many automation issues.

- Category Input Output

- Data Connectors

I often work with files from vendor software packages whose input/output files are actually delimited text files but use a vendor-defined file extension (branding!) rather than .csv or .txt. I would like to be able to whitelist these file extensions as data files so that they can be dragged into workflows. I can import them by choosing "All Types" and then manually defining the import settings, but it would be nice to define this once per file type rather than once per file.
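The per-file-type idea amounts to a registry that maps an extension to import settings. A minimal Python sketch, assuming the only setting needed is the delimiter; the registry name, the `.vnd` extension, and `read_delimited` are all made up for illustration and are not Alteryx settings.

```python
import csv
from pathlib import Path

# Hypothetical registry: file extension -> delimiter used inside the file.
DELIMITED_EXTENSIONS = {
    ".csv": ",",
    ".txt": "\t",
    ".vnd": "|",  # made-up vendor-branded extension
}


def read_delimited(path):
    """Parse a file using the delimiter registered for its extension."""
    ext = Path(path).suffix.lower()
    if ext not in DELIMITED_EXTENSIONS:
        raise ValueError(f"No import settings registered for {ext!r}")
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.reader(handle, delimiter=DELIMITED_EXTENSIONS[ext]))
```

Registering an extension once means every file with that extension is parsed the same way, instead of configuring each Input tool separately.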

- Category Input Output

- Data Connectors

To insert and update rows in our Oracle database, we mostly use the Output Data tool (especially for updates, since updating isn't available for Oracle in the In-DB tools). The Output Data tool simply offers more options than the In-DB toolset (screenshot 1).

However, the one thing we would really like to see in the Output Data tool is the option to connect to a database via a connection file (as in the Connect In-DB or Data Stream In tools; see screenshot 2). We can set an encrypted connection in the Output Data tool (and upload it to our Gallery), but doing this for 80 workflows with 10 output tools each is quite an annoying task. We're not the administrators of our Gallery, and we want to be able to run the workflows locally as well. In my opinion, it would therefore be great to support connection files in the Output Data tool: they let you manage your database connections centrally without depending on a server administrator.

Screenshot 1

Screenshot 2

- Category Input Output

- Data Connectors

I am a citizen developer utilising Microsoft Power Apps and would like to see a Dataverse connector.

- Category Connectors

- Data Connectors

I have a workflow that outputs to several files at once. If one of those files is open, an error is returned (of course).

It would be really great if Alteryx could still save any files that fail into a different folder, or under a different name such as V2.
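The requested fallback behavior is simple to state in code. A minimal Python sketch, assuming that writing to a file that is open elsewhere surfaces as `PermissionError` (which is typical on Windows when Excel holds the file) and that the fallback name is `<name>_V2<ext>` in the same folder; the function names are hypothetical.

```python
from pathlib import Path


def _write_bytes(path, data):
    path.write_bytes(data)


def write_with_fallback(path, data, writer=_write_bytes):
    """Write data to path; if that raises PermissionError (e.g. the file is open),
    write to "<name>_V2<ext>" in the same folder instead.

    Returns the Path that was actually written.
    """
    target = Path(path)
    try:
        writer(target, data)
        return target
    except PermissionError:
        fallback = target.with_name(f"{target.stem}_V2{target.suffix}")
        writer(fallback, data)
        return fallback
```

The `writer` parameter exists so the locking behavior can be simulated; a real implementation would also need a policy for when the `_V2` file is itself locked.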

- Category Input Output

- Data Connectors

- New Request

Hello Alteryx Community! Recently, I was working on a project to build a database of file information for a disk, such as size, last access date, and full path. I noticed that the Alteryx Directory tool provides almost everything I need, but it doesn't include the "size on disk" property. When a file is compressed, it becomes hard to know its actual size on disk. My idea is to add one more column to the Directory tool: a "size on disk" field. Thanks for the great work you did in creating Alteryx; it helps a lot in my job.
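The difference between logical size and allocated size can be seen with `os.stat`. A minimal Python sketch, assuming a Linux or macOS system, where `st_blocks` counts 512-byte units. On Windows, Python's `os.stat` does not report `st_blocks`, so a Windows implementation would need something like the Win32 `GetCompressedFileSizeW` function for compressed files; that part is not shown. `file_sizes` is a hypothetical helper.

```python
import os


def file_sizes(path):
    """Return (logical_size, size_on_disk) in bytes.

    size_on_disk is st_blocks * 512 where st_blocks exists (Linux, macOS);
    on platforms without st_blocks (e.g. Windows) it is None.
    """
    st = os.stat(path)
    blocks = getattr(st, "st_blocks", None)
    on_disk = blocks * 512 if blocks is not None else None
    return st.st_size, on_disk
```

For a sparse or compressed file, `size_on_disk` can be smaller than `logical_size`; for small files it is usually larger, because allocation happens in whole blocks.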

- Category Input Output

- Data Connectors

The current encryption method (MD5) that EncryptPassword uses for database connections does not satisfy FIPS 140-2, the cryptographic module standard required by the US federal government. FIPS 140-2 requires that any hardware or software cryptographic module implement algorithms from an approved list.

More information on FIPS 140-2: https://csrc.nist.gov/publications/detail/fips/140/2/final

- Category Input Output

- Data Connectors

The Find and Replace feature is great. Unfortunately, I learned the hard way that workflow events are outside its reach. Please extend it to cover the entire workflow, so it can act on everything that a find-and-replace in Notepad could reach by opening the workflow XML. The following demonstrates the omission:

On the left side, I search for the string “v022”.

The results below show zero matches.

In the open event box near the center, “v022” appears in the command box.

The occurrence in the event command box should appear as a match, but does not.
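A whole-document search like the one requested is straightforward over the XML. A minimal Python sketch that reports every element whose text or attribute values contain the search string, with the element path; the element names in the example (`Properties/Events/Event/.../Command`) are an assumed, simplified layout, not a confirmed .yxmd schema, and `find_in_workflow_xml` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def find_in_workflow_xml(xml_text, needle):
    """Return (element_path, location) pairs for every element text or
    attribute value containing needle. Tail text is ignored."""
    root = ET.fromstring(xml_text)
    hits = []

    def walk(elem, path):
        here = f"{path}/{elem.tag}"
        if elem.text and needle in elem.text:
            hits.append((here, "text"))
        for name, value in elem.attrib.items():
            if needle in value:
                hits.append((here, f"@{name}"))
        for child in elem:
            walk(child, here)

    walk(root, "")
    return hits


doc = (
    '<AlteryxDocument><Nodes><Node ToolID="1"/></Nodes>'
    "<Properties><Events><Event><Properties><Command>run_v022.bat</Command>"
    "</Properties></Event></Events></Properties></AlteryxDocument>"
)
print(find_in_workflow_xml(doc, "v022"))
# [('/AlteryxDocument/Properties/Events/Event/Properties/Command', 'text')]
```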

- Category Connectors

- Data Connectors

- Engine

- XML

Hi All,

Issue:

When reading <List of Sheet Names>, Alteryx assigns the data type and size based on the sheet names it finds.

Example:

| File | Sheet Name | Data Type | Size |
|---|---|---|---|
| Book1 | MTD 매출 조회 | V_WString | 9 |
| Book2 | MTD 매출 (KT&G) | V_WString | 13 |

This becomes a problem when reading multiple files with a wildcard (Input file Book*.xlsx).

The second file is then skipped with the warning "has a different schema than the 1st file in the set and will be skipped".

Solution:

Very simple: always use the same data type and size, as the Formula tool does: V_WString with size 1073741823.
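Why content-dependent sizing breaks the wildcard union, and why a fixed size fixes it, can be shown with the example above. A minimal Python sketch, assuming the current size equals the length of the longest sheet name in each file (which matches the sizes 9 and 13 in the table); the function names are hypothetical.

```python
FIXED_SIZE = 1073741823  # the size proposed above, as used by the Formula tool


def inferred_schema(sheet_names):
    """Current behavior as described: size follows the longest sheet name."""
    return ("V_WString", max(len(name) for name in sheet_names))


def fixed_schema(sheet_names):
    """Proposed behavior: same type and size regardless of content."""
    return ("V_WString", FIXED_SIZE)


book1 = ["MTD 매출 조회"]
book2 = ["MTD 매출 (KT&G)"]

print(inferred_schema(book1), inferred_schema(book2))  # ('V_WString', 9) ('V_WString', 13)
print(inferred_schema(book1) == inferred_schema(book2))  # False: Book2 is skipped
print(fixed_schema(book1) == fixed_schema(book2))        # True: schemas always match
```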

- Category Input Output

- Data Connectors

- Enhancement

Hello,

I do not want to go to Quick Access and then to the Downloads folder every time. We mostly search the Community and download workflows along with their accompanying files; to reopen those files, which land in the Downloads folder, I have to navigate there.

To speed this up, please add a Downloads folder quick link to the left bar.

thx

- Category Input Output

- Data Connectors

I have 10 .txt files that I dragged and dropped onto the canvas. I clicked on the first one and set it to read as a delimited text file. I clicked on the second file, set it up, and then the third, and on and on. You can see where this is going. Generally speaking, I can copy some or all of a tool's configuration by grabbing the XML and pasting it into a target tool. This is also an imperfect solution: it can take plenty of editing (see the case above), it doesn't carry over the annotation, and it is not a solution for beginners.

What if I clicked on a given tool, clicked an Import Settings button, and it launched a dialogue box? This dialogue box would ask me to select a tool from the canvas. Once selected, it would let me pick some or all of the configuration settings (including the annotation) and apply them to the current tool. If I clicked on a Select tool, the dialogue box would only show other Select tools, and so on.

Again, I know about copying and pasting XML and about editing it; I've even used Sublime to help with the above case. I know about saving Select tool field configurations. I know about saving a formula. I know about copying a tool itself and pasting it elsewhere. These methods have their uses, and some apply only to specific tools or situations. This idea transcends them all.

Please craft an Import Settings button that is friendly for beginners and experts alike. While we're at it, please also allow exporting and saving settings. That way, I can use them in other workflows later on.
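The core of the proposed Import Settings action can be approximated at the XML level. A minimal Python sketch, assuming a simplified workflow layout in which each `<Node ToolID=...>` has a `<GuiSettings Plugin=...>` child and a `<Properties>` child holding `<Configuration>` and `<Annotation>`; it copies the chosen parts from a source tool only to targets of the same plugin type, mirroring the "only show other Select tools" behavior. `import_settings` is hypothetical, not an Alteryx feature.

```python
import copy
import xml.etree.ElementTree as ET


def import_settings(workflow_xml, source_id, target_ids, parts=("Configuration", "Annotation")):
    """Copy selected <Properties> children from one tool to other tools of the
    same plugin type. Returns the modified workflow XML as a string."""
    root = ET.fromstring(workflow_xml)
    nodes = {n.get("ToolID"): n for n in root.iter("Node")}
    source = nodes[source_id]
    plugin = source.find("GuiSettings").get("Plugin")

    for tool_id in target_ids:
        target = nodes[tool_id]
        if target.find("GuiSettings").get("Plugin") != plugin:
            continue  # skip tools of a different type
        props = target.find("Properties")
        for tag in parts:
            new = source.find(f"Properties/{tag}")
            if new is None:
                continue
            old = props.find(tag)
            if old is not None:
                props.remove(old)
            props.append(copy.deepcopy(new))  # independent copy per target
    return ET.tostring(root, encoding="unicode")
```

Exporting settings, the second half of the request, would be the same lookup written to its own file instead of into another node.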

- Category Input Output

- Data Connectors

Currently, when sharing a workflow that uses a Python-based connector such as Google BigQuery, the tool's credentials have to be re-entered if the workflow is opened on a different workstation from the one where it was created, or by a different user on the same workstation.

There is no need to re-authenticate when publishing a workflow to run on a Server schedule or in the Gallery. This functionality should be extended to sharing the workflow between workstations with the Python registry key enabled.

- Category Connectors

- Data Connectors

The ability to Sort and Filter in the Results window is a huge time saver. Please allow the same functionality when viewing results in a new window.

- Category Input Output

- Data Connectors

The new enhancement that lets the Input tool read a named range from an Excel file is fantastic!

One trick I often use when creating a template Excel file for user input is to give the form sheet a "Code Name". Sadly, in Excel this cannot be set when creating the template from code or from Alteryx; it has to be set manually in the <Alt>+<F11> IDE: select the sheet in the project browser, then set the code name in the Properties window. The advantage: users can change the visible name of the sheet as they like, but the code name does not change. An automated pipeline that imports a fixed sheet (such as a workflow that picks up files dropped into a folder and loads new results into a database) could therefore reference the sheet's code name and would not fail when a user renames the sheet.
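Resolving a code name to the current visible sheet name is possible from the file alone, because both are stored in the XLSX package. A minimal Python sketch, assuming the standard SpreadsheetML layout: `xl/workbook.xml` lists visible sheet names with relationship ids, `xl/_rels/workbook.xml.rels` maps those ids to worksheet parts, and each worksheet part stores its code name in the `codeName` attribute of `<sheetPr>`. `sheet_name_for_code_name` is a hypothetical helper, not an Input tool option.

```python
import posixpath
import zipfile
import xml.etree.ElementTree as ET

NS = {
    "m": "http://schemas.openxmlformats.org/spreadsheetml/2006/main",
    "r": "http://schemas.openxmlformats.org/officeDocument/2006/relationships",
    "rel": "http://schemas.openxmlformats.org/package/2006/relationships",
}


def sheet_name_for_code_name(xlsx, code_name):
    """Return the visible name of the sheet whose codeName matches, or None."""
    with zipfile.ZipFile(xlsx) as zf:
        workbook = ET.fromstring(zf.read("xl/workbook.xml"))
        rels = ET.fromstring(zf.read("xl/_rels/workbook.xml.rels"))
        targets = {r.get("Id"): r.get("Target") for r in rels.findall("rel:Relationship", NS)}
        for sheet in workbook.findall("m:sheets/m:sheet", NS):
            target = targets[sheet.get(f"{{{NS['r']}}}id")]
            # Targets are usually relative to xl/, but may be absolute (/xl/...).
            if target.startswith("/"):
                part = target.lstrip("/")
            else:
                part = posixpath.normpath(posixpath.join("xl", target))
            sheet_pr = ET.fromstring(zf.read(part)).find("m:sheetPr", NS)
            if sheet_pr is not None and sheet_pr.get("codeName") == code_name:
                return sheet.get("name")
    return None
```

A workflow could resolve the visible name this way and then pass it to the existing sheet-name input option.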

- Category Input Output

- Data Connectors

I would like to propose an improvement to the Input and Output tools regarding their compatibility with Data Connections configured in the Alteryx settings.

Currently, it is possible to create a Data Connection of type SQL Bulk Loader (SSVB) and use it in an Input tool. Configuration works (choosing the table, the query, etc.), and when you run the workflow it returns the data correctly.

However, when we click on the Input tool again, an error message appears and we cannot retrieve the query it contains, because the file format is unknown.

After investigating with Support, we found a compatibility problem: SSVB is supported in the Output tool but not in the Input tool.

My proposal is therefore that, when an Input or Output tool is configured, Alteryx validate the chosen Data Connection against the type of tool being used.
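The proposed check is essentially a lookup in a support matrix at configuration time. A minimal Python sketch; the matrix is hypothetical except for the SSVB entries, which reflect the behavior described above (supported for output, not for input), and `is_supported` is an illustrative name rather than an Alteryx API.

```python
# Hypothetical support matrix; only the SSVB entries reflect the reported behavior.
SUPPORTED_CONNECTIONS = {
    "input": {"odbc", "oledb"},
    "output": {"odbc", "oledb", "ssvb"},
}


def is_supported(tool_kind, connection_type):
    """Return True if a connection type may be used with a tool kind."""
    return connection_type.lower() in SUPPORTED_CONNECTIONS.get(tool_kind, set())


# The configuration dialog would refuse the selection instead of saving it.
if not is_supported("input", "SSVB"):
    print("SSVB connections cannot be used in an Input tool")
```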

Thanks for your feedback.

Regards,

Psyrio

- Category Input Output

- Data Connectors

- Enhancement
