Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each label),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to update a DB table with either an update or the deletion of rows. I can't delete all of the data. My workaround will be to insert the keys that I want to delete into a table and try to use an Input/Output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool is best.
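The workaround described above can be sketched outside Alteryx. This is only an illustration using Python's built-in `sqlite3` module, not an Alteryx feature: the table names `Source_Data` and `My_Temp_Table` and the `FLAG` column come from the post, while the sample rows and the helper names `delete_flagged_rows` and `build_demo_db` are assumptions made for the example.

```python
import sqlite3


def delete_flagged_rows(conn: sqlite3.Connection) -> int:
    """Delete rows from Source_Data whose ID is flagged 'Y' in My_Temp_Table.

    Returns the number of rows deleted.
    """
    cur = conn.execute(
        "DELETE FROM Source_Data "
        "WHERE ID IN (SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
    )
    conn.commit()
    return cur.rowcount


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with made-up sample rows (assumption)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Source_Data (ID INTEGER PRIMARY KEY, Value TEXT)")
    conn.execute("CREATE TABLE My_Temp_Table (ID INTEGER, FLAG TEXT)")
    conn.executemany(
        "INSERT INTO Source_Data VALUES (?, ?)", [(1, "a"), (2, "b"), (3, "c")]
    )
    # Only the keys flagged 'Y' are staged for deletion.
    conn.executemany(
        "INSERT INTO My_Temp_Table VALUES (?, ?)", [(1, "Y"), (2, "N"), (3, "Y")]
    )
    conn.commit()
    return conn
```

Staging the keys in a separate table keeps the delete selective: rows that are not flagged stay in `Source_Data`.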

Thanks,

Mark

Hello,

SQLite is:

- free

- open source

- easy to use

- widely used

https://en.wikipedia.org/wiki/SQLite

It also works well with Alteryx input or output tool. 🙂

However, I think an In-DB SQLite connection would be great, especially for learning purposes: you don't have to install anything, so it's really easy to set up.

Best regards,

Simon

- AMP Engine

- Category In Database

- Data Connectors

- Engine

Hello,

I had a business case requiring a cost effective and quick storage solution for real time online sourced survey data from customers. A MongoDB instance would fit the need, so I quickly spun up a cluster on Mongo Atlas. Atlas was launched by MongoDB in 2016 as a database-as-a-service deployed on AWS. All instances for Atlas require TLS/SSL to connect. Currently, the Alteryx MongoDB connector does not support TLS/SSL connections and doesn't work against Atlas. So, I was left with a breakdown in my plan that would require manual intervention before ingesting data to Alteryx (not ideal).

Please consider expanding this functionality on all connectors. I am building Alteryx out in my agency as a data platform that handles sensitive customer information (name, address, email, etc.). Most tools I use to connect to secure servers today support this type of connection, and adding it should be a priority for Alteryx.
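For context, this is roughly what a TLS-enabled connection string looks like on the client side. It is a minimal sketch, assuming the standard MongoDB connection string format with the `tls=true` option; the helper name `build_tls_uri` and the host, user and database values are hypothetical, and it says nothing about how the Alteryx connector is implemented.

```python
from urllib.parse import quote, urlencode


def build_tls_uri(user: str, password: str, host: str, database: str) -> str:
    """Build a MongoDB connection string that explicitly requests TLS.

    The user name and password are percent-encoded so that characters
    such as '@' or ':' do not break the URI.
    """
    credentials = f"{quote(user, safe='')}:{quote(password, safe='')}"
    options = urlencode({"tls": "true"})
    return f"mongodb+srv://{credentials}@{host}/{database}?{options}"
```

A driver that supports TLS would accept a URI of this shape; a connector without TLS support has no equivalent setting to pass through.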

Thanks,

Mike Schock

- Category Connectors

- Data Connectors

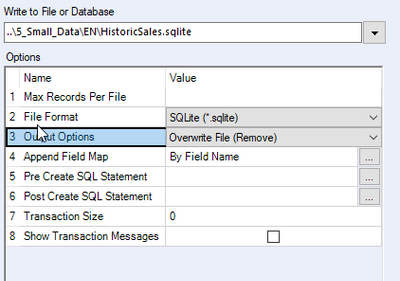

Hello,

I think the option Overwrite File (Remove) shouldn't throw an error if the file is not present.

Or, I don't know, offer 2 options: one that fails if the file is missing, the other that does not fail.

This idea is the same as https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Overwrite-Table-for-output-write-indb-should... but for files.
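The two behaviours proposed above can be sketched in a few lines. This is an illustration in plain Python, not how the Output tool works internally; the function name `remove_before_write` and the `fail_if_missing` flag are assumptions made for the example.

```python
from pathlib import Path


def remove_before_write(path: str, fail_if_missing: bool = False) -> bool:
    """Delete `path` ahead of an overwrite.

    Returns True if a file was removed and False if there was nothing to
    remove. Raises FileNotFoundError only when fail_if_missing is True.
    """
    try:
        Path(path).unlink()
    except FileNotFoundError:
        if fail_if_missing:
            raise
        return False
    return True
```

With the default `fail_if_missing=False`, a first run against an empty folder succeeds quietly, which is the behaviour requested here.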

Best regards,

Simon

- Category Input Output

- Data Connectors

- Enhancement

Hello all,

DuckDB is a new embeddable database project by the team behind MonetDB. From what I understand, it's like a SQLite database but for analytics (a columnar-vectorized query execution engine on a single file). And of course it's open source and free.

More info on their website: https://duckdb.org/

Best regards,

Simon

- Category Input Output

- Data Connectors

The Transpose In-DB tool has been in the "Laboratory" category for years now. I understand Alteryx invested some time and money to develop it, but sadly we still can't use that tool for sensitive workflows. Did you find bugs in it? Could you please fix them and make this an "official" tool?

Thanks

- Category In Database

- Data Connectors

Currently it's possible to use the Output tool to write to a sheet, a place in a sheet, or a named range in Excel, but it is not possible to write to a preformatted Excel table. It would be really good if the Output tool had an option to write to [Table1] in an Excel workbook, for example. This enhancement would be incredibly helpful for reporting purposes.

- Category Input Output

- Data Connectors

Originally posted here: https://community.alteryx.com/t5/Data-Sources/Input-Data-Tool-Can-we-control-use-of-Cursors/m-p/5871...

Hi there,

I've profiled a simple query using SQL Server Profiler (Query: Select * from northwind.dbo.orders; row limit: 107; read Uncommitted: true) and interestingly it opens up a cursor if you connect via ODBC or SQL Native; but not by OleDB - full queries and profile details are on the discussion thread above.

However, in some circumstances a cursor is not usable, e.g. https://community.alteryx.com/t5/Data-Sources/Error-SQL-Execute-Cursors-Not-supported-on-Clustered-C... because SQL Server doesn't allow cursors on columnstore indexed tables & columns.

Is there any way (even if we need to manually adjust via the XML settings) to ask Alteryx not to create the cursor and execute directly on the server as written?

Thank you

Sean

- Category Input Output

- Data Connectors

Please add official support for newer versions of Microsoft SQL Server and the related drivers.

According to the data sources article for Microsoft SQL Server (https://help.alteryx.com/current/DataSources/SQLServer.htm), and validation via a support ticket, only the following products have been tested and validated with Alteryx Designer/Server:

Microsoft SQL Server

Validated On: 2008, 2012, 2014, and 2016.

- No R versions are mentioned (2008 R2, for instance)

- SQL Server 2017, which was released in October of 2017, is notably missing from the list.

- SQL Server 2019, while fairly new (~6 months old), is also missing

This is one of the most popular data sources, and the lack of support for newer versions (especially a 2+ year old product like SQL Server 2017) is hard to fathom.

ODBC Driver for SQL Server/SQL Server Native Client

Validated on ODBC Driver: 11, 13, 13.1

Validated on SQL Server Native Client: 10, 11

- ODBC Driver 17+ is not mentioned, even though it was released in February of 2018. https://docs.microsoft.com/en-us/sql/connect/odbc/windows/release-notes-odbc-sql-server-windows?view...

- SQL Server Native Client is deprecated. It is being replaced by Microsoft OLE DB Driver for SQL Server. However, there is not a mention of Microsoft OLE DB Driver for SQL Server. The latest version of this is 18.3.0. https://docs.microsoft.com/en-us/sql/connect/oledb/release-notes-for-oledb-driver-for-sql-server?vie...

- Category Input Output

- Data Connectors

- Engine

- Runtime

Please support GZIP files in the input tool for both Designer and Server.

I get several large .gz files every day containing our streaming server logs. I need to parse and import these using Alteryx (we currently use Sawmill). Extracting each of these files would take a huge amount of space and time.

This was previously requested and marked as "now available", but what is available only addresses a small part of the request. First, that request was for both ZIP and GZIP; what is now available is only ZIP. Second, it requested both input and output; what is now available is input only. Third, while not explicitly stated in the request, it needs to function in Alteryx Server in order to be scheduled on a daily basis.
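To illustrate why extraction should not be necessary, here is a minimal sketch that reads a .gz log line by line with Python's standard `gzip` module, decompressing on the fly. The function name `iter_gzip_lines` is hypothetical; this shows the general technique, not how the Alteryx input tool is or would be implemented.

```python
import gzip
from typing import Iterator


def iter_gzip_lines(path: str, encoding: str = "utf-8") -> Iterator[str]:
    """Yield lines from a .gz file one at a time, without extracting it to disk."""
    with gzip.open(path, mode="rt", encoding=encoding) as handle:
        for line in handle:
            yield line.rstrip("\n")
```

Because the file is streamed, memory and disk use stay small even when the uncompressed log would be very large.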

- Category Input Output

- Data Connectors

Hello all,

As of today, you must set which database (e.g. Snowflake, Vertica...) you connect to in your In-DB connection alias. This is fine, but I think we should also be able to define the version (release) of the database. Databases gain a lot of new features that Alteryx could use, improving user experience, performance and security (e.g. in Hive 3.0, there is a catalog that could be used in the Visual Query Builder instead of slowly querying each schema).

I am thinking of a menu with the following choices:

- default (legacy), stating which version Alteryx assumes by default for the database

- autodetect (with a query launched every time you run the workflow, when possible). If the detected version is newer than the last supported version, show a warning message and run with the settings of the last supported version.

- manually setting a release (to avoid launching the version query every time). The choices would be every version supported by Alteryx.
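The three menu choices above could be resolved with logic along these lines. This is a sketch under stated assumptions: the function `resolve_version`, its parameters, and the tuple representation of versions are all hypothetical, and the handling of a detected version that falls between two supported versions (use the newest supported one at or below it) is my own assumption, since the idea does not cover that case.

```python
from typing import Callable, Optional, Sequence, Tuple

Version = Tuple[int, ...]


def resolve_version(
    mode: str,
    supported: Sequence[Version],
    default: Version,
    detect: Optional[Callable[[], Version]] = None,
    manual: Optional[Version] = None,
) -> Tuple[Version, Optional[str]]:
    """Return (version_to_use, warning_or_None) for the chosen menu mode."""
    if mode == "default":
        return default, None
    if mode == "manual":
        if manual not in supported:
            raise ValueError(f"{manual} is not a supported version")
        return manual, None
    if mode == "autodetect":
        if detect is None:
            raise ValueError("autodetect needs a detect() callable")
        found = detect()  # stands in for the version query sent to the database
        latest = max(supported)
        if found > latest:
            return latest, f"Detected {found} is newer than last supported {latest}"
        # Assumption: fall back to the newest supported version <= detected.
        candidates = [v for v in supported if v <= found]
        if not candidates:
            return default, f"Detected {found} is older than any supported version"
        return max(candidates), None
    raise ValueError(f"unknown mode: {mode}")
```

In "manual" mode no query is sent at all, which is the point of the third menu entry.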

Best regards,

Simon

- Category In Database

- Data Connectors

- Desktop Experience

- User Settings

Hello all,

Big picture: on Hadoop, a table can be

- internal (it's managed by Hive or Impala, and acts like a table in any other database)

- external (it's managed by Hadoop, can be shared among the different Hadoop databases such as Hive and Impala, and by default its data is not deleted when the table is dropped)

For information about dropping external tables:

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/using-hiveql/content/hive_drop_external_table_...

Alteryx only creates internal tables, while it would be nice to have the ability to create external tables that we can query with several tools (Hive, Impala, etc.).

It should be configurable

- by default for the connection

- per tool, if we want to override the default

Best regards,

Simon

- Category In Database

- Data Connectors

Hello all,

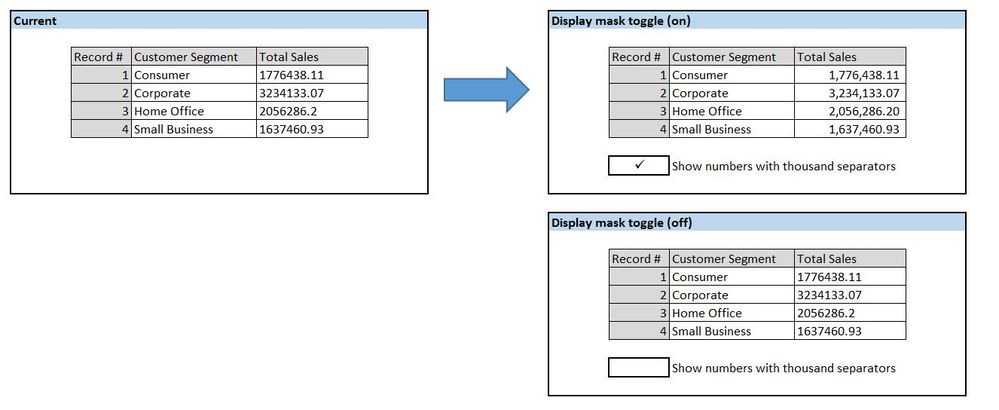

When looking at the Results window, I often find it a headache to read the numeric results because of the lack of commas. I understand that incorporating commas into the data itself could make for some weird errors; however, would it be possible to toggle an option that displays all numeric fields with proper commas and right-aligned in the Results window? I am referring to using a display mask to make numeric fields look like they have the thousands separator while retaining numeric functionality (as opposed to converting the fields to strings).
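The display-mask idea can be shown in a few lines of Python: the values stay numeric, and only their rendering gains thousands separators and right alignment. The function names `display_mask` and `render_column` are hypothetical, and this is an illustration of the concept rather than anything the Results window does today.

```python
from typing import List, Sequence, Union

Number = Union[int, float]


def display_mask(value: Number, decimals: int = 2) -> str:
    """Format a number with thousands separators; the value itself is unchanged."""
    if isinstance(value, int):
        return f"{value:,}"
    return f"{value:,.{decimals}f}"


def render_column(values: Sequence[Number], decimals: int = 2) -> List[str]:
    """Right-align the masked values to the width of the widest one."""
    masked = [display_mask(v, decimals) for v in values]
    width = max(len(text) for text in masked)
    return [text.rjust(width) for text in masked]
```

Since only strings for display are produced, sums, sorts and filters would still operate on the original numeric fields.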

What do you think?

- Category Input Output

- Data Connectors

- General

Hello,

As of today, the In-DB connection window is divided into:

- write tab

- read tab

However, writing means two different things: inserting and in-DB writing. Alteryx already has 2 different tools (Data Stream In and Write Data).

So what I propose is to divide the window into:

- read

- write

- insert

Best regards,

Simon

- Category In Database

- Category Input Output

- Data Connectors

Please add a configuration option to the Redshift bulk loader to use EITHER access keys OR an IAM EC2 role for access.

We should not have to specify access keys when we are in an IAM enabled environment.

Thanks

- Category Connectors

- Data Connectors

As it stands now, only a file input tool can be used to pull data from Google BigQuery tables. The issue here is that the data is streamed and processed locally, meaning the power of BigQuery processing isn't actually being leveraged.

Adding BigQuery In-Database as a connection option would appeal to a wide audience. BigQuery's standard SQL is also compliant with the SQL 2011 standard, so this may make for an even easier integration.

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

Presently, when mapping an Excel file to an input tool, the tool only recognizes sheets; it does not recognize named tables (ranges) as possible inputs. When using Power BI to read Excel inputs, I can select either sheets or named ranges as input. The Alteryx input tool should do the same.

- Category Input Output

- Data Connectors

Please enhance the input tool with one selectable feature to test whether the file is there, and another to let the workflow pause for a definable period if the input file is locked by another user, then retry opening it. The pause would be definable as N seconds, and the number of retries should also be definable so you can limit how many attempts are made to open a file.

File presence should be something we could use to control workflow processing.

A use case would be a process that runs periodically and looks to see whether a file is there and, if so, opens and processes it. If the file is not there, it either goes to sleep for a definable period before trying again or simply ends the workflow without running any downstream tools that might otherwise raise "errors" trying to process a null stream.
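The pause-and-retry behaviour described above might look like the following in plain Python. It is a sketch only: the function name `open_with_retry` and its parameters are hypothetical, and it assumes a locked file surfaces as `PermissionError`, which is typical on Windows but not guaranteed everywhere.

```python
import time
from typing import BinaryIO, Callable, Optional


def open_with_retry(
    path: str,
    attempts: int = 3,
    pause_seconds: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[BinaryIO]:
    """Try to open `path`, pausing between failed attempts.

    Returns an open binary file object, or None if the file is still
    missing or locked after `attempts` tries, so the caller can end the
    run quietly instead of raising an error.
    """
    for attempt in range(1, attempts + 1):
        try:
            return open(path, "rb")
        except (FileNotFoundError, PermissionError):
            # Pause only if another attempt is still to come.
            if attempt < attempts:
                sleep(pause_seconds)
    return None
```

Returning `None` rather than raising leaves the decision to the caller: stop without an error, or raise one to prompt an investigation.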

An extension of this idea and the use case would be to have a separate tool that could evaluate a condition like a null stream or field content or file not found condition and terminate the process without causing an error indicator, or perhaps be configurable so you could cause an error to occur or choose not to cause an error to occur.

Using this latter idea, we would have an enhanced input tool that can pass a value downstream or generate a null data stream to the next tool. That next tool, like a filter tool, can then evaluate a condition (a null stream, a file-not-found indicator, or some other condition) and terminate processing per its configuration, either with or without a failure indicated, according to the wishes of the user.

I have had times when a file was not there and I just wanted the workflow to stop without throwing errors; other times I may want it to error out so that I investigate. In other scenarios, my data goes through a filter or two and no data passes the last filter, yet downstream tools still run and generally fail because they have no data to act on. I don't want that: it may be perfectly valid that no data passes the filters on a Sunday or holiday.

Having meandered through this, I sum up: the ideal would be to enhance the input tool so that it can test file presence and pass that information on to another tool that can evaluate it and control the workflow run accordingly. As a separate tool, that second piece could be applied to a wider variety of scenarios and test a broader scope of conditions to decide whether to proceed or terminate the workflow.

- Category Input Output

- Data Connectors

In-database processing enables large performance benefits on big datasets. It would be great to incorporate multi-row and multi-field formulas into the in-database functions for Redshift.
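For reference, a typical multi-row calculation (comparing each row with the previous one) can be pushed down to the database with a SQL window function such as `LAG`, which Redshift supports. The sketch below runs the same kind of query against SQLite through Python's `sqlite3` module purely so that it is runnable anywhere; it assumes a SQLite build with window functions (3.25 or later), and the `sales` table, its columns and the helper names are made up for the example.

```python
import sqlite3
from typing import List, Optional, Tuple


def build_sales_db() -> sqlite3.Connection:
    """Create an in-memory table with made-up sample rows (assumption)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (day INTEGER, amount INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", [(1, 10), (2, 15), (3, 12)])
    conn.commit()
    return conn


def change_from_previous_row(
    conn: sqlite3.Connection,
) -> List[Tuple[int, int, Optional[int]]]:
    """Return (day, amount, change versus the previous day) for every row."""
    query = (
        "SELECT day, amount, "
        "amount - LAG(amount) OVER (ORDER BY day) AS change "
        "FROM sales ORDER BY day"
    )
    return conn.execute(query).fetchall()
```

The first row has no predecessor, so its change is NULL, much like a multi-row formula that has no previous row to reference.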

- Category In Database

- Category Input Output

- Data Connectors

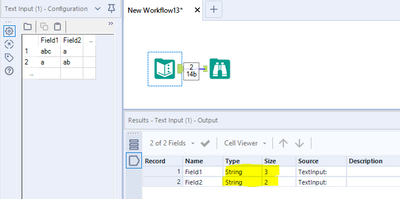

Hi Alteryx community,

It would be really nice to have V_String/V_WString with the maximum character size as the standard for text columns.

I have lost count of how many times I found that an error was caused by string truncation due to the string size limit from the text input.

Thumbs up from those who lost their minds after discovering that this was the error! 😄

- Category Input Output

- Data Connectors

- Tool Improvement
