Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi there,

When creating a database connection, Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However, DSN-linked connections do not work in a large server environment, because administrators would have to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

They are also not fully safe, because part of the configuration of your canvas is held in the DSN, so you cannot rely solely on the code that is under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully-declared connection string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less connection string: they have to create a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.
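To illustrate the manual step described above, here is a minimal Python sketch that reads the text of a file DSN and assembles a DSN-less string in the `odbc:DRIVER={...};KEY=value` shape shown earlier. It assumes the file DSN is an INI-style file with its attributes under an `[ODBC]` section. The function name `expand_file_dsn` and the sample values (including the `example.invalid` server) are hypothetical, and this is not how Designer itself builds connection strings.

```python
import configparser


def expand_file_dsn(file_dsn_text: str) -> str:
    """Build a DSN-less ODBC string from the text of a file DSN (a sketch)."""
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.optionxform = str  # keep attribute names exactly as written
    parser.read_string(file_dsn_text)

    # Assumption: the attributes live under the [ODBC] section.
    attributes = dict(parser["ODBC"])

    # Locate the driver entry regardless of how the key is cased.
    driver_key = next(key for key in attributes if key.upper() == "DRIVER")
    driver = attributes.pop(driver_key)

    parts = ["DRIVER={" + driver + "}"]
    parts += [f"{key}={value}" for key, value in attributes.items()]
    return "odbc:" + ";".join(parts)


# Hypothetical file DSN contents; all values are placeholders.
SAMPLE_FILE_DSN = """\
[ODBC]
DRIVER=SnowflakeDSIIDriver
SERVER=example.invalid
DATABASE=NewTestDB
SCHEMA=PUBLIC
"""

print(expand_file_dsn(SAMPLE_FILE_DSN))
```

Credentials such as UID and PWD are deliberately left out of the sketch; in Designer they would still be supplied and encrypted by the connection dialog.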

Thanks all

Sean

Labels: Admin Settings, Category Developer, Enhancement, User Settings

It would be great if we could set the default size of the window presented to the user upon running an Analytic App. Better yet, the option to also have it be dynamically sized (auto-size to the number of input fields required).

Labels: API SDK, Category Apps, Category Developer, Category Interface

I use a mouse which has a horizontal scroll wheel. This allows me to quickly traverse the columns of Excel documents, webpages, etc.

This interaction is not available in Alteryx Designer and when working with wide data previews it would improve my UX drastically.

Labels: API SDK, Category Developer, Category Interface, Desktop Experience

DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to update a DB table with either an update or the deletion of rows. I can't delete all of the data. My workaround will be to create a table, insert the keys that I want to delete into it, and try to use an Input/Output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool is best.
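For anyone trying the workaround outside Designer, here is a minimal sketch of the keyed delete using Python's built-in `sqlite3` module and an in-memory database. The table names follow the SQL above; the `PAYLOAD` column and the sample rows are made up for illustration, and a real target database may need different syntax.

```python
import sqlite3

# In-memory database standing in for the real target; sample rows are made up.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Source_Data (ID INTEGER PRIMARY KEY, PAYLOAD TEXT);
    CREATE TABLE My_Temp_Table (ID INTEGER, FLAG TEXT);
    INSERT INTO Source_Data VALUES (1, 'a'), (2, 'b'), (3, 'c');
    INSERT INTO My_Temp_Table VALUES (1, 'Y'), (2, 'N'), (3, 'Y');
""")

# Delete only the rows whose keys are flagged in the temp table.
with conn:  # commits on success, rolls back on error
    deleted = conn.execute(
        "DELETE FROM Source_Data WHERE ID IN "
        "(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
    ).rowcount

remaining = [row[0] for row in conn.execute("SELECT ID FROM Source_Data ORDER BY ID")]
print(deleted, remaining)
```

The unflagged row stays in place, which is the point of the request: delete by key rather than clearing the whole table.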

Thanks,

Mark

Labels: API SDK, Category Developer, Category In Database, Category Input Output

Please upgrade the "curl.exe" that is packaged with Designer from 7.15 to 7.55 or greater to allow for the -k flag. Also, please allow the -k functionality in the Alteryx Download tool.

-k, --insecure

(TLS) By default, every SSL connection curl makes is verified to be secure. This option allows curl to proceed and operate even for server connections otherwise considered insecure.

The server connection is verified by making sure the server's certificate contains the right name and verifies successfully using the cert store.
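As a point of comparison, this is roughly what the `-k` behaviour amounts to in Python's standard `ssl` module: certificate and hostname checks are switched off on the context used for the connection. The helper name `insecure_context` is hypothetical, and this says nothing about how the Download tool is implemented internally.

```python
import ssl


def insecure_context() -> ssl.SSLContext:
    """Return a TLS context that skips verification, similar in spirit to curl -k."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before changing verify_mode
    ctx.verify_mode = ssl.CERT_NONE  # accept any server certificate
    return ctx


default_ctx = ssl.create_default_context()  # verifies certificates by default
relaxed_ctx = insecure_context()

print(default_ctx.verify_mode, relaxed_ctx.verify_mode)
```

Such a context can be passed to `urllib.request.urlopen(url, context=relaxed_ctx)`. It should only be used against servers you already trust, such as internal hosts with self-signed certificates.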

Regards,

John Colgan

Labels: API SDK, Category Developer

I would really love to have a "Dynamic Change Type" or "Dynamic Re-type" tool that works just like "Dynamic Rename", with the following modes:

- "Take Type from First Row of Data": By definition, all columns are of a string type initially. Sets the type of each column according to the string in the first row of data.

| Col 1 | Col 2 | Col 3 | Col 4 |
|---|---|---|---|
| Double | Int32 | V_String | Date |
| 123.456 | 17 | Hello | 2023-10-30 |
| 3.4e17 | 123 | Bye | 2024-01-01 |

- "Take Type from Right Input Metadata": Changes the types of the left input table to those of the right input.

- "Take Type from Right Input Rows": Changes the types based on a table with columns "Name" and "New Type".

| Name | New Type |
|---|---|
| Col 1 | Double |
| Col 2 | Int32 |
| Col 3 | V_String |
| Col 4 | Date |
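The "Take Type from First Row of Data" mode could behave roughly like the following plain-Python sketch. The mapping from Alteryx type names to Python converters (`CONVERTERS`) and the function name `retype_from_first_row` are assumptions made for illustration only; a real tool would cover every Alteryx field type and handle conversion errors.

```python
from datetime import date

# Assumed mapping from Alteryx type names to Python converters (illustrative only).
CONVERTERS = {
    "Double": float,
    "Int32": int,
    "V_String": str,
    "Date": date.fromisoformat,
}


def retype_from_first_row(header, rows):
    """Use the first data row as type names and convert the remaining rows."""
    type_row, data_rows = rows[0], rows[1:]
    casts = [CONVERTERS[type_name] for type_name in type_row]
    return [
        {name: cast(value) for name, cast, value in zip(header, casts, row)}
        for row in data_rows
    ]


header = ["Col 1", "Col 2", "Col 3", "Col 4"]
rows = [
    ["Double", "Int32", "V_String", "Date"],  # the type row
    ["123.456", "17", "Hello", "2023-10-30"],
    ["3.4e17", "123", "Bye", "2024-01-01"],
]

typed = retype_from_first_row(header, rows)
print(typed[0])
```

The "Right Input Rows" mode would work the same way, except that the type names would come from a Name / New Type lookup instead of the first data row.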

Labels: API SDK, Category Developer

The Dynamic Input will not accept inputs with different record layouts. The "brute force" solution is to use a standard Input tool for each file separately and then combine them with a Union Tool. The Union Tool accepts files with different record layouts and issues warnings. Please enhance the Dynamic Input tool (or, perhaps, add a new tool) that combines the Dynamic Input functionality with a more laid-back, inclusive Union tool approach. Thank you.
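To make the requested behaviour concrete, here is a small Python sketch of a "union by name" over inputs with different layouts: columns are matched by header, and fields missing from a given file are filled with `None`. The function name `union_by_name` and the sample data are hypothetical; this only illustrates the idea, not the Union tool's actual logic.

```python
import csv
import io


def union_by_name(csv_texts):
    """Stack CSV inputs with differing layouts, aligning columns by header name."""
    fieldnames = []
    records = []
    for text in csv_texts:
        reader = csv.DictReader(io.StringIO(text))
        # Collect any column names not seen in earlier inputs.
        for name in reader.fieldnames or []:
            if name not in fieldnames:
                fieldnames.append(name)
        records.extend(reader)
    # Fill fields that a given input did not provide with None.
    rows = [{name: record.get(name) for name in fieldnames} for record in records]
    return fieldnames, rows


# Two made-up inputs with different record layouts.
file_a = "id,name\n1,Ann\n"
file_b = "id,city\n2,Lyon\n"

fields, rows = union_by_name([file_a, file_b])
print(fields)
print(rows)
```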

Labels: API SDK, Category Developer

Please add support for Windows authentication to the Download tool. I know there's a workaround, but that involves using curl and the Run Command tool. The Run Command tool is awful and should be avoided at all costs, so please improve the Download tool so I can use internal APIs.

Labels: API SDK, Category Developer

Imagine a browse tool that was inline as opposed to a terminus tool (input and output). Now allow that browse tool to persist its data after a run of the module. When an option on that tool was activated, it would block all of the dependent tools upstream from it and instead send its cached data downstream.

The reason I think this would be a useful tool is that I often come to the end of creating a module when I'm working on the Reporting tools. I run multiple times to see the changes I've made. When the module has a lot of incoming data and complex data transformations, it can take a long time just to get to the point where the data gets to the reporting tools. This cache tool would eliminate that wait.
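The caching behaviour described above can be sketched in a few lines of Python: when the cache option is on and a cached result exists, the expensive upstream step is skipped and the stored data is passed downstream instead. The names `cached` and `slow_upstream` are hypothetical and stand in for the proposed tool and the upstream part of a module.

```python
import pickle
import tempfile
from pathlib import Path


def cached(path, compute, use_cache=True):
    """Return cached data if present; otherwise run the upstream step and store it."""
    if use_cache and path.exists():
        with path.open("rb") as handle:
            return pickle.load(handle)  # upstream work is skipped
    result = compute()
    with path.open("wb") as handle:
        pickle.dump(result, handle)
    return result


calls = []


def slow_upstream():
    """Stand-in for heavy inputs and transformations (made-up data)."""
    calls.append(1)
    return [{"id": 1, "total": 10}]


with tempfile.TemporaryDirectory() as tmp:
    cache_file = Path(tmp) / "upstream.pkl"
    first = cached(cache_file, slow_upstream)   # computes and stores
    second = cached(cache_file, slow_upstream)  # served from the cache

print(len(calls), first == second)
```

Setting `use_cache=False` forces a fresh run, which corresponds to switching the option off on the proposed tool.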

Labels: API SDK, Category Developer

How about a quick method of disabling a container?

Current state - Click on the container, pan the mouse all the way over to the tiny checkbox target in the configuration pane and click disable.

Future state - A little icon by the rollup icon that can be clicked to disable/enable, differentiated perhaps by a color change of the minimized pane?

I know what you're thinking, "talk about lazy, he's whining about moving the mouse (which his hand was already on) 2 cm along his desktop and clicking"... but still what an easy usability win and one less click to do a task I find myself repeating frequently.

Labels: API SDK, Category Developer

Please enhance the dynamic select to allow for dynamic change data type too. The use case can be by formula or update in an action for a macro. If you've ever wanted to mass change or take precision action in a macro, you're forced to use a multi-field formula. It would be rather helpful and appreciated.

Cheers,

Mark

Labels: API SDK, Category Developer

The R tool has AlteryxProgress() and AlteryxMessage() functions for generating notifications in the Results window https://help.alteryx.com/current/designer/r-tool, however the Python tool does not. Since I'm writing more Python code than R code I'd like to have similar functionality available in the Python tool, e.g. an Alteryx.Progress() function and an Alteryx.Message() function.

Jonathan

Labels: API SDK, Category Developer

We need some way (unless one exists that I am unaware of - beyond disabling all but the Container I want to run) to fire off containers in particular order. Run Container "Step1" then Run Container "Step2" and so on.

Labels: API SDK, Category Developer

When I proceed with this command in a python tool:

from ayx import Package

Package.installPackages(package='pandas',install_type='install --upgrade')

in Alteryx it only updates to 0.25, but the latest version is 1.1.2.

When I try to upgrade from the Python side, I get the following:

ERROR: ayx 1.0.54 has requirement pandas<0.25.0,>=0.24.2, but you'll have pandas 1.1.2 which is incompatible.

Can you please make sure we can upgrade to the latest version of pandas without any compatibility issue?

This is important because of json_normalize. Really useful tool, available from pandas 1.0.3!
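Until a newer pandas can be installed, a stand-in for the simplest case of flattening nested JSON can be written in plain Python. The sketch below is not pandas code and covers only nested dictionaries; the function name `flatten` and the sample record are made up for illustration.

```python
def flatten(record, parent="", sep="."):
    """Flatten nested dictionaries into a single level with dotted keys."""
    flat = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            # Recurse into nested objects, carrying the key path along.
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


# Made-up nested record, e.g. one object from an API response.
record = {"id": 1, "user": {"name": "Ann", "geo": {"city": "Lyon"}}}

print(flatten(record))
```

A list of records flattened this way can then be handed to the pandas version that ships with the Python tool.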

Labels: API SDK, Category Developer, Engine, Enhancement

I would like to suggest adding a configuration option to the Block Until Done tool that allows the user to prioritize the release of data streams through multiple Block Until Done tools in the same module.

In the example below, the objective is to update multiple sheets in a single Excel workbook. Each sheet is a different data stream, and the streams cannot be unioned together, so the usual solution of filtering a single stream and feeding the filter's outputs into multiple Block Until Done tools is not possible.

What I would like to be able to do is have a configuration where Block Until Done #2 will not allow its data stream to pass through until Block Until Done #1 is complete, then Block Until Done #3 will not pass its data stream through until Block Until Done #2 is complete, and so forth through all the Block Until Done instances.

Labels: API SDK, Category Developer, Category Input Output, Data Connectors

Hello Dev Gurus -

The message tool is nice, but anything you want to learn about what is happening is problematic because the messages you are writing to try to understand your workflow are lost in a sea of other messages. This is especially problematic when you are trying to understand what is happening within a macro and you enable 'show all macro messages' in the runtime options.

That being said, what would really help is for messages created with the Message tool to be tagged as user-created messages. Then, at message evaluation time, you get all errors / all conversion warnings / all warnings / all user-defined messages. In this way, when you write an iterative macro and are reporting the state of the data on a run-by-run basis, you can just go to a panel that shows only your messages, and not the entire syslog, which is like drinking out of a fire hose.

Thank you for attending my TED talk regarding Message Tool Improvements.
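The tagging idea boils down to giving each message a category and filtering on it, as in this small Python sketch. The `Message` class, the category names, and the sample messages are all hypothetical; they only illustrate the filtering, not how the Results window stores messages.

```python
from dataclasses import dataclass


@dataclass
class Message:
    category: str  # e.g. "error", "warning", "conversion", "user" (assumed names)
    text: str


def only(messages, category):
    """Return the text of the messages that carry the given category tag."""
    return [message.text for message in messages if message.category == category]


# Made-up results log mixing engine messages with user-created ones.
log = [
    Message("warning", "Field 'Total' was truncated"),
    Message("user", "Iteration 1: 250 records remaining"),
    Message("conversion", "'abc' could not be converted to Int32"),
    Message("user", "Iteration 2: 40 records remaining"),
]

print(only(log, "user"))
```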

Labels: API SDK, Category Developer, Feature Request, Tool Improvement

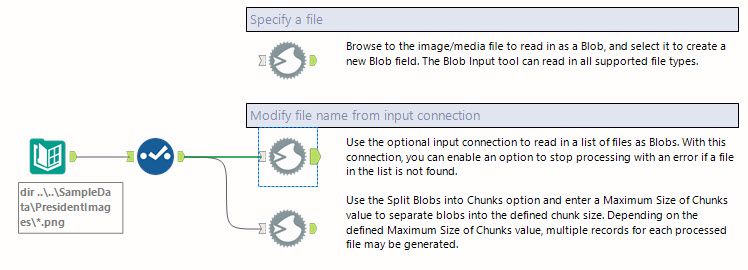

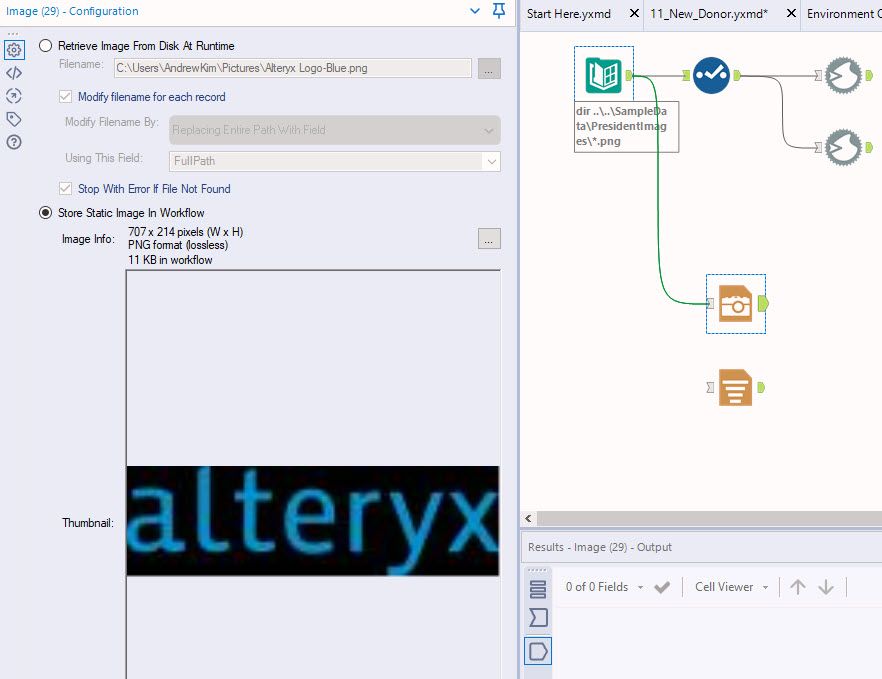

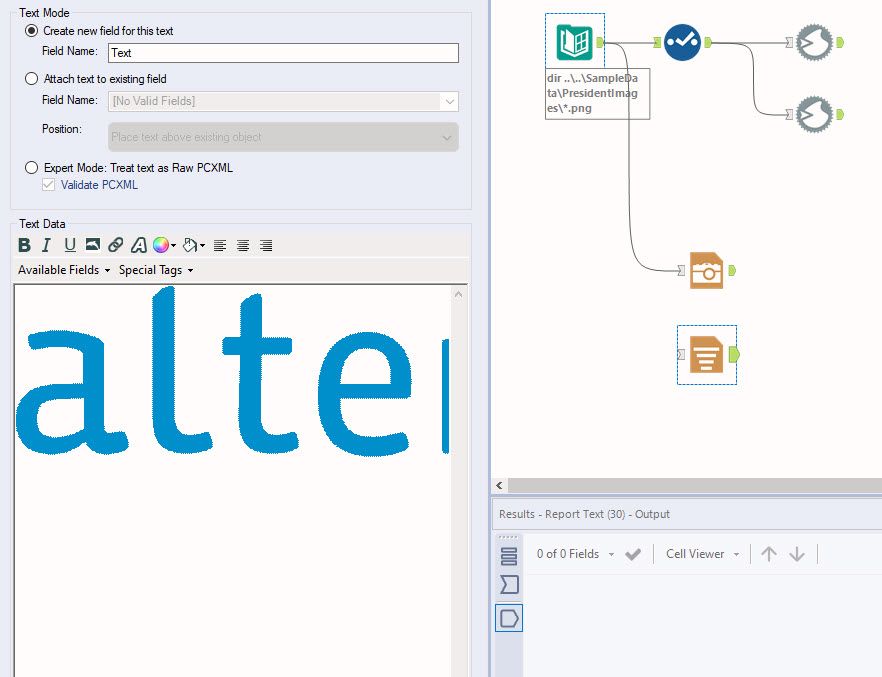

With the new Intelligence Suite there is much heavier use of blob files, and we would like to be able to input them through a regular input instead of having to use non-standard tools like Image, Report Text, or a combination of Directory/Blob or Input/Download to pull in images, etc. I would like to see the standard Input tool capable of bringing in blob files as well.

Labels: API SDK, Category Developer, Category Reporting, Category Text Mining

I love the dynamic rename tool because quite often my headers are in the first row of data in a text file (or sometimes, Excel!).

However, whenever I open a workflow, I have to run the workflow first in order to make the rest of the workflow aware of the field names that I've mapped in the dynamic rename tool, and to clear out missing fields from downstream tools. When a workflow takes a while to run, this is a cumbersome step.

Alteryx Designer should be aware of the field names downstream from the dynamic rename tool, and make them available in the workflow for use downstream as soon as they are added (or when the workflow is initially opened without having been run first).

Labels: API SDK, Category Developer

In the standard Output tool, when the file type is CSV, it is possible to select a custom delimiter. It would be great to have the same option in the Azure Data Lake output tool, so that, for example, you can write a pipe-delimited file to your ADLS storage account.
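For reference, this is what a custom delimiter looks like with Python's standard `csv` module, writing to an in-memory buffer rather than to ADLS. The helper name `to_delimited` and the sample rows are made up; uploading the resulting text to a storage account is outside the scope of the sketch.

```python
import csv
import io


def to_delimited(rows, delimiter="|"):
    """Serialise rows as delimited text using the given delimiter."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="\n")
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up rows; a value containing the delimiter is quoted by the writer.
rows = [["id", "name"], [1, "Ann"], [2, "a|b"]]

print(to_delimited(rows))
```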

Labels: API SDK, Category Connectors, Category Developer, Category Input Output
