Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

- by showing the image-dimension constraints next to each of the pre-trained models,

- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

- and, at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
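Until such a splitting tool exists, a balanced split can be prepared before the data reaches the tool. The sketch below is only an illustration, not an Alteryx feature: it assumes the images are listed as hypothetical `(image_path, label)` pairs, and it uses undersampling (randomly dropping images from larger labels), which is one possible balancing strategy among several.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (image_path, label) pairs so that every label contributes the
    same number of images, then divide each label's images into a training
    set and a validation set.

    Labels with more images are randomly downsampled to the size of the
    smallest label (undersampling)."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed -> reproducible split
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, valid = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)  # downsample to the smallest label
        train += [(p, label) for p in chosen[:n_train]]
        valid += [(p, label) for p in chosen[n_train:]]
    return train, valid


if __name__ == "__main__":
    # Hypothetical file names: 10 "cat" images and 6 "dog" images.
    data = [(f"cat_{i}.png", "cat") for i in range(10)]
    data += [(f"dog_{i}.png", "dog") for i in range(6)]
    train, valid = balanced_split(data)
    print(len(train), len(valid))  # 8 4 (4 + 4 for training, 2 + 2 for validation)
```

Undersampling keeps the split simple but discards images; oversampling the smaller labels would be the alternative when data is scarce.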

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I’m writing about a shortcoming I see in the Publish to Tableau Server Tool v2.0 (PTTS). I work in a development environment where we use different Tableau servers (i.e. development, test, production) to support product development. One of the shortcomings of PTTS is that once the Tableau server information has been entered and validated and the tool is configured, you can no longer ‘see’ which Tableau server/site the tool is publishing to. I think this piece of information is quite important. I know I can always use the “Disconnect” button in the tool and re-enter the information so I know which server it is pointing to, but this defeats the purpose of entering that information in the tool in the first place.

Please consider an enhancement to the tool so we developers know at a glance where (server/site) the tool is publishing. Project and Data source names are helpful, but in a development cycle, all Tableau servers may have the same Project and Data source names across all environments.

I've attached examples of the tool options while they are being defined and once the tool is configured; notice that the server URL and Site aren’t displayed in the tool once it has been configured.

Labels: Category Connectors, Data Connectors

We extensively use the AWESOME functionality of the SharePoint List Input and SharePoint List Output tools. They're great! BUT... they require valid credentials to pull back the valid list and view values. This isn't normally an issue until you share your workflow. If you strip your credentials out in Alteryx, the List and View fields go blank; if you strip them from the XML instead, the fields go blank when the person you shared the workflow with opens it, and you have to count on that user selecting the proper list and view.

I propose to have these tools load valid lists and views only upon pressing a button or running the macro in initial configuration state.

I found this thread: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/SharePoint-Passwords/td-p/17182

We could use a macro tool, but every implementation still requires storing a valid username and password to avoid the error. In addition, the list ID (which I imagine the SharePoint API requires, and which is why the tool behaves the way it does) is not easily obvious to most people.

Labels: Category Connectors, Data Connectors, Tool Improvement

Labels: Category Connectors, Category Input Output, Data Connectors

Currently, you get an "Unexpected error" if you try to connect to a table with numeric fields. The current workaround is to write a custom query that casts all numeric fields as strings and then use a Select tool to convert them back to Double.

To make things easier for everyone, native support for numeric fields is needed.

(It would also be great if we could connect to views without having to use a custom query....)
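For reference, the two halves of the string-cast workaround can be sketched as follows. This is a minimal illustration under stated assumptions: the table and column names are hypothetical, the connector is not named in the request, and `VARCHAR(64)` is an assumed string type, since the correct type name depends on the database dialect.

```python
def build_cast_query(table, columns, numeric_columns, string_type="VARCHAR(64)"):
    """Build the custom query from the workaround: every numeric column is
    cast to a string type so the connector only receives text.

    `string_type` is dialect-specific; VARCHAR(64) is an assumption."""
    select_list = [
        f"CAST({col} AS {string_type}) AS {col}" if col in numeric_columns else col
        for col in columns
    ]
    return f"SELECT {', '.join(select_list)} FROM {table}"


def restore_numeric(row, numeric_columns):
    """Second half of the workaround (the Select tool's job): convert the
    string values back to doubles; empty strings and None become None."""
    restored = {}
    for key, value in row.items():
        if key in numeric_columns:
            restored[key] = float(value) if value not in (None, "") else None
        else:
            restored[key] = value
    return restored


if __name__ == "__main__":
    # Hypothetical table and column names.
    print(build_cast_query("sales", ["region", "amount"], {"amount"}))
    # SELECT region, CAST(amount AS VARCHAR(64)) AS amount FROM sales
    print(restore_numeric({"region": "EU", "amount": "12.50"}, {"amount"}))
    # {'region': 'EU', 'amount': 12.5}
```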

Labels: Category Connectors, Data Connectors

Please test/certify Teradata 16.2 for designer, server, scheduler, and Gallery applications.

Labels: API SDK, Category Connectors, Category Developer, Category Input Output

After developing complicated workflows (using over 200 tools and over 30 inputs and outputs) in my DEV or QA environment, I need to switch over to Production to deploy them, but it's incredibly annoying to have to change 30 data inputs individually from QA to Prod, DEV to QA, etc. If I need to go back to QA to change something and re-test, I have to do it all again.

I need a way to change large numbers of data sources at once, or at least a much more streamlined process to make this bearable. Otherwise it is incredibly difficult to work across multiple environments.
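As a stopgap, Alteryx workflow files (`.yxmd`) are XML, so a copy of a workflow can be re-pointed with a script. The sketch below is a hypothetical illustration, not an Alteryx feature: the XML fragment is simplified, and the `QA_DW`/`PROD_DW` names stand in for whatever server or DSN strings differ between environments. It replaces those strings in every element's text and attribute values.

```python
import xml.etree.ElementTree as ET


def swap_environment(xml_text, replacements):
    """Return (new_xml, n_changes). Every occurrence of each old value
    (e.g. a QA server name) in element text or attribute values is replaced
    by its new value (e.g. the Prod server name). n_changes counts rewritten
    values; a value hit by two replacements counts twice."""
    root = ET.fromstring(xml_text)
    changes = 0
    for elem in root.iter():
        for old, new in replacements.items():
            if elem.text and old in elem.text:
                elem.text = elem.text.replace(old, new)
                changes += 1
            for name, value in list(elem.attrib.items()):
                if old in value:
                    elem.set(name, value.replace(old, new))
                    changes += 1
    return ET.tostring(root, encoding="unicode"), changes


if __name__ == "__main__":
    # Simplified, hypothetical workflow fragment with two Input tools.
    workflow = """<AlteryxDocument>
  <Node ToolID="1"><File>odbc:DSN=QA_DW;Database=sales</File></Node>
  <Node ToolID="2"><File>odbc:DSN=QA_DW;Database=finance</File></Node>
</AlteryxDocument>"""
    new_xml, n = swap_environment(workflow, {"QA_DW": "PROD_DW"})
    print(n)  # 2
    print(new_xml)
```

A blind string replacement can also hit unintended matches, so it is safer to run it on a copy of the workflow and review the result before deploying.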

Labels: Category Connectors, Category Input Output, Data Connectors

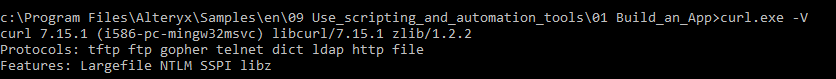

cURL currently doesn't support secure protocols; please see the screenshot above. We are currently using Alteryx 11.7.6.

Could Alteryx take this as a feature request and add the secure libraries to the existing cURL tool so that it can support the secure SFTP protocol?
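One way to confirm whether a given cURL build can handle SFTP is to inspect the `Protocols:` line that `curl -V` prints. The sketch below assumes the `curl` found on `PATH`; that may not be the binary the Alteryx cURL tool actually uses, so `exe` would need to point at that tool's executable (its location is not given here).

```python
import shutil
import subprocess


def supported_protocols(version_output):
    """Extract the protocol names from `curl -V` output, i.e. the words on
    the line that starts with 'Protocols:'."""
    for line in version_output.splitlines():
        if line.startswith("Protocols:"):
            return set(line.split(":", 1)[1].split())
    return set()


if __name__ == "__main__":
    # Assumption: the curl on PATH; it may differ from the one Alteryx bundles.
    exe = shutil.which("curl")
    if exe:
        out = subprocess.run([exe, "-V"], capture_output=True, text=True).stdout
        print("sftp supported:", "sftp" in supported_protocols(out))
```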

Labels: Category Connectors, Category In Database, Data Connectors

In the Alteryx SharePoint List tool, Alteryx fails to authenticate when connecting to a SharePoint list that is protected by ADFS. There are SharePoint sites outside of our company's firewall that use ADFS for authentication, and we would like to connect to those sites via the SharePoint List tool.

Labels: Category Connectors, Data Connectors

The current MongoDB Output tool does not let us specify field types (except for the primary _id field); the tool simply assumes that all fields are strings. Many of our CSV files contain string representations of ObjectIds (e.g. "56df422c08420b523aa00a77").

When we import those CSVs, we have to run an additional script that converts all the IDs into ObjectId fields. The same goes for converting dates to Mongo Dates.

If the tool allowed us to do this, it would save us a huge amount of time across all our ETL processes.

Regards,
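The conversion step described above can be sketched with MongoDB Extended JSON, where an ObjectId is written as `{"$oid": "<24 hex digits>"}` and a date as `{"$date": "<ISO-8601>"}`. This is a minimal sketch, not the requested tool feature: the column names (`owner_id`, `created`) and the `%Y-%m-%d` date format are assumptions, and naive dates are treated as UTC midnight.

```python
import csv
import io
import json
import re
from datetime import datetime

# The string form of an ObjectId is exactly 24 hexadecimal characters.
OBJECT_ID = re.compile(r"[0-9a-fA-F]{24}")


def to_extended_json(row, id_fields, date_fields, date_format="%Y-%m-%d"):
    """Convert one CSV row (all values are strings) into a MongoDB Extended
    JSON document: ObjectId strings become {"$oid": ...} and dates become
    {"$date": ...} (naive dates are treated as UTC midnight)."""
    doc = {}
    for key, value in row.items():
        if key in id_fields and value and OBJECT_ID.fullmatch(value):
            doc[key] = {"$oid": value.lower()}
        elif key in date_fields and value:
            parsed = datetime.strptime(value, date_format)
            doc[key] = {"$date": parsed.strftime("%Y-%m-%dT%H:%M:%SZ")}
        else:
            doc[key] = value  # anything else stays a string
    return doc


if __name__ == "__main__":
    # Hypothetical CSV with one ObjectId reference column and one date column.
    sample = "name,owner_id,created\nwidget,56df422c08420b523aa00a77,2016-03-08\n"
    for row in csv.DictReader(io.StringIO(sample)):
        # One JSON document per line, the layout mongoimport reads by default.
        print(json.dumps(to_extended_json(row, {"owner_id"}, {"created"})))
```

Values that do not look like ObjectIds are left as strings rather than rejected, so a malformed ID shows up in the output instead of stopping the whole import.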

Labels: Category Connectors, Data Connectors

Our development team prefers that we connect to their MongoDB server using a private key through a .pem file, instead of a username/password. Could this option be built into the MongoDB Input?

Labels: Category Connectors, Data Connectors

Clustering your data on a sample and then appending clusters is a common theme, especially in customer relations and marketing divisions.

When it comes to appending clusters that you have calculated from a 20K sample in order to "score" a few million clients, you still need to download the data and use the Append Cluster tool.

Why don't we have an In-DB Append Cluster tool instead, which would speed up the "distance-based" scoring that Append Cluster does, on SQL Server, Oracle, or Teradata?

Best
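The idea can be illustrated by generating the scoring SQL so that the nearest-centroid assignment runs inside the database. This is a sketch, assuming K-means-style centroids and squared Euclidean distance; the table, key, field, and centroid values are hypothetical, and SQLite stands in for SQL Server/Oracle/Teradata in the demo. The query uses only arithmetic and a `CASE` expression, which those databases all support.

```python
import sqlite3


def sq_dist_sql(fields, centroid):
    """Squared Euclidean distance from a row to one centroid, written with
    only +, - and * so it stays portable across SQL dialects."""
    terms = [
        f"({f} - {float(c)!r}) * ({f} - {float(c)!r})"
        for f, c in zip(fields, centroid)
    ]
    return "(" + " + ".join(terms) + ")"


def append_cluster_sql(table, key, fields, centroids):
    """SELECT that labels every row with the 1-based index of its nearest
    centroid, so scoring runs where the data lives."""
    if len(centroids) == 1:
        return f"SELECT {key}, 1 AS cluster_id FROM {table}"
    dists = [sq_dist_sql(fields, c) for c in centroids]
    whens = []
    for i in range(len(dists) - 1):
        # Reaching this WHEN means every earlier centroid lost, so centroid
        # i + 1 is nearest if it is no farther than all later centroids.
        cond = " AND ".join(
            f"{dists[i]} <= {dists[j]}" for j in range(i + 1, len(dists))
        )
        whens.append(f"WHEN {cond} THEN {i + 1}")
    case_expr = "CASE " + " ".join(whens) + f" ELSE {len(dists)} END"
    return f"SELECT {key}, {case_expr} AS cluster_id FROM {table}"


if __name__ == "__main__":
    # SQLite stands in for SQL Server / Oracle / Teradata here.
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE customers (id INTEGER, x REAL, y REAL)")
    con.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, 0.1, 0.2), (2, 5.0, 5.1), (3, 9.8, 0.3)],
    )
    # Hypothetical centroids, as if computed on a 20K sample.
    centroids = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
    sql = append_cluster_sql("customers", "id", ["x", "y"], centroids)
    print(con.execute(sql + " ORDER BY id").fetchall())  # [(1, 1), (2, 2), (3, 3)]
```

Only the centroids travel to the database; the millions of client rows never leave it, which is the point of an in-DB version.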

Labels: Category Connectors, Category In Database, Data Connectors

Hi All,

It would be great if Alteryx 10.5 supported connectivity to SAS server.

Regards,

Gaurav

Labels: Category Connectors, Category In Database, Data Connectors

I want to use Alteryx to pull data from a SharePoint list. This shouldn't be a problem, but I use SharePoint Content Types, and Alteryx won't allow me to import any list that has Content Types enabled, which makes the SharePoint List Input tool unusable for me.

My interim workaround is to create a data connection to the list through Excel and pull the data in that way, but ideally I would like to pull directly from the list.

Content types are a best practice in SharePoint, so any list or library in my site collection contains them.

Please update the SharePoint list input to support content types.

Thank you,

Someone else inquired about this, but I didn't see an idea entered for it.

Labels: Category Connectors, Category Input Output, Data Connectors

Hi All,

IT teams will inevitably have invested time and effort building workflows and other components with tools that have been deprecated in the latest versions.

It is good that the deprecated versions are still available for backward compatibility, but there should also be an option to promote a deprecated tool to its new replacement without impacting the existing workflow.

Following are the benefits of this approach -

1) IT teams can leverage the benefits of the new tools over the existing, deprecated ones. For example, I use the Salesforce connectors extensively, and I believe that, in contrast to the existing ones, the new connectors use the Bulk API and are therefore much faster.

2) It will save IT from reconfiguring/recoding existing workflows, which would save considerable time.

3) As the product moves forward, it might make more sense to actually remove some of the deprecated tool versions (I assume the plan is not to keep, say, five working sets of Salesforce Input connectors, including deprecated ones). With a promote option in place, I think IT would be comfortable with the removal of deprecated connectors, since they could promote without impacting existing workflows, which should keep the required changes minimal.

In addition, if some configuration options have changed in the new tools (ideally only minor ones), those changes can be published, and IT can be given the option to configure them as part of the promotion.

Thanks,

Rohit Bajaj

Labels: Category Connectors, Category Transform, Data Connectors, Desktop Experience

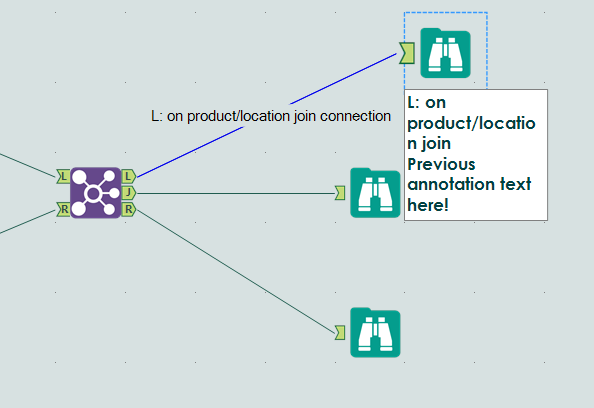

I find that when I'm using Alteryx, I'm constantly renaming the tool connectors. Here's my logic, most of the time:

I have something like a Join and 3 Browse tools.

- I name the L join something like "L: on product/location join"

- I then copy that descriptor and paste it in the Annotation field

- I then copy that descriptor, select the wired connector, and paste that in the connection configuration

MY VISION:

Have a setting where I could select the following options:

- Automatically annotate based on tool rename

- Automatically rename incoming connector based on tool rename

If I rename a tool and "Automatically annotate based on tool rename" is enabled, the new name is inserted at the top of the annotation field; any existing text in that field is shifted down. If I rename a tool and "Automatically rename incoming connector..." is on, the connection coming into it gets [name string]+' connection' put into its name field. I included a picture of the end goal of my request.

Thanks for your ear!
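The two proposed settings amount to a small, well-defined rule, sketched below as a model of the requested behavior. Nothing here is an existing Designer API: the `Tool`, `Connection`, and `rename_tool` names are hypothetical and exist only to pin down what each option would do on rename.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Connection:
    name: str = ""


@dataclass
class Tool:
    name: str = ""
    annotation: str = ""
    incoming: List[Connection] = field(default_factory=list)


def rename_tool(tool, new_name, auto_annotate=True, auto_rename_incoming=True):
    """Apply the two proposed settings when a tool is renamed
    (hypothetical behavior, not an existing Designer feature)."""
    tool.name = new_name
    if auto_annotate:
        # Insert the new name at the top; existing annotation text shifts down.
        tool.annotation = f"{new_name}\n{tool.annotation}" if tool.annotation else new_name
    if auto_rename_incoming:
        # Each incoming connection gets [name string] + ' connection'.
        for conn in tool.incoming:
            conn.name = new_name + " connection"
    return tool


if __name__ == "__main__":
    browse = Tool(annotation="checks row counts", incoming=[Connection()])
    rename_tool(browse, "L: on product/location join")
    print(browse.annotation)
    print(browse.incoming[0].name)
```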

Labels: Category Connectors, Category Interface, Data Connectors, Desktop Experience

Pulling data from Salesforce can be problematic for users who have no idea what a field's API name is. A simple solution would be to add the Field Label to the query window so that users can pick fields by either API NAME or FIELD LABEL.

Labels: Category Connectors, Data Connectors

When pulling data from Oracle and pushing to Salesforce, there are many times where we have an ID field in Oracle and a field containing this ID in Salesforce in what is called an external ID field. Allowing us to match against those external ID fields would save us a lot of time and prevent us from having to do a query on the entire object in Salesforce to pull out the ID of the records we need.
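Salesforce's REST API already supports this kind of match: an upsert keyed on an external ID field is a `PATCH` to `/services/data/vXX.X/sobjects/<SObject>/<ExternalIdField>/<value>`, and Salesforce updates the matching record or creates a new one. The sketch below only builds the request; it does not send it. The API version, object, `Oracle_Id__c` field, and key value are hypothetical, and a real call would also need an authenticated session (instance URL and OAuth token), which is not shown.

```python
import json
from urllib.parse import quote


def external_id_upsert(api_version, sobject, ext_id_field, ext_id_value, fields):
    """Describe (but do not send) a Salesforce REST upsert keyed on an
    external ID field. Salesforce looks the record up by the external ID,
    so no prior query for the record's Salesforce Id is needed."""
    path = (
        f"/services/data/v{api_version}/sobjects/{sobject}/"
        f"{ext_id_field}/{quote(str(ext_id_value), safe='')}"
    )
    return "PATCH", path, json.dumps(fields)


if __name__ == "__main__":
    # Hypothetical API version, object, external ID field and Oracle key.
    method, path, body = external_id_upsert(
        "58.0", "Account", "Oracle_Id__c", "ORA-1001", {"Name": "Acme Corp"}
    )
    print(method, path)  # PATCH /services/data/v58.0/sobjects/Account/Oracle_Id__c/ORA-1001
    print(body)  # {"Name": "Acme Corp"}
```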

Labels: Category Connectors, Data Connectors

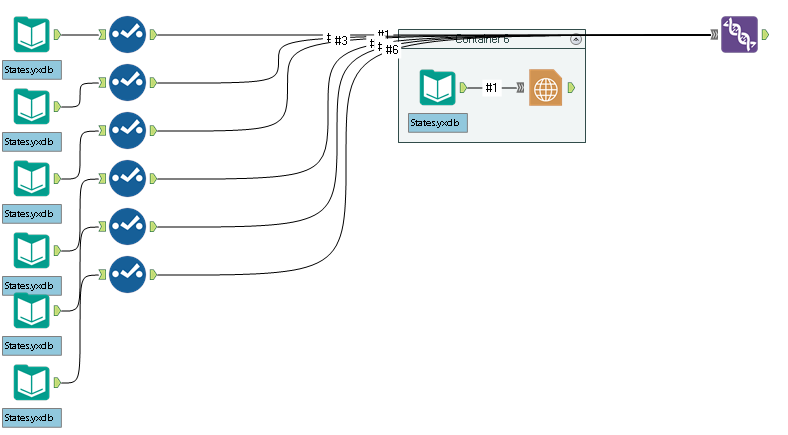

Sometimes in a crowded workflow, connector lines bunch up and align across the title bar of a tool container. This blocks my view of the title, but also makes it hard to 'grab' the tool container and move it.

Could Alteryx divert lines around tool containers that they don't connect into, or make tool containers 'grab-able' at locations other than the title bar?

Labels: Category Connectors, Data Connectors

Hi,

When you create a data model in Excel, you can create measures (aka KPIs). You can then use these when you pivot the data, and the measures are updated dynamically as you segment the data in your pivot table.

For example, say you have a field with the customer name and a field with the revenue: you could create a measure that calculates the average revenue per customer (sum of revenue / distinct count of customers).

Now, if you have a third field in your data that indicates your region, the measure would let you see the average revenue per customer by region (while the measure formula stays the same and never refers to the region field).
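The key property of such a measure is that it is a formula re-evaluated for each segment, not a precomputed column. A small sketch of that behavior, with hypothetical sample data and plain Python standing in for the Excel/Power BI engine:

```python
from collections import defaultdict


def avg_revenue_per_customer(rows):
    """The measure: SUM(revenue) / DISTINCTCOUNT(customer)."""
    customers = {r["customer"] for r in rows}
    if not customers:
        return None
    return sum(r["revenue"] for r in rows) / len(customers)


def by_segment(rows, dimension):
    """Re-evaluate the same measure for each value of a dimension, the way
    a pivot table does when that field is placed on rows."""
    groups = defaultdict(list)
    for r in rows:
        groups[r[dimension]].append(r)
    return {k: avg_revenue_per_customer(v) for k, v in sorted(groups.items())}


if __name__ == "__main__":
    # Hypothetical data: customer A has two orders in North.
    rows = [
        {"customer": "A", "region": "North", "revenue": 100.0},
        {"customer": "A", "region": "North", "revenue": 50.0},
        {"customer": "B", "region": "North", "revenue": 30.0},
        {"customer": "C", "region": "South", "revenue": 80.0},
    ]
    print(by_segment(rows, "region"))  # {'North': 90.0, 'South': 80.0}
    print(avg_revenue_per_customer(rows))  # 260.0 / 3 distinct customers
```

The overall value (about 86.67) is not the average of the per-region values (85.0), which is why the measure has to travel as a formula rather than as a column of precomputed numbers.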

Excel integrates well with PowerBI and currently these measures flow into PowerBI.

While we have a "Publish to PowerBI" in Alteryx I haven't seen any way to create such measures and export them to PowerBI.

Hence I still need to load the data into Excel to create these measures before I can publish to PowerBI; it'd be great to avoid that intermediate step.

Thanks

Tibo

Labels: Category Connectors, Data Connectors

We don't have Server. Sometimes it's easy to share a workflow the old fashioned way - just email a copy of it or drop it in a shared folder somewhere. When doing that, if the target user doesn't have a given alias on their machine, they'll have issues getting the workflow to run.

So, it would be helpful if saving a workflow could save the aliases along with the actual connection information. Likewise, it would then be nice if someone opening the workflow could add the aliases found therein to their own list of aliases.

Granted, there may be difficulties - this is great for connections using integrated authentication, but not so much for userid/password connections. Perhaps (if implemented) it could be limited along these lines.

Labels: Category Connectors, Category In Database, Data Connectors
